When running Spark on YARN in yarn-client mode, the job fails with an "Error initializing SparkContext" error.

17/09/27 16:17:54 INFO mapreduce.Job: Task Id : attempt_1428293579539_0001_m_000003_0, Status : FAILED

Container [pid=7847,containerID=container_1428293579539_0001_01_000005] is running beyond virtual memory limits. Current usage: 123.5 MB of 1 GB physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container.

With JDK 1.7 the errors below did not occur, but with Java 1.8 they do:

ERROR spark.SparkContext: Error initializing SparkContext.

ERROR yarn.ApplicationMaster: RECEIVED SIGNAL 15: SIGTERM

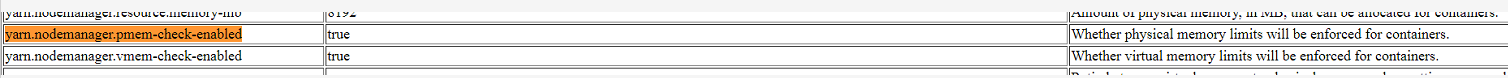

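The cause is probably related to the default container settings in the YARN configuration, which enforce memory limits on every container. Note that the log above reports the virtual memory limit being exceeded (2.6 GB of 2.1 GB), while physical memory usage is well under its limit (123.5 MB of 1 GB).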
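To see which limit was actually hit, the container message quoted earlier can be parsed. The following is a minimal sketch, not part of the original troubleshooting: the helper name `parse_usage` is hypothetical, and the regular expression assumes the message keeps exactly the wording shown in the log above.

```python
import re

# The container message quoted earlier in this post.
LOG_LINE = (
    "Container [pid=7847,containerID=container_1428293579539_0001_01_000005] "
    "is running beyond virtual memory limits. Current usage: 123.5 MB of 1 GB "
    "physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container."
)

# Conversion factors to megabytes.
UNIT_TO_MB = {"KB": 1 / 1024, "MB": 1.0, "GB": 1024.0, "TB": 1024.0 * 1024.0}

USAGE_PATTERN = re.compile(
    r"([\d.]+) (KB|MB|GB|TB) of ([\d.]+) (KB|MB|GB|TB) (physical|virtual) memory used"
)


def parse_usage(line):
    """Return {kind: (used_mb, limit_mb)} for 'physical' and 'virtual' memory."""
    usage = {}
    for used, used_unit, limit, limit_unit, kind in USAGE_PATTERN.findall(line):
        usage[kind] = (
            float(used) * UNIT_TO_MB[used_unit],
            float(limit) * UNIT_TO_MB[limit_unit],
        )
    return usage


usage = parse_usage(LOG_LINE)
for kind, (used, limit) in usage.items():
    status = "OVER LIMIT" if used > limit else "ok"
    print(f"{kind}: {used:.1f} MB of {limit:.1f} MB ({status})")

# Ratio between the virtual and the physical limit of this container.
ratio = usage["virtual"][1] / usage["physical"][1]
print(f"virtual/physical limit ratio: {ratio:.1f}")
```

The ratio of 2.1 between the two limits matches what I understand to be the default of `yarn.nodemanager.vmem-pmem-ratio`, which would explain why a container with a 1 GB physical limit is killed once its virtual memory passes 2.1 GB.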

You can try adding the following properties to the yarn-site.xml configuration file in Hadoop's conf directory:

<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>
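After editing the file, it can help to confirm that both properties are really picked up from inside the `<configuration>` root element. The sketch below is an assumption-laden illustration: `read_properties` is a hypothetical helper, and `SAMPLE_YARN_SITE` is a stand-in string rather than a real cluster file.

```python
import xml.etree.ElementTree as ET

# Stand-in content for illustration; in practice, read the real yarn-site.xml
# from Hadoop's conf directory instead.
SAMPLE_YARN_SITE = """<configuration>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
</configuration>
"""


def read_properties(xml_text):
    """Return a {name: value} dict of all <property> entries in the XML text."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


props = read_properties(SAMPLE_YARN_SITE)
for key in ("yarn.nodemanager.pmem-check-enabled",
            "yarn.nodemanager.vmem-check-enabled"):
    # A missing key means the property is not set in this file.
    print(key, "=", props.get(key, "<not set>"))
```

As far as I know, the NodeManagers have to be restarted before a change to these properties takes effect.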

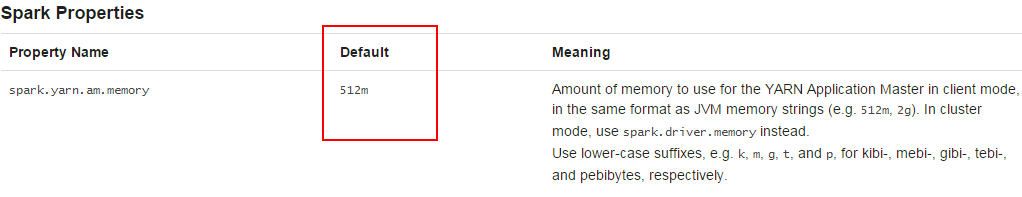

In addition, the Spark Properties section of the official Spark documentation describes these settings and lists their default values.

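My final fix was to copy spark-defaults.conf.template in SPARK_HOME/conf, rename the copy to spark-defaults.conf, open it with vim, and raise the value from its default (512m according to the Spark documentation) to 1G by adding this line: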

spark.yarn.am.memory 1g
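The same edit can be scripted instead of done by hand in vim. This is only a sketch under stated assumptions: `set_spark_default` is a hypothetical helper, `TEMPLATE` is a made-up stand-in and not the real contents of spark-defaults.conf.template, and only whitespace-separated `key value` lines are handled.

```python
def set_spark_default(conf_text, key, value):
    """Return conf_text with `key` set to `value`.

    An existing uncommented entry for `key` is replaced; otherwise a new
    line is appended. Only the whitespace-separated `key value` form is
    recognised here (an assumption of this sketch).
    """
    lines = conf_text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # keep blank lines and comments untouched
        if stripped.split(None, 1)[0] == key:
            lines[i] = f"{key} {value}"
            replaced = True
    if not replaced:
        lines.append(f"{key} {value}")
    return "\n".join(lines) + "\n"


# Made-up stand-in for the template contents, for illustration only.
TEMPLATE = """# Example defaults (commented out)
# spark.driver.memory 5g
"""

updated = set_spark_default(TEMPLATE, "spark.yarn.am.memory", "1g")
print(updated)
```

To apply it for real, one would read spark-defaults.conf.template from SPARK_HOME/conf, pass its text through the function, and write the result to spark-defaults.conf in the same directory.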

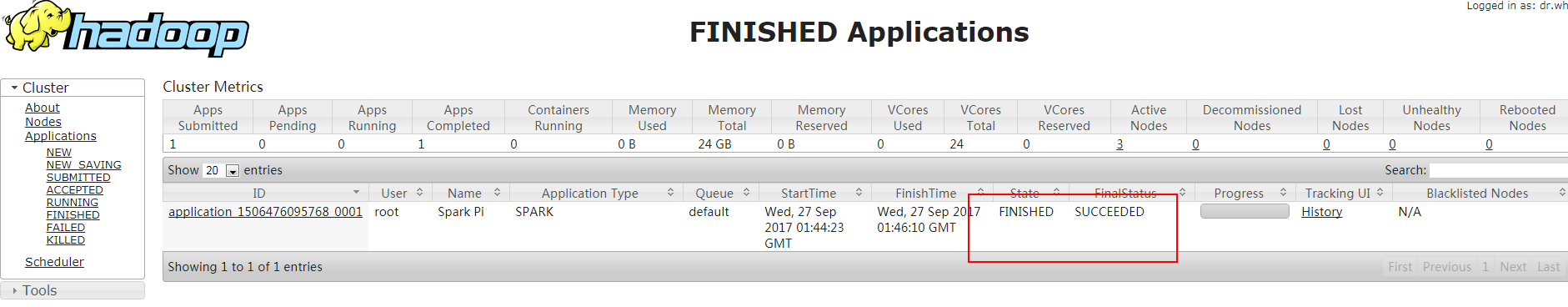

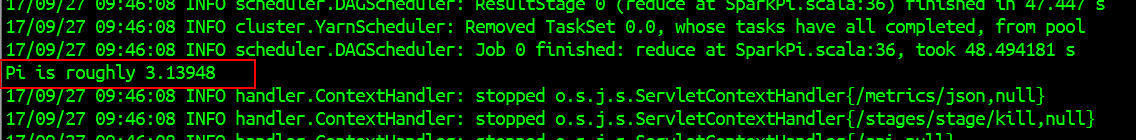

After that, running the job command again worked without problems:

[root@srv01 conf]# ./spark-submit --class org.apache.spark.examples.SparkPi --deploy-mode client --master yarn --driver-memory 2g --queue default /usr/spark/lib/spark-examples-1.6.1-hadoop2.6.0.jar