1. Namespaces (scopes)

1.1 name_scope:

- Creates namespaces that group ops, so TensorBoard can display the ops grouped by scope. This makes the graph visualization cleaner, which in turn helps with debugging.

1.2 variable_scope:

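- Use tf.get_variable() [not tf.Variable(), which always creates a new variable and therefore cannot be shared].

- Creates namespaces that group ops and, in addition, enables variable sharing.

- Under the hood, variable_scope implicitly opens a name_scope().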
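The difference between the two scopes can be sketched as follows. This is a minimal illustration rather than code from these notes: the helper `linear`, the scope names `layer` and `metrics`, and the variable names `w` and `counter` are all made up for the example, and it assumes a TensorFlow build that ships `tensorflow.compat.v1` (1.14+ or 2.x) so that the graph-mode API is available.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: run in TF1-style graph mode


def linear(x, in_dim, out_dim):
    # Hypothetical helper: tf.get_variable() looks the variable up by its
    # scoped name, so it can be shared between calls.
    w = tf.get_variable("w", shape=[in_dim, out_dim],
                        initializer=tf.zeros_initializer())
    return tf.matmul(x, w), w


x1 = tf.placeholder(tf.float32, [None, 4], name="x1")
x2 = tf.placeholder(tf.float32, [None, 4], name="x2")

# First use of the scope creates the variable "layer/w".
with tf.variable_scope("layer"):
    y1, w1 = linear(x1, 4, 3)

# Re-entering the scope with reuse=True returns the existing variable.
with tf.variable_scope("layer", reuse=True):
    y2, w2 = linear(x2, 4, 3)

shared = w1 is w2

# name_scope prefixes ordinary ops but is ignored by tf.get_variable().
with tf.name_scope("metrics"):
    diff = tf.reduce_sum(y1 - y2, name="diff")
    counter = tf.get_variable("counter", shape=[],
                              initializer=tf.zeros_initializer())

trainable_names = [v.name for v in tf.trainable_variables()]
print(shared)
print(diff.name)
print(trainable_names)
```

Only two variables end up in the graph: both calls to `linear` use the same `layer/w`, and `counter` is created without the `metrics/` prefix even though the `diff` op carries it.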

2. Some tips

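- tf.train.Saver.restore() is usually shown restoring only the latest checkpoint (the path returned by tf.train.latest_checkpoint()), but its save_path argument accepts the path of any saved checkpoint, so an earlier checkpoint can be restored as well.

- With TensorBoard and tf.summary available, there is no need to use matplotlib for visualization.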
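On the checkpoint tip: because `restore()` takes a checkpoint path, restoring an older checkpoint comes down to passing that path instead of the result of `tf.train.latest_checkpoint()`. A minimal sketch, assuming `tensorflow.compat.v1` is available; the variable `step_count`, the prefix `model`, and the temporary directory are made up for the example.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: run in TF1-style graph mode

# A single scalar variable that we increment once per "training step".
step_count = tf.get_variable("step_count", shape=[],
                             initializer=tf.zeros_initializer())
increment = tf.assign_add(step_count, 1.0)

saver = tf.train.Saver()  # by default keeps the 5 most recent checkpoints
ckpt_dir = tempfile.mkdtemp()

paths = []
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for step in range(3):
        sess.run(increment)
        # save() returns the checkpoint path, e.g. ".../model-0"
        paths.append(saver.save(sess, os.path.join(ckpt_dir, "model"),
                                global_step=step))

with tf.Session() as sess:
    # Restore an explicitly chosen (older) checkpoint.
    saver.restore(sess, paths[0])
    restored_first = sess.run(step_count)

    # Restore the most recent checkpoint in the directory.
    saver.restore(sess, tf.train.latest_checkpoint(ckpt_dir))
    restored_latest = sess.run(step_count)

print(restored_first, restored_latest)
```

Note that older checkpoints are only available while the Saver still keeps them; its `max_to_keep` argument controls how many are retained.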

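- Op-level random seed: for an op with a fixed seed, the first sess.run() in every new session returns the same value; the second and third sess.run() calls in that session return different values.

      import tensorflow as tf

      # One op, one session: each run advances the op's random sequence.
      c = tf.random_uniform([], -10, 10, seed=2)
      with tf.Session() as sess:
          print(sess.run(c))  # >> 3.57493   first sess.run()
          print(sess.run(c))  # >> -5.97319  second sess.run()

      # One op, two sessions: every new session restarts the sequence.
      c = tf.random_uniform([], -10, 10, seed=2)
      with tf.Session() as sess:
          print(sess.run(c))  # >> 3.57493  first sess.run() in this session
      with tf.Session() as sess:
          print(sess.run(c))  # >> 3.57493  first sess.run() in this session

      # Two ops with the same seed, one session.
      c = tf.random_uniform([], -10, 10, seed=2)
      d = tf.random_uniform([], -10, 10, seed=2)
      with tf.Session() as sess:
          print(sess.run(c))  # >> 3.57493  first sess.run() in this session
          # Second sess.run() in this session, but on a different op;
          # d has the same seed as c, so the two results match.
          print(sess.run(d))  # >> 3.57493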

- Graph-level random seed: if you don’t care about the randomization of each op inside the graph, but just want to be able to replicate results on another graph (so that other people can replicate your results on their own graph), you can use tf.set_random_seed instead. Setting the current TensorFlow random seed affects the current default graph only.

      # a.py
      import tensorflow as tf

      # tf.set_random_seed(2)  # graph-level seed removed
      c = tf.random_uniform([], -10, 10)
      d = tf.random_uniform([], -10, 10)
      with tf.Session() as sess:
          print(sess.run(c))  # -4.00752
          print(sess.run(d))  # -2.98339

      # b.py
      import tensorflow as tf

      tf.set_random_seed(2)
      c = tf.random_uniform([], -10, 10)
      d = tf.random_uniform([], -10, 10)
      with tf.Session() as sess:
          print(sess.run(c))  # -4.00752
          print(sess.run(d))  # -4.00752

-

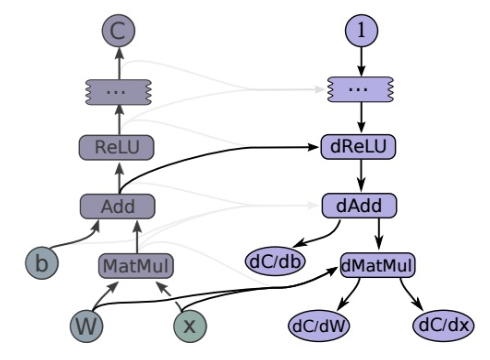

How TensorFlow autodiff works:

-