Environment: Ubuntu 16.04

Tools: Python 3.5+, Scrapy 1.1, PyCharm

    import os
    import re
    import urllib.request

    import scrapy
    from scrapy.http import Request
    from bs4 import BeautifulSoup  # the "lxml" parser must be installed


    class TaobaoMMSpider(scrapy.Spider):
        name = 'TaobaoMM'
        start_urls = ['https://mm.taobao.com/json/request_top_list.htm?page=1']
        # Set this to the directory where the images should be saved
        mainposition = '/media/liuyu/0009F608000B7B40/TaobaoMM/'

        # Process the first listing page and read the total page count
        def parse(self, response):
            content = BeautifulSoup(response.text, "lxml")
            totalpage = content.find('input', id="J_Totalpage").get('value')
            url = 'https://mm.taobao.com/json/request_top_list.htm?page='
            # Crawl every listing page; use range(1) to test on page 1 only
            for i in range(int(totalpage)):
                yield Request(url + str(i + 1), callback=self.everypage)

        # Process each listing page and collect every model's profile page
        def everypage(self, response):
            content = BeautifulSoup(response.text, "lxml")
            modelinfo = content.find_all('div', class_="personal-info")
            for i in modelinfo:
                name = i.find('a', class_="lady-name").string
                seconddir = self.mainposition + name
                # exist_ok avoids FileExistsError when the spider is re-run
                os.makedirs(seconddir, exist_ok=True)
                age = i.find('strong').string
                modelurl = 'https:' + i.find('a', class_="lady-name").get('href')
                yield Request(modelurl, callback=self.infocard,
                              meta={'age': age, 'seconddir': seconddir})

        # Process the model card page: get the model id and build the info page URL
        def infocard(self, response):
            content = BeautifulSoup(response.text, "lxml")
            modelid = content.find('input', id="J_MmuserId").get('value')
            infourl = 'https://mm.taobao.com/self/info/model_info_show.htm?user_id=' + modelid
            albumurl = 'https:' + content.find('ul', class_="mm-p-menu").find('a').get('href')
            yield Request(infourl, callback=self.infoprocess,
                          meta={'seconddir': response.meta['seconddir'],
                                'albumurl': albumurl,
                                'age': response.meta['age']})

        # Process the model's info page, save the profile to a text file,
        # then go on to the album page
        def infoprocess(self, response):
            seconddir = response.meta['seconddir']
            albumurl = response.meta['albumurl']
            age = response.meta['age']
            content = BeautifulSoup(response.text, "lxml")
            modelinfo = content.find('ul', class_="mm-p-info-cell clearfix")
            info = modelinfo.find_all('li')
            name = info[0].find('span').string
            with open(seconddir + '/' + name + '.txt', 'w') as file:
                file.write('age: ' + age + '\n')
                for i in range(6):
                    # strip non-breaking spaces (U+00A0) from the field values
                    file.write(info[i].find('span').string.replace('\xa0', '') + '\n')
                for i in range(2):
                    file.write(info[i + 7].find('p').string + '\n')
                file.write('BWH: ' + info[9].find('p').string + '\n')
                file.write('cup_size: ' + info[10].find('p').string + '\n')
                file.write('shoe_size: ' + info[11].find('p').string + '\n')
            # the with block closes the file, so no explicit close() is needed
            yield Request(albumurl, callback=self.album, meta={'seconddir': seconddir})

        # Process the album frame page: get the model id and build the
        # album-list JSON URL
        def album(self, response):
            content = BeautifulSoup(response.text, "lxml")
            modelid = content.find('input', id="J_userID").get('value')
            url = ('https://mm.taobao.com/self/album/open_album_list.htm'
                   '?_charset=utf-8&user_id=' + modelid)
            yield Request(url, callback=self.allimage,
                          meta={'url': url, 'seconddir': response.meta['seconddir']})

        # Process the album list page and read its total page count
        def allimage(self, response):
            url = response.meta['url']
            content = BeautifulSoup(response.text, "lxml")
            page = content.find('input').get('value')
            for i in range(int(page)):
                yield Request(url + '&page=' + str(i + 1), callback=self.image,
                              meta={'seconddir': response.meta['seconddir']})

        # Process each page of the album list: get each album's name and visit it
        def image(self, response):
            seconddir = response.meta['seconddir']
            content = BeautifulSoup(response.text, "lxml")
            albuminfo = content.find_all('div', class_="mm-photo-cell-middle")
            pattern = re.compile('.*?user_id=(.*?)&album_id=(.*?)&album_flag')
            for i in albuminfo:
                # remove all whitespace (spaces, newlines) from the album name
                albumname = ''.join(i.find('h4').a.string.split())
                thirddir = seconddir + '/' + albumname
                os.makedirs(thirddir, exist_ok=True)
                url = i.find('h4').a.get('href')
                for modelid, albumid in re.findall(pattern, url):
                    imageurl = ('https://mm.taobao.com/album/json/get_album_photo_list.htm'
                                '?user_id=' + modelid + '&album_id=' + albumid + '&page=')
                    yield Request(imageurl, callback=self.imageprocess,
                                  meta={'url': imageurl, 'thirddir': thirddir})

        # Process the album JSON page and read the album's total page count
        def imageprocess(self, response):
            url = response.meta['url']
            content = response.text
            pattern = re.compile('.*?"totalPage":"(.*?)"')
            pagenum = re.findall(pattern, content)[0]
            for i in range(int(pagenum)):
                yield Request(url + str(i + 1), callback=self.saveimage,
                              meta={'thirddir': response.meta['thirddir']})

        # Process each album page: get every photo URL and save the image
        def saveimage(self, response):
            thirddir = response.meta['thirddir']
            content = response.text
            pattern = re.compile('.*?"picUrl":"(.*?)"')
            pattern_2 = re.compile('.*?imgextra/.*?/(.*?)/')
            for imageurl in re.findall(pattern, content):
                imagename = re.findall(pattern_2, imageurl)[0]
                url = 'https:' + imageurl
                print(url)
                # note: urlopen is synchronous and blocks Scrapy while downloading
                data = urllib.request.urlopen(url).read()
                with open(thirddir + '/' + imagename + '.jpg', 'wb') as file:
                    file.write(data)
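The regex parsing in `imageprocess` and `saveimage` can be pulled out into plain functions and checked offline, without sending any requests. The following is a minimal sketch that reuses the spider's own patterns, slightly tightened (for example, `\d+` for the page count). The helper names `total_pages`, `page_urls` and `image_targets` and the sample JSON string are hypothetical. The response layout they assume (`"totalPage":"N"`, `"picUrl":"//…/imgextra/<dir>/<name>/…"`) is inferred from the spider's regexes, not from any documented Taobao API.

```python
import re

# Patterns adapted from the spider; the response layout they expect is an
# assumption inferred from the original regexes, not a documented API.
TOTAL_PAGE_RE = re.compile(r'"totalPage":"(\d+)"')
PIC_URL_RE = re.compile(r'"picUrl":"(.*?)"')
IMAGE_NAME_RE = re.compile(r'imgextra/[^/]*/([^/]+)/')


def total_pages(text):
    """Return the album's page count, or 0 if the field is missing."""
    match = TOTAL_PAGE_RE.search(text)
    return int(match.group(1)) if match else 0


def page_urls(base_url, text):
    """Build one URL per album page (pages are numbered from 1)."""
    return [base_url + str(i) for i in range(1, total_pages(text) + 1)]


def image_targets(text):
    """Yield (absolute_url, file_name) for every picUrl found in the text."""
    for pic_url in PIC_URL_RE.findall(text):
        names = IMAGE_NAME_RE.findall(pic_url)
        if not names:
            # skip URLs that do not follow the imgextra/<dir>/<name>/ layout
            continue
        yield 'https:' + pic_url, names[0] + '.jpg'


if __name__ == "__main__":
    # Hypothetical response body, shaped only to match the patterns above
    sample = ('{"totalPage":"2","picList":['
              '{"picUrl":"//img.example.com/imgextra/i1/abc123/p.jpg"}]}')
    print(page_urls('https://example.com/list?page=', sample))
    for url, filename in image_targets(sample):
        print(url, filename)
```

Because these functions take plain strings, the spider callbacks could call them on `response.text` and keep only the request and file I/O logic. Unlike the original `re.findall(...)[0]`, they also skip unmatched input instead of raising `IndexError`.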

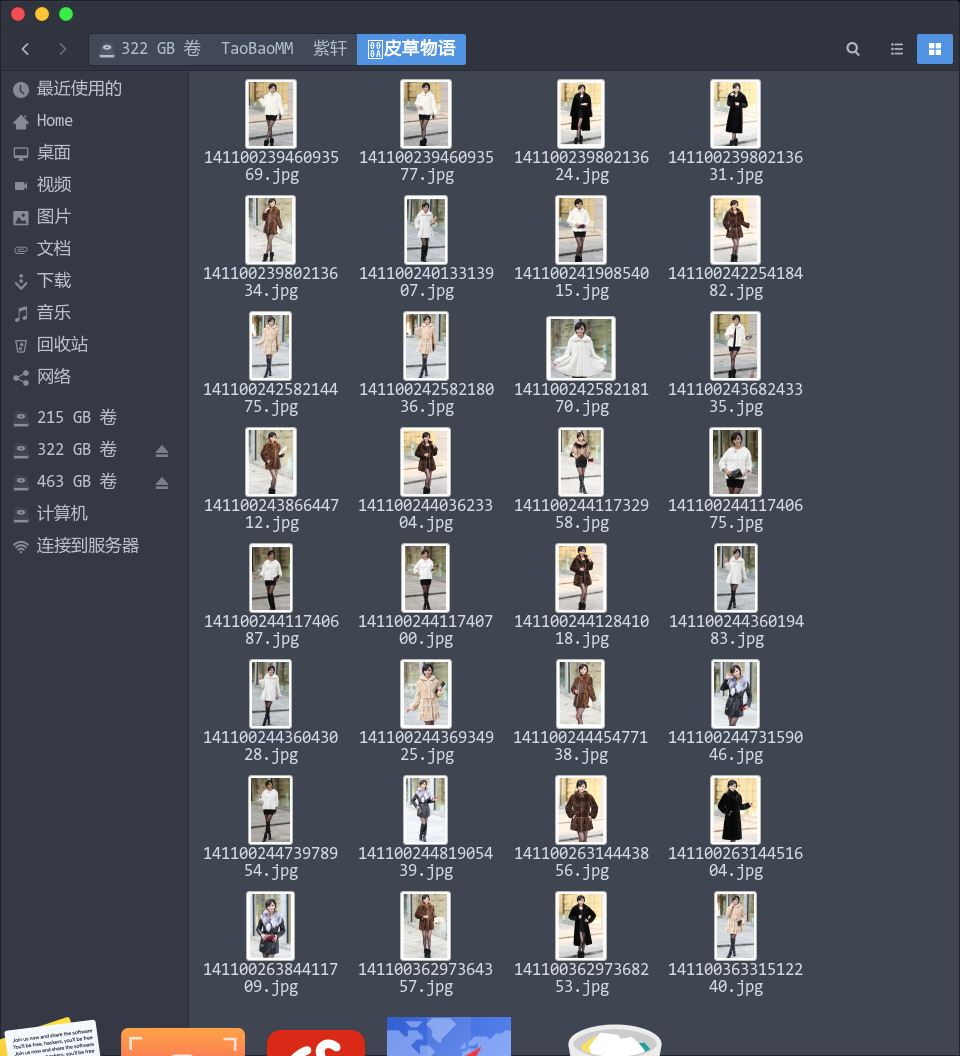

Run results: