Preparation:

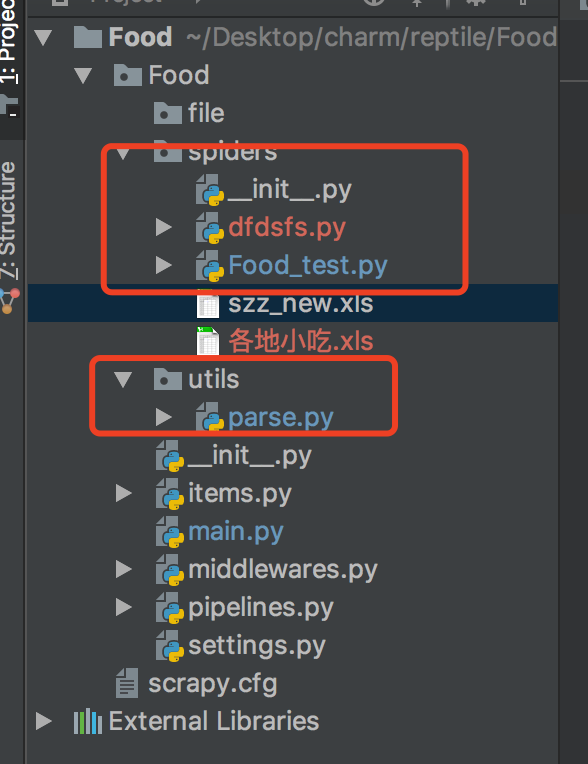

I won't go into the details of creating the Scrapy project. Get MongoDB ready; the code is structured as follows:

```python
# -*- coding: utf-8 -*-
import scrapy
from pymongo import MongoClient
from pyquery import PyQuery
from scrapy.http import Request

from ..utils.parse import parse as parse_tabs, Home_cooking, othe_cooking, Dishes, Dishes_Details


class FoodTestSpider(scrapy.Spider):
    name = 'Food_test'
    allowed_domains = ['meishij.net']
    start_urls = ['https://www.meishij.net/chufang/diy/']

    client = MongoClient()  # local MongoDB on the default port
    db = client.test        # "test" database, created automatically if it does not exist
    my_set = db.meishi      # "meishi" collection, created automatically if it does not exist

    def parse(self, response):
        # Top-level category tabs: {tab name: url}
        for tab_name, tab_url in parse_tabs(response).items():
            yield Request(tab_url,
                          callback=self.jiachagncai,
                          meta={"first": tab_name},
                          errback=self.error_back)

    def jiachagncai(self, response):
        # The home-cooking page has a different layout from the other tabs
        if response.url == 'https://www.meishij.net/chufang/diy/':
            home = Home_cooking(response)
        else:
            home = othe_cooking(response)
        first = response.meta["first"]
        for sub_name, sub_url in home.items():
            yield Request(response.urljoin(sub_url),
                          callback=self.shangping_list,
                          meta={"temp": {"first": first, "se": sub_name}},
                          errback=self.error_back)

    def shangping_list(self, response):
        # Dish list page: request every dish detail page ...
        for url in Dishes(response):
            yield Request(response.urljoin(url),
                          callback=self.xiangqingye,
                          meta=response.meta['temp'],
                          errback=self.error_back)
        # ... then follow the "next page" link, if there is one
        next_url = PyQuery(response.text)('#listtyle1_w > div.listtyle1_page > div > a.next').attr('href')
        if next_url:
            yield Request(response.urljoin(next_url),
                          callback=self.shangping_list,
                          meta={"temp": response.meta['temp']},
                          errback=self.error_back)

    def xiangqingye(self, response):
        # Dish detail page: store it as {category: {sub-category: details}}
        first = response.meta['first']
        se = response.meta['se']
        self.logger.info('%s / %s', first, se)
        temp = Dishes_Details(response)
        # Collection.insert() is deprecated and was removed in PyMongo 4; use insert_one()
        self.my_set.insert_one({first: {se: temp}})

    def error_back(self, failure):
        # An errback receives a twisted Failure; format_exc() would not see it
        self.logger.error(repr(failure))
```
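The spider's callbacks form a chain: `parse` (top-level tabs), then `jiachagncai` (sub-tabs), then `shangping_list` (dish lists, re-queued for each "next page"), then `xiangqingye` (detail pages), with the category and sub-category carried along in `meta`. As a rough sketch of that flow without Scrapy or network access, the hypothetical `crawl` function below walks an in-memory dictionary standing in for the website; the page layout and the `kind` labels are assumptions made for illustration only.

```python
from collections import deque


def crawl(site, start_url):
    """Breadth-first walk over a fake in-memory site, mimicking the spider's callbacks.

    `site` maps url -> page dict (a hypothetical stand-in for real responses).
    Each queue entry is (url, kind, meta), playing the role of a scrapy Request.
    Returns documents shaped like the spider's: {category: {sub_category: dish}}.
    """
    queue = deque([(start_url, "tabs", {})])
    stored = []
    while queue:
        url, kind, meta = queue.popleft()
        page = site[url]
        if kind == "tabs":        # like parse(): one request per top-level tab
            for name, link in page["tabs"].items():
                queue.append((link, "subtabs", {"first": name}))
        elif kind == "subtabs":   # like jiachagncai(): one request per sub-tab
            for name, link in page["subtabs"].items():
                queue.append((link, "list", {"first": meta["first"], "se": name}))
        elif kind == "list":      # like shangping_list(): dishes, then the next page
            for link in page["dishes"]:
                queue.append((link, "detail", dict(meta)))
            if page.get("next"):  # stops once a page has no "next" link
                queue.append((page["next"], "list", meta))
        else:                     # like xiangqingye(): store the detail page
            stored.append({meta["first"]: {meta["se"]: page["name"]}})
    return stored
```

Because list pages re-queue themselves only while a "next" link exists, pagination ends on its own, which is the same termination condition `shangping_list` relies on.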

```python
# -*- coding: utf-8 -*-
# utils/parse.py: page-parsing helpers used by the spider
__author__ = 'chenjianguo'

from pyquery import PyQuery


def parse(response):
    """
    Scrape the top-level food tabs: https://www.meishij.net/chufang/diy/
    Returns {tab name: absolute url} for the large tabs.
    """
    jpy = PyQuery(response.text)
    tr_list = jpy('#listnav_ul > li').items()

    result = dict()  # tab name -> url (a dict, so a repeated name keeps only the last url)
    for tr in tr_list:
        url = tr('a').attr('href')  # url of the food tab
        text = tr('a').text()
        if url and 'https://www.meishij.net' not in url:
            url = 'https://www.meishij.net' + url
        # skip the ingredient (shicai) and cooking-technique (pengren) tabs
        if url and 'shicai' not in url and 'pengren' not in url:
            result[text] = url
    return result


def Home_cooking(response):
    """
    Sub-tabs of the home-cooking tab: https://www.meishij.net/chufang/diy/
    This page's markup differs from the other large tabs, so it needs its own selector.
    Returns {sub-tab name: url}.
    """
    jpy = PyQuery(response.text)
    tr_list = jpy('#listnav_con_c > dl.listnav_dl_style1.w990.bb1.clearfix > dd').items()
    result = dict()  # sub-tab name -> url
    for tr in tr_list:
        url = tr('a').attr('href')  # url of a home-cooking sub-tab
        if url:
            result[tr('a').text()] = url
    return result


def othe_cooking(response):
    """
    Sub-tabs of the other large tabs, e.g. https://www.meishij.net/china-food/caixi/
    Returns {sub-tab name: url}.
    """
    jpy = PyQuery(response.text)
    tr_list = jpy('#listnav > div > dl > dd').items()
    result = dict()  # sub-tab name -> url
    for tr in tr_list:
        url = tr('a').attr('href')  # url of a sub-tab
        if url:
            result[tr('a').text()] = url
    return result


def Dishes(response):
    """
    Dish list page, e.g. https://www.meishij.net/chufang/diy/jiangchangcaipu/
    Returns the set of dish detail-page urls.
    """
    jpy = PyQuery(response.text)
    result = set()  # a set, so duplicate urls are dropped
    for tr in jpy('#listtyle1_list > div').items():
        url = tr('a').attr('href')  # url of a dish
        if url:
            result.add(url)
    return result


def Dishes_Details(response):
    """
    Dish detail page, e.g. https://www.meishij.net/zuofa/nanguaputaoganfagao_2.html
    Returns mainly the dish name, main image, ingredients and cooking steps.
    """
    jpy = PyQuery(response.text)
    main = 'body > div.main_w.clearfix > div.main.clearfix'
    header = main + ' > div.cp_header.clearfix'
    materials = main + ' > div.cp_body.clearfix > div.cp_body_left > div.materials > div'
    # 用料 = ingredients, 统计 = stats, 做法 = steps, 主图 = main image, 菜名 = dish name
    result = {'用料': {}, '统计': {}, '做法': {}}

    result['主图'] = jpy(header + ' > div.cp_headerimg_w > img').attr('src')
    result['菜名'] = jpy('#tongji_title').text()

    for i in jpy(header + ' > div.cp_main_info_w > div.info2 > ul > li').items():
        result['统计'][i('strong').text()] = i('a').text()

    # Main ingredients: pair name and amount per <li>, so a name containing a
    # space or a missing amount cannot shift the pairing
    for li in jpy(materials + ' > div.yl.zl.clearfix > ul > li').items():
        result['用料'][li('div > h4 > a').text()] = li('div > h4 > span').text()
    # Auxiliary ingredients: one entry per <li> instead of one key for all of them
    for li in jpy(materials + ' > div.yl.fuliao.clearfix > ul > li').items():
        result['用料'][li('h4 > a').text()] = li.children('span').text()

    # Cooking steps: every step is marked by an <em class="step">
    count = 1
    for i in jpy("em.step").items():
        parent = i.parent()
        if parent.is_("div"):
            text = i.text() + parent("p").text()
            img = parent('img').attr('src')
        elif parent.is_("p"):
            text = parent("p").text()
            img = parent.parent()('p')('img').attr('src')
        else:
            continue
        result['做法']['step_' + str(count)] = [text, img]
        count += 1
    return result


def fanye(response):
    """Return the "next page" link of a dish list page, or None on the last page."""
    jqy = PyQuery(response.text)
    return jqy('#listtyle1_w > div.listtyle1_page > div > a.next').attr('href')


if __name__ == '__main__':
    # Manual check outside Scrapy: a requests Response also has .text
    import requests
    # r = requests.get('https://www.meishij.net/zuofa/youmenchunsun_15.html')
    # r = requests.get('https://www.meishij.net/zuofa/nanguaputaoganfagao_2.html')
    r = requests.get('https://www.meishij.net/chufang/diy/?&page=56')
    # Dishes_Details(r)
    print(fanye(r))
```
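`parse()` turns relative tab links into absolute ones by prepending the domain whenever the href does not already contain `https://www.meishij.net`, while `Home_cooking()` and `othe_cooking()` keep hrefs as they are. A minimal sketch of a more general approach, assuming the raw hrefs have already been collected into a `{name: href}` dict: the hypothetical helper `normalize_tabs` below uses the standard library's `urljoin`, which also copes with relative and protocol-relative links, and applies the same `shicai`/`pengren` filter. Inside a Scrapy callback, `response.urljoin(href)` does the same join against the page's own URL.

```python
from urllib.parse import urljoin

BASE = "https://www.meishij.net/"


def normalize_tabs(links, skip=("shicai", "pengren")):
    """Map {tab name: raw href} to {tab name: absolute url}.

    Empty hrefs are dropped, and so is any tab whose absolute url
    contains one of the `skip` substrings (hypothetical helper).
    """
    result = {}
    for name, href in links.items():
        if not href:
            continue
        url = urljoin(BASE, href)  # absolute hrefs pass through unchanged
        if any(part in url for part in skip):
            continue
        result[name] = url
    return result
```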

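I didn't use any middleware or item pipelines here. The spider writes straight to MongoDB, with the data grouped by category: each document has the form `{category: {sub-category: dish details}}`.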
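With this nested layout the category name is itself the field name, so finding every dish of one category takes a query such as `{"家常菜谱": {"$exists": True}}`. If you later want to sort or count by category, one option (a sketch, not what the spider above does) is to flatten each document into fixed `category` / `sub_category` fields. The hypothetical helpers below do that for documents as returned by `find()`, which adds an `_id` field, and then group dish names (`菜名`) by category pair.

```python
from collections import defaultdict


def flatten(doc):
    """Turn one stored document {category: {sub_category: details}} into a flat record.

    Assumes, as in the spider, exactly one field besides MongoDB's `_id`.
    """
    fields = {k: v for k, v in doc.items() if k != "_id"}
    (category, inner), = fields.items()
    (sub_category, details), = inner.items()
    return {"category": category, "sub_category": sub_category, **details}


def group_names(docs):
    """Group dish names by (category, sub_category)."""
    groups = defaultdict(list)
    for doc in docs:
        rec = flatten(doc)
        groups[(rec["category"], rec["sub_category"])].append(rec.get("菜名"))
    return dict(groups)
```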

Results