K-近邻算法(KNN)概述

邻近算法,或者说K最近邻(kNN,k-NearestNeighbor)分类算法是数据挖掘分类技术中最简单的方法之一。所谓K最近邻,就是k个最近的邻居的意思,说的是每个样本都可以用它最接近的k个邻居来代表。Cover和Hart在1968年提出了最初的邻近算法。KNN是一种分类(classification)算法,它输入基于实例的学习(instance-based learning),属于懒惰学习(lazy learning)即KNN没有显式的学习过程,也就是说没有训练阶段,数据集事先已有了分类和特征值,待收到新样本后直接进行处理。

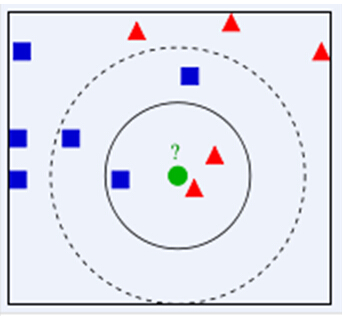

下面通过一个简单的例子说明一下:如下图,绿色圆要被决定赋予哪个类,是红色三角形还是蓝色四方形?如果K=3,由于红色三角形所占比例为2/3,绿色圆将被赋予红色三角形那个类,如果K=5,由于蓝色四方形比例为3/5,因此绿色圆被赋予蓝色四方形类。

由此也说明了KNN算法的结果很大程度取决于K的选择。

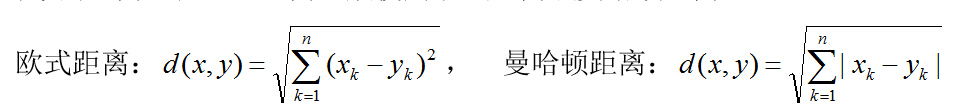

在KNN中,通过计算对象间距离来作为各个对象之间的非相似性指标,避免了对象之间的匹配问题,在这里距离一般使用欧氏距离或曼哈顿距离:

同时,KNN通过依据k个对象中占优的类别进行决策,而不是单一的对象类别决策。这两点就是KNN算法的优势。

接下来对KNN算法的思想总结一下:就是在训练集中数据和标签已知的情况下,输入测试数据,将测试数据的特征与训练集中对应的特征进行相互比较,找到训练集中与之最为相似的前K个数据,则该测试数据对应的类别就是K个数据中出现次数最多的那个分类,其算法的描述为:

1)计算测试数据与各个训练数据之间的距离;

2)按照距离的递增关系进行排序;

3)选取距离最小的K个点;

4)确定前K个点所在类别的出现频率;

5)返回前K个点中出现频率最高的类别作为测试数据的预测分类。

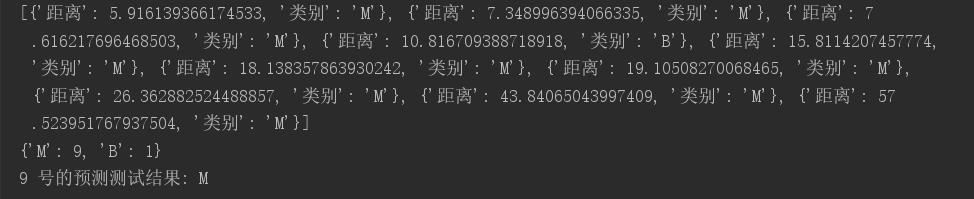

# knn算法步骤 # 1)计算测试数据与各个训练数据之间的距离,一般选取数据的10分之1作为测试集,其余为训练集; # 2)按照距离的递增关系进行排序; # 3)选取距离最小的K个点,这里可以为前k个数分配权重; # 4)确定前K个点所在类别的出现频率; # 5)返回前K个点中出现频率最高的类别作为测试数据的预测分类。 #读取数据 def Read(): listinfo = [] with open('test.csv', 'rb') as myFile: for line in myFile: info = line.decode('utf-8') info = info.rstrip(' ') listinfo.append(info.split(',')) #print(info.split(',')) return listinfo #计算距离并排序 def Distance(listinfo,list): diclist = [] id = 1 for i in range(0,len(listinfo)): # print(listinfo[i]) dic = {} distance = 0 for j in range(2,len(listinfo[i])): #print(listinfo[i][j],list[j]) distance += (float(listinfo[i][j]) -float(list[j]))**2 distance = distance**0.5 dic['距离'] = distance dic['类别'] = listinfo[i][1] diclist.append(dic) id+=1 #升序排序 diclist.sort(key=f1) # print(diclist) return diclist #以某个特征 def f1(x): return x['距离'] #选取k个数,并计算k个数的类别概率 def Count(listinfo,k,kinddic): #获取距离最小/最近的几个训练集 list = listinfo[0:k] print(list) for l in list: if l['类别'] == "M": kinddic[l['类别']] +=1 else:kinddic[l['类别']] +=1 print(kinddic) return kinddic #选取类别概率最大类别作为测试集的预测类别 def outcome(kinddic): global testid #获取字典中最大的元素 max_prices = max(zip(kinddic.values(), kinddic.keys())) # print(max_prices) print(testid,"号的预测测试结果:",max_prices[1]) listinfo = Read() listinfo = listinfo[1:] #训练集 newlistinfo = listinfo[0:90] #测试集 testinfo = listinfo[90:] testid = 9 diclist = Distance(newlistinfo,testinfo[testid]) kinddic ={'M':0,'B':0} kinddic = Count(diclist,10,kinddic) outcome(kinddic)

id,diagnosis_result,radius,texture,perimeter,area,smoothness,compactness,symmetry,fractal_dimension

1,M,23,12,151,954,0.143,0.278,0.242,0.079

2,B,9,13,133,1326,0.143,0.079,0.181,0.057

3,M,21,27,130,1203,0.125,0.16,0.207,0.06

4,M,14,16,78,386,0.07,0.284,0.26,0.097

5,M,9,19,135,1297,0.141,0.133,0.181,0.059

6,B,25,25,83,477,0.128,0.17,0.209,0.076

7,M,16,26,120,1040,0.095,0.109,0.179,0.057

8,M,15,18,90,578,0.119,0.165,0.22,0.075

9,M,19,24,88,520,0.127,0.193,0.235,0.074

10,M,25,11,84,476,0.119,0.24,0.203,0.082

11,M,24,21,103,798,0.082,0.067,0.153,0.057

12,M,17,15,104,781,0.097,0.129,0.184,0.061

13,B,14,15,132,1123,0.097,0.246,0.24,0.078

14,M,12,22,104,783,0.084,0.1,0.185,0.053

15,M,12,13,94,578,0.113,0.229,0.207,0.077

16,M,22,19,97,659,0.114,0.16,0.23,0.071

17,M,10,16,95,685,0.099,0.072,0.159,0.059

18,M,15,14,108,799,0.117,0.202,0.216,0.074

19,M,20,14,130,1260,0.098,0.103,0.158,0.054

20,B,17,11,87,566,0.098,0.081,0.189,0.058

21,B,16,14,86,520,0.108,0.127,0.197,0.068

22,B,17,24,60,274,0.102,0.065,0.182,0.069

23,M,20,27,103,704,0.107,0.214,0.252,0.07

24,M,19,12,137,1404,0.094,0.102,0.177,0.053

25,M,9,13,110,905,0.112,0.146,0.2,0.063

26,M,19,27,116,913,0.119,0.228,0.304,0.074

27,M,10,24,97,645,0.105,0.187,0.225,0.069

28,M,16,24,122,1094,0.094,0.107,0.17,0.057

29,M,15,15,102,732,0.108,0.17,0.193,0.065

30,M,11,16,115,955,0.098,0.116,0.174,0.061

31,M,11,22,125,1088,0.106,0.189,0.218,0.062

32,M,23,26,78,441,0.111,0.152,0.23,0.078

33,M,20,18,113,899,0.12,0.15,0.225,0.064

34,M,11,21,128,1162,0.094,0.172,0.185,0.063

35,M,16,23,107,807,0.104,0.156,0.2,0.065

36,M,10,13,110,870,0.096,0.134,0.19,0.057

37,M,18,12,94,633,0.098,0.11,0.189,0.061

38,B,21,11,83,524,0.09,0.038,0.147,0.059

39,M,11,15,96,699,0.094,0.051,0.157,0.055

40,M,10,14,88,559,0.102,0.126,0.172,0.064

41,M,24,16,86,563,0.082,0.06,0.178,0.056

42,M,19,27,72,371,0.123,0.122,0.19,0.069

43,M,11,11,128,1104,0.091,0.219,0.231,0.063

44,M,15,21,87,545,0.104,0.144,0.197,0.068

45,M,10,15,85,532,0.097,0.105,0.175,0.062

46,M,18,11,124,1076,0.11,0.169,0.191,0.06

47,B,22,12,52,202,0.086,0.059,0.177,0.065

48,M,20,14,86,535,0.116,0.123,0.213,0.068

49,B,20,21,78,449,0.103,0.091,0.168,0.06

50,B,25,11,87,561,0.088,0.077,0.181,0.057

51,B,19,25,75,428,0.086,0.05,0.15,0.059

52,B,19,22,87,572,0.077,0.061,0.135,0.06

53,B,25,15,76,438,0.083,0.048,0.187,0.061

54,M,14,26,120,1033,0.115,0.149,0.209,0.063

55,M,18,25,97,713,0.091,0.071,0.162,0.057

56,B,18,13,73,409,0.095,0.055,0.192,0.059

57,M,10,19,126,1152,0.105,0.127,0.192,0.06

58,M,17,20,96,657,0.114,0.137,0.203,0.068

59,B,22,15,83,527,0.081,0.038,0.182,0.055

60,B,23,26,54,225,0.098,0.053,0.168,0.072

61,B,15,18,65,312,0.113,0.081,0.274,0.07

62,B,25,15,55,222,0.124,0.09,0.183,0.068

63,M,12,22,96,646,0.105,0.201,0.195,0.073

64,B,24,17,59,261,0.077,0.088,0.234,0.07

65,M,16,19,83,499,0.112,0.126,0.191,0.066

66,M,11,21,97,668,0.117,0.148,0.195,0.067

67,B,12,13,60,269,0.104,0.078,0.172,0.069

68,B,18,12,72,394,0.081,0.047,0.152,0.057

69,B,16,17,59,251,0.107,0.141,0.211,0.08

70,B,17,21,81,503,0.098,0.052,0.159,0.057

71,M,21,18,124,1130,0.09,0.103,0.158,0.055

72,B,9,26,59,244,0.098,0.153,0.19,0.09

73,M,21,12,114,929,0.107,0.183,0.193,0.065

74,M,22,25,90,584,0.101,0.128,0.166,0.066

75,B,18,13,79,471,0.092,0.068,0.172,0.059

76,M,21,18,104,818,0.092,0.084,0.18,0.054

77,B,10,17,88,559,0.129,0.105,0.24,0.066

78,M,11,21,120,1006,0.107,0.215,0.215,0.067

79,M,16,18,144,1245,0.129,0.345,0.291,0.081

80,B,22,16,83,506,0.099,0.095,0.172,0.06

81,B,10,18,74,402,0.11,0.094,0.184,0.07

82,B,17,21,86,520,0.108,0.154,0.194,0.069

83,M,10,15,172,1878,0.106,0.267,0.183,0.068

84,M,20,14,129,1132,0.122,0.179,0.163,0.072

85,B,25,21,77,443,0.097,0.072,0.208,0.06

86,M,14,13,121,1075,0.099,0.105,0.213,0.06

87,M,19,26,94,648,0.094,0.099,0.208,0.056

88,M,19,11,122,1076,0.09,0.121,0.195,0.056

89,B,11,11,80,466,0.088,0.094,0.193,0.064

90,B,12,23,96,652,0.113,0.134,0.212,0.063

91,B,23,27,95,663,0.09,0.086,0.169,0.059

92,M,10,12,100,728,0.092,0.104,0.172,0.061

93,B,14,14,85,552,0.074,0.051,0.139,0.053

94,B,10,17,87,555,0.102,0.082,0.164,0.057

95,M,22,26,100,706,0.104,0.155,0.186,0.063

96,M,23,16,132,1264,0.091,0.131,0.21,0.056

97,B,22,14,78,451,0.105,0.071,0.19,0.066

98,B,19,27,62,295,0.102,0.053,0.135,0.069

99,B,21,24,74,413,0.09,0.075,0.162,0.066

100,M,16,27,94,643,0.098,0.114,0.188,0.064