URL of the page to scrape: https://ncov.dxy.cn/ncovh5/view/pneumonia?from=timeline&isappinstalled=0

Database connection code:

    import pymysql


    def db_connect():
        # PyMySQL 1.0+ only accepts keyword arguments here.
        db = pymysql.connect(host='localhost', user='root',
                             password='zzm666', database='payiqing')
        print('database connect success')
        return db
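The connection code above hardcodes the credentials. As a minimal sketch, they could instead be read from environment variables. The `PAYIQING_DB_*` variable names and the `load_db_config` helper are hypothetical and not part of the original script; the dictionary keys are the keyword names `pymysql.connect` accepts.

```python
import os


def load_db_config(env=None):
    """Build keyword arguments for pymysql.connect from environment variables.

    The PAYIQING_DB_* names are hypothetical. The defaults mirror the values
    used in the connection code above, except the password, which has none.
    """
    env = os.environ if env is None else env
    return {
        'host': env.get('PAYIQING_DB_HOST', 'localhost'),
        'user': env.get('PAYIQING_DB_USER', 'root'),
        # Required: raises KeyError when the variable is not set.
        'password': env['PAYIQING_DB_PASSWORD'],
        'database': env.get('PAYIQING_DB_NAME', 'payiqing'),
    }
```

With this in place the connection could be opened as `pymysql.connect(**load_db_config())`, which keeps the password out of the source file.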

Scraping code:

    import csv
    import time

    from selenium import webdriver
    from selenium.webdriver.common.by import By


    def pa_website(db):
        driver = webdriver.Chrome()
        driver.get('https://ncov.dxy.cn/ncovh5/view/pneumonia?from=timeline&isappinstalled=0')
        time.sleep(5)  # wait for the page to render so the data is complete
        # Click "more data": the page does not load every row up front.
        driver.find_element(By.XPATH, '//*[@id="root"]/div/div[4]/div[9]/div[21]').click()
        # Find the parent nodes of the data to scrape.
        divs = driver.find_elements(By.XPATH, '//*[@id="root"]/div/div[4]/div[9]/div[@class="fold___85nCd"]')
        cursor = db.cursor()
        # Parameterized query: the driver escapes the values, unlike string formatting.
        sql = ('insert into info(id,address,confirm_issue,all_confirm,dead,cure) '
               'values (%s,%s,%s,%s,%s,%s)')
        block = './/div[@class="areaBlock1___3qjL7"]'
        for div in divs:
            address = div.find_element(By.XPATH, block + '/p[1]').text
            confirm_issue = div.find_element(By.XPATH, block + '/p[2]').text
            all_confirm = div.find_element(By.XPATH, block + '/p[3]').text
            dead = div.find_element(By.XPATH, block + '/p[4]').text
            cure = div.find_element(By.XPATH, block + '/p[5]').text
            # Append the row to data.csv (the with statement is the recommended way).
            with open('data.csv', 'a', newline='') as csvfile:
                writer = csv.writer(csvfile, delimiter=',')
                writer.writerow([address, confirm_issue, all_confirm, dead, cure])
            cursor.execute(sql, (0, address, confirm_issue, all_confirm, dead, cure))
            db.commit()
            print('data inserted successfully')
        db.close()
        driver.quit()
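The fixed `time.sleep(5)` in the scraping code either wastes time or is too short on a slow connection. One possible alternative is to poll until the elements appear. The sketch below is a plain-Python helper; the name `wait_until` and its parameters are hypothetical and not part of the original script. Selenium also ships its own `WebDriverWait` class for the same purpose.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call condition() until it returns a truthy value or the timeout elapses.

    Returns the truthy value, or raises TimeoutError when time runs out.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError('condition not met within %.1f s' % timeout)
        time.sleep(interval)
```

Because `find_elements` returns an empty list when nothing matches, it can be passed directly as the condition, for example `divs = wait_until(lambda: driver.find_elements(By.XPATH, xpath))`, where `xpath` is the parent path used above.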

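Scraping workflow:

1. Get the target URL

2. Locate the parent path of the target elements

3. Iterate over the targets under that path

4. Extract the data fields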

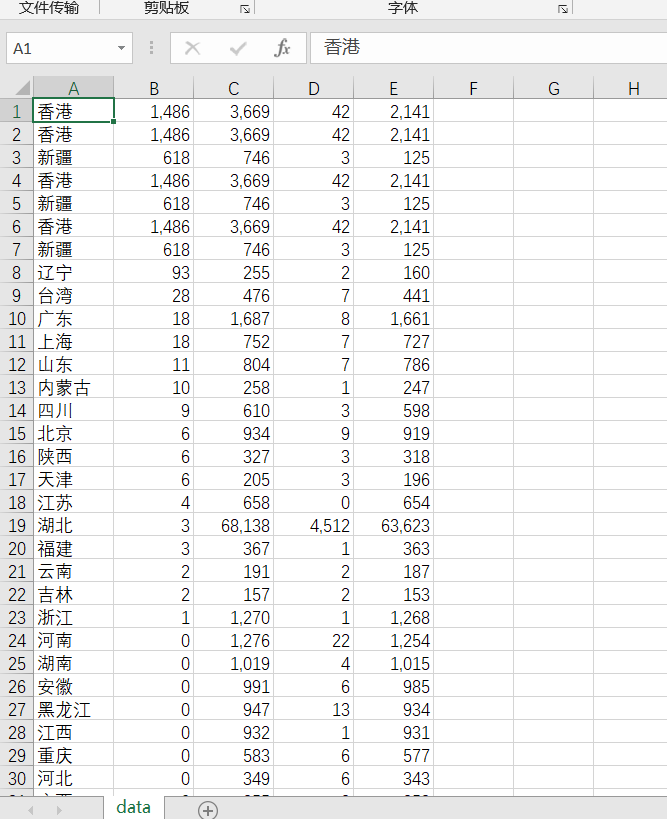

5. Generate a CSV file to display the data (optional)

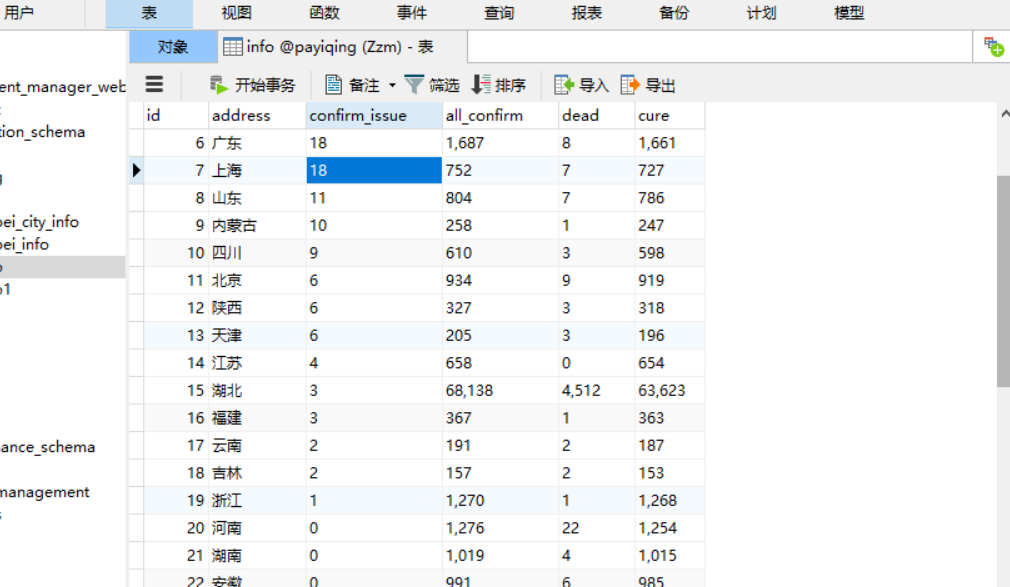

6. Insert the data into the database

7. Once all the data has been inserted, close the database connection
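Steps 5 to 7 can be tried without a browser or a MySQL server. The sketch below swaps in `sqlite3` from the standard library as a stand-in for MySQL, so the placeholder syntax is `?` rather than PyMySQL's `%s`. The table definition, the helper names `save_rows` and `demo`, and the sample row are assumptions made for illustration; the original does not show the schema of `info`.

```python
import csv
import os
import sqlite3
import tempfile
from contextlib import closing


def save_rows(rows, csv_path, db):
    """Append rows to a CSV file (step 5) and insert them into info (step 6)."""
    sql = ('insert into info(address,confirm_issue,all_confirm,dead,cure) '
           'values (?,?,?,?,?)')  # sqlite3 placeholders; PyMySQL would use %s
    with open(csv_path, 'a', newline='', encoding='utf-8') as csvfile:
        writer = csv.writer(csvfile)
        for row in rows:
            writer.writerow(row)
            db.execute(sql, row)
    db.commit()


def demo():
    """Run steps 5 to 7 on one placeholder row and return what was stored."""
    with tempfile.TemporaryDirectory() as tmp:
        csv_path = os.path.join(tmp, 'data.csv')
        # closing() performs step 7 (close) even if an insert fails.
        with closing(sqlite3.connect(':memory:')) as db:
            # Assumed schema: an integer key plus five text columns.
            db.execute('create table info(id integer primary key, address text, '
                       'confirm_issue text, all_confirm text, dead text, cure text)')
            # Placeholder values, not real data.
            save_rows([('region-a', '1', '2', '0', '1')], csv_path, db)
            db_rows = db.execute('select address, cure from info').fetchall()
        with open(csv_path, newline='', encoding='utf-8') as csvfile:
            csv_rows = list(csv.reader(csvfile))
    return db_rows, csv_rows
```

Opening the CSV file once outside the loop, rather than once per row, is a design choice of this sketch; the result on disk is the same.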

Appendix (full source code + program screenshot):

    import csv
    import time

    import pymysql
    from selenium import webdriver
    from selenium.webdriver.common.by import By


    def db_connect():
        # PyMySQL 1.0+ only accepts keyword arguments here.
        db = pymysql.connect(host='localhost', user='root',
                             password='zzm666', database='payiqing')
        print('database connect success')
        return db


    def pa_website(db):
        driver = webdriver.Chrome()
        driver.get('https://ncov.dxy.cn/ncovh5/view/pneumonia?from=timeline&isappinstalled=0')
        time.sleep(5)  # wait for the page to render
        # Click "more data" so that every row is loaded.
        driver.find_element(By.XPATH, '//*[@id="root"]/div/div[4]/div[9]/div[21]').click()
        divs = driver.find_elements(By.XPATH, '//*[@id="root"]/div/div[4]/div[9]/div[@class="fold___85nCd"]')
        cursor = db.cursor()
        # Parameterized query instead of string formatting.
        sql = ('insert into info(id,address,confirm_issue,all_confirm,dead,cure) '
               'values (%s,%s,%s,%s,%s,%s)')
        block = './/div[@class="areaBlock1___3qjL7"]'
        for div in divs:
            address = div.find_element(By.XPATH, block + '/p[1]').text
            confirm_issue = div.find_element(By.XPATH, block + '/p[2]').text
            all_confirm = div.find_element(By.XPATH, block + '/p[3]').text
            dead = div.find_element(By.XPATH, block + '/p[4]').text
            cure = div.find_element(By.XPATH, block + '/p[5]').text
            with open('data.csv', 'a', newline='') as csvfile:
                writer = csv.writer(csvfile, delimiter=',')
                writer.writerow([address, confirm_issue, all_confirm, dead, cure])
            cursor.execute(sql, (0, address, confirm_issue, all_confirm, dead, cure))
            db.commit()
            print('data inserted successfully')
        db.close()
        driver.quit()


    def main():
        db = db_connect()
        pa_website(db)


    if __name__ == "__main__":
        main()