1. Handwritten digits dataset

- from sklearn.datasets import load_digits

- digits = load_digits()

import numpy as np

from sklearn.datasets import load_digits

digits = load_digits()

x_data = digits.data.astype(np.float32)

x_target = digits.target.astype(np.float32).reshape(-1, 1)

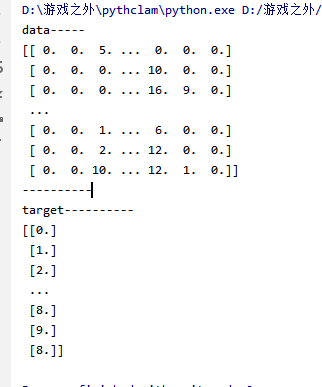

print("data-----")

print(x_data)

print("-"*10)

print("target"+"-"*10)

print(x_target)
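As a quick sanity check on what the printed arrays contain, the sketch below inspects the dataset's shapes and value ranges. It relies only on `load_digits`, as used above; the names `labels` and `counts` are illustrative and not part of the original code.

```python
import numpy as np
from sklearn.datasets import load_digits

digits = load_digits()

# Each sample is an 8x8 grayscale image flattened into 64 features.
print(digits.data.shape)    # (1797, 64)
print(digits.images.shape)  # (1797, 8, 8)

# Pixel intensities range from 0 to 16, stored as floats.
print(digits.data.min(), digits.data.max())

# Labels are the digits 0..9, with roughly 180 samples per class.
labels, counts = np.unique(digits.target, return_counts=True)
print(labels)
print(counts)
```

Because the raw pixel range is 0 to 16 rather than 0 to 255, the scaling step in the next section matters even though the images are tiny.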

2. Image data preprocessing

- x: min-max normalization with MinMaxScaler()

- y: one-hot encoding with OneHotEncoder() or to_categorical

- Train/test split

- Tensor shape

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

# Normalize each feature to the range [0, 1]

scaler = MinMaxScaler()

X_data = scaler.fit_transform(x_data)

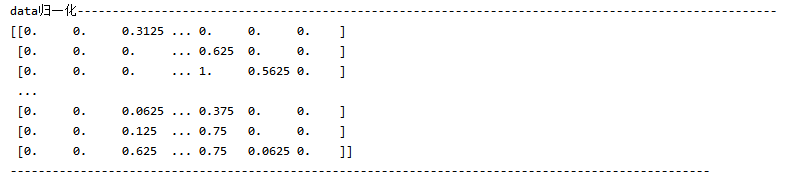

print("data normalized"+"-"*100)

print(X_data)

print("-"*100)

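# One-hot encoding. toarray() returns a plain ndarray; todense() would return
# an np.matrix, which newer scikit-learn versions may reject.

X_target = OneHotEncoder().fit_transform(x_target).toarray()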

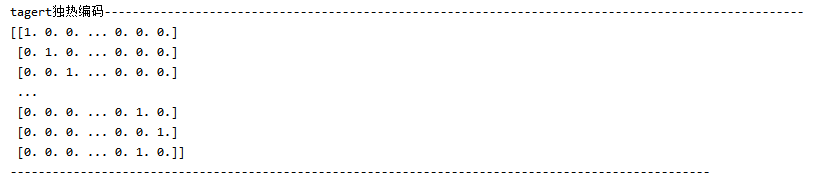

print("target one-hot encoded"+"-"*100)

print(X_target)

print("-"*100)

# Reshape to image format: (samples, height, width, channels)

X_data_1 = X_data.reshape(-1, 8, 8, 1)

# Train/test split

X_train, X_test, y_train, y_test = train_test_split(X_data_1, X_target, test_size=0.2, random_state=0, stratify=X_target)

print('X_train.shape, X_test.shape, y_train.shape, y_test.shape:',X_train.shape, X_test.shape, y_train.shape, y_test.shape)

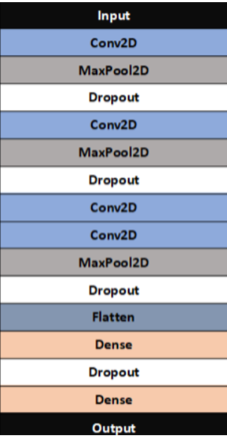

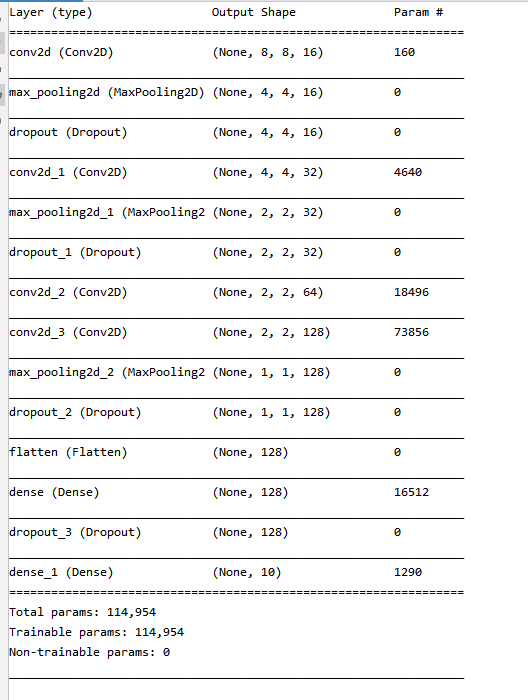
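To make the three preprocessing steps concrete, here is a minimal NumPy sketch of what they compute, run on a tiny made-up array instead of the digits data. The toy values and the names `x_scaled`, `y_onehot`, and `x_img` are assumptions for illustration. The identity-matrix trick is one common way to get the same result as `OneHotEncoder()` or `to_categorical` for integer labels.

```python
import numpy as np

# Toy stand-in for digits.data: 3 samples, 4 features (hypothetical values).
x = np.array([[0.0,  4.0, 16.0, 7.0],
              [0.0,  8.0,  0.0, 7.0],
              [0.0, 16.0,  8.0, 7.0]], dtype=np.float32)

# Min-max scaling per column: (x - min) / (max - min).
col_min = x.min(axis=0)
col_range = x.max(axis=0) - col_min
col_range[col_range == 0] = 1.0  # constant columns become 0, not a division by zero
x_scaled = (x - col_min) / col_range
print(x_scaled)

# One-hot encoding: row i of the identity matrix encodes class i.
y = np.array([2, 0, 1])
y_onehot = np.eye(3, dtype=np.float32)[y]
print(y_onehot)

# Reshape flat features into (samples, height, width, channels).
x_img = x_scaled.reshape(-1, 2, 2, 1)
print(x_img.shape)  # (3, 2, 2, 1)
```

The constant-column guard is relevant for the digits data, where some border pixels are zero in every image.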

3. Designing the convolutional neural network

- Draw the model architecture diagram and explain the design rationale.

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPool2D

model = Sequential()

ks = [3, 3] # convolution kernel size

model.add(Conv2D(filters=16, kernel_size=ks, padding='same', input_shape=X_train.shape[1:], activation='relu'))# first convolution layer

model.add(MaxPool2D(pool_size=(2, 2)))# pooling layer: 8x8 -> 4x4

model.add(Dropout(0.25))

model.add(Conv2D(filters=32, kernel_size=ks, padding='same', activation='relu'))# second convolution layer

model.add(MaxPool2D(pool_size=(2, 2)))# pooling layer: 4x4 -> 2x2

model.add(Dropout(0.25))

model.add(Conv2D(filters=64, kernel_size=ks, padding='same', activation='relu'))# third convolution layer

model.add(Conv2D(filters=128, kernel_size=ks, padding='same', activation='relu'))# fourth convolution layer

model.add(MaxPool2D(pool_size=(2, 2)))# pooling layer: 2x2 -> 1x1

model.add(Dropout(0.25))

model.add(Flatten())# flatten layer

model.add(Dense(128, activation='relu'))# fully connected layer

model.add(Dropout(0.25))

model.add(Dense(10, activation='softmax'))# output layer: softmax over the 10 digit classes

model.summary()

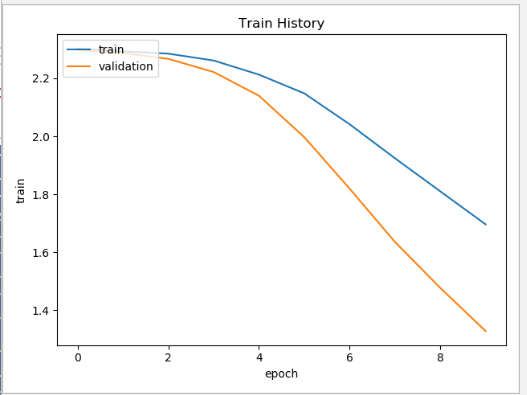

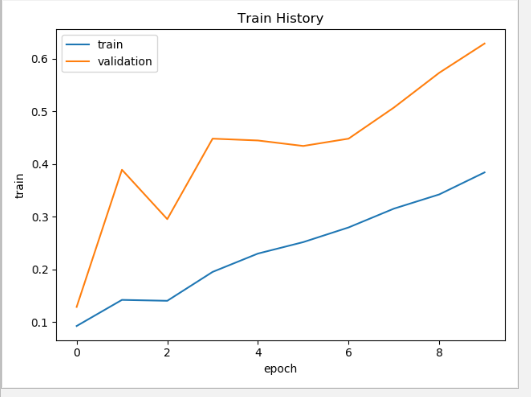
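One way to support the design rationale is to trace the tensor shape and parameter count through the stack by hand and compare the result with `model.summary()`. The sketch below does this in plain Python for the layer list above. The helper names `conv_params` and `dense_params` are hypothetical, and the arithmetic assumes 3x3 kernels with `padding='same'` and 2x2 pooling, as in the model code.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus one bias per filter for a 2D convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a fully connected layer."""
    return in_units * out_units + out_units


size, channels = 8, 1  # input images are 8x8 with one channel
total = 0

# 'conv' keeps the spatial size (padding='same'); 'pool' halves it (pool_size=(2, 2)).
# Dropout layers are omitted because they change neither shape nor parameter count.
layers = [('conv', 16), ('pool',), ('conv', 32), ('pool',),
          ('conv', 64), ('conv', 128), ('pool',)]

for layer in layers:
    if layer[0] == 'conv':
        total += conv_params(3, channels, layer[1])
        channels = layer[1]
    else:
        size //= 2
    print(layer[0], (size, size, channels))

flat = size * size * channels
total += dense_params(flat, 128) + dense_params(128, 10)
print('flatten', flat)
print('total parameters', total)
```

The trace shows the spatial size shrinking 8 -> 4 -> 2 -> 1, so three pooling layers are the most an 8x8 input can take. By the time the tensor is flattened, all the information sits in the 128 channels.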

4. Model training

- model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

- train_history = model.fit(x=X_train,y=y_train,validation_split=0.2, batch_size=300,epochs=10,verbose=2)

import matplotlib.pyplot as plt

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

train_history = model.fit(x=X_train,y=y_train,validation_split=0.2, batch_size=300,epochs=10,verbose=2)

def show_train_history(train_history, train, validation):

    # Plot one training metric against its validation counterpart, per epoch.

    plt.plot(train_history.history[train])

    plt.plot(train_history.history[validation])

    plt.title('Train History')

    plt.ylabel(train)  # label the y-axis with the metric name

    plt.xlabel('epoch')

    plt.legend(['train', 'validation'], loc='upper left')

    plt.show()

# Accuracy

show_train_history(train_history, 'accuracy', 'val_accuracy')

# Loss

show_train_history(train_history, 'loss', 'val_loss')

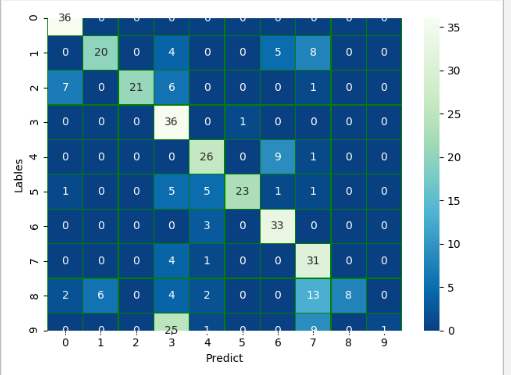

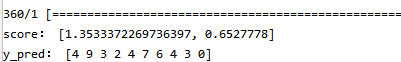
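It helps to work out how many samples each stage actually sees given `test_size=0.2`, `validation_split=0.2`, and `batch_size=300`. The sketch below is plain arithmetic, not Keras code. It assumes that scikit-learn rounds the test count up and that Keras holds out the trailing fraction of the training arrays for validation, rounding the fitted portion down. The variable names are illustrative.

```python
import math

n_samples = 1797        # size of the digits dataset
test_size = 0.2         # train_test_split(test_size=0.2)
validation_split = 0.2  # model.fit(validation_split=0.2)
batch_size = 300

# Assumption: the test set size is rounded up.
n_test = math.ceil(n_samples * test_size)
n_train_full = n_samples - n_test

# Assumption: the fitted portion is rounded down and the rest is validation.
n_fit = math.floor(n_train_full * (1 - validation_split))
n_val = n_train_full - n_fit

steps_per_epoch = math.ceil(n_fit / batch_size)

print('train/test:', n_train_full, n_test)
print('fit/validation:', n_fit, n_val)
print('weight updates per epoch:', steps_per_epoch)
```

With only a handful of weight updates per epoch, 10 epochs amount to a few dozen optimizer steps in total. If the curves above are still rising at the last epoch, a smaller batch size or more epochs may be worth trying.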

5. Model evaluation

- model.evaluate()

- Cross-tabulation and confusion matrix

- pandas.crosstab

- seaborn.heatmap
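The steps listed above can be sketched as follows. To keep the example self-contained, it uses randomly generated stand-ins: `y_true_onehot` plays the role of `y_test`, and `y_prob` plays the role of `model.predict(X_test)`. Both are hypothetical data, not results from the trained model. Since the labels were one-hot encoded earlier, both arrays are converted back to class indices with `argmax` before building the table.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Hypothetical stand-ins for y_test and model.predict(X_test).
y_true = rng.integers(0, 10, size=200)
y_true_onehot = np.eye(10)[y_true]
y_prob = rng.random((200, 10))
y_prob[np.arange(200), y_true] += 0.5         # bias toward the correct class
y_prob /= y_prob.sum(axis=1, keepdims=True)   # rows sum to 1, like softmax output

# Convert one-hot labels and predicted probabilities back to class indices.
y_label = np.argmax(y_true_onehot, axis=1)
y_pred = np.argmax(y_prob, axis=1)

# Overall accuracy: fraction of samples whose predicted class matches the label.
accuracy = np.mean(y_pred == y_label)
print('accuracy:', accuracy)

# Confusion matrix as a cross-tabulation: rows are true labels, columns are predictions.
table = pd.crosstab(y_label, y_pred, rownames=['label'], colnames=['predict'])
print(table)
```

In the real workflow, `model.evaluate(X_test, y_test)` returns the loss and accuracy configured in `model.compile`, and the resulting table can be drawn with `seaborn.heatmap(table, annot=True, fmt='d')`.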
