1. The Ceph configuration file

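A Ceph configuration file can configure every daemon in the storage cluster, or every daemon of a given type. To configure a group of daemons, the settings must be placed under the section that those daemons read. By default, on both Ceph servers and clients, the configuration file is /etc/ceph/ceph.conf.

If you change a configuration parameter, /etc/ceph/ceph.conf must be kept identical on all nodes, including clients.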
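One simple way to confirm that the copies really are identical is a byte-for-byte comparison. The sketch below assumes the ceph.conf of each node has already been collected into local files by some means (how you fetch them is up to you); the function name conf_in_sync and the file names in the usage note are made up for illustration and are not part of Ceph.

```shell
#!/bin/sh
# conf_in_sync REFERENCE COPY...
# Succeeds (exit status 0) only if every COPY is byte-identical to REFERENCE.
# Prints the name of each copy that differs (or cannot be read).
conf_in_sync() {
    reference=$1
    shift
    status=0
    for copy in "$@"; do
        # cmp -s is silent and returns non-zero on any difference
        if ! cmp -s "$reference" "$copy"; then
            echo "differs: $copy"
            status=1
        fi
    done
    return $status
}
```

With hypothetical collected copies this could be called as conf_in_sync /etc/ceph/ceph.conf ./ceph2.conf ./ceph3.conf ./ceph4.conf, and a non-zero exit status means at least one node is out of sync.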

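ceph.conf uses an INI-based file format and contains several sections holding configuration for Ceph daemons and clients. Each section has a name defined by a [name] header, followed by one or more parameters written as key-value pairs.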

[root@ceph2 ceph]# cat ceph.conf

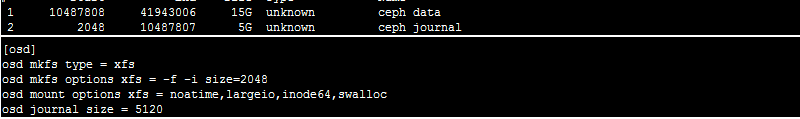

[global]    # settings shared by all daemons; applies to every process that reads the configuration file, including clients, so it affects all daemons in the Ceph cluster
fsid = 35a91e48-8244-4e96-a7ee-980ab989d20d    # the same ID that ceph -s reports
mon initial members = ceph2,ceph3,ceph4    # initial monitors: the monitor nodes defined when Ceph was first installed, which must be up and ready at startup
mon host = 172.25.250.11,172.25.250.12,172.25.250.13
public network = 172.25.250.0/24
cluster network = 172.25.250.0/24
[osd]    # settings for all ceph-osd daemons in the cluster; they override the same option under [global]
osd mkfs type = xfs
osd mkfs options xfs = -f -i size=2048
osd mount options xfs = noatime,largeio,inode64,swalloc
osd journal size = 5120

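Note: the configuration file uses # and ; for comments. Words in a parameter name may be separated by spaces, underscores, or hyphens, so osd journal size, osd_journal_size, and osd-journal-size are all valid and equivalent names.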
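The two rules above (a daemon-type section overrides [global], and the three spellings of a name are equivalent) can be illustrated with a small lookup helper. This is only a rough sketch of how such a lookup could work, not Ceph's own parser: the function name conf_get is invented here, it ignores per-daemon sections such as [osd.0], and it assumes values do not themselves contain # or ;.

```shell
#!/bin/sh
# conf_get FILE SECTION KEY
# Prints the value of KEY from [SECTION], falling back to [global].
# Returns non-zero if the key is found in neither section.
conf_get() {
    awk -v want="$2" -v key="$3" '
        # Treat space, underscore and hyphen as the same separator.
        function norm(s) { gsub(/[ \t_-]+/, "_", s); return s }
        /^[ \t]*[#;]/ { next }                      # whole-line comment
        /^[ \t]*\[/ {                               # section header
            section = $0
            gsub(/^[ \t]*\[|\].*$/, "", section)
            next
        }
        index($0, "=") {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            sub(/[ \t]*[#;].*$/, "", v)             # strip trailing comment
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (norm(k) == norm(key)) {
                if (section == want) { specific = v; have_specific = 1 }
                else if (section == "global") { general = v; have_general = 1 }
            }
        }
        END {
            if (have_specific) print specific
            else if (have_general) print general
            else exit 1
        }
    ' "$1"
}
```

For example, conf_get /etc/ceph/ceph.conf osd 'osd-journal-size' would look for the option under [osd] first and only then under [global], whichever spelling the file uses.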

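1.1 About OSDs

When a raw disk is handed to Ceph, it is split into two partitions, one for data and one for the journal, and the data partition is formatted as XFS.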

[root@ceph2 ceph]# fdisk -l

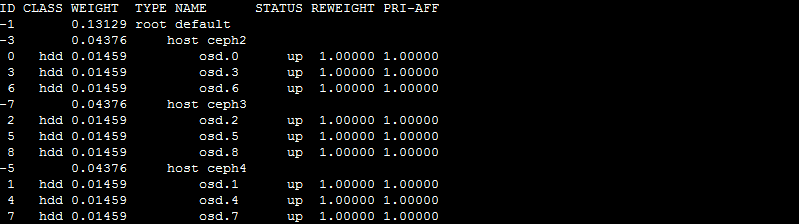

Mapping between OSD IDs and disks:

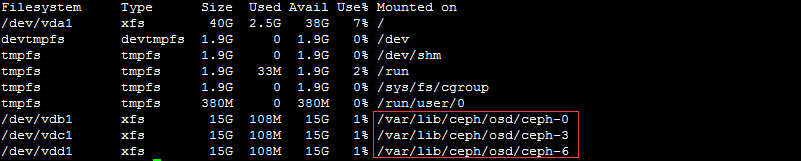

[root@ceph2 ceph]# df -hT

Ceph's configuration directory and working directory are /etc/ceph and /var/lib/ceph/ respectively.

[root@ceph2 ceph]# ceph osd tree

2. Deleting a storage pool

2.1 Edit the configuration file

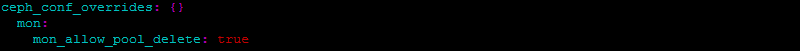

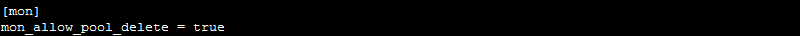

First set the mon_allow_pool_delete parameter to true:

[root@ceph1 ceph-ansible]# vim /etc/ceph/ceph.conf
[global]
fsid = 35a91e48-8244-4e96-a7ee-980ab989d20d
mon initial members = ceph2,ceph3,ceph4
mon host = 172.25.250.11,172.25.250.12,172.25.250.13
public network = 172.25.250.0/24
cluster network = 172.25.250.0/24
[osd]
osd mkfs type = xfs
osd mkfs options xfs = -f -i size=2048
osd mount options xfs = noatime,largeio,inode64,swalloc
osd journal size = 5120
[mon]    # added setting
mon_allow_pool_delete = true

2.2 Sync to all nodes

[root@ceph1 ceph-ansible]# ansible all -m copy -a 'src=/etc/ceph/ceph.conf dest=/etc/ceph/ceph.conf owner=ceph group=ceph mode=0644'

ceph3 | SUCCESS => {
    "changed": true,
    "checksum": "18ad6b3743d303bdd07b8655be547de35f9b4e55",
    "dest": "/etc/ceph/ceph.conf",
    "failed": false,
    "gid": 1001,
    "group": "ceph",
    "md5sum": "8415ae9d959d31fdeb23b06ea7f61b1b",
    "mode": "0644",
    "owner": "ceph",
    "size": 500,
    "src": "/root/.ansible/tmp/ansible-tmp-1552807199.08-216306208753591/source",
    "state": "file",
    "uid": 1001
}
ceph4 | SUCCESS => {
    "changed": true,
    "checksum": "18ad6b3743d303bdd07b8655be547de35f9b4e55",
    "dest": "/etc/ceph/ceph.conf",
    "failed": false,
    "gid": 1001,
    "group": "ceph",
    "md5sum": "8415ae9d959d31fdeb23b06ea7f61b1b",
    "mode": "0644",
    "owner": "ceph",
    "size": 500,
    "src": "/root/.ansible/tmp/ansible-tmp-1552807199.09-46038387604349/source",
    "state": "file",
    "uid": 1001
}
ceph2 | SUCCESS => {
    "changed": true,
    "checksum": "18ad6b3743d303bdd07b8655be547de35f9b4e55",
    "dest": "/etc/ceph/ceph.conf",
    "failed": false,
    "gid": 1001,
    "group": "ceph",
    "md5sum": "8415ae9d959d31fdeb23b06ea7f61b1b",
    "mode": "0644",
    "owner": "ceph",
    "size": 500,
    "src": "/root/.ansible/tmp/ansible-tmp-1552807199.04-33302205115898/source",
    "state": "file",
    "uid": 1001
}
ceph1 | SUCCESS => {
    "changed": false,
    "checksum": "18ad6b3743d303bdd07b8655be547de35f9b4e55",
    "failed": false,
    "gid": 1001,
    "group": "ceph",
    "mode": "0644",
    "owner": "ceph",
    "path": "/etc/ceph/ceph.conf",
    "size": 500,
    "state": "file",
    "uid": 1001
}

Alternatively, set the option in the ceph-ansible variables file:

[root@ceph1 ceph-ansible]# vim /usr/share/ceph-ansible/group_vars/all.yml

Then re-run the playbook:

[root@ceph1 ceph-ansible]# ansible-playbook site.yml

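Changes to the configuration file do not take effect immediately. The affected daemons must be restarted, for example all OSDs or all monitors.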

[root@ceph2 ceph]# cat /etc/ceph/ceph.conf

The sync succeeded.

2.3 Restart the service on each node

[root@ceph2 ceph]# systemctl restart ceph-mon@ceph2

or

[root@ceph2 ceph]# systemctl restart ceph-mon.target

You can also restart them all at once with ansible:

[root@ceph1 ceph-ansible]# ansible mons -m shell -a ' systemctl restart ceph-mon.target'    # never do this in a production environment
ceph2 | SUCCESS | rc=0 >>
ceph4 | SUCCESS | rc=0 >>
ceph3 | SUCCESS | rc=0 >>

2.4 Delete the pool

[root@ceph2 ceph]# ceph osd pool ls
testpool
EC-pool
[root@ceph2 ceph]# ceph osd pool delete EC-pool EC-pool --yes-i-really-really-mean-it
pool 'EC-pool' removed
[root@ceph2 ceph]# ceph osd pool ls
testpool

3. Changing configuration settings

3.1 Changing a setting temporarily

[root@ceph2 ceph]# ceph tell mon.* injectargs '--mon_osd_nearfull_ratio 0.85'    # the output says a restart may be required, but the setting has already taken effect
mon.ceph2: injectargs:mon_osd_nearfull_ratio = '0.850000' (not observed, change may require restart)
mon.ceph3: injectargs:mon_osd_nearfull_ratio = '0.850000' (not observed, change may require restart)
mon.ceph4: injectargs:mon_osd_nearfull_ratio = '0.850000' (not observed, change may require restart)
[root@ceph2 ceph]# ceph tell mon.* injectargs '--mon_osd_full_ratio 0.95'
mon.ceph2: injectargs:mon_osd_full_ratio = '0.950000' (not observed, change may require restart)
mon.ceph3: injectargs:mon_osd_full_ratio = '0.950000' (not observed, change may require restart)
mon.ceph4: injectargs:mon_osd_full_ratio = '0.950000' (not observed, change may require restart)
[root@ceph2 ceph]# ceph daemon osd.0 config show|grep nearfull
    "mon_osd_nearfull_ratio": "0.850000",
[root@ceph2 ceph]# ceph daemon mon.ceph2 config show|grep mon_osd_full_ratio
    "mon_osd_full_ratio": "0.950000",

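3.2 Metavariables

Metavariables are variables built into Ceph. They can be used to simplify the ceph.conf file:

$cluster: the name of the Ceph storage cluster. It defaults to ceph and is defined in /etc/sysconfig/ceph. For example, the default value of the log_file parameter is /var/log/ceph/$cluster-$name.log, which expands to /var/log/ceph/ceph-mon.ceph-node1.log.

$type: the daemon type. Monitors use mon, OSDs use osd, metadata servers use mds, managers use mgr, and client applications use client. For example, if pid_file is set to /var/run/$cluster/$type.$id.pid in the [global] section, it expands to /var/run/ceph/osd.0.pid for the OSD with ID 0. For the MON daemon running on ceph-node1 it expands to /var/run/ceph/mon.ceph-node1.pid.

$id: the daemon instance ID. For the MON on ceph-node1 it is ceph-node1. For osd.1 it is 1. For a client application it is the user name.

$name: the daemon name and instance ID. This is shorthand for $type.$id.

$host: the name of the host on which the daemon runs.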
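To make the expansion rules concrete, here is a small sketch that reproduces the two examples from the list with plain text substitution. It only mimics the behaviour described above and is not how Ceph itself implements expansion; the function name expand_metavars and its argument order are invented for this illustration.

```shell
#!/bin/sh
# expand_metavars TEMPLATE CLUSTER TYPE ID HOST
# Prints TEMPLATE with $cluster, $type, $id, $name and $host substituted.
# Assumes the values contain no "|", "&" or backslash, which sed would
# treat specially in the replacement text.
expand_metavars() {
    cluster=$2 type=$3 id=$4 host=$5
    # $name is rewritten to $type.$id first, then each variable is filled in.
    printf '%s\n' "$1" | sed \
        -e 's|\$name|$type.$id|g' \
        -e 's|\$cluster|'"$cluster"'|g' \
        -e 's|\$type|'"$type"'|g' \
        -e 's|\$id|'"$id"'|g' \
        -e 's|\$host|'"$host"'|g'
}

expand_metavars '/var/log/ceph/$cluster-$name.log' ceph mon ceph-node1 ceph-node1
# prints /var/log/ceph/ceph-mon.ceph-node1.log
expand_metavars '/var/run/$cluster/$type.$id.pid' ceph osd 0 ceph-node1
# prints /var/run/ceph/osd.0.pid
```

Note the single quotes around the template: they keep the shell from expanding $cluster and friends before the function sees them.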

Additional note:

To kill all Ceph processes:

[root@ceph2 ceph]# ps -ef|grep "ceph-"|grep -v grep|awk '{print $2}'|xargs kill -9

3.3 Enabling cephx authentication between components

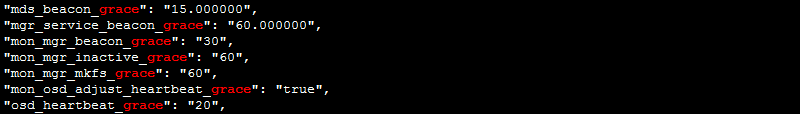

[root@ceph2 ceph]# ceph daemon osd.3 config show|grep grace

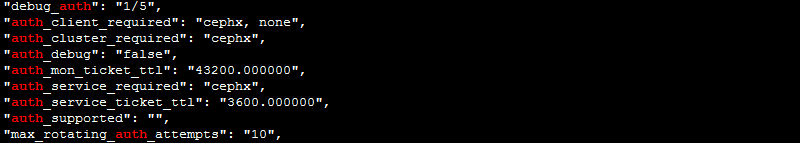

[root@ceph2 ceph]# ceph daemon mon.ceph2 config show|grep auth

Edit the configuration file:

[root@ceph1 ceph-ansible]# vim /etc/ceph/ceph.conf

auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx

[root@ceph1 ceph-ansible]# ansible all -m copy -a 'src=/etc/ceph/ceph.conf dest=/etc/ceph/ceph.conf owner=ceph group=ceph mode=0644'

[root@ceph1 ceph-ansible]# ansible mons -m shell -a ' systemctl restart ceph-mon.target'

[root@ceph1 ceph-ansible]# ansible mons -m shell -a ' systemctl restart ceph-osd.target'

[root@ceph1 ceph-ansible]# ansible mons -m shell -a ' systemctl restart ceph-mgr.target'

[root@ceph2 ceph]# ceph daemon mon.ceph2 config show|grep auth

cephx verification

4. Ceph user management

User management requires a user name and a key.

[root@ceph2 ceph]# cat ceph.client.admin.keyring

[client.admin]
    key = AQD7fYxcnG+wCRAARyLuAewyDcGmTPb5wdNRvQ==
    caps mds = "allow *"
    caps mgr = "allow *"
    caps mon = "allow *"
    caps osd = "allow *"

Without a keyring, commands such as ceph -s fail. For example, on ceph1:

[root@ceph1 ceph-ansible]# ceph -s

2019-03-17 16:19:31.824428 7fc87b255700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
2019-03-17 16:19:31.824437 7fc87b255700 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication
2019-03-17 16:19:31.824439 7fc87b255700 0 librados: client.admin initialization error (2) No such file or directory
[errno 2] error connecting to the cluster

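4.1 Ceph authorization

Ceph stores data as objects in storage pools. A Ceph user must have access permissions on a pool in order to read and write data in it.

Ceph uses caps (capabilities) to describe the authorization granted to a user. Caps are what allow the user to use the functions of the MONs, OSDs, and MDSs.

Caps are also used to restrict access to the data in a particular pool or a particular namespace.

A Ceph administrative user can grant the appropriate caps when creating or updating a regular user.

Common Ceph permissions:

r: grants read permission. Accessing any cluster information requires read permission on the monitors first.

w: grants write permission. Storing or modifying data on OSDs requires write permission on the OSDs.

x: grants permission to call object methods, both read and write, and to perform authentication operations on the monitors.

class-read: a subset of x that allows the user to call class read methods. Typically used on pools of type rbd.

class-write: a subset of x that allows the user to call class write methods. Typically used on pools of type rbd.

*: grants full permissions (r, w, and x) on the specified pool, plus permission to run administrative commands.

profile osd: authorizes a user to connect to other OSDs or monitors as an OSD. Used for OSD heartbeats and status reporting.

profile mds: authorizes a user to connect to other MDSs or monitors as an MDS.

profile bootstrap-osd: allows a user to bootstrap OSDs. For example, the ceph-deploy and ceph-disk tools use the client.bootstrap-osd user, which is allowed to add keys for OSDs and bootstrap them.

profile bootstrap-mds: allows a user to bootstrap MDSs. For example, the ceph-deploy tool uses the client.bootstrap-mds user, which is allowed to add keys for MDSs and bootstrap them.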

4.2 Adding users

[root@ceph2 ceph]# ceph auth add client.ning mon 'allow r' osd 'allow rw pool=testpool'    # if the user does not exist, it is created with these caps; if it exists with the same caps, nothing is printed; changing the caps of an existing user is not supported
added key for client.ning
[root@ceph2 ceph]# ceph auth add client.ning mon 'allow r' osd 'allow rw'
Error EINVAL: entity client.ning exists but cap osd does not match
[root@ceph2 ceph]# ceph auth get-or-create client.joy mon 'allow r' osd 'allow rw pool=mytestpool'    # if the user does not exist, it is created with these caps and the user and key are returned; if it exists with the same caps, the user and key are returned; if it exists with different caps, an error is returned
[client.joy]
    key = AQBiBY5cJ2gBLBAA/ZCGDdp6JWkPuuU0YaLsrw==
[root@ceph2 ceph]# cat ceph.client.admin.keyring
[client.admin]
    key = AQD7fYxcnG+wCRAARyLuAewyDcGmTPb5wdNRvQ==
    caps mds = "allow *"
    caps mgr = "allow *"
    caps mon = "allow *"
    caps osd = "allow *"
[root@ceph2 ceph]# ceph auth get-or-create client.joy mon 'allow r' osd 'allow rw'    # the user exists with different caps, so an error is returned (ceph auth get-or-create-key follows the same rules but returns only the key)
Error EINVAL: key for client.joy exists but cap osd does not match

4.3 Deleting users

[root@ceph2 ceph]# ceph auth get-or-create client.xxx    # create a user named xxx
[client.xxx]
    key = AQAOB45c4KIoCRAAF/kDd7r4uUjKEdEHOSP8Xw==
[root@ceph2 ceph]# ceph auth del client.xxx    # delete user xxx
updated
[root@ceph2 ceph]# ceph auth get client.xxx    # the user is gone
Error ENOENT: failed to find client.xxx in keyring
[root@ceph2 ceph]# ceph auth get client.ning
exported keyring for client.ning
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
    caps mon = "allow r"
    caps osd = "allow rw pool=testpool"
[root@ceph2 ceph]# ceph auth get-key client.ning
AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==

4.4 Exporting users

[root@ceph2 ceph]# ceph auth get-or-create client.ning -o ./ceph.client.ning.keyring
[root@ceph2 ceph]# ll ./ceph.client.ning.keyring
-rw-r--r-- 1 root root 62 Mar 17 16:39 ./ceph.client.ning.keyring
[root@ceph2 ceph]# cat ./ceph.client.ning.keyring
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==

Verify on ceph1:

[root@ceph2 ceph]# scp ./ceph.client.ning.keyring 172.25.250.10:/etc/ceph/    # copy the keyring to ceph1
[root@ceph1 ceph-ansible]# ceph -s    # fails: an ID or user name must be specified
2019-03-17 16:47:01.936939 7fbad2aae700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
2019-03-17 16:47:01.936950 7fbad2aae700 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication
2019-03-17 16:47:01.936951 7fbad2aae700 0 librados: client.admin initialization error (2) No such file or directory
[errno 2] error connecting to the cluster
[root@ceph1 ceph-ansible]# ceph -s --name client.ning    # query as the specified user
  cluster:
    id:     35a91e48-8244-4e96-a7ee-980ab989d20d
    health: HEALTH_OK
  services:
    mon: 3 daemons, quorum ceph2,ceph3,ceph4
    mgr: ceph4(active), standbys: ceph2, ceph3
    osd: 9 osds: 9 up, 9 in
  data:
    pools:   1 pools, 128 pgs
    objects: 3 objects, 21938 bytes
    usage:   972 MB used, 133 GB / 134 GB avail
    pgs:     128 active+clean
[root@ceph1 ceph-ansible]# ceph osd pool ls --name client.ning
testpool
[root@ceph1 ceph-ansible]# ceph osd pool ls --id ning
testpool
[root@ceph1 ceph-ansible]# rados -p testpool ls --id ning
test2
test
[root@ceph1 ceph-ansible]# rados -p testpool put aaa /etc/ceph/ceph.conf --id ning    # verify upload and download
[root@ceph1 ceph-ansible]# rados -p testpool ls --id ning
test2
aaa
test
[root@ceph1 ceph-ansible]# rados -p testpool get aaa /root/aaa.conf --name client.ning
[root@ceph1 ceph-ansible]# diff /root/aaa.conf /etc/ceph/ceph.conf

4.5 Importing users

[root@ceph2 ceph]# ceph auth export client.ning -o /etc/ceph/ceph.client.ning-1.keyring
export auth(auid = 18446744073709551615 key=AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw== with 2 caps)
[root@ceph2 ceph]# ll
total 20
-rw------- 1 ceph ceph 151 Mar 16 12:39 ceph.client.admin.keyring
-rw-r--r-- 1 root root 121 Mar 17 17:32 ceph.client.ning-1.keyring
-rw-r--r-- 1 root root 62 Mar 17 16:39 ceph.client.ning.keyring
-rw-r--r-- 1 ceph ceph 589 Mar 17 16:12 ceph.conf
drwxr-xr-x 2 ceph ceph 23 Mar 16 12:39 ceph.d
-rw-r--r-- 1 root root 92 Nov 23 2017 rbdmap
[root@ceph2 ceph]# cat ceph.client.ning-1.keyring    # includes the caps
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
    caps mon = "allow r"
    caps osd = "allow rw pool=testpool"
[root@ceph2 ceph]# ceph auth get client.ning
exported keyring for client.ning
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
    caps mon = "allow r"
    caps osd = "allow rw pool=testpool"

4.6 Restoring a deleted user

[root@ceph2 ceph]# cat ceph.client.ning.keyring    # this keyring carries no caps
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
[root@ceph2 ceph]# ceph auth del client.ning    # delete the user
updated
[root@ceph1 ceph-ansible]# ll /etc/ceph/ceph.client.ning.keyring    # on the client, the keyring file is still there
-rw-r--r-- 1 root root 62 Mar 17 16:40 /etc/ceph/ceph.client.ning.keyring
[root@ceph1 ceph-ansible]# ceph -s --name client.ning    # the user behind the keyring was deleted, so it no longer works
2019-03-17 17:49:13.896609 7f841eb27700 0 librados: client.ning authentication error (1) Operation not permitted
[errno 1] error connecting to the cluster
[root@ceph2 ceph]# ceph auth import -i ./ceph.client.ning-1.keyring    # restore from ning-1.keyring
imported keyring
[root@ceph2 ceph]# ceph auth list |grep ning    # the user is back
installed auth entries:
client.ning
[root@ceph1 ceph-ansible]# ceph osd pool ls --name client.ning    # verify on the client: the key works again
testpool
EC-pool

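4.7 Modifying user permissions

There are two ways to do this. One is to delete the user and recreate it with the new permissions, but that invalidates every client that connects as this user. The other is to modify the caps in place, as described below.

ceph auth caps modifies a user's authorization. If the given user does not exist, it returns an error. If the user exists, the newly specified caps overwrite the existing ones. So if you only want to add a permission, you must restate the existing caps unchanged along with the new one. To remove a permission, set that cap to an empty string.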
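Because the new caps replace the old ones, a common pattern is to read the current cap string, append to it, and pass the combined string back. The helpers below sketch that text handling only. The names current_cap and append_cap are invented for this example, and the parsing assumes the keyring layout that ceph auth get prints elsewhere in this article (caps osd = "...").

```shell
#!/bin/sh
# current_cap TYPE
# Reads keyring text on stdin and prints the cap string for TYPE
# (mon, osd, mds, mgr) without the surrounding double quotes.
current_cap() {
    sed -n "s/^[[:space:]]*caps $1 = \"\\(.*\\)\"[[:space:]]*\$/\\1/p"
}

# append_cap EXISTING NEW
# Prints EXISTING and NEW joined by a comma, or just NEW if EXISTING is empty.
append_cap() {
    if [ -z "$1" ]; then
        printf '%s\n' "$2"
    else
        printf '%s,%s\n' "$1" "$2"
    fi
}
```

A possible use, assuming a working ceph CLI and admin keyring: old=$(ceph auth get client.joy | current_cap osd), then ceph auth caps client.joy mon 'allow r' osd "$(append_cap "$old" 'allow rw pool=testpool')". The mon cap still has to be restated by hand in this sketch.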

[root@ceph2 ceph]# ceph auth get client.joy    # show the user's caps
exported keyring for client.joy
[client.joy]
    key = AQBiBY5cJ2gBLBAA/ZCGDdp6JWkPuuU0YaLsrw==
    caps mon = "allow r"
    caps osd = "allow rw pool=mytestpool"
[root@ceph2 ceph]# ceph osd pool ls
testpool
EC-pool
[root@ceph2 ceph]# ceph auth caps client.joy mon 'allow r' osd 'allow rw pool=mytestpool,allow rw pool=testpool'    # give user joy access to the pool testpool as well
updated caps for client.joy
[root@ceph2 ceph]# ceph auth get client.joy    # the cap was added
exported keyring for client.joy
[client.joy]
    key = AQBiBY5cJ2gBLBAA/ZCGDdp6JWkPuuU0YaLsrw==
    caps mon = "allow r"
    caps osd = "allow rw pool=mytestpool,allow rw pool=testpool"
[root@ceph2 ceph]# rados -p testpool put joy /etc/ceph/ceph.client.admin.keyring --id joy    # fails: there is no keyring file for joy yet, so one must be exported
2019-03-17 18:01:36.602310 7ff35e71ee40 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.joy.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
2019-03-17 18:01:36.602337 7ff35e71ee40 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication
2019-03-17 18:01:36.602340 7ff35e71ee40 0 librados: client.joy initialization error (2) No such file or directory
couldn't connect to cluster: (2) No such file or directory
[root@ceph2 ceph]# ceph auth get-or-create client.joy -o /etc/ceph/ceph.client.joy.keyring    # export the keyring
[root@ceph2 ceph]# rados -p testpool put joy /etc/ceph/ceph.client.admin.keyring --id joy    # test an upload
[root@ceph2 ceph]# rados -p testpool ls --id joy    # it works, so the caps were changed successfully
joy
test2
aaa
test
[root@ceph2 ceph]# ceph auth caps client.joy mon 'allow r' osd 'allow rw pool=testpool'    # drop the caps on mytestpool
updated caps for client.joy
[root@ceph2 ceph]# ceph auth get client.joy    # the change succeeded
exported keyring for client.joy
[client.joy]
    key = AQBiBY5cJ2gBLBAA/ZCGDdp6JWkPuuU0YaLsrw==
    caps mon = "allow r"
    caps osd = "allow rw pool=testpool"
[root@ceph2 ceph]# ceph auth caps client.ning mon '' osd ''    # trying to clear every cap fails: the mon cap cannot be an empty string, so read access on mon has to stay
Error EINVAL: moncap parse failed, stopped at end of ''
[root@ceph2 ceph]# ceph auth caps client.ning mon 'allow r' osd ''    # clears the osd caps; only the mon cap remains
updated caps for client.ning
[root@ceph2 ceph]# ceph auth get client.ning
exported keyring for client.ning
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
    caps mon = "allow r"
    caps osd = ""
[root@ceph2 ceph]# ceph auth get-or-create joyning    # fails because the entity name has no type prefix such as client.
Error EINVAL: bad entity name

4.8 Pushing users to clients

Users are created mainly to authorize clients, so each user's keyring has to be pushed to the client. To push several users to the same client, write them all into one keyring file and push that single file.

[root@ceph2 ceph]# ceph-authtool -C /etc/ceph/ceph.keyring    # create a keyring file
creating /etc/ceph/ceph.keyring
[root@ceph2 ceph]# ceph-authtool ceph.keyring --import-keyring ceph.client.ning.keyring    # add user client.ning to the keyring file
importing contents of ceph.client.ning.keyring into ceph.keyring
[root@ceph2 ceph]# cat ceph.keyring    # inspect it
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
[root@ceph2 ceph]# ceph-authtool ceph.keyring --import-keyring ceph.client.joy.keyring    # add user client.joy to the keyring file
importing contents of ceph.client.joy.keyring into ceph.keyring
[root@ceph2 ceph]# cat ceph.keyring    # both users are present; push this file to a client and it can use the permissions of either user
[client.joy]
    key = AQBiBY5cJ2gBLBAA/ZCGDdp6JWkPuuU0YaLsrw==
[client.ning]
    key = AQAcBY5coY/rLxAAvq99xcSOrwLI1ip0WAw2Sw==
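Once several users share one keyring file, it can be handy to check which key belongs to which user before pushing the file. The following is a minimal sketch that reads the file directly. The function name keyring_key is made up for this example, and it assumes the simple layout shown above: a [entity] header followed by a key = ... line.

```shell
#!/bin/sh
# keyring_key FILE ENTITY
# Prints the key stored for ENTITY (for example client.ning) in keyring FILE.
# Returns non-zero if the entity has no key line in the file.
keyring_key() {
    awk -v entity="$2" '
        /^[ \t]*\[/ {                       # section header such as [client.ning]
            current = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", current)
            next
        }
        current == entity && $1 == "key" && $2 == "=" { print $3; found = 1 }
        END { exit !found }
    ' "$1"
}
```

For instance, keyring_key /etc/ceph/ceph.keyring client.joy should print the same key that ceph auth get-key client.joy reports on the cluster side, which gives a quick consistency check.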

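Author's note: the content of this article comes mainly from instructor Yan Wei (晏威) of Yutian Education (誉天教育); I carried out the experiments and verified the operations myself. If you would like to repost it, please contact Yutian Education (http://www.yutianedu.com/) for official consent, or obtain the consent of Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!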