This article assumes your cluster is already set up; if you haven't built one yet, please look up how to do that on your own first!

Before starting spark-shell, first start the Hive metastore and hiveserver2:

hive --service metastore & hiveserver2

Then start spark-shell:

spark-shell --master yarn --deploy-mode client

After startup you may see some warnings and an exception stack trace:

[root@master hadoop]# spark-shell --master yarn --deploy-mode client
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
18/06/04 09:46:55 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
18/06/04 09:47:00 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
18/06/04 09:47:35 WARN DFSClient: Caught exception
java.lang.InterruptedException
    at java.lang.Object.wait(Native Method)
    at java.lang.Thread.join(Thread.java:1252)
    at java.lang.Thread.join(Thread.java:1326)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:609)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:370)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:546)
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.metastore.hbase.aggregate.stats.false.positive.probability does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.llap.io.orc.time.counters does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.orc.splits.ms.footer.cache.ppd.enabled does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.server2.metrics.enabled does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.llap.am.liveness.connection.timeout.ms does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.server2.thrift.client.connect.retry.limit does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.llap.io.allocator.direct does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.llap.auto.enforce.stats does not exist
18/06/04 09:47:59 WARN HiveConf: HiveConf of name hive.llap.client.consistent.splits does not exist

These warnings do not affect our run.

scala> val rdd=sc.parallelize(1 to 100,5)
rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:24

scala> rdd.count
res0: Long = 100

scala>
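A few more checks you could paste into the same session are sketched below. This is only a sketch under some assumptions: it relies on the `sc` and `spark` variables that a Spark 2.x spark-shell creates for you, and the last line assumes spark-shell actually picked up your hive-site.xml (otherwise it will most likely just list a local `default` database).

```scala
// Paste into the running spark-shell session; `sc` and `spark` are the
// SparkContext and SparkSession that spark-shell creates (Spark 2.x).
val rdd = sc.parallelize(1 to 100, 5)

// The second argument to parallelize is the number of partitions.
val numPartitions = rdd.getNumPartitions   // 5

// Another simple action that runs as a job on the YARN executors.
val total = rdd.sum()                      // 5050.0

// Assumption: Hive support is enabled and the metastore is reachable,
// so this lists the databases registered in the Hive metastore.
spark.sql("show databases").show()
```

If the last statement shows the databases you created in Hive, spark-shell is talking to the metastore started earlier.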

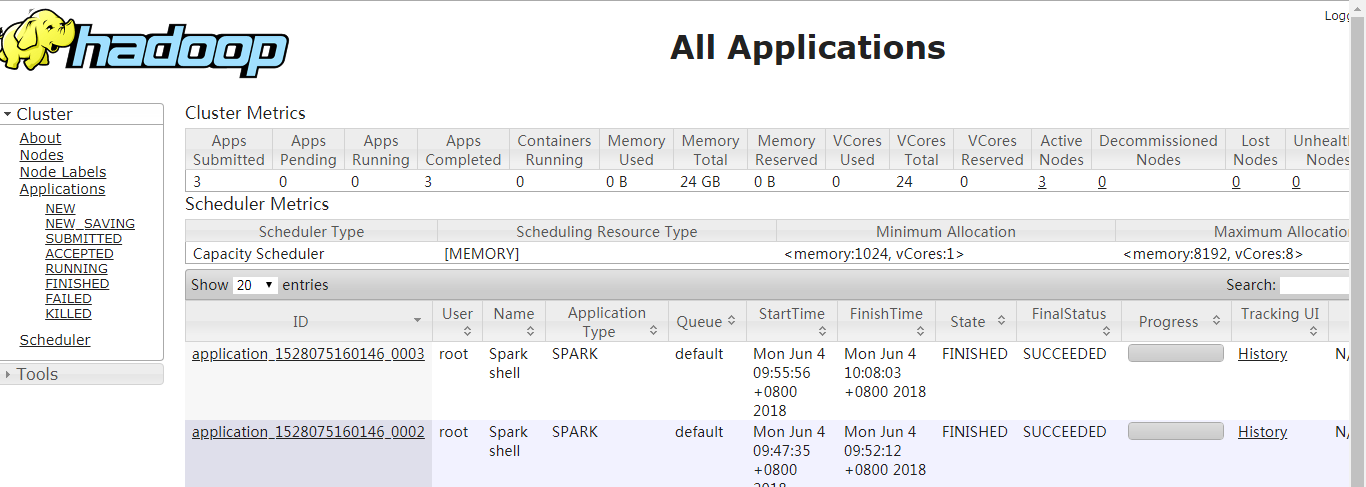

The Spark UI page