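I've only just started working with Hadoop and have built a simple application with it, mostly by following existing examples. Here is the code: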

Configure Spring to load the properties file:

applicationContext.xml

```xml
<bean id="propertyConfigurer" class="cn.edu.hbcf.vo.CustomizedPropertyConfigurer">
    <property name="locations">
        <list>
            <value>classpath:system.properties</value>
        </list>
    </property>
</bean>
```
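The post does not show `CustomizedPropertyConfigurer` itself. A common way to write such a class is to subclass Spring's `PropertyPlaceholderConfigurer`, override `processProperties(ConfigurableListableBeanFactory, Properties)`, and copy the resolved properties into a static map. Classes that Spring does not manage, such as `HDFSFileManager`, can then call the static `getContextProperty`. The sketch below is a minimal, JDK-only version of that static-holder part, assuming this is roughly what the real class does. The class name `ContextProperties` and its `load` method are hypothetical. In the Spring version, `load` would be called from the overridden `processProperties`. The example value reuses the `hdfs://master:9000` address that appears later in `HDFSFileManager`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical static holder mirroring what CustomizedPropertyConfigurer is assumed to do:
// keep a copy of the resolved properties so code outside Spring can look them up.
final class ContextProperties {
    private static volatile Map<String, String> props = Collections.emptyMap();

    private ContextProperties() {}

    // In a PropertyPlaceholderConfigurer subclass, this would be called from
    // processProperties(beanFactory, props) after super.processProperties(...).
    static void load(Properties source) {
        Map<String, String> copy = new HashMap<String, String>();
        for (String name : source.stringPropertyNames()) {
            copy.put(name, source.getProperty(name));
        }
        props = Collections.unmodifiableMap(copy);
    }

    // Same shape as the call in HDFSFileManager: getContextProperty("hdfs.URL").
    static Object getContextProperty(String name) {
        return props.get(name);
    }

    public static void main(String[] args) throws IOException {
        Properties p = new Properties();
        // Example value only; the real system.properties is not shown in the post.
        p.load(new StringReader("hdfs.URL=hdfs://master:9000"));
        load(p);
        System.out.println(getContextProperty("hdfs.URL"));
    }
}
```

Copying into an unmodifiable map gives later readers a stable snapshot, even though the map is written only once at startup.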

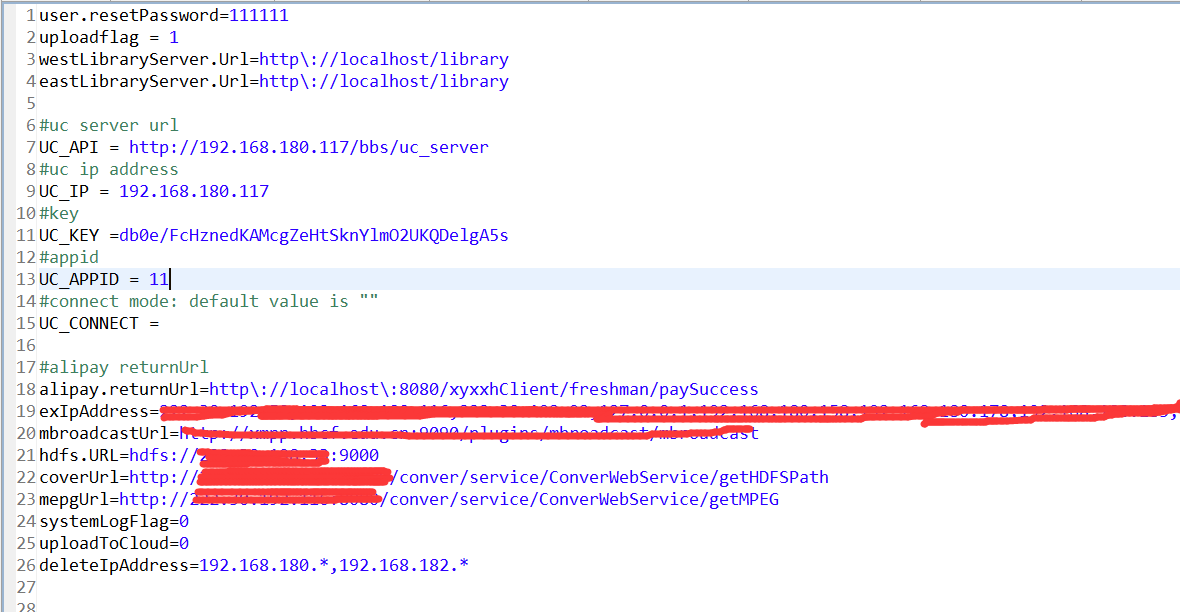

The configuration file is as follows:

system.properties:

The controller, DFSController, is as follows:

```java
package cn.edu.hbcf.cloud.hadoop.dfs.controller;

import java.io.BufferedInputStream;
import java.io.BufferedOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UnsupportedEncodingException;
import java.util.List;
import java.util.UUID;

import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.servlet.http.HttpSession;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.io.IOUtils;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Controller;
import org.springframework.ui.Model;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.ResponseBody;
import org.springframework.web.multipart.MultipartFile;

import cn.edu.hbcf.cloud.hadoop.dfs.service.DFSService;
import cn.edu.hbcf.cloud.hadoop.dfs.vo.DfsInfo;
import cn.edu.hbcf.common.constants.WebConstants;
import cn.edu.hbcf.common.hadoop.CFile;
import cn.edu.hbcf.common.hadoop.FileManager;
import cn.edu.hbcf.common.hadoop.FileSysFactory;
import cn.edu.hbcf.common.hadoop.FileSysType;
import cn.edu.hbcf.common.vo.Criteria;
import cn.edu.hbcf.common.vo.ExceptionReturn;
import cn.edu.hbcf.common.vo.ExtFormReturn;
import cn.edu.hbcf.plugin.oa.pojo.NoteBook;
import cn.edu.hbcf.privilege.pojo.BaseUsers;

@Controller
@RequestMapping("/dfs")
public class DFSController {

    @Autowired
    private DFSService dfsService;

    @RequestMapping(method = RequestMethod.GET)
    public String index(HttpServletRequest request, HttpSession session, Model model) {
        return "plugins/cloud/web/views/hadoop/dfs/dfs";
    }

    @RequestMapping(value = "/play", method = RequestMethod.GET)
    public String play(HttpServletRequest request, HttpSession session, Model model) {
        return "plugins/cloud/web/views/hadoop/dfs/play";
    }

    @RequestMapping("/upload")
    public void upload(@RequestParam MultipartFile file, HttpServletRequest request, HttpServletResponse response) {
        String uploadFileName = file.getOriginalFilename();
        FileManager fileMgr = FileSysFactory.getInstance(FileSysType.HDFS);
        String path = request.getParameter("path");
        HttpSession session = request.getSession(true);
        BaseUsers u = (BaseUsers) session.getAttribute(WebConstants.CURRENT_USER);
        // Get the file extension
        String fileType = StringUtils.substringAfterLast(uploadFileName, ".");
        // Store under a random name (UUID without '-') that keeps the original extension
        String saveName = UUID.randomUUID().toString().replace("-", "") + ("".equals(fileType) ? "" : "." + fileType);
        String filePath = "/filesharesystem/" + u.getAccount() + path;
        try {
            fileMgr.putFile(file.getInputStream(), filePath, saveName);
            System.out.println("path=" + filePath + " uploadFileName=" + uploadFileName);
            // ExtJS-style response object (built by hand; values are not escaped)
            StringBuffer buffer = new StringBuffer();
            buffer.append("{success:true,fileInfo:{fileName:'").append(uploadFileName).append("',");
            buffer.append("filePath:'").append(filePath + saveName).append("',");
            buffer.append("projectPath:'").append(filePath + saveName).append("',");
            buffer.append("storeName:'").append(saveName).append("',");
            buffer.append("fileSize:").append((float) file.getSize() / 1024);
            buffer.append("}}");
            response.setContentType("text/html;charset=utf-8;");
            response.getWriter().write(buffer.toString());
            response.getWriter().flush();
            response.getWriter().close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @RequestMapping(value = "/downloadFile", method = RequestMethod.GET)
    public void download(HttpServletRequest request, HttpServletResponse response, String filePath, String fileName) {
        //String fileName=request.getParameter("fileName");
        try {
            // Parameters arrive decoded as ISO-8859-1; recover the bytes and decode them as UTF-8
            fileName = new String(fileName.getBytes("ISO-8859-1"), "UTF-8");
            filePath = new String(filePath.getBytes("ISO-8859-1"), "UTF-8");
        } catch (UnsupportedEncodingException e1) {
            e1.printStackTrace();
        }
        OutputStream output = null;
        BufferedInputStream bis = null;
        BufferedOutputStream bos = null;
        FileManager fileMgr = FileSysFactory.getInstance(FileSysType.HDFS);
        //response.setContentType("application/x-msdownload");
        //response.setCharacterEncoding("UTF-8");
        try {
            output = response.getOutputStream();
            response.setHeader("Content-Disposition", "attachment;filename=" + new String(fileName.getBytes("gbk"), "iso-8859-1"));
            bis = new BufferedInputStream(fileMgr.getFile(filePath));
            bos = new BufferedOutputStream(output);
            byte[] buff = new byte[2048];
            int bytesRead;
            while (-1 != (bytesRead = bis.read(buff, 0, buff.length))) {
                bos.write(buff, 0, bytesRead);
            }
        } catch (UnsupportedEncodingException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            if (bis != null) {
                try {
                    bis.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            if (bos != null) {
                try {
                    bos.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    @RequestMapping("/delete")
    public void delete(String path, HttpServletResponse response) {
        FileManager fileMgr = FileSysFactory.getInstance(FileSysType.HDFS);
        try {
            fileMgr.deleteFile(path);
            StringBuffer buffer = new StringBuffer();
            buffer.append("{success:true}");
            response.setContentType("text/html;charset=utf-8;");
            response.getWriter().write(buffer.toString());
            response.getWriter().flush();
            response.getWriter().close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @RequestMapping(value = "/queryMovie", method = RequestMethod.GET)
    public void queryMovie(HttpServletRequest request, HttpServletResponse response) {
        response.setContentType("application/octet-stream");
        FileManager fileMgr = FileSysFactory.getInstance(FileSysType.HDFS);
        FSDataInputStream fsInput = fileMgr.getStreamFile("/filesharesystem/admin/test/a.swf");
        OutputStream os;
        try {
            os = response.getOutputStream();
            IOUtils.copyBytes(fsInput, os, 4090, false);
            os.flush();
            os.close();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            // Release the HDFS input stream (closeStream is null-safe)
            IOUtils.closeStream(fsInput);
        }
    }

    /**
     * Fetch SWF and image content from the cloud (HDFS) for the submitted path.
     */
    @RequestMapping(value = "/queryResource", method = RequestMethod.GET)
    public void queryResource(HttpServletRequest request, HttpServletResponse response, String resourcePath) {
        response.setContentType("application/octet-stream");
        FileManager fileMgr = FileSysFactory.getInstance(FileSysType.HDFS);
        FSDataInputStream fsInput = fileMgr.getStreamFile(resourcePath);
        OutputStream os = null;
        try {
            os = response.getOutputStream();
            IOUtils.copyBytes(fsInput, os, 4090, false);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (os != null) {
                    os.flush();
                    os.close();
                }
                if (fsInput != null) {
                    fsInput.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    @RequestMapping(value = "/queryListForTree", method = RequestMethod.POST)
    @ResponseBody
    public List<CFile> queryListForTree(HttpServletRequest request) {
        HttpSession session = request.getSession(true);
        BaseUsers u = (BaseUsers) session.getAttribute(WebConstants.CURRENT_USER);
        Criteria criteria = new Criteria();
        criteria.put("username", u.getAccount());
        return dfsService.queryListForTree(criteria);
    }

    @RequestMapping(value = "/status", method = RequestMethod.GET)
    public String dfsIndex(HttpServletRequest request, HttpSession session, Model model) {
        return "plugins/cloud/web/views/hadoop/dfs/dfsStatus";
    }

    @RequestMapping(value = "/status", method = RequestMethod.POST)
    @ResponseBody
    public Object get(HttpSession session) {
        try {
            DfsInfo dfs = dfsService.getDfsInfo();
            if (dfs != null) {
                return new ExtFormReturn(true, dfs);
            } else {
                return new ExtFormReturn(false);
            }
        } catch (Exception e) {
            e.printStackTrace();
            return new ExceptionReturn(e);
        }
    }

    @RequestMapping(value = "/dataNode", method = RequestMethod.POST)
    @ResponseBody
    public Object dataNode() {
        try {
            return dfsService.getDataNodeList();
        } catch (Exception e) {
            e.printStackTrace();
            return new ExceptionReturn(e);
        }
    }
}
```
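The download endpoint relies on two encoding tricks. First, it re-decodes `fileName` and `filePath`. Containers that decode query strings as ISO-8859-1 (typically the default `URIEncoding` on Tomcat versions before 8.0) turn UTF-8 parameters into mojibake. Because ISO-8859-1 maps every byte to one character, re-encoding the garbled string recovers the original bytes. Second, the `gbk` → `iso-8859-1` header trick only works when the browser interprets the header bytes as GBK. The JDK-only sketch below demonstrates the round trip. It also shows an alternative that the original does not use: an RFC 5987/6266 `filename*=UTF-8''…` header value. `DownloadEncoding` and its methods are hypothetical names.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helpers illustrating the encoding steps around /dfs/downloadFile.
final class DownloadEncoding {
    private DownloadEncoding() {}

    // What an ISO-8859-1-decoding container does to a UTF-8 query parameter (mojibake).
    static String decodedAsLatin1(String original) {
        return new String(original.getBytes(StandardCharsets.UTF_8), StandardCharsets.ISO_8859_1);
    }

    // The controller's repair: get the raw bytes back, then decode them as UTF-8.
    // Lossless because ISO-8859-1 maps every byte value 0-255 to exactly one char.
    static String repair(String garbled) {
        return new String(garbled.getBytes(StandardCharsets.ISO_8859_1), StandardCharsets.UTF_8);
    }

    // RFC 5987/6266 header value: percent-encoded UTF-8, not tied to GBK.
    static String contentDisposition(String fileName) throws UnsupportedEncodingException {
        String encoded = URLEncoder.encode(fileName, "UTF-8")
                .replace("+", "%20")  // URLEncoder emits '+' for spaces
                .replace("*", "%2A"); // '*' is not an RFC 5987 attr-char
        return "attachment; filename*=UTF-8''" + encoded;
    }

    public static void main(String[] args) throws UnsupportedEncodingException {
        String name = "\u62a5\u544a 1.txt"; // a Chinese file name containing a space
        String garbled = decodedAsLatin1(name);
        System.out.println(garbled.equals(name));         // false: parameter arrives garbled
        System.out.println(repair(garbled).equals(name)); // true: original restored
        System.out.println(contentDisposition(name));
    }
}
```

Very old browsers ignore `filename*`, so some projects send both `filename=` and `filename*=` in the same header.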

The main operations used are uploading, deleting, and querying files in the cloud (HDFS).
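For uploads, the stored name is a hyphen-free UUID plus the original extension. The response is an ExtJS-style object literal built by string concatenation. Because `uploadFileName` is appended without escaping, a file name containing a quote breaks the response. The JDK-only sketch below reproduces the naming rule without commons-lang. It also adds a JSON string escaper, which the original does not have. `UploadNames`, `saveName` and `jsonString` are hypothetical names.

```java
import java.util.UUID;

// Hypothetical helpers for the /dfs/upload response.
final class UploadNames {
    private UploadNames() {}

    // Same rule as the controller: UUID without '-' plus ".ext" when there is an extension.
    // Mirrors StringUtils.substringAfterLast(name, "."), which returns "" if there is no '.'.
    static String saveName(String originalName) {
        int dot = originalName.lastIndexOf('.');
        String ext = dot < 0 ? "" : originalName.substring(dot + 1);
        return UUID.randomUUID().toString().replace("-", "") + (ext.isEmpty() ? "" : "." + ext);
    }

    // Minimal JSON string literal: escapes quotes, backslashes and control characters.
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }

    public static void main(String[] args) {
        String stored = saveName("report.final.pdf"); // keeps only the last extension: ".pdf"
        System.out.println("{\"success\":true,\"fileInfo\":{\"fileName\":" + jsonString("it's \"x\".pdf")
                + ",\"storeName\":" + jsonString(stored) + "}}");
    }
}
```

Alternatively, returning a POJO from a `@ResponseBody` method lets Spring's JSON converter handle escaping.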

The Hadoop helper classes are as follows:

CFile.java

```java
package cn.edu.hbcf.common.hadoop;

import java.util.List;

// Node of the file tree sent to the front end (leaf: 1 = file, 0 = directory)
public class CFile {
    private String name;
    private long size;
    private int leaf;
    private String createTime;
    private List<CFile> children;
    private String fullPath;

    public String getFullPath() { return fullPath; }
    public void setFullPath(String fullPath) { this.fullPath = fullPath; }

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }

    public long getSize() { return size; }
    public void setSize(long size) { this.size = size; }

    public int getLeaf() { return leaf; }
    public void setLeaf(int leaf) { this.leaf = leaf; }

    public List<CFile> getChildren() { return children; }
    public void setChildren(List<CFile> children) { this.children = children; }

    public String getCreateTime() { return createTime; }
    public void setCreateTime(String createTime) { this.createTime = createTime; }
}
```

FileManager.java

```java
package cn.edu.hbcf.common.hadoop;

import java.io.DataInputStream;
import java.io.InputStream;
import java.util.List;

import org.apache.hadoop.fs.FSDataInputStream;

public interface FileManager {

    public void createDirForUser(String dirName);

    public boolean isDirExistForUser(String uname);

    public void deleteFile(String fileName);

    public void getFile(String userName, String remoteFileName, String localFileName);

    public void putFile(String directoryName, String remoteFileName, String localFileName);

    public List<CFile> listFileForUser(String uname, String filePrefix);

    public void putFile(InputStream input, String path, String remoteFileName);

    public DataInputStream getFile(String remoteFileName);

    public FSDataInputStream getStreamFile(String remoteFileName);

    public boolean isFileExist(String filePath);
}
```

FileSysFactory.java

```java
package cn.edu.hbcf.common.hadoop;

public class FileSysFactory {
    private static FileManager instanceApacheHDFS = null;
    private final static FileManager instanceNormalFileSys = null;

    // Lazily creates one shared HDFSFileManager; every call is synchronized
    public static synchronized FileManager getInstance(FileSysType fileSysType) {
        switch (fileSysType) {
            case HDFS:
                if (instanceApacheHDFS == null) {
                    instanceApacheHDFS = new HDFSFileManager();
                }
                return instanceApacheHDFS;
            case NormalFileSys:
                // not implemented: falls through and returns null
        }
        return null;
    }
}
```
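`FileSysFactory` takes a lock on every call and silently returns `null` for `NormalFileSys`. One alternative is the initialization-on-demand holder idiom. It creates the instance lazily and thread-safely without locking, and the factory can fail fast for types that have no implementation. The sketch below is runnable without Hadoop because it uses hypothetical stand-ins: the `Manager` interface, the `FakeHdfsManager` class, the `SysType` enum and `ManagerFactory`. In the project, these would correspond to `FileManager`, `HDFSFileManager`, `FileSysType` and `FileSysFactory`.

```java
// Stand-ins for the project's types (hypothetical, so the sketch runs without Hadoop).
interface Manager {
    String name();
}

final class FakeHdfsManager implements Manager {
    @Override
    public String name() {
        return "hdfs";
    }
}

enum SysType { HDFS, NormalFileSys }

final class ManagerFactory {
    private ManagerFactory() {}

    // The JVM initializes HdfsHolder (and INSTANCE) only on first access, and class
    // initialization is thread-safe, so getInstance needs no synchronized keyword.
    private static final class HdfsHolder {
        static final Manager INSTANCE = new FakeHdfsManager();
    }

    static Manager getInstance(SysType type) {
        switch (type) {
            case HDFS:
                return HdfsHolder.INSTANCE;
            default:
                // Fail loudly instead of returning null for types without an implementation.
                throw new UnsupportedOperationException("No file manager for " + type);
        }
    }

    public static void main(String[] args) {
        System.out.println(getInstance(SysType.HDFS).name());
    }
}
```

With this design, callers get an immediate, descriptive exception instead of a later `NullPointerException`.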

FileSysType.java

```java
package cn.edu.hbcf.common.hadoop;

public enum FileSysType {
    HDFS, NormalFileSys
}
```

HDFSFileManager.java

```java
package cn.edu.hbcf.common.hadoop;

import java.io.BufferedInputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import cn.edu.hbcf.common.springmvc.CustomizedPropertyConfigurer;

public class HDFSFileManager implements FileManager {

    private final static String root_path = "/filesharesystem/";
    private static FileSystem fs = null;

    public HDFSFileManager() {
        if (fs == null) {
            try {
                Configuration conf = new Configuration(true);
                // NameNode address from system.properties
                String url = (String) CustomizedPropertyConfigurer.getContextProperty("hdfs.URL");
                //conf.set("fs.default.name", url);
                conf.set("fs.defaultFS", url);
                fs = FileSystem.get(conf);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public void createDirForUser(String dirName) {
        try {
            Path f = new Path(root_path);
            fs.mkdirs(new Path(f, dirName));
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void deleteFile(String fileName) {
        try {
            Path f = new Path(fileName);
            // Recursive delete; same behavior as the deprecated fs.delete(Path)
            fs.delete(f, true);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void getFile(String userName, String remoteFileName, String localFileName) {
        // not implemented
    }

    public DataInputStream getFile(String remoteFileName) {
        try {
            return fs.open(new Path(remoteFileName));
        } catch (IOException e) {
            e.printStackTrace();
        }
        return null;
    }

    public FSDataInputStream getStreamFile(String remoteFileName) {
        try {
            return fs.open(new Path(remoteFileName));
        } catch (IOException e) {
            e.printStackTrace();
        }
        return null;
    }

    public boolean isDirExistForUser(String uname) {
        Path f = new Path(root_path + uname);
        try {
            return fs.exists(f);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return false;
    }

    // Recursively lists a user's directory as a CFile tree
    public List<CFile> listFileForUser(String uname, String filePrefix) {
        List<CFile> list = new ArrayList<CFile>();
        String fp = root_path + uname + "/" + filePrefix;
        Path path = new Path(fp);
        try {
            FileStatus[] stats = fs.listStatus(path);
            java.text.Format formatter = new java.text.SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            for (int i = 0; i < stats.length; i++) {
                CFile file = new CFile();
                int len = stats[i].getPath().toString().lastIndexOf("/");
                String fileName = stats[i].getPath().toString().substring(len + 1);
                String createTime = formatter.format(stats[i].getModificationTime());
                // Path without scheme and NameNode host:port, whatever hdfs.URL is set to
                String fullPath = stats[i].getPath().toUri().getPath();
                if (stats[i].isDirectory()) {
                    file.setLeaf(0);
                    file.setSize(stats[i].getBlockSize());
                    file.setName(fileName);
                    file.setCreateTime(createTime);
                    String newpath = filePrefix + "/" + fileName;
                    file.setChildren(listFileForUser(uname, newpath));
                    file.setFullPath(fullPath);
                    list.add(file);
                } else {
                    file.setLeaf(1);
                    file.setName(fileName);
                    file.setSize(stats[i].getLen());
                    file.setCreateTime(createTime);
                    file.setFullPath(fullPath);
                    list.add(file);
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return list;
    }

    public void putFile(String directoryName, String remoteFileName, String localFileName) {
        // not implemented
    }

    // Streams the uploaded content into a new HDFS file at path/remoteFileName
    public void putFile(InputStream input, String path, String remoteFileName) {
        final int taskSize = 1024;
        BufferedInputStream istream = null;
        DataOutputStream ostream = null;
        istream = new BufferedInputStream(input);
        try {
            ostream = fs.create(new Path(path + "/" + remoteFileName));
            int bytes;
            byte[] buffer = new byte[taskSize];
            while ((bytes = istream.read(buffer)) != -1) {
                ostream.write(buffer, 0, bytes);
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (istream != null) istream.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
            try {
                if (ostream != null) ostream.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    @Override
    public boolean isFileExist(String filePath) {
        Path f = new Path(filePath);
        try {
            return fs.exists(f);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return false;
    }
}
```
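Two parts of `HDFSFileManager` can be shown in isolation with plain JDK types. First, a Hadoop `Path` exposes `toUri()`. Taking its `getPath()` strips the scheme and NameNode address for any `hdfs.URL`, so no host such as `master:9000` needs to be hard-coded. Second, the hand-written read/write loops in `putFile` and `download` can use Java 7 try-with-resources, which closes both streams without nested `finally` blocks. The sketch below uses `java.net.URI` and in-memory streams in place of HDFS. `HdfsHelpers` is a hypothetical class name.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

final class HdfsHelpers {
    private HdfsHelpers() {}

    // "hdfs://master:9000/filesharesystem/admin/a.txt" -> "/filesharesystem/admin/a.txt",
    // whatever the host and port are. URI.create expects an already-escaped string;
    // with Hadoop, stat.getPath().toUri().getPath() handles escaping for you.
    static String pathOnly(String fullUri) {
        return URI.create(fullUri).getPath();
    }

    // Copies everything and closes both streams, even if read or write throws.
    static long copy(InputStream in, OutputStream out) throws IOException {
        try (InputStream src = in; OutputStream dst = out) {
            byte[] buffer = new byte[4096];
            long total = 0;
            int n;
            while ((n = src.read(buffer)) != -1) { // -1 signals end of stream
                dst.write(buffer, 0, n);
                total += n;
            }
            return total;
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(pathOnly("hdfs://master:9000/filesharesystem/admin/test/a.swf"));
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(new byte[10000]), out);
        System.out.println(copied + " bytes copied");
    }
}
```

In `putFile`, `copy(input, fs.create(...))` would replace the loop and both `finally` blocks.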

The jar packages used (Maven dependencies):

```xml
<dependency>
    <groupId>org.apache.solr</groupId>
    <artifactId>solr-solrj</artifactId>
    <version>4.10.3</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>2.4.0</version>
    <exclusions>
        <exclusion>
            <artifactId>jasper-runtime</artifactId>
            <groupId>tomcat</groupId>
        </exclusion>
        <exclusion>
            <artifactId>jasper-compiler</artifactId>
            <groupId>tomcat</groupId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <!-- <version>2.0.0-cdh4.4.0</version> -->
    <version>2.2.0</version>
    <exclusions>
        <exclusion>
            <artifactId>jdk.tools</artifactId>
            <groupId>jdk.tools</groupId>
        </exclusion>
    </exclusions>
</dependency>
```
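In the list above, `hadoop-common` is declared at 2.4.0 and `hadoop-hdfs` at 2.2.0. Mixing Hadoop module versions on one classpath can fail at runtime, for example with `NoSuchMethodError` or `ClassNotFoundException`. One way to keep the modules aligned is a single Maven property, as in the fragment below. The value 2.2.0 is an assumption, chosen to match the `hadoop-common-2.2.0-bin-master` binaries used for `HADOOP_HOME`; the version should match the cluster. The exclusions from the list above still apply and are omitted here for brevity.

```xml
<properties>
    <!-- Assumed version; should match the cluster and the Windows binaries bundle. -->
    <hadoop.version>2.2.0</hadoop.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
</dependencies>
```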

Finally, configure an environment variable:

`HADOOP_HOME`

`C:\Users\Promise\Desktop\hadoop-common-2.2.0-bin-master`
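On Windows, Hadoop 2.x's `org.apache.hadoop.util.Shell` looks for `bin\winutils.exe` under a Hadoop home directory. It reads that directory from the JVM system property `hadoop.home.dir` first and falls back to the `HADOOP_HOME` environment variable. Setting the property in code, before the first `FileSystem.get(...)`, is therefore an alternative to the environment variable. The sketch below only mirrors that lookup order with JDK calls. `HadoopHome`, `resolveHadoopHome` and `winutils` are hypothetical helpers, not part of Hadoop.

```java
import java.io.File;

final class HadoopHome {
    private HadoopHome() {}

    // Same precedence as Hadoop's Shell: -Dhadoop.home.dir first, then HADOOP_HOME.
    static String resolveHadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null) {
            home = System.getenv("HADOOP_HOME");
        }
        return home;
    }

    // Where Hadoop expects the Windows helper binary under that home directory.
    static File winutils(String home) {
        return new File(new File(home, "bin"), "winutils.exe");
    }

    public static void main(String[] args) {
        // Alternative to the environment variable; must run before any FileSystem.get(...).
        System.setProperty("hadoop.home.dir", "C:\\Users\\Promise\\Desktop\\hadoop-common-2.2.0-bin-master");
        String home = resolveHadoopHome();
        System.out.println(home + " -> winutils present: " + winutils(home).isFile());
    }
}
```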
