    # coding: utf-8
    # author: xiaomozi
    # date: 2018.4.19
    # Scrape job listings from Zhaopin (智联招聘)

    import random
    import time
    import urllib.parse
    import urllib.request

    from lxml import etree


    def downloader(kw, pages):
        '''Downloader.
        :param kw: search keyword
        :param pages: page numbers to fetch (any iterable)
        :return: generator yielding the raw HTML of each results page
        '''
        for page in pages:
            print("page {} is downloading".format(page))
            # jl=%E6%B7%B1%E5%9C%B3 is the URL-encoded city name 深圳 (Shenzhen)
            infourl = 'https://sou.zhaopin.com/jobs/searchresult.ashx?jl=%E6%B7%B1%E5%9C%B3&kw={}&sm=0&p={}'.format(urllib.parse.quote(kw), page)
            # Random pause between requests to avoid hammering the server
            time.sleep(random.uniform(0.5, 2.1))
            # Python 3: urlopen lives in urllib.request (urllib.urlopen is Python 2 only)
            info = urllib.request.urlopen(infourl).read()
            yield info


    def extractor(html):
        '''
        Extract job posting fields from a results page.
        :param html: HTML string
        :return: generator of dicts, one per posting
        '''
        et = etree.HTML(html)
        tablerows = et.xpath('//div[@class="newlist_list_content"]/table[@class="newlist"]/tr[1]')
        for tr in tablerows:
            # Build a fresh dict per row; reusing one dict would make
            # every yielded item refer to the same object
            item = {}
            # Relative XPath ('.//') keeps each query inside the current row
            item['job'] = tr.xpath('.//td[@class="zwmc"]//a[1]/text()')
            item['com_name'] = tr.xpath('.//td[@class="gsmc"]/a[1]/text()')
            item['salary'] = tr.xpath('.//td[@class="zwyx"]/text()')
            item['address'] = tr.xpath('.//td[@class="gzdd"]/text()')
            yield item


    def saveInfo(items):
        '''
        Save locally or print to the console (this version only prints).
        :param items: job posting info, as a generator
        :return: processing status
        '''
        for i in items:
            print(i)
        return 'finished'


    # Run
    infohtmls = downloader(kw='GIS', pages=range(1, 5))
    for html in infohtmls:
        myitems = extractor(html)
        saveInfo(myitems)
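
The `saveInfo` docstring mentions saving locally, but the script only prints. As a rough sketch of what the local-save half could look like, the snippet below writes the items to a CSV file with the standard `csv` module. The field names come from the script above; `FIELDS`, `flatten`, `save_items_csv` and the file name `jobs.csv` are hypothetical names made up for this example, not part of the original script.

```python
import csv

# Keys produced by extractor() in the script above
FIELDS = ["job", "com_name", "salary", "address"]


def flatten(item):
    """Join each XPath result list into one whitespace-trimmed string."""
    return {
        key: " ".join(text.strip() for text in item.get(key, []))
        for key in FIELDS
    }


def save_items_csv(items, path):
    """Write the items to a CSV file and return the number of rows written.

    Hypothetical helper: each call overwrites the file at `path`.
    """
    count = 0
    # utf-8-sig writes a BOM, which helps spreadsheet tools detect UTF-8
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        for item in items:
            writer.writerow(flatten(item))
            count += 1
    return count
```

Something like `save_items_csv(extractor(html), "jobs.csv")` would then replace the `saveInfo(myitems)` call. Because the file is opened in write mode, saving several pages into one file would need the per-page generators chained together first (for example with `itertools.chain`).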

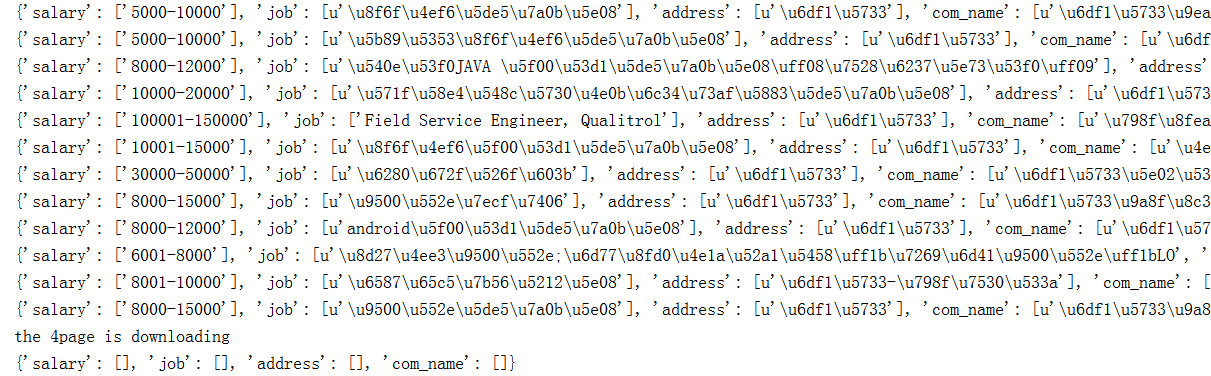

Screenshot of the run: it scraped a full 3 pages.

All rights reserved. Comments and suggestions are welcome >_*