windows环境下编写hadoop程序

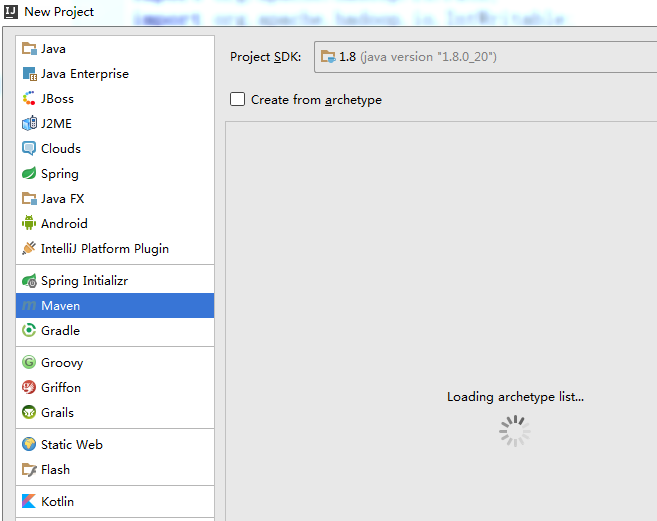

- 新建:File->new->Project->Maven->next

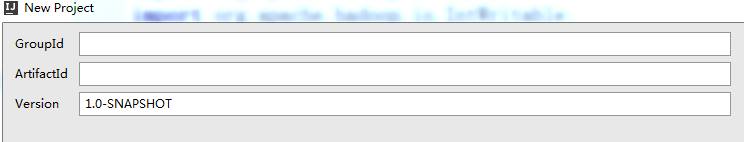

GroupId 和ArtifactId 随便写(还是建议规范点)->finfsh

会生成pom.xml,文件内容如下

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.hadoopbook</groupId>

<artifactId>hadoop-demo</artifactId>

<packaging>jar</packaging>

<dependencies>

<dependency>

<groupId>commons-beanutils</groupId>

<artifactId>commons-beanutils</artifactId>

<version>1.9.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>2.7.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.7.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>

- 可以网上找个wordCount(单词计数)源码进行测试,复制进去会发现以下的那些包都是报红,因为许多类都是无法识别的。

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

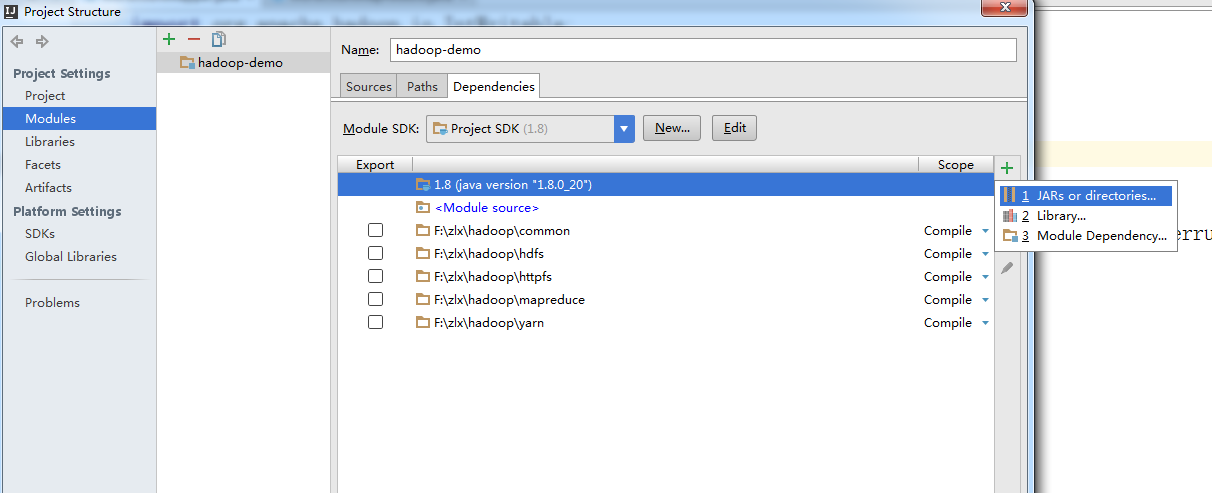

接下来打开File->project Structure->Modules->右侧+->JARs or directories

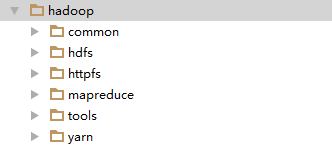

将你hadoop集群里面下载的jar包全部导入进去

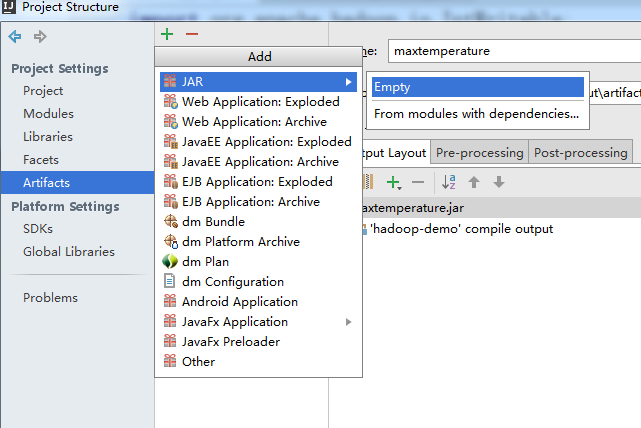

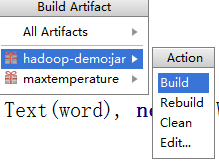

点击左侧Arifacts ->+->JAR->empty

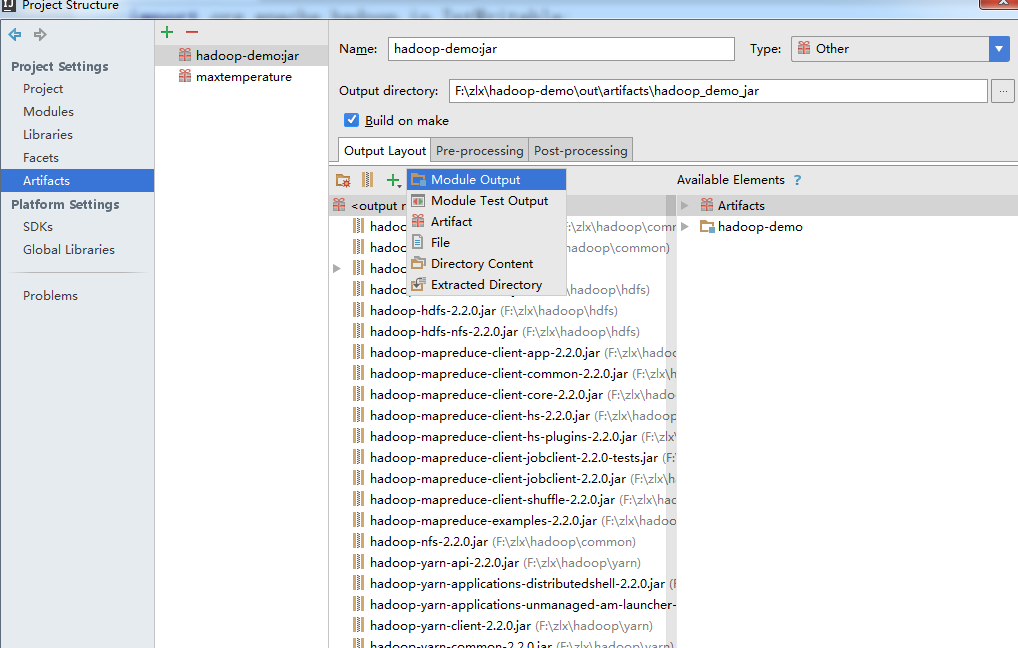

点击output layout下方的+,选择module output,然后勾选我们的项目,点击确定,这时报错的信息就没有了

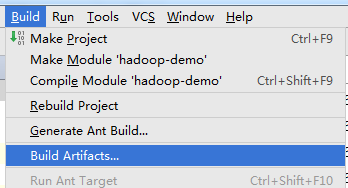

idea打包成jar

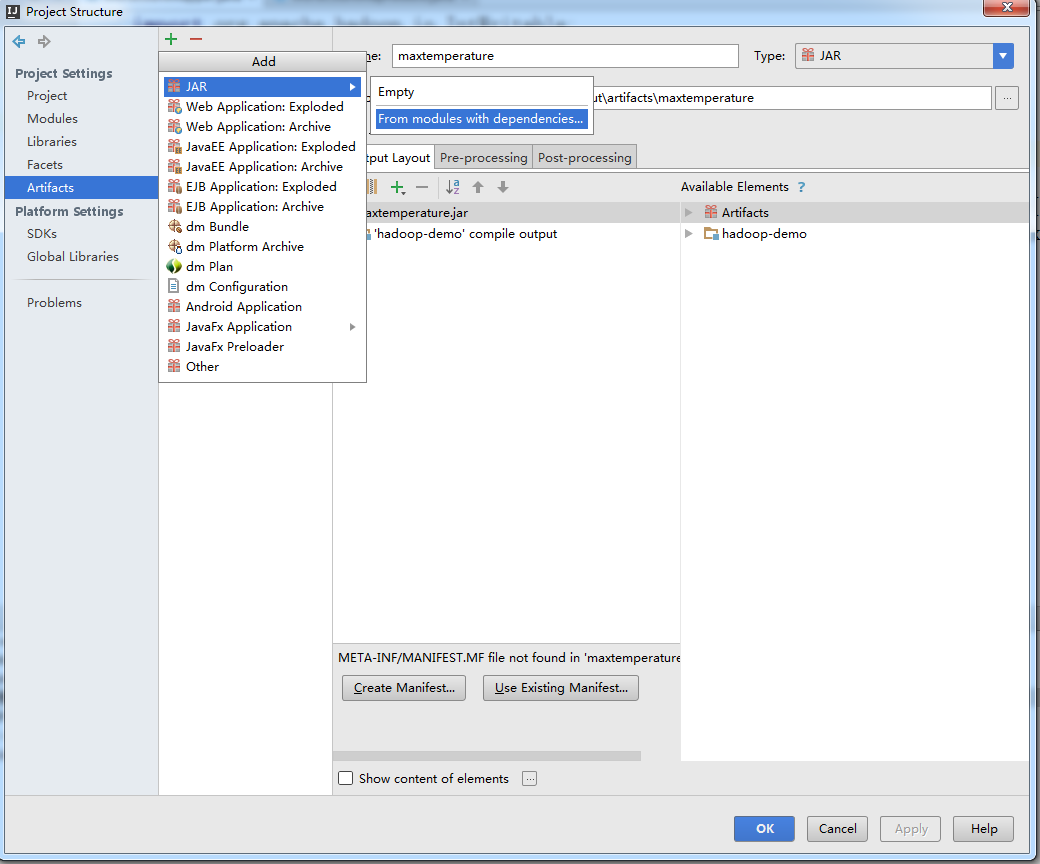

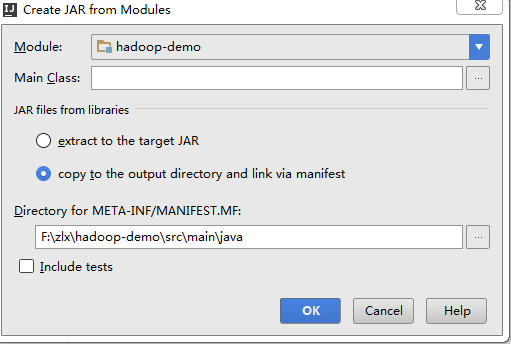

- File->project Structure->点击左侧Arifacts->+->JAR->From modules with dependenciestu,Build on make打上勾

MAain.class为程序的主方法,相当于程序的入口

JAR files from libraries选第二个,选定输出路径->ok

- Build->Build Arifacts->项目的jar->build->到输出路径查看即可。

上传jar包到hadoop集群并运行

- 利用远程工具将生成的.jar上传到hadoop主节点的目录下(/app/hadoop/hadoop-2.2.0)目录根据自己的情况而定

- 创建input目录

hadoop fs -mkdir -p /usr/hadoop/input

- 复制本地文件到hdfs文件系统

hadoop fs -put test.txt /usr/hadoop/input

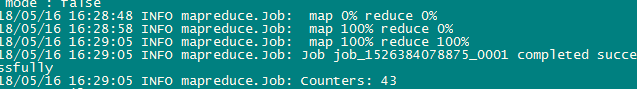

- 现在.jar有了,输入文件有了,执行.jar。切记不要自己手动提前新建输出文件

hadoop jar hadoop_demo_jar/hadoop-demo.jar workCount /usr/hadoop/input /usr/hadoop/output

- 执行成功

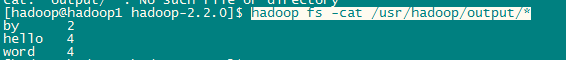

- 查看输出结果

hadoop fs -cat /usr/hadoop/output/*

HDFS常用命令可以参考这篇博客写得不错:https://blog.csdn.net/sunshingheavy/article/details/53227581