webmagic is a good and very simple crawler framework. Its tutorial is at http://my.oschina.net/flashsword/blog/180623

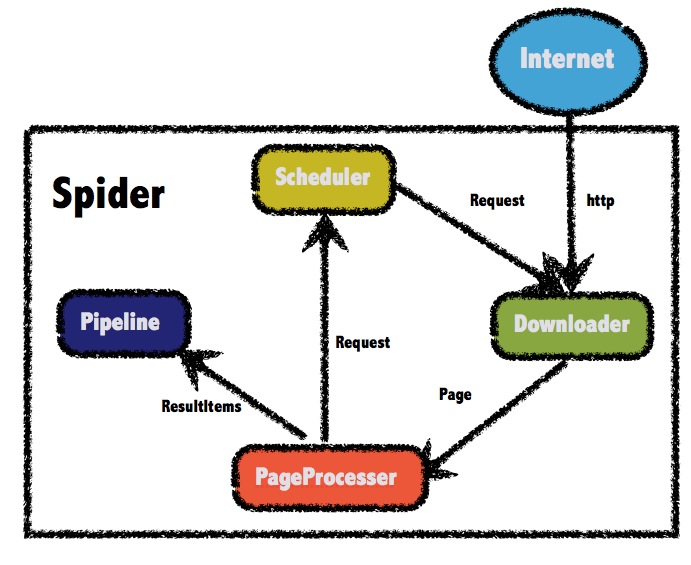

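webmagic borrows scrapy's module layout and is split into Spider (the scheduling framework for the whole crawler), Downloader (page download), PageProcessor (link extraction and page analysis), Scheduler (URL management), and Pipeline (offline analysis and persistence). The difference is that scrapy is extended through middleware, while webmagic defines these parts as interfaces and is extended by injecting different implementations into the main framework class, Spider.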
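To make the "inject implementations into the main class" idea concrete, here is a toy model of that split. It is only a sketch: `MiniSpider`, `MiniScheduler`, and `MemoryScheduler` are names made up for this post, not webmagic's real interfaces, and the "download" step is faked with plain functions.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;
import java.util.function.Consumer;
import java.util.function.Function;

/** Toy model of the component split; these are NOT webmagic's real interfaces. */
class MiniSpider {
    /** Hypothetical stand-in for the Scheduler role (URL management). */
    interface MiniScheduler {
        void push(String url);
        String poll();
    }

    /** In-memory scheduler that silently drops URLs it has already seen. */
    static class MemoryScheduler implements MiniScheduler {
        private final Set<String> seen = new HashSet<>();
        private final Queue<String> queue = new ArrayDeque<>();

        public void push(String url) {
            if (seen.add(url)) {
                queue.add(url);
            }
        }

        public String poll() {
            return queue.poll();
        }
    }

    private final Function<String, String> downloader;          // Downloader role: url -> page body
    private final Function<String, List<String>> linkExtractor; // PageProcessor role: body -> links
    private final Consumer<String> pipeline;                    // Pipeline role: receives each body
    private final MiniScheduler scheduler;                      // Scheduler role

    MiniSpider(Function<String, String> downloader,
               Function<String, List<String>> linkExtractor,
               Consumer<String> pipeline,
               MiniScheduler scheduler) {
        this.downloader = downloader;
        this.linkExtractor = linkExtractor;
        this.pipeline = pipeline;
        this.scheduler = scheduler;
    }

    /** Crawls from startUrl until the scheduler has nothing left. */
    void run(String startUrl) {
        scheduler.push(startUrl);
        String url;
        while ((url = scheduler.poll()) != null) {
            String body = downloader.apply(url);
            pipeline.accept(body);
            for (String link : linkExtractor.apply(body)) {
                scheduler.push(link);
            }
        }
    }

    public static void main(String[] args) {
        // Fake "site": each page body is just its own URL, and links come from this map
        Map<String, List<String>> site = new HashMap<>();
        site.put("a", Arrays.asList("b", "c"));
        site.put("b", Arrays.asList("a", "c"));
        List<String> stored = new ArrayList<>();
        new MiniSpider(url -> url,
                body -> site.getOrDefault(body, Collections.emptyList()),
                stored::add,
                new MemoryScheduler()).run("a");
        System.out.println(stored); // prints [a, b, c]
    }
}
```

Swapping `MemoryScheduler` for another `MiniScheduler` changes how URLs are managed without touching the rest of the loop, which is the same kind of extension point the Scheduler provides in webmagic.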

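The most basic job of the Scheduler (URL management) is to mark URLs that have already been crawled.

The scheduler currently has three implementations:

1) In-memory queue

2) File queue

3) redis queue

The file queue saves URLs to disk, so after an interruption the crawl can resume where it stopped and fetch only what is new (incremental crawling).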
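The resume behaviour of a file queue can be sketched with nothing but the JDK. This is a simplified illustration, not webmagic's actual code: `FileQueueSketch` and the file names `urls.txt` and `cursor.txt` are assumptions made for this example. Every pushed URL is appended to one file, and a second file stores how many URLs have already been polled.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayDeque;
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Queue;
import java.util.Set;

/** Hypothetical, simplified file-backed URL queue; not webmagic's actual class. */
class FileQueueSketch {
    private final Path urlFile;    // every URL ever pushed, one per line
    private final Path cursorFile; // number of URLs already polled
    private final Set<String> seen = new LinkedHashSet<>();
    private final Queue<String> pending = new ArrayDeque<>();
    private int cursor;

    FileQueueSketch(Path dir) throws IOException {
        Files.createDirectories(dir);
        urlFile = dir.resolve("urls.txt");
        cursorFile = dir.resolve("cursor.txt");
        if (Files.exists(cursorFile)) {
            String text = new String(Files.readAllBytes(cursorFile), StandardCharsets.UTF_8);
            cursor = Integer.parseInt(text.trim());
        }
        if (Files.exists(urlFile)) {
            List<String> lines = Files.readAllLines(urlFile, StandardCharsets.UTF_8);
            for (int i = 0; i < lines.size(); i++) {
                seen.add(lines.get(i));
                if (i >= cursor) {
                    pending.add(lines.get(i)); // recorded but not polled before the restart
                }
            }
        }
    }

    /** Records the URL and queues it; returns false when it was already recorded. */
    boolean push(String url) throws IOException {
        if (!seen.add(url)) {
            return false;
        }
        pending.add(url);
        Files.write(urlFile, Collections.singletonList(url), StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        return true;
    }

    /** Returns the next pending URL, or null when nothing is pending. */
    String poll() throws IOException {
        String url = pending.poll();
        if (url != null) {
            cursor++;
            Files.write(cursorFile, Integer.toString(cursor).getBytes(StandardCharsets.UTF_8));
        }
        return url;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("file-queue-sketch");
        FileQueueSketch first = new FileQueueSketch(dir);
        first.push("http://example.com/a");
        first.push("http://example.com/b");
        System.out.println(first.poll()); // prints http://example.com/a

        // Simulate a restart by opening the same directory again
        FileQueueSketch second = new FileQueueSketch(dir);
        System.out.println(second.push("http://example.com/a")); // prints false
        System.out.println(second.poll()); // prints http://example.com/b
    }
}
```

After the simulated restart the queue continues with the URL that was pushed but never polled, and a URL recorded in the first run is rejected as a duplicate.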

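Suppose I only have a single entry-page URL, for example http://www.cndzys.com/yundong/. If you use webmagic's FileCacheQueueScheduler directly, you will find that the second run crawls essentially nothing, because FileCacheQueueScheduler has already recorded http://www.cndzys.com/yundong/ and will not fetch it again. Even in a second, incremental run, some URLs still have to be crawled again to get the results we want. We can do that by rewriting the duplicate check in FileCacheQueueScheduler.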
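Before the full class, here is the core of the idea on its own, using only the JDK. It is a sketch under assumptions: `RecrawlAwareDeduplicator` is a made-up name, and `article-1.html` is a hypothetical article URL used only for the demonstration.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.regex.Pattern;

/** Hypothetical stand-alone version of the duplicate check; names are illustrative. */
class RecrawlAwareDeduplicator {
    private final Set<String> seen = new HashSet<>();
    private final Pattern recrawl; // null means "never force a re-crawl"

    RecrawlAwareDeduplicator(Set<String> previouslySeen, String recrawlRegex) {
        seen.addAll(previouslySeen);
        recrawl = recrawlRegex == null ? null : Pattern.compile(recrawlRegex);
    }

    /** A URL is a duplicate if it was seen before, unless it matches the re-crawl regex. */
    boolean isDuplicate(String url) {
        boolean alreadySeen = !seen.add(url);
        if (recrawl != null && recrawl.matcher(url).matches()) {
            return false; // list/entry pages are always fetched again
        }
        return alreadySeen;
    }

    public static void main(String[] args) {
        String entry = "http://www.cndzys.com/yundong/";
        String article = "http://www.cndzys.com/yundong/article-1.html"; // hypothetical URL
        Set<String> firstRun = new HashSet<>(Arrays.asList(entry, article));

        // Plain deduplication: the entry page is rejected, so the second run finds nothing
        RecrawlAwareDeduplicator plain = new RecrawlAwareDeduplicator(firstRun, null);
        System.out.println(plain.isDuplicate(entry)); // prints true

        // With the regex, the entry page is let through but old articles are still skipped
        RecrawlAwareDeduplicator aware =
                new RecrawlAwareDeduplicator(firstRun, Pattern.quote(entry));
        System.out.println(aware.isDuplicate(entry));   // prints false
        System.out.println(aware.isDuplicate(article)); // prints true
    }
}
```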

package com.fortunedr.crawler.expertadvice;

import java.io.BufferedReader;

import java.io.Closeable;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileReader;

import java.io.FileWriter;

import java.io.IOException;

import java.io.PrintWriter;

import java.util.LinkedHashSet;

import java.util.Set;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.Executors;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicBoolean;

import java.util.concurrent.atomic.AtomicInteger;

import org.apache.commons.io.IOUtils;

import org.apache.commons.lang3.math.NumberUtils;

import us.codecraft.webmagic.Request;

import us.codecraft.webmagic.Task;

import us.codecraft.webmagic.scheduler.DuplicateRemovedScheduler;

import us.codecraft.webmagic.scheduler.MonitorableScheduler;

import us.codecraft.webmagic.scheduler.component.DuplicateRemover;

/**

* Store urls and cursor in files so that a Spider can resume the status when shutdown.<br>

* Adds a regular-expression check to deduplication so that URLs which need to be crawled again are let through.

* @author code4crafter@gmail.com <br>

* @since 0.2.0

*/

public class SpikeFileCacheQueueScheduler extends DuplicateRemovedScheduler implements MonitorableScheduler,Closeable {

private String filePath = System.getProperty("java.io.tmpdir");

private String fileUrlAllName = ".urls.txt";

private Task task;

private String fileCursor = ".cursor.txt";

private PrintWriter fileUrlWriter;

private PrintWriter fileCursorWriter;

private AtomicInteger cursor = new AtomicInteger();

private AtomicBoolean inited = new AtomicBoolean(false);

private BlockingQueue<Request> queue;

private Set<String> urls;

private ScheduledExecutorService flushThreadPool;

private String regx;

public SpikeFileCacheQueueScheduler(String filePath) {

if (!filePath.endsWith("/") && !filePath.endsWith("\\")) {

filePath += "/";

}

this.filePath = filePath;

initDuplicateRemover();

}

private void flush() {

fileUrlWriter.flush();

fileCursorWriter.flush();

}

private void init(Task task) {

this.task = task;

File file = new File(filePath);

if (!file.exists()) {

file.mkdirs();

}

readFile();

initWriter();

initFlushThread();

inited.set(true);

logger.info("init cache scheduler success");

}

private void initDuplicateRemover() {

setDuplicateRemover(

new DuplicateRemover() {

@Override

public boolean isDuplicate(Request request, Task task) {

if (!inited.get()) {

init(task);

}

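String url = request.getUrl();

// Original check: the URL is a duplicate if it was already in the set

boolean temp = !urls.add(url);

// Second check: a URL matching the re-crawl regex is never treated as a duplicate,

// so it can be crawled again. Skipped when no regex has been set.

if (regx != null && url.matches(regx)) {

temp = false;

}

return temp;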

}

@Override

public void resetDuplicateCheck(Task task) {

urls.clear();

}

@Override

public int getTotalRequestsCount(Task task) {

return urls.size();

}

});

}

private void initFlushThread() {

flushThreadPool = Executors.newScheduledThreadPool(1);

flushThreadPool.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

flush();

}

}, 10, 10, TimeUnit.SECONDS);

}

private void initWriter() {

try {

fileUrlWriter = new PrintWriter(new FileWriter(getFileName(fileUrlAllName), true));

fileCursorWriter = new PrintWriter(new FileWriter(getFileName(fileCursor), false));

} catch (IOException e) {

throw new RuntimeException("init cache scheduler error", e);

}

}

private void readFile() {

try {

queue = new LinkedBlockingQueue<Request>();

urls = new LinkedHashSet<String>();

readCursorFile();

readUrlFile();

// initDuplicateRemover();

} catch (FileNotFoundException e) {

//init

logger.info("init cache file " + getFileName(fileUrlAllName));

} catch (IOException e) {

logger.error("init file error", e);

}

}

private void readUrlFile() throws IOException {

String line;

BufferedReader fileUrlReader = null;

try {

fileUrlReader = new BufferedReader(new FileReader(getFileName(fileUrlAllName)));

int lineReaded = 0;

while ((line = fileUrlReader.readLine()) != null) {

urls.add(line.trim());

lineReaded++;

if (lineReaded > cursor.get()) {

queue.add(new Request(line));

}

}

} finally {

if (fileUrlReader != null) {

IOUtils.closeQuietly(fileUrlReader);

}

}

}

private void readCursorFile() throws IOException {

BufferedReader fileCursorReader = null;

try {

fileCursorReader = new BufferedReader(new FileReader(getFileName(fileCursor)));

String line;

//read the last number

while ((line = fileCursorReader.readLine()) != null) {

cursor = new AtomicInteger(NumberUtils.toInt(line));

}

} finally {

if (fileCursorReader != null) {

IOUtils.closeQuietly(fileCursorReader);

}

}

}

public void close() throws IOException {

flushThreadPool.shutdown();

fileUrlWriter.close();

fileCursorWriter.close();

}

private String getFileName(String filename) {

return filePath + task.getUUID() + filename;

}

@Override

protected void pushWhenNoDuplicate(Request request, Task task) {

queue.add(request);

fileUrlWriter.println(request.getUrl());

}

@Override

public synchronized Request poll(Task task) {

if (!inited.get()) {

init(task);

}

fileCursorWriter.println(cursor.incrementAndGet());

return queue.poll();

}

@Override

public int getLeftRequestsCount(Task task) {

return queue.size();

}

@Override

public int getTotalRequestsCount(Task task) {

return getDuplicateRemover().getTotalRequestsCount(task);

}

public String getRegx() {

return regx;

}

/**

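* Sets the regular expression for URLs that should always be crawled again.

* @param regx regular expression that must match the whole URL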

*/

public void setRegx(String regx) {

this.regx = regx;

}

}

Then, when setting up the crawler, just use this custom FileCacheQueueScheduler:

spider.addRequest(requests);

SpikeFileCacheQueueScheduler file=new SpikeFileCacheQueueScheduler(filePath);

file.setRegx(regx); // e.g. http://www.cndzys.com/yundong/(index)?[0-9]*(.html)?

spider.setScheduler(file);

This gives us incremental crawling.
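Because the scheduler calls `String.matches`, the expression has to match the whole URL, not just part of it. The check below tries the example expression against a few URLs. It is only a sketch: `ListPageRegexCheck` is a made-up helper, `index2.html` and `jianshen/1.html` are hypothetical URLs, and the "strict" variant with escaped dots is my own suggestion rather than part of the original setup.

```java
import java.util.regex.Pattern;

/** Hypothetical helper for trying out the re-crawl expression. */
class ListPageRegexCheck {
    // The expression from the example; an unescaped '.' matches any character
    static final Pattern ORIGINAL =
            Pattern.compile("http://www.cndzys.com/yundong/(index)?[0-9]*(.html)?");

    // Suggested stricter variant: dots escaped so they only match a literal '.'
    static final Pattern STRICT =
            Pattern.compile("http://www\\.cndzys\\.com/yundong/(index)?[0-9]*(\\.html)?");

    /** True when the whole URL matches the strict list-page expression. */
    static boolean isListPage(String url) {
        return STRICT.matcher(url).matches();
    }

    public static void main(String[] args) {
        System.out.println(isListPage("http://www.cndzys.com/yundong/"));                // prints true
        System.out.println(isListPage("http://www.cndzys.com/yundong/index2.html"));     // prints true
        System.out.println(isListPage("http://www.cndzys.com/yundong/jianshen/1.html")); // prints false
        // The original expression also accepts a URL with a wrong character in place of a dot
        System.out.println(ORIGINAL.matcher("http://wwwXcndzys.com/yundong/").matches()); // prints true
    }
}
```

Note that both variants also match a URL such as `http://www.cndzys.com/yundong/123.html`, so if article pages on a site are named with plain numbers they would be crawled again as well.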

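An idea for optimization: on most sites, the first page of a content list holds the newest items. The approach above does achieve incremental crawling, but it still has to crawl many "useless" list pages.

Could the crawl stop as soon as it reaches the URL that was the "latest" one last time, so that the remaining redundant list pages are never fetched?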
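One possible way to approach this, sketched with only the JDK: remember the newest article URL from the previous run, walk each list page from newest to oldest, and stop following further list pages once that URL shows up. This is an untested idea rather than a finished solution; `StopAtLastSeen` and its method names are made up, and it assumes every list page really is ordered newest first.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper for the "stop at the previous latest URL" idea. */
class StopAtLastSeen {
    /** Returns the URLs that come before lastSeenUrl; all of them if it is absent or null. */
    static List<String> newUrls(List<String> newestFirst, String lastSeenUrl) {
        List<String> fresh = new ArrayList<>();
        for (String url : newestFirst) {
            if (url.equals(lastSeenUrl)) {
                break; // everything from here on was crawled in an earlier run
            }
            fresh.add(url);
        }
        return fresh;
    }

    /** True when this list page contains lastSeenUrl, so later list pages can be skipped. */
    static boolean reachedLastSeen(List<String> pageUrls, String lastSeenUrl) {
        return lastSeenUrl != null && pageUrls.contains(lastSeenUrl);
    }

    public static void main(String[] args) {
        // Hypothetical article URLs on the first list page, newest first
        List<String> page1 = Arrays.asList("/yundong/5.html", "/yundong/4.html", "/yundong/3.html");
        String lastSeen = "/yundong/4.html"; // newest URL recorded by the previous run

        System.out.println(newUrls(page1, lastSeen));         // prints [/yundong/5.html]
        System.out.println(reachedLastSeen(page1, lastSeen)); // prints true
    }
}
```

In a real crawler the page processor would only add the next list page to the scheduler while `reachedLastSeen` is still false, and the newest URL of the current run would be saved for the next one.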