netty不同于socket,其上次API没有提供设置backlog的选项,而是依赖于操作系统的somaxconn和tcp_max_syn_backlog,对于不同OS或版本,该值不同,建议根据实际并发量针对性设置。

对于linux,可通过cat /proc/sys/net/core/somaxconn查看,如下所示:

[root@dev-server ~]# cat /proc/sys/net/core/somaxconn

128

其含义官方说的很清楚,是三次握手通过后等待被应用accept的数量,如下所示:

The behavior of the backlog argument on TCP sockets changed with Linux 2.2. Now it specifies the queue length for completely established sockets waiting to be accepted, instead of the number of incomplete connection requests. The maximum length of the queue for incomplete sockets can be set using/proc/sys/net/ipv4/tcp_max_syn_backlog. When syncookies are enabled there is no logical maximum length and this setting is ignored.

tcp_max_syn_backlog则是尚未完成握手的数量。

按照官方所述,可通过如下方式更改:

echo 2048 > /proc/sys/net/core/somaxconn

以下测试基于centos 6.6 x86-64,我们先看直接修改/proc/sys/net/core/somaxconn的效果。

[root@dev-server sbin]# cat /proc/sys/net/core/somaxconn

10

修改后,打开几个ssh窗口,如下:

[root@dev-server sbin]# netstat -ano | grep 22 | grep tcp | grep EST

tcp 0 0 192.168.146.128:22 192.168.146.1:8058 ESTABLISHED keepalive (4902.43/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8113 ESTABLISHED keepalive (4997.28/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8122 ESTABLISHED keepalive (5011.72/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8419 ESTABLISHED keepalive (5422.60/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8411 ESTABLISHED keepalive (5412.07/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8085 ESTABLISHED keepalive (4952.01/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8284 ESTABLISHED keepalive (5244.48/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8291 ESTABLISHED keepalive (5250.04/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:7120 ESTABLISHED keepalive (3950.53/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8497 ESTABLISHED keepalive (5542.61/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8061 ESTABLISHED keepalive (4909.67/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8013 ESTABLISHED keepalive (4831.05/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8103 ESTABLISHED keepalive (4986.47/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8292 ESTABLISHED keepalive (5254.47/0/0)

tcp 0 52 192.168.146.128:22 192.168.146.1:8511 ESTABLISHED on (0.24/0/0)

tcp 0 0 192.168.146.128:22 192.168.146.1:8289 ESTABLISHED keepalive (5247.21/0/0)

[root@dev-server sbin]# netstat -ano | grep 22 | grep tcp | grep EST | wc -l

16

因为ssh连接数默认值的原因,一直没有生效,现在换成nginx。

用nginx作为服务器,ngnix默认连接1024个,我们将它改到2000,重启。

然后java发socket连接:

public static void main(String[] args) throws InterruptedException { List<Socket> list = new ArrayList<Socket>(); for(int i=0;i<2000;i++) { try { Socket socket = new Socket("192.168.146.128",80); list.add(socket); } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } } System.out.println("连接已经全部建立"); Thread.sleep(1000000); }

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1035

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1033

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1033

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1035

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1033

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "SYN" | wc -l

8

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "SYN" | wc -l

8

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1033

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1033

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "SYN" | wc -l

7

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "SYN" | wc -l

8

在/etc/sysctl.conf中添加如下:

net.core.somaxconn = 100

sysctl -p即可。

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

0

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

200

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

200

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1026

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1034

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1026

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1035

最大连接数好像还是1024,somaxconn像是最大连接数的限制,当一个监听端口尝试建立的连接超过1024时,其他尚未被接受的连接状态会处于SYN_RECV状态,如下:

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "SYN"

tcp 0 0 192.168.146.128:80 192.168.146.1:21131 SYN_RECV on (3.68/2/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21138 SYN_RECV on (0.30/0/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21133 SYN_RECV on (0.28/0/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21130 SYN_RECV on (0.68/2/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21122 SYN_RECV on (2.68/3/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21128 SYN_RECV on (0.88/2/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21132 SYN_RECV on (3.48/2/0)

tcp 0 0 192.168.146.128:80 192.168.146.1:21120 SYN_RECV on (0.08/3/0)

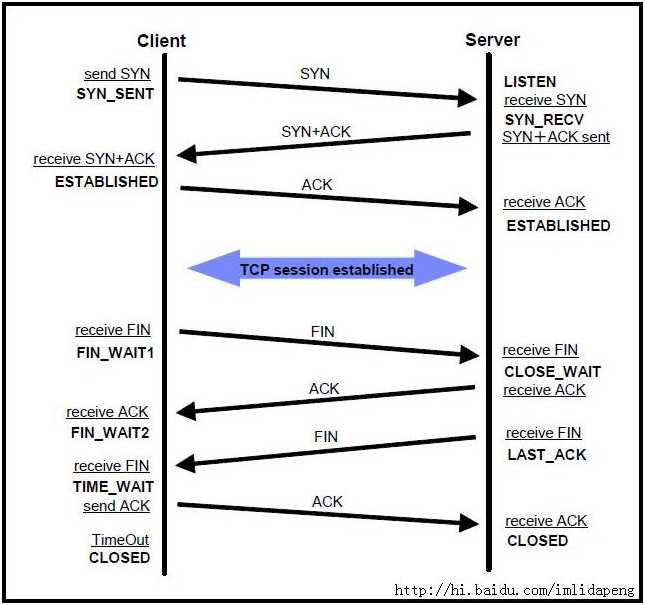

服务端收到建立连接的SYN、但没有收到ACK包或者没有发出SYNC+ACK的时候处在SYN_RECV状态。如下所示:

PS:上面这张图的关闭部分其实不是完全正确的,左边称为主动发起方、右边称为被动方才是正确的,因为作为发起者完全可以是server。

现在我们来看重启之后的情况:

[root@dev-server ~]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SY" | wc -l

1128

[root@dev-server ~]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1116

[root@dev-server ~]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1116

[root@dev-server ~]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SY" | wc -l

1134

可以发现,上下波动,基本上还是这个值左右。

现在增加看看,

[root@dev-server ~]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

error: "net.bridge.bridge-nf-call-ip6tables" is an unknown key

error: "net.bridge.bridge-nf-call-iptables" is an unknown key

error: "net.bridge.bridge-nf-call-arptables" is an unknown key

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.core.somaxconn = 2000

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1527

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

1527

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1549

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1555

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1555

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1555

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1557

[root@dev-server sbin]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST|SYN" | wc -l

1557

可以发现,已经上来了,因为系统vmware配置原因还没有全部连接上来。

======

现在我们同时减少nginx和somaxconn的值,来看下:

nginx最大连接数设置100,somaxconn设置为200.

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

76

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

76

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

88

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

88

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

88

[root@dev-server conf]# netstat -ano | grep 128:80 | grep tcp | egrep -e "EST" | wc -l

发现最大连接数同时受应用程序和somaxconn控制,应用小时取应用,应用大时取了1024。

因为用的是短连接测试,抽空再找时间测试下长连接的情况。