Integrating Nova with Ceph

1. Configure ceph.conf

# To boot instances from Ceph RBD, Ceph must be configured as Nova's ephemeral backend;
# enabling the rbd cache in the configuration file on the compute nodes is recommended;
# to ease troubleshooting, configure the admin socket parameter so that every instance using Ceph RBD gets its own socket, which helps with performance analysis and fault resolution;
# the changes only touch the [client] and [client.cinder] sections of ceph.conf on all compute nodes; compute01 is used as the example
[root@compute01 ~]# vim /etc/ceph/ceph.conf
[client]
rbd cache = true
rbd cache writethrough until flush = true
admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok
log file = /var/log/qemu/qemu-guest-$pid.log
rbd concurrent management ops = 20
[client.cinder]
keyring = /etc/ceph/ceph.client.cinder.keyring

# Create the socket and log directories specified in ceph.conf and change their owner
[root@compute01 ~]# mkdir -p /var/run/ceph/guests/ /var/log/qemu/
[root@compute01 ~]# chown qemu:libvirt /var/run/ceph/guests/ /var/log/qemu/
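Once a guest is running, its admin socket should appear under /var/run/ceph/guests/. The following is a minimal sketch, not part of the original procedure, of how those sockets could be listed. The helper name `list_guest_sockets` is hypothetical, and the query shown in the trailing comment assumes the `ceph` CLI is installed on the compute node.

```shell
# list_guest_sockets [DIR]: print every *.asok path found in DIR, one per line.
# Hypothetical helper; DIR defaults to the socket directory created above.
list_guest_sockets() {
    dir="${1:-/var/run/ceph/guests}"
    for sock in "$dir"/*.asok; do
        # When nothing matches, the glob stays literal; skip that case.
        [ -e "$sock" ] || continue
        printf '%s\n' "$sock"
    done
}

# Example use on a compute node (requires the ceph CLI):
#   for s in $(list_guest_sockets); do ceph --admin-daemon "$s" perf dump; done
```

Keeping the listing separate from the query makes it easy to swap `perf dump` for another admin socket command such as `help` or `config show`.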

2. Configure nova.conf

# On all compute nodes, configure the Nova backend to use the vms pool of the Ceph cluster; compute01 is used as the example

[root@compute01 ~]# vim /etc/nova/nova.conf

[libvirt]

virt_type=kvm

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

# Keep the uuid consistent with the one used earlier

rbd_secret_uuid = 40333768-948a-4572-abe0-716762154a1e

disk_cachemodes="network=writeback"

live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"

# Disable file injection

inject_password = false

inject_key = false

inject_partition = -2

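# Discard support for the instance's ephemeral root disk; with "unmap", space freed on a disk attached through a SCSI-type interface is released immediately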

hw_disk_discard = unmap

[root@compute02 ceph]# systemctl restart libvirtd.service openstack-nova-compute.service
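Because rbd_secret_uuid has to agree with the secret known to libvirt, it can help to read the value back from nova.conf instead of copying it by eye. Below is a minimal sketch under the assumption that the file uses plain `key = value` lines; the helper name `ini_get` is hypothetical, and the comparison in the trailing comment assumes the secret was already registered with libvirt in an earlier step.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION] of FILE.
# Hypothetical helper; accepts "key=value" and "key = value", skips comments.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*#/ { next }
        /^[ \t]*\[/ {
            name = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", name)
            in_section = (name == section)
            next
        }
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Example use on a compute node (requires virsh):
#   uuid=$(ini_get /etc/nova/nova.conf libvirt rbd_secret_uuid)
#   virsh secret-list | grep -q "$uuid" && echo "secret is defined in libvirt"
```

Splitting each line at the first `=` keeps values such as `"network=writeback"` intact.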

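3. Configure live migration

1) Modify /etc/libvirt/libvirtd.conf

# Perform on all compute nodes; compute01 is used as the example;
# the line numbers of the modified entries in libvirtd.conf are shown below
[root@compute01 ~]# egrep -vn "^$|^#" /etc/libvirt/libvirtd.conf
# Uncomment the following three lines
22:listen_tls = 0
33:listen_tcp = 1
45:tcp_port = "16509"
# Uncomment and change the listen address
55:listen_addr = "10.100.214.205"
# Uncomment and disable authentication
158:auth_tcp = "none"

2) Modify /etc/sysconfig/libvirtd

# Perform on all compute nodes; compute01 is used as the example;
# the line number of the modified entry in the libvirtd file is shown below
[root@compute01 ~]# egrep -vn "^$|^#" /etc/sysconfig/libvirtd
# Uncomment
9:LIBVIRTD_ARGS="--listen"

3) Restart the services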

[root@compute01 ~]# systemctl restart libvirtd.service openstack-nova-compute.service

[root@compute01 ~]# ss -tunpl | grep 16509

tcp LISTEN 0 128 10.100.214.205:16509 *:* users:(("libvirtd",pid=12696,fd=13))
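Besides checking the listening port locally, the TCP endpoint can be exercised from another compute node, since that is the path live migration uses. The sketch below only builds the connection URI; the helper name `libvirt_tcp_uri` is hypothetical, and the `virsh` call in the comment assumes the unauthenticated TCP listener configured above.

```shell
# libvirt_tcp_uri HOST [PORT]: print the qemu+tcp URI of a remote libvirtd.
# Hypothetical helper; PORT defaults to 16509, the tcp_port set above.
libvirt_tcp_uri() {
    printf 'qemu+tcp://%s:%s/system\n' "$1" "${2:-16509}"
}

# Example use from a different compute node (requires virsh):
#   virsh -c "$(libvirt_tcp_uri 10.100.214.205)" list --all
```

If the command lists the remote domains without prompting for credentials, the listener and the auth_tcp setting are behaving as configured.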

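4. Verification

1) Create a bootable volume backed by Ceph storage

# When Nova boots an instance from RBD, the image must be in raw format; otherwise both glance-api and cinder report errors when the instance starts;
# first convert the *.img file to a *.raw file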

[root@controller01 ~]# qemu-img convert -f qcow2 -O raw ~/cirros-0.3.5-x86_64-disk.img ~/cirros-0.3.5-x86_64-disk.raw

# Create the raw-format image
[root@controller01 ~]# openstack image create "cirros-raw" --file ~/cirros-0.3.5-x86_64-disk.raw --disk-format raw --container-format bare --public
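A quick sanity check can catch the case where a qcow2 file is uploaded as raw by mistake. The sketch below is an illustration only: the helper name `is_qcow2` is hypothetical, and it merely looks for the qcow2 magic bytes at the start of the file. A raw image has no header, so the absence of the magic does not prove that a file is raw; `qemu-img info` remains the authoritative check.

```shell
# is_qcow2 FILE: succeed if FILE starts with the qcow2 magic bytes "QFI\xfb".
# Hypothetical helper; it inspects only the first four bytes of the file.
is_qcow2() {
    magic=$(head -c 4 "$1" | od -An -tx1 | tr -d ' \n')
    [ "$magic" = "514649fb" ]
}

# Example use on the controller:
#   is_qcow2 ~/cirros-0.3.5-x86_64-disk.raw && echo "still qcow2, convert first"
```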

# Create a bootable volume from the new image

[root@controller01 ~]# cinder create --image-id 666e592d-a4b5-4d8a-8aa6-4eaf5d818e97 --volume-type ceph --name centos75 25

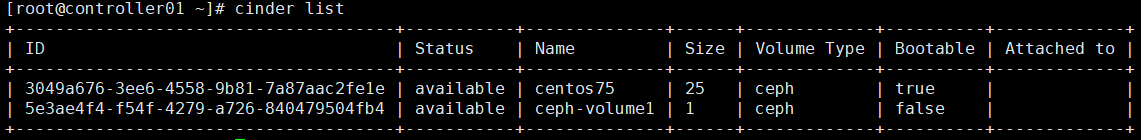

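# List the newly created bootable volume
[root@controller01 ~]# cinder list

# Create an instance from the Ceph-backed volume;
# "--boot-volume" specifies a volume with the "bootable" attribute; once booted, the instance runs on that volume

Create the network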

openstack network create external

Create the subnet

openstack subnet create --network external --allocation-pool start=10.100.214.150,end=10.100.214.200 --dns-nameserver 8.8.8.8 --gateway 10.100.214.254 --subnet-range 10.100.214.0/24 subnet01

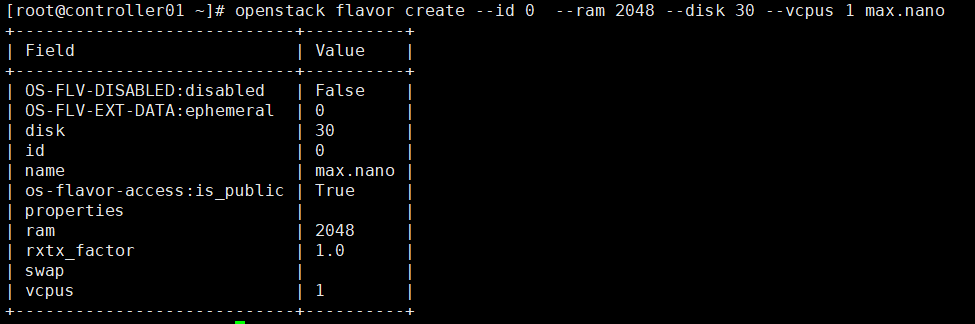

Create the flavor

[root@controller01 ~]# openstack flavor create --id 0 --ram 2048 --disk 30 --vcpus 1 max.nano

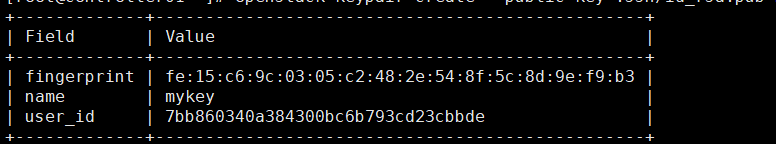

Create the key pair

[root@controller01 ~]# openstack keypair create --public-key .ssh/id_rsa.pub mykey

Create security group rules (the default group is used)

[root@controller01 ~]# openstack security group list

+--------------------------------------+---------+------------------------+----------------------------------+------+
| ID                                   | Name    | Description            | Project                          | Tags |
+--------------------------------------+---------+------------------------+----------------------------------+------+
| 7e8b4179-842f-4555-b5ac-f6d946371230 | default | Default security group | 8152877d890d4727ac6f01a94e67ae15 | []   |
+--------------------------------------+---------+------------------------+----------------------------------+------+

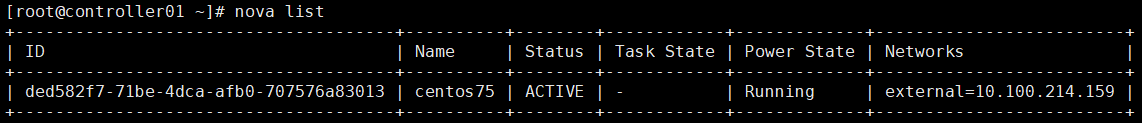

Create the instance:

[root@controller01 ~]# nova boot --flavor max.nano --boot-volume 3049a676-3ee6-4558-9b81-7a87aac2fe1e --nic net-id=f931d6ff-6f30-4acf-9025-4961eeae21ea --security-group default --key-name mykey centos75

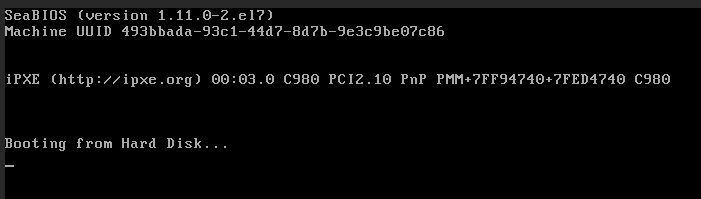

2) Boot an instance from Ceph RBD

[root@controller01 ~]# nova boot --flavor max.nano --image 666e592d-a4b5-4d8a-8aa6-4eaf5d818e97 --nic net-id=f931d6ff-6f30-4acf-9025-4961eeae21ea --security-group default --key-name mykey test01

After opening the page, we found:

openstack image set --property hw_disk_bus=ide --property hw_vif_model=e1000 666e592d-a4b5-4d8a-8aa6-4eaf5d818e97

openstack image set --property hw_disk_bus=ide --property hw_vif_model=e1000 bcad5a65-3ff6-4b88-bc24-d4043097895c

Rebuild the instance: