Environment Planning

| Role | IP | Components |
| --- | --- | --- |
| k8s-master1 | 192.168.248.161 | kube-apiserver, kube-controller-manager, kube-scheduler |
| k8s-master2 | 192.168.248.162 | kube-apiserver, kube-controller-manager, kube-scheduler |
| k8s-node01 | 192.168.248.162 | kubelet, kube-proxy, docker |
| LB (Master) | 192.168.248.202 | Nginx, Keepalived |
| LB (Backup) | 192.168.248.206 | Nginx, Keepalived, nfs-server |
| etcd01 | 192.168.248.202 | etcd |
| etcd02 | 192.168.248.220 | etcd |
| etcd03 | 192.168.248.224 | etcd |

VIP: 192.168.248.209

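Note: because of limited server resources, only two master nodes and one worker node are deployed. The machine 192.168.248.162 acts as both a master (k8s-master2) and the only worker node (k8s-node01), and 192.168.248.202 acts as both the LB master and etcd01. The etcd cluster installation is covered in https://www.cnblogs.com/zhangmingcheng/p/13625664.html and the LB cluster installation in https://www.cnblogs.com/zhangmingcheng/p/13646907.html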

System initialization (run on every master and node machine):

# Update the system
yum update -y

# Install the IPVS-related packages and other dependencies
yum install -y epel-release conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
setenforce 0 # temporary, until the next reboot
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent

# Disable swap
swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab
cat /etc/fstab
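The `sed` command above prefixes another `#` to the swap line every time it is rerun, because it also matches lines that are already commented out. A minimal sketch of an idempotent variant follows; `comment_out_swap` is a hypothetical helper name, and GNU sed is assumed.

```shell
# comment_out_swap FILE
# Comments out swap entries in an fstab-style file. Lines that are already
# comments are skipped, so running it repeatedly leaves a single '#'.
# Assumes GNU sed (-i and -E).
comment_out_swap() {
  sed -i -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage on a real system (back up the file first):
#   cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab
```

Matching `swap` only as a whitespace-delimited field is a deliberate choice here, so that a device path that merely contains the word is not enough to trigger the edit.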

# Synchronize the system time
ntpdate time.windows.com

# Add hosts entries (optional)
vim /etc/hosts
192.168.248.161 k8s-master1
192.168.248.162 k8s-master2
192.168.248.162 k8s-node01

# Set the hostname (optional)
hostnamectl set-hostname NAME

# Kernel settings: pass bridged IPv4 traffic to the iptables chains
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

# Prepare the deployment directories
mkdir -p /opt/etcd/{cfg,ssl,bin}
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
echo 'export PATH=/opt/kubernetes/bin:$PATH' > /etc/profile.d/k8s.sh
echo 'export PATH=/opt/etcd/bin:$PATH' > /etc/profile.d/etcd.sh
source /etc/profile

Deploy the k8s cluster

Generate the certificates required by each component

# Create the CA configuration file
mkdir -p tls/k8s && cd tls/k8s/
cat << EOF | tee ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

# Create the CA certificate signing request

cat << EOF | tee ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Create the signing request for the kubernetes (apiserver) certificate
cat << EOF | tee server-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "192.168.248.161",
    "192.168.248.162",
    "192.168.248.209",
    "192.168.248.202",
    "192.168.248.206"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

# Note: the hosts field above must list the IP of every master, every LB, and the VIP, with none missing. To make later scale-out easier, you can add a few reserved IPs.
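Since a missing address in the hosts list only shows up later as a TLS error, it may help to check the file before signing. The sketch below is one hedged way to do that with plain `grep`; `missing_sans` is a hypothetical helper name, and it only looks for quoted strings in the JSON file rather than parsing it.

```shell
# missing_sans FILE ADDR...
# Prints every address that does not appear as a quoted string in FILE.
# Empty output means all addresses were found.
missing_sans() {
  csr_file="$1"
  shift
  for addr in "$@"; do
    # -F treats the pattern as a fixed string, so dots are literal;
    # the surrounding quotes prevent 192.168.248.16 matching 192.168.248.161.
    grep -qF -- "\"${addr}\"" "$csr_file" || echo "$addr"
  done
}

# Usage with the addresses from the environment plan:
#   missing_sans server-csr.json 192.168.248.161 192.168.248.162 \
#     192.168.248.209 192.168.248.202 192.168.248.206
```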

# Create the signing request for the kube-proxy certificate

cat << EOF | tee kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Create the signing request for the admin certificate

cat << EOF | tee admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

# Generate the certificates
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Distribute the certificates (replace k8s-masterIP and k8s-nodeIP with the real addresses)
scp *.pem k8s-masterIP:/opt/kubernetes/ssl/
scp kube-proxy*.pem ca.pem k8s-nodeIP:/opt/kubernetes/ssl/

Prepare the binaries

cd /opt
wget https://dl.k8s.io/v1.18.6/kubernetes-server-linux-amd64.tar.gz
tar xf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin/
scp kube-apiserver kube-scheduler kube-controller-manager kubelet kube-proxy kubectl k8s-masterIP:/opt/kubernetes/bin/
scp kubelet kube-proxy k8s-nodeIP:/opt/kubernetes/bin/

Deploy k8s-master1

# Create the kube-apiserver configuration file

vim /opt/kubernetes/cfg/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false

--v=2

--log-dir=/opt/kubernetes/logs

--etcd-servers=https://192.168.248.202:2379,https://192.168.248.220:2379,https://192.168.248.224:2379

--bind-address=192.168.248.161

--secure-port=6443

--advertise-address=192.168.248.161

--allow-privileged=true

--service-cluster-ip-range=10.0.0.0/24

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,DefaultStorageClass

--authorization-mode=RBAC,Node

--enable-bootstrap-token-auth=true

--token-auth-file=/opt/kubernetes/cfg/token.csv

--service-node-port-range=30000-32767

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem

--tls-cert-file=/opt/kubernetes/ssl/server.pem

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem

--client-ca-file=/opt/kubernetes/ssl/ca.pem

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem

--etcd-cafile=/opt/etcd/ssl/ca.pem

--etcd-certfile=/opt/etcd/ssl/server.pem

--etcd-keyfile=/opt/etcd/ssl/server-key.pem

--audit-log-maxage=30

--audit-log-maxbackup=3

--audit-log-maxsize=100

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

# Create the kube-apiserver systemd service

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Create the controller-manager configuration file

vim /opt/kubernetes/cfg/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false

--v=2

--log-dir=/opt/kubernetes/logs

--leader-elect=true

--master=127.0.0.1:8080

--address=127.0.0.1

--allocate-node-cidrs=true

--cluster-cidr=10.244.0.0/16

--service-cluster-ip-range=10.0.0.0/24

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem

--root-ca-file=/opt/kubernetes/ssl/ca.pem

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem

--experimental-cluster-signing-duration=87600h0m0s"

# Create the controller-manager systemd service

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Create the scheduler configuration file

vim /opt/kubernetes/cfg/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false

--v=2

--log-dir=/opt/kubernetes/logs

--leader-elect

--master=127.0.0.1:8080

--address=127.0.0.1"

# Create the scheduler systemd service

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Enable TLS Bootstrapping

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
cat > /opt/kubernetes/cfg/token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
cat /opt/kubernetes/cfg/token.csv
d5ff43e323ee5ffedec6fd630e37daaf,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
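The same token has to appear later in bootstrap.kubeconfig, so keeping generation and file writing in two small functions can reduce copy-and-paste mistakes. This is a sketch only: `gen_bootstrap_token` and `write_token_csv` are hypothetical helper names, and the user, UID, and group fields simply repeat the values used above.

```shell
# gen_bootstrap_token
# Prints 32 lowercase hex characters (16 random bytes), using the same
# head/od/tr pipeline as above; tr also strips the trailing newline.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# write_token_csv TOKEN FILE
# Writes one line in the format: token,user,uid,"group"
write_token_csv() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1" > "$2"
}

# Usage:
#   TOKEN=$(gen_bootstrap_token)
#   write_token_csv "$TOKEN" /opt/kubernetes/cfg/token.csv
```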

# Start the master components

systemctl daemon-reload

systemctl enable kube-apiserver kube-controller-manager kube-scheduler

systemctl restart kube-apiserver kube-controller-manager kube-scheduler

systemctl status kube-apiserver kube-controller-manager kube-scheduler

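Note: the SSL certificate directory from the etcd servers must be uploaded to /opt/etcd on k8s-master1, because kube-apiserver reads its etcd client certificates from /opt/etcd/ssl.

Deploy k8s-master2

# Copy the component configuration files from master1
scp -r /opt/kubernetes 192.168.248.162:/opt/
scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service 192.168.248.162:/usr/lib/systemd/system

# On master2, change the addresses in the apiserver configuration file (--bind-address and --advertise-address) to the local IP, then start the services

Note: the SSL certificate directory from the etcd servers must also be uploaded to /opt/etcd on k8s-master2.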
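The address change on k8s-master2 touches only two flags in kube-apiserver.conf. A hedged sketch of doing it with `sed` instead of an editor follows; `set_apiserver_ip` is a hypothetical helper name and GNU sed is assumed.

```shell
# set_apiserver_ip CONF_FILE NEW_IP
# Rewrites the values of --bind-address and --advertise-address in place.
# Other flags (for example --etcd-servers) are left untouched.
# Assumes GNU sed (-i and -E).
set_apiserver_ip() {
  sed -i -E "s#(--(bind|advertise)-address=)[0-9.]+#\1$2#" "$1"
}

# Usage on k8s-master2:
#   set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver.conf 192.168.248.162
```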

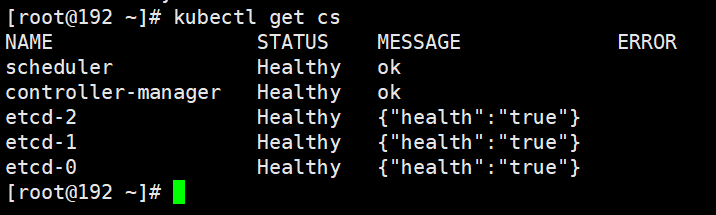

View cluster information

kubectl get cs

Authorize the kubelet-bootstrap user to request certificates (run on a master node)

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Deploy the node

Generate the bootstrap.kubeconfig file

KUBE_APISERVER="https://192.168.248.209:6443" # apiserver IP:PORT (the VIP)
TOKEN="d5ff43e323ee5ffedec6fd630e37daaf" # must match token.csv

# Generate the kubelet bootstrap kubeconfig file: set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Copy the file to the configuration directory:

cp bootstrap.kubeconfig /opt/kubernetes/cfg

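On a master node, approve the kubelet certificate request so the node joins the cluster (the request appears only after kubelet has been started on the node)

# List the kubelet certificate requests
kubectl get csr
NAME                                                   AGE    SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ--K6M4G7bjhk8A   6m3s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

# Approve the request
kubectl certificate approve node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ--K6M4G7bjhk8A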
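With several nodes, copying each CSR name by hand gets tedious. The sketch below filters the tabular `kubectl get csr` output for requests whose last column is `Pending`; `pending_csr_names` is a hypothetical helper name, and it assumes the default column layout shown above.

```shell
# pending_csr_names
# Reads `kubectl get csr` output on stdin and prints the NAME of every
# request whose CONDITION (last column) is exactly "Pending".
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage (review the list before approving; -r is a GNU xargs option that
# skips the command when there is no input):
#   kubectl get csr | pending_csr_names
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Approving every pending request without looking at it is only reasonable on a trusted network; on a shared one, checking the REQUESTOR column first is the safer habit.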

Install docker (omitted here)

Deploy kubelet and kube-proxy

# Create the kubelet bootstrapping kubeconfig file (the hand-written form of the file generated with kubectl config above)
vim /opt/kubernetes/cfg/bootstrap.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.248.209:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: d5ff43e323ee5ffedec6fd630e37daaf

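# Create the kubelet configuration file (set --hostname-override to each node's own hostname; do not put comments inside the quoted value, since they would be passed to kubelet as arguments)

vim /opt/kubernetes/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false

--v=2

--log-dir=/opt/kubernetes/logs

--hostname-override=192.168.248.162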

--network-plugin=cni

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig

--config=/opt/kubernetes/cfg/kubelet-config.yml

--cert-dir=/opt/kubernetes/ssl

--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

# Create the kubelet-config.yml file
vim /opt/kubernetes/cfg/kubelet-config.yml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

# Create the kubelet systemd service

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# Create the kube-proxy configuration file

vim /opt/kubernetes/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false

--v=2

--log-dir=/opt/kubernetes/logs

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR2akNDQXFhZ0F3SUJBZ0lVZDZQM04vSmhRNUtJczZ1elA3OXo5UHgzWExjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEl3TURrd09EQXlNVFl3TUZvWERUSTFNRGt3TnpBeU1UWXdNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbGFXcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBeHA4akMzekVSbVY4bnovUThycnQKZmVxdExKaFZ2cDdMbGdwWFhYNTBJaDFyOG5xcWNJOElmakVMRG00NGE3Sm5WYTdlRWJubCtSVjJzc2RhRlRPVQpTOXQvWDFJZHhHZHh1UEllSms3VjVwU2FFMjQ3ZDF2NmxHWThFUjNaME5IZnIwOEUzeHNsaG1vUzI5MFN0MHBJCjY4S0VuRFFGQ1VSaG9CMzE4VEtDbDV5K3pJMHQ4M3VoUVEybmV6SkV0WHVZNVVuUVVZMU1mUkhMRzg5SnplYUkKdXlvbSsrdEg5VWFSdmFPVFUzYkpFQldnUHpMakcrTkpaSi9VNHA1ak0vQ1FuTC9UbS8wdWd0c0IvbWlVMTlVNgp5RWtnV2tjOGxYSkM2OWhNYUtNSVVaZFBiQjZOZk80Y0IycmxSSDRiekM0QTY5bFpYR2piYUU0U1BqTXZwaVh1CklRSURBUUFCbzJZd1pEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0VnWURWUjBUQVFIL0JBZ3dCZ0VCL3dJQkFqQWQKQmdOVkhRNEVGZ1FVZE9aL0lSbU9LT051K1pIcEZNOEVoNFNiellVd0h3WURWUjBqQkJnd0ZvQVVkT1ovSVJtTwpLT051K1pIcEZNOEVoNFNiellVd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEcDdrR2JlREpGZ2E0RjRGd2p4ClJLVkxsQkYrVFFSOHA5Vno2bTA2eVFTcWpEcXNIUTNVeStMaFZoTWJCQTdBOFhIOEtNVzByMWVQMi9Vd1NsaHYKMlVZdWZEcW5nYWNRRG0xSTE4cjRhRjl6c1UzN29Ycko3V0V6S1QzY2RQZHltWVhjUWN4eGZkQnMxdHpjU1B4TQpVbUVsVG9kQ0lNMjhuRHdoT1kvZ1JSZlFycTVISjhjQ1BaSVhtME9lQ2xKRzdHQVRXWC8xNW9hb2FCakQ3cVVhCmErZXZYQUcvZldhekF2dzJuS3VESisvdldXQnlQdER2TUNyRDNQNkxhZk5aY2s3Y044YjZ1Y2pHS3JpUVg3YncKUDVvQ1Vsd21HaTVWKzBHdkt1UmdGWkxpbStVektQUFhiUGVMVzlaNlo1U0tsM0RwY0FKcmtCZ3N4bTVQMzZITApvUWs9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://192.168.248.209:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kube-proxy

name: default

current-context: default

kind: Config

preferences: {}

users:

- name: kube-proxy

user:

client-certificate: /opt/kubernetes/ssl/kube-proxy.pem

client-key: /opt/kubernetes/ssl/kube-proxy-key.pem

# vim /opt/kubernetes/cfg/kube-proxy-config.yml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 192.168.248.162:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: 192.168.248.162
clusterCIDR: 10.244.0.0/16
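The pod network range is configured in three places in this guide: `--cluster-cidr` in kube-controller-manager.conf, `clusterCIDR` in kube-proxy-config.yml, and `Network` in flannel's net-conf.json. They are expected to describe the same range (10.244.0.0/16 in the controller-manager and flannel settings here). The sketch below compares the first two; the function names are hypothetical, and the parsing relies on the simple one-flag-per-line layout used in these files.

```shell
# controller_manager_cidr CONF_FILE
# Prints the value of --cluster-cidr from a kube-controller-manager env file.
controller_manager_cidr() {
  grep -oE -- '--cluster-cidr=[0-9./]+' "$1" | cut -d= -f2
}

# kube_proxy_cidr CONFIG_YML
# Prints the value of the clusterCIDR key from a kube-proxy config file.
kube_proxy_cidr() {
  awk '$1 == "clusterCIDR:" { print $2 }' "$1"
}

# cidrs_match CONTROLLER_MANAGER_CONF KUBE_PROXY_YML
# Succeeds (exit status 0) only when both files name the same range.
cidrs_match() {
  [ "$(controller_manager_cidr "$1")" = "$(kube_proxy_cidr "$2")" ]
}

# Usage:
#   cidrs_match /opt/kubernetes/cfg/kube-controller-manager.conf \
#               /opt/kubernetes/cfg/kube-proxy-config.yml || echo "CIDR mismatch"
```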

# Create the kube-proxy systemd service

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# Distribute the configuration files and systemd unit files to every node, then start the services

systemctl daemon-reload

systemctl enable kubelet kube-proxy

systemctl restart kubelet kube-proxy

systemctl status kubelet kube-proxy

View the nodes

kubectl get node
NAME              STATUS     ROLES    AGE   VERSION
192.168.248.162   NotReady   <none>   7s    v1.18.6

Note: the node stays NotReady because the network plugin has not been deployed yet.
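To recheck node state after each later step, the tabular output can be filtered for anything that is not plainly `Ready`. This is a small sketch with a hypothetical function name; note that a cordoned node (`Ready,SchedulingDisabled`) would also be listed, which may or may not be what you want.

```shell
# not_ready_nodes
# Reads `kubectl get node` output on stdin and prints the NAME of every
# node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get node | not_ready_nodes
```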

Deploy the CNI network

First prepare the CNI plugin binaries.

Download: https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

Extract the archive into the default working directory:

mkdir -p /opt/cni/bin
tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

Deploy the CNI network:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

The default image registry is not reachable, so the image is changed to a Docker Hub repository.

# cat kube-flannel.yml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: lizhenliang/flannel:v0.12.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: lizhenliang/flannel:v0.12.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- arm64

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-arm64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-arm64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- arm

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-arm

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-arm

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- ppc64le

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-ppc64le

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-ppc64le

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- s390x

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

# kubectl apply -f kube-flannel.yml

# kubectl get pods -n kube-system

Once the network plugin is running, the node becomes Ready.

Authorize the apiserver to access the kubelet

# cat apiserver-to-kubelet-rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

# kubectl apply -f apiserver-to-kubelet-rbac.yaml

Deploy the dashboard (by default the Dashboard is reachable only from inside the cluster; change its Service to type NodePort to expose it externally)

# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

# vim recommended.yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

# kubectl apply -f recommended.yaml

Access URL: https://NodeIP:30001

Create a service account and bind it to the built-in cluster-admin cluster role:

kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Log in to the Dashboard with the token printed by the last command.
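The `kubectl describe secrets` output contains several fields besides the token. A hedged sketch for pulling out just the token value follows; `extract_token` is a hypothetical helper name, and it assumes the usual `token:      <value>` line in the describe output.

```shell
# extract_token
# Reads `kubectl describe secret` output on stdin and prints only the
# value that follows the "token:" label.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage:
#   kubectl describe secrets -n kube-system \
#     $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') \
#     | extract_token
```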

Deploy coredns

# cat coredns.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: EnsureExists

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

labels:

addonmanager.kubernetes.io/mode: EnsureExists

data:

Corefile: |

.:53 {

errors

health

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

upstream

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

proxy . /etc/resolv.conf

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. In order to make Addon Manager do not reconcile this replicas parameter.

# 2. Default is 1.

# 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

serviceAccountName: coredns

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

- key: "CriticalAddonsOnly"

operator: "Exists"

containers:

- name: coredns

image: coredns/coredns:1.2.6

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.0.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

# kubectl apply -f coredns.yaml

DNS resolution test:

[root@192 opt]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh
If you don't see a command prompt, try pressing enter.
/ # nslookup kubernetes
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local
/ #

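Notes:

1. TLS Bootstrapping

Once TLS authentication is enabled on the master's apiserver, the kubelet and kube-proxy on each node must present a valid certificate signed by the CA in order to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated. To simplify this, Kubernetes provides the TLS bootstrapping mechanism for issuing client certificates automatically: kubelet connects as a low-privilege user and requests a certificate through the apiserver, and the certificate is signed dynamically by the cluster. Using this approach on nodes is strongly recommended. It currently applies mainly to kubelet; kube-proxy still uses a single certificate that we issue ourselves.

TLS bootstrapping workflow: kubelet starts with bootstrap.kubeconfig and authenticates with the token from token.csv; it submits a certificate signing request (CSR) to the apiserver; after the CSR is approved, kube-controller-manager signs it with the CA given by --cluster-signing-cert-file and --cluster-signing-key-file; kubelet then stores the issued certificate under --cert-dir and writes kubelet.kubeconfig for later connections.

Create the token file referenced in the configuration above:

cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

Format: token, user name, UID, user group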
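To make the four fields concrete, the sketch below splits one token.csv line and prints the fields separated by spaces. `parse_token_line` is a hypothetical helper name; it assumes a single group in the fourth field, so a quoted list of several comma-separated groups would not be handled.

```shell
# parse_token_line LINE
# Splits a token.csv line (token,user,uid,"group") on commas and prints
# "token user uid group" with the quotes around the group removed.
parse_token_line() {
  printf '%s\n' "$1" | awk -F, '{ gsub(/"/, "", $4); print $1, $2, $3, $4 }'
}

# Usage:
#   parse_token_line "$(head -n 1 /opt/kubernetes/cfg/token.csv)"
```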

You can also generate your own token and use it instead:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

Reference: https://www.cnblogs.com/wyt007/p/13356734.html?utm_source=tuicool

Reference: https://www.cnblogs.com/yuezhimi/p/12931002.html

Reference: https://www.jianshu.com/p/49a4dcd30313

Highly available K8S cluster deployment options: https://www.cnblogs.com/ants/p/11489598.html