Basic commands:

[root@master shell]# cd ${HBASE_HOME}

[root@master hbase]# pwd

/opt/hbase

[root@master hbase]# ./bin/hbase shell

hbase(main):001:0> status

1 active master, 0 backup masters, 2 servers, 0 dead, 1.0000 average load

hbase(main):002:0> create 'test','info'

;

0 row(s) in 2.3730 seconds

=> Hbase::Table - test

hbase(main):005:0* status

1 active master, 0 backup masters, 2 servers, 0 dead, 1.5000 average load
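In the status summary, "average load" is the average number of regions per live RegionServer. With 2 servers, the jump from 1.0000 to 1.5000 after create 'test','info' is consistent with the cluster going from 2 to 3 regions, that is, one new region for the new table. A minimal Python sketch that parses the summary line and recovers that region count. It assumes the exact wording of the HBase 1.2.6 output shown here, and other versions may print it differently.

```python
import re

# Matches the one-line summary printed by `status` in the HBase 1.2 shell.
# The wording is assumed from the transcript above; other versions may differ.
STATUS_RE = re.compile(
    r"(?P<active>\d+) active master, (?P<backup>\d+) backup masters, "
    r"(?P<servers>\d+) servers, (?P<dead>\d+) dead, (?P<load>[\d.]+) average load"
)


def parse_status(line: str) -> dict:
    """Parse a `status` summary line and estimate the total number of regions."""
    m = STATUS_RE.search(line)
    if m is None:
        raise ValueError(f"unrecognised status line: {line!r}")
    servers = int(m["servers"])
    load = float(m["load"])
    return {
        "servers": servers,
        "dead": int(m["dead"]),
        "average_load": load,
        # average load = regions / live region servers, so regions = load * servers
        "regions": round(load * servers),
    }


before = parse_status("1 active master, 0 backup masters, 2 servers, 0 dead, 1.0000 average load")
after = parse_status("1 active master, 0 backup masters, 2 servers, 0 dead, 1.5000 average load")
print(before["regions"], "->", after["regions"])  # 2 -> 3
```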

hbase(main):009:0> list 'test'

TABLE

test

1 row(s) in 0.0230 seconds

=> ["test"]

hbase(main):011:0> describe 'test'

Table test is ENABLED

test

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

1 row(s) in 0.0860 seconds
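The descriptor lists the settings of the info column family. Two of them matter for what follows: VERSIONS => '1' (each cell keeps only its newest version) and BLOCKSIZE => '65536' (64 KB HFile blocks). A small Python sketch that turns the printed descriptor into a dictionary, assuming the KEY => 'value' format shown above:

```python
import re

# Column family descriptor as printed by `describe 'test'` above.
DESCRIBE_LINE = (
    "{NAME => 'info', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', "
    "KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', "
    "COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', "
    "BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}"
)

# Each attribute is printed as KEY => 'value'.
ATTR_RE = re.compile(r"(\w+) => '([^']*)'")


def parse_family(descriptor: str) -> dict:
    """Turn a printed column-family descriptor into an {attribute: value} dict."""
    return dict(ATTR_RE.findall(descriptor))


info = parse_family(DESCRIBE_LINE)
print(info["NAME"], info["VERSIONS"], info["BLOCKSIZE"])  # info 1 65536
```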

Inserting and updating

hbase(main):012:0> put 'test', 'row_key1', 'info:course','physic'

0 row(s) in 0.1670 seconds

hbase(main):013:0> put 'test', 'row_key1', 'info:course','math' # this put replaces the value written above

0 row(s) in 0.0340 seconds

hbase(main):014:0> put 'test', 'row_key2', 'info:score','98'

0 row(s) in 0.0060 seconds

hbase(main):015:0> put 'test', 'row_key2', 'info:score','90' # this put replaces the value written above

0 row(s) in 0.0330 seconds

hbase(main):016:0> scan 'test'

ROW COLUMN+CELL

row_key1 column=info:course, timestamp=1524017746124, value=math

row_key2 column=info:score, timestamp=1524017756181, value=90

2 row(s) in 0.0880 seconds
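HBase never edits a cell in place: each put writes a new timestamped version, and reads return the newest versions up to the family's VERSIONS limit (1 for info). That is why the second put on each cell looks like an update. The toy Python model below only illustrates that rule; it is a dictionary with a counter standing in for real timestamps, not how HBase actually stores data, and ToyTable is a hypothetical name.

```python
from collections import defaultdict


class ToyTable:
    """Toy in-memory model of HBase cell versioning; not HBase's storage engine."""

    def __init__(self, max_versions: int = 1):
        self.max_versions = max_versions   # plays the role of the family's VERSIONS
        self.cells = defaultdict(list)     # (row, column) -> [(ts, value)], newest first
        self.clock = 0                     # stand-in for server-assigned timestamps

    def put(self, row: str, column: str, value: str) -> None:
        """Record a new version of the cell instead of editing the old one."""
        self.clock += 1
        versions = self.cells[(row, column)]
        versions.insert(0, (self.clock, value))
        # This toy drops surplus versions immediately; real HBase hides them
        # on read and physically removes them during major compaction.
        del versions[self.max_versions:]

    def get_value(self, row: str, column: str):
        """Return the newest value of a cell, or None if it was never written."""
        versions = self.cells.get((row, column))
        return versions[0][1] if versions else None


t = ToyTable(max_versions=1)               # the info family has VERSIONS => '1'
t.put("row_key1", "info:course", "physic")
t.put("row_key1", "info:course", "math")
t.put("row_key2", "info:score", "98")
t.put("row_key2", "info:score", "90")
print(t.get_value("row_key1", "info:course"), t.get_value("row_key2", "info:score"))  # math 90
```

With a larger max_versions the older value would still be retained alongside the newer one, which is what VERSIONS controls in a real column family.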

Column families (new columns are added to the existing info family simply by writing to them):

hbase(main):017:0> put 'test', 'row_key2', 'info:course','math'

0 row(s) in 0.0210 seconds

hbase(main):018:0> put 'test', 'row_key1', 'info:score','98'

0 row(s) in 0.0080 seconds

hbase(main):020:0> scan 'test'

ROW COLUMN+CELL

row_key1 column=info:course, timestamp=1524017746124, value=math

row_key1 column=info:score, timestamp=1524017895247, value=98

row_key2 column=info:course, timestamp=1524017889154, value=math

row_key2 column=info:score, timestamp=1524017899494, value=90

2 row(s) in 0.0150 seconds
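A scan returns cells sorted by row key and then by column, and the "2 row(s)" footer counts distinct row keys, not the four cells printed. A short Python sketch that reproduces that ordering and count from the cells above; it models only the sort order and the row count, not how a scan is actually executed across regions.

```python
# Cells copied from the scan above as (row, column, timestamp, value), deliberately shuffled.
CELLS = [
    ("row_key2", "info:score", 1524017899494, "90"),
    ("row_key1", "info:score", 1524017895247, "98"),
    ("row_key2", "info:course", 1524017889154, "math"),
    ("row_key1", "info:course", 1524017746124, "math"),
]


def scan_order(cell):
    """Sort key: row key, then column family, then qualifier."""
    row, column, _ts, _value = cell
    family, qualifier = column.split(":", 1)
    # Compare UTF-8 bytes, mirroring HBase's byte-wise lexicographic key order.
    return (row.encode(), family.encode(), qualifier.encode())


ordered = sorted(CELLS, key=scan_order)
for row, column, ts, value in ordered:
    print(f"{row} column={column}, timestamp={ts}, value={value}")

# "2 row(s)" counts distinct row keys, not cells.
row_count = len({row for row, _column, _ts, _value in ordered})
print(f"{row_count} row(s)")
```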

Querying

hbase(main):023:0> get 'test', 'row_key1'

COLUMN CELL

info:course timestamp=1524017746124, value=math

info:score timestamp=1524017895247, value=98

2 row(s) in 0.0260 seconds
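get is a lookup on a single row key and returns every cell of that row, which is why its result matches the row_key1 lines of the earlier scan. A minimal Python sketch of that behaviour over an in-memory list of cells, assuming one version per cell as with VERSIONS => '1'. The optional column argument mirrors the shell form get 'test', 'row_key1', 'info:score'; the get function here is a hypothetical helper, not an HBase API.

```python
# Cells of table test as (row, column, timestamp, value), copied from the scan above.
CELLS = [
    ("row_key1", "info:course", 1524017746124, "math"),
    ("row_key1", "info:score", 1524017895247, "98"),
    ("row_key2", "info:course", 1524017889154, "math"),
    ("row_key2", "info:score", 1524017899494, "90"),
]


def get(cells, row_key, column=None):
    """Return {column: (timestamp, value)} for one row, sorted by column.

    column=None mimics `get 'test', 'row_key1'`; passing a column mimics
    `get 'test', 'row_key1', 'info:score'`.
    """
    result = {
        col: (ts, value)
        for row, col, ts, value in cells
        if row == row_key and (column is None or col == column)
    }
    return dict(sorted(result.items()))


row1 = get(CELLS, "row_key1")
for col, (ts, value) in row1.items():
    print(f"{col} timestamp={ts}, value={value}")
print(get(CELLS, "row_key1", "info:score"))  # {'info:score': (1524017895247, '98')}
```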

hbase(main):024:0>

Note: this time I read the data from a different node, node2:

[root@node2 ~]# /opt/hbase/bin/hbase shell

2018-04-18 10:23:54,026 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.6, rUnknown, Mon May 29 02:25:32 CDT 2017

hbase(main):001:0> scan 'test'

ROW COLUMN+CELL

row_key1 column=info:course, timestamp=1524017746124, value=math

row_key1 column=info:score, timestamp=1524017895247, value=98

row_key2 column=info:course, timestamp=1524017889154, value=math

row_key2 column=info:score, timestamp=1524017899494, value=90

2 row(s) in 0.2680 seconds

hbase(main):002:0>

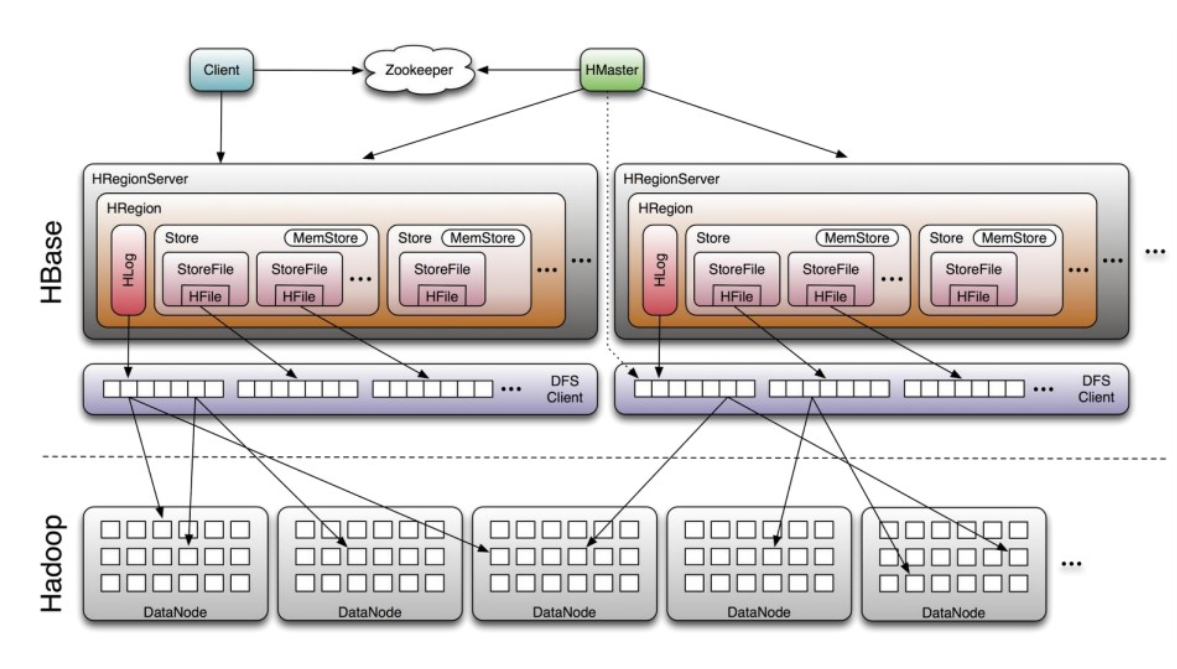

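Because node2 sees exactly the rows that were written from master, this shows that HBase stores its data on HDFS rather than on the local disk of one node, so it can be read and written from multiple nodes, with the data itself distributed across the cluster by HDFS.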

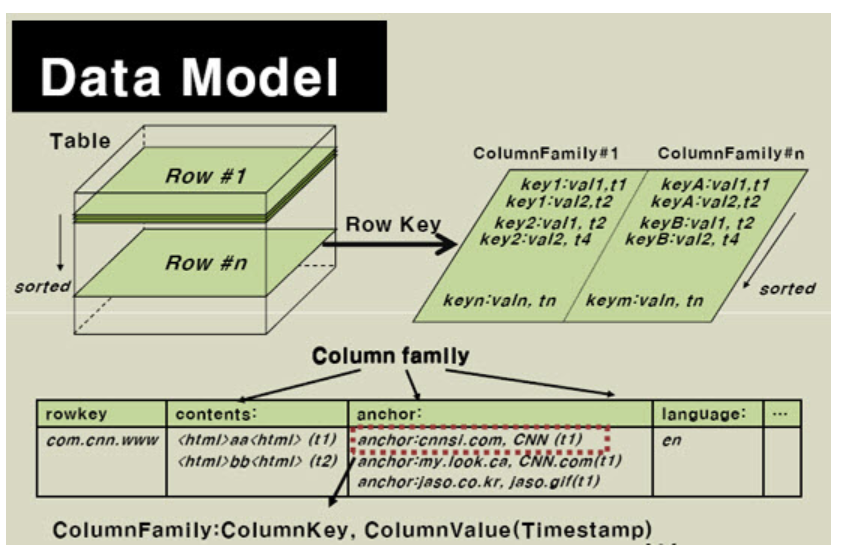

Data model diagram:

[root@master hadoop]# vi /opt/hbase/conf/hbase-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
/**
 *
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
-->
<configuration>
<property>
<name>hbase.rootdir</name>
<value>hdfs://master:9000/hbase/hbase_db</value>
</property>

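The hbase.rootdir value here, hdfs://master:9000/hbase/hbase_db, cannot be found with the ordinary shell ls command, because it is a path inside the HDFS namespace, not a path on the local filesystem.

The local directory where HDFS keeps its data can be configured in hdfs-site.xml, but the data there is stored as blocks, so the HBase directory can only be inspected with HDFS commands.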
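Splitting the hbase.rootdir URI makes this concrete: the hdfs:// scheme names the filesystem, master:9000 is the NameNode address, and the path /hbase/hbase_db only has meaning inside HDFS. A Python sketch that reads the property with the standard library and splits it; the embedded XML is a trimmed copy of the file above, and read_properties is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# The relevant part of hbase-site.xml above (license header omitted).
HBASE_SITE = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase/hbase_db</value>
  </property>
</configuration>
"""


def read_properties(xml_text: str) -> dict:
    """Collect <name>/<value> pairs from a Hadoop-style *-site.xml file."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name").strip(): (prop.findtext("value") or "").strip()
        for prop in root.iter("property")
    }


rootdir = urlparse(read_properties(HBASE_SITE)["hbase.rootdir"])
print(rootdir.scheme)  # hdfs: a filesystem URI, not a local path
print(rootdir.netloc)  # master:9000: the NameNode address
print(rootdir.path)    # /hbase/hbase_db: the path to pass to `hadoop fs -ls`
```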

Checking where HDFS stores its data

[root@master hadoop]# vi /opt/hadoop/etc/hadoop/hdfs-site.xml

<!-- directory where the DataNode stores its data -->

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop/data</value>

</property>

</configuration>

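As the configuration shows, HDFS data is stored on the DataNode servers, so the directory does not exist on master, which is not a DataNode in this cluster: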
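dfs.datanode.data.dir is read only by DataNodes, and it may hold a comma-separated list of local directories, each optionally tagged with a storage type such as [SSD] or written as a file:// URI. A Python sketch that normalises such a value into plain local paths and checks whether they exist on the current machine; datanode_dirs is a hypothetical helper, and the tag and URI handling is a simplified assumption rather than Hadoop's own parser.

```python
import os
import re


def datanode_dirs(value: str) -> list:
    """Split a dfs.datanode.data.dir value into local directory paths.

    Entries are comma-separated; each may carry a storage-type tag such as
    [SSD] and/or a file:// scheme (simplified handling, not Hadoop's parser).
    """
    dirs = []
    for entry in value.split(","):
        entry = re.sub(r"^\[\w+\]", "", entry.strip())  # drop an optional [DISK]/[SSD] tag
        if entry.startswith("file://"):
            entry = entry[len("file://"):]
        if entry:
            dirs.append(entry)
    return dirs


for path in datanode_dirs("/opt/hadoop/data"):
    # In the cluster above this would be True on node1/node2 and False on master.
    print(path, os.path.isdir(path))
```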

[root@master hadoop]# cd /opt/hadoop/data

-bash: cd: /opt/hadoop/data: No such file or directory

[root@node1 current]# cd /opt/hadoop/data

[root@node1 data]# ls

current in_use.lock

[root@node1 data]#

[root@node2 hadoop]# cd /opt/hadoop/data

[root@node2 data]# ls

current in_use.lock

[root@node2 data]#

Checking where HBase stores its data

hadoop fs -ls /hbase/hbase_db

[root@master hadoop]# hadoop fs -ls /hbase/hbase_db (Note: hdfs dfs is the HDFS-specific form of this command and the old hadoop dfs form is deprecated; hadoop fs still works.)

Found 9 items

drwxr-xr-x - root supergroup 0 2018-04-18 10:04 /hbase/hbase_db/.tmp

drwxr-xr-x - root supergroup 0 2018-04-18 11:10 /hbase/hbase_db/MasterProcWALs

drwxr-xr-x - root supergroup 0 2018-04-18 09:32 /hbase/hbase_db/WALs

drwxr-xr-x - root supergroup 0 2018-04-18 10:18 /hbase/hbase_db/archive

drwxr-xr-x - root supergroup 0 2018-04-17 02:59 /hbase/hbase_db/corrupt

drwxr-xr-x - root supergroup 0 2018-04-15 10:29 /hbase/hbase_db/data

-rw-r--r-- 3 root supergroup 42 2018-04-15 10:29 /hbase/hbase_db/hbase.id

-rw-r--r-- 3 root supergroup 7 2018-04-15 10:29 /hbase/hbase_db/hbase.version

drwxr-xr-x - root supergroup 0 2018-04-18 11:43 /hbase/hbase_db/oldWALs
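Each line of hadoop fs -ls output has eight whitespace-separated fields: permissions, replication factor ("-" for directories), owner, group, size in bytes, modification date and time, and path. A Python sketch that parses that format, for example to script checks on the HBase root directory; the field layout is taken from the output above, the sample is an excerpt of it, and LsEntry/parse_ls are hypothetical names.

```python
from typing import NamedTuple


class LsEntry(NamedTuple):
    permissions: str
    replication: str   # "-" for directories, which have no block replicas
    owner: str
    group: str
    size: int
    modified: str
    path: str

    @property
    def is_dir(self) -> bool:
        return self.permissions.startswith("d")


def parse_ls(output: str) -> list:
    """Parse `hadoop fs -ls` output, skipping blank lines and the "Found N items" header."""
    entries = []
    for line in output.splitlines():
        if not line.strip() or line.startswith("Found "):
            continue
        # maxsplit=7 keeps a path that contains spaces in one piece
        perms, repl, owner, group, size, date, time, path = line.split(maxsplit=7)
        entries.append(LsEntry(perms, repl, owner, group, int(size), f"{date} {time}", path))
    return entries


# Excerpt of the listing above.
LISTING = """drwxr-xr-x - root supergroup 0 2018-04-15 10:29 /hbase/hbase_db/data
-rw-r--r-- 3 root supergroup 42 2018-04-15 10:29 /hbase/hbase_db/hbase.id
-rw-r--r-- 3 root supergroup 7 2018-04-15 10:29 /hbase/hbase_db/hbase.version
"""

entries = parse_ls(LISTING)
for e in entries:
    kind = "dir " if e.is_dir else "file"
    print(kind, e.replication, e.size, e.path)
```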

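The data of table test lives under /hbase/hbase_db/data/default/test/ (default is the namespace); each region of the table has its own subdirectory, named by its encoded region name: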

[root@node1 opt]# hadoop fs -ls /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae

Found 4 items

-rw-r--r-- 3 root supergroup 39 2018-04-18 10:05 /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae/.regioninfo

drwxr-xr-x - root supergroup 0 2018-04-18 11:17 /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae/.tmp

drwxr-xr-x - root supergroup 0 2018-04-18 11:17 /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae/info

drwxr-xr-x - root supergroup 0 2018-04-18 10:05 /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae/recovered.edits
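These listings follow a fixed layout: <hbase.rootdir>/data/<namespace>/<table>/<encoded region name>/<column family>/, with the family's store files inside its directory. default is the namespace a table lands in when none is given, and 402710672d039c97b3006531c037d9ae is the encoded name of a region of test. A small Python sketch that builds and decomposes such paths, assuming the HBase 1.x layout seen in these listings; family_dir and describe_path are hypothetical helpers.

```python
import posixpath

KEYS = ("namespace", "table", "region", "family")


def family_dir(root: str, table: str, region: str, family: str, namespace: str = "default") -> str:
    """Build <root>/data/<namespace>/<table>/<encoded region>/<family>."""
    return posixpath.join(root, "data", namespace, table, region, family)


def describe_path(path: str, root: str) -> dict:
    """Map a path under <root>/data back to its namespace/table/region/family parts."""
    parts = posixpath.relpath(path, root).split("/")
    if parts[0] != "data":
        raise ValueError(f"{path} is not under {root}/data")
    return dict(zip(KEYS, parts[1:]))


ROOT = "/hbase/hbase_db"
REGION = "402710672d039c97b3006531c037d9ae"  # encoded region name from the listing above
info_dir = family_dir(ROOT, "test", REGION, "info")
print(info_dir)  # /hbase/hbase_db/data/default/test/402710672d039c97b3006531c037d9ae/info
print(describe_path(info_dir, ROOT))
```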

However, these files cannot be read directly: they are binary (the info family directory holds HBase's HFiles).

To view the data, export the table first and then cat the output:

[root@node1 opt]# /opt/hbase/bin/hbase org.apache.hadoop.hbase.mapreduce.Export test /opt/hbase/testdata2

The output directory /opt/hbase/testdata2 is an HDFS directory, not a local one:

[root@node1 opt]# hdfs dfs -ls /opt/hbase/testdata2

Found 2 items

-rw-r--r-- 2 root supergroup 0 2018-04-18 12:46 /opt/hbase/testdata2/_SUCCESS

-rw-r--r-- 2 root supergroup 322 2018-04-18 12:46 /opt/hbase/testdata2/part-m-00000

[root@node1 opt]# hdfs dfs -cat /opt/hbase/testdata2/part-m-00000

File storage:

Note: Export runs as a MapReduce job, which needs the Hadoop libraries on its classpath, so mapreduce.application.classpath has to be added to mapred-site.xml:

[root@master hadoop]# vi /opt/hadoop/etc/hadoop/mapred-site.xml

# insert the following lines

<property>

<name>mapreduce.application.classpath</name>

<value>

/opt/hadoop/etc/hadoop,

/opt/hadoop/share/hadoop/common/*,

/opt/hadoop/share/hadoop/common/lib/*,

/opt/hadoop/share/hadoop/hdfs/*,

/opt/hadoop/share/hadoop/hdfs/lib/*,

/opt/hadoop/share/hadoop/mapreduce/*,

/opt/hadoop/share/hadoop/mapreduce/lib/*,

/opt/hadoop/share/hadoop/yarn/*,

/opt/hadoop/share/hadoop/yarn/lib/*

</value>

</property>
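The nine classpath entries follow one pattern: the configuration directory, plus <module>/* and <module>/lib/* for each of the common, hdfs, mapreduce and yarn modules under /opt/hadoop/share/hadoop. A Python sketch that generates the same property for a different install prefix; mapreduce_classpath and property_xml are hypothetical helper names, and the output places the entries on one comma-separated line instead of one per line.

```python
import posixpath
import xml.etree.ElementTree as ET

# Module directories under $HADOOP_HOME/share/hadoop, as listed in the snippet above.
MODULES = ("common", "hdfs", "mapreduce", "yarn")


def mapreduce_classpath(hadoop_home: str) -> str:
    """Build the mapreduce.application.classpath value for a given Hadoop install."""
    entries = [posixpath.join(hadoop_home, "etc", "hadoop")]
    for module in MODULES:
        base = posixpath.join(hadoop_home, "share", "hadoop", module)
        entries += [base + "/*", base + "/lib/*"]
    return ",".join(entries)


def property_xml(name: str, value: str) -> str:
    """Render one <property> element for a *-site.xml file."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value
    return ET.tostring(prop, encoding="unicode")


print(property_xml("mapreduce.application.classpath", mapreduce_classpath("/opt/hadoop")))
```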

(To be continued)