Troubleshooting note: Sqoop sync from MySQL to Hive runs too slowly

Background: HDP 3.1.4 managed by Ambari 2.7.4.

The YARN logs showed an error, although the job still ran to completion.

The error was as follows:

2021-04-14 17:29:40,131 ERROR [Job ATS Event Dispatcher] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Exception while publishing configs on JOB_SUBMITTED Event for the job : job_1618388791260_0003

org.apache.hadoop.yarn.exceptions.YarnException: Failed while publishing entity

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$TimelineEntityDispatcher.dispatchEntities(TimelineV2ClientImpl.java:548)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl.putEntities(TimelineV2ClientImpl.java:149)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler.publishConfigsOnJobSubmittedEvent(JobHistoryEventHandler.java:1254)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler.processEventForNewTimelineService(JobHistoryEventHandler.java:1414)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler.handleTimelineEvent(JobHistoryEventHandler.java:742)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler.access$1200(JobHistoryEventHandler.java:93)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler$ForwardingEventHandler.handle(JobHistoryEventHandler.java:1795)

at org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler$ForwardingEventHandler.handle(JobHistoryEventHandler.java:1791)

at org.apache.hadoop.yarn.event.AsyncDispatcher.dispatch(AsyncDispatcher.java:197)

at org.apache.hadoop.yarn.event.AsyncDispatcher$1.run(AsyncDispatcher.java:126)

at java.lang.Thread.run(Thread.java:748)

Caused by: com.sun.jersey.api.client.ClientHandlerException: java.net.SocketTimeoutException: Read timed out

at com.sun.jersey.client.urlconnection.URLConnectionClientHandler.handle(URLConnectionClientHandler.java:155)

at com.sun.jersey.api.client.Client.handle(Client.java:652)

at com.sun.jersey.api.client.WebResource.handle(WebResource.java:682)

at com.sun.jersey.api.client.WebResource.access$200(WebResource.java:74)

at com.sun.jersey.api.client.WebResource$Builder.put(WebResource.java:539)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl.doPutObjects(TimelineV2ClientImpl.java:291)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl.access$000(TimelineV2ClientImpl.java:66)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$1.run(TimelineV2ClientImpl.java:302)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$1.run(TimelineV2ClientImpl.java:299)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl.putObjects(TimelineV2ClientImpl.java:299)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl.putObjects(TimelineV2ClientImpl.java:251)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$EntitiesHolder$1.call(TimelineV2ClientImpl.java:374)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$EntitiesHolder$1.call(TimelineV2ClientImpl.java:367)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$TimelineEntityDispatcher$1.publishWithoutBlockingOnQueue(TimelineV2ClientImpl.java:495)

at org.apache.hadoop.yarn.client.api.impl.TimelineV2ClientImpl$TimelineEntityDispatcher$1.run(TimelineV2ClientImpl.java:433)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more

Caused by: java.net.SocketTimeoutException: Read timed out

at java.net.SocketInputStream.socketRead0(Native Method)

at java.net.SocketInputStream.socketRead(SocketInputStream.java:116)

at java.net.SocketInputStream.read(SocketInputStream.java:171)

at java.net.SocketInputStream.read(SocketInputStream.java:141)

at java.io.BufferedInputStream.fill(BufferedInputStream.java:246)

Fix: this happens because the embedded HBase used by ATSv2 (YARN Timeline Service v2) has crashed. The ATSv2 embedded HBase database needs to be reset:

- Stop the YARN service: Ambari -> YARN -> Actions -> Stop

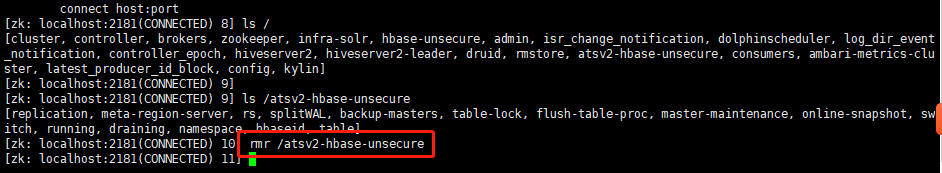

- Delete the ATSv2 znode in ZooKeeper

[root@ ~]# cd /usr/hdp/3.1.4.0-315/zookeeper/bin

[root@ bin]# ./zkCli.sh

......

[zk: localhost:2181(CONNECTED) 1] rmr /atsv2-hbase-unsecure

- Delete the HBase data under the timeline service directory on HDFS

hdfs dfs -rm -r /atsv2/hbase

- Start the YARN service: Ambari -> YARN -> Actions -> Start
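The two command-line steps above (removing the znode and the HDFS data) could be collected into a small script. This is only a sketch under assumptions: the paths and znode name are the ones from this post, `run_step` and `reset_atsv2_hbase` are hypothetical helper names, and the script assumes YARN has already been stopped in Ambari. It defaults to a dry run that only prints the commands; set `DRY_RUN=0` to actually execute them.

```shell
#!/bin/sh
# Sketch: reset the ATSv2 embedded HBase state.
# Assumes YARN has already been stopped through Ambari.
set -eu

# Values taken from this post; adjust for your cluster.
ZK_CLI="${ZK_CLI:-/usr/hdp/3.1.4.0-315/zookeeper/bin/zkCli.sh}"
ZK_SERVER="${ZK_SERVER:-localhost:2181}"
ATS_ZNODE="${ATS_ZNODE:-/atsv2-hbase-unsecure}"
ATS_HDFS_DIR="${ATS_HDFS_DIR:-/atsv2/hbase}"

# run_step (hypothetical helper): print the command,
# and execute it only when DRY_RUN=0.
run_step() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

reset_atsv2_hbase() {
  # Remove the ATSv2 znode (rmr is the recursive delete used in this post).
  run_step "$ZK_CLI" -server "$ZK_SERVER" rmr "$ATS_ZNODE"
  # Remove the embedded HBase data on HDFS.
  run_step hdfs dfs -rm -r "$ATS_HDFS_DIR"
}

reset_atsv2_hbase
```

Both deletions are destructive, which is why the dry run is the default: review the printed commands first, then rerun with `DRY_RUN=0`.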

Running the job again after the reset: it was roughly 20 times faster.

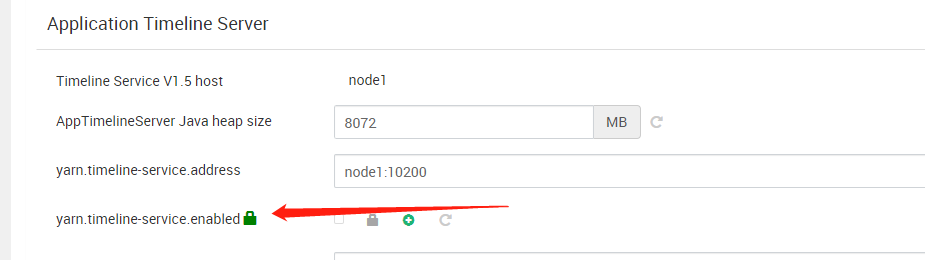

Option 2: simply disable the YARN timeline service.
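Cluster-wide, this would normally mean setting `yarn.timeline-service.enabled` to `false` in the YARN configuration in Ambari and restarting YARN. As a hedged per-job alternative, the sketch below passes Hadoop properties to Sqoop with `-D`. It assumes that overriding `yarn.timeline-service.enabled` and `mapreduce.job.emit-timeline-data` at submission time is enough to stop the MapReduce job from publishing to ATSv2, which was not verified in this post. The JDBC URL, user, and table are hypothetical placeholders, and `sqoop_import_no_timeline` is a made-up helper name. Like a dry run, it only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: run a Sqoop import with timeline publishing switched off for this job only.
set -eu

# Hypothetical placeholders; none of these values come from the post.
JDBC_URL="${JDBC_URL:-jdbc:mysql://mysql-host:3306/demo_db}"
DB_USER="${DB_USER:-demo_user}"
TABLE="${TABLE:-demo_table}"

sqoop_import_no_timeline() {
  # Generic Hadoop -D options must come directly after the tool name ("import").
  set -- sqoop import \
    -D yarn.timeline-service.enabled=false \
    -D mapreduce.job.emit-timeline-data=false \
    --connect "$JDBC_URL" --username "$DB_USER" -P \
    --table "$TABLE" --hive-import
  echo "+ $*"
  # Execute only when explicitly requested.
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

sqoop_import_no_timeline
```

The per-job form leaves the timeline service available to other applications, at the cost of having to remember the flags on every import.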