Source: http://www.cnblogs.com/justinzhang/p/4261851.html

This document comes from my Evernote notes. When I was still at Baidu, I had a complete Hadoop development/debug environment, but at that time I was tired of writing blog posts. It took me two days of spare time to get back to where I had stopped. I hope the blog will keep going. I still cherish the time spent there: when you do the same thing at a different time and in a different place (company), the things are still there, but the people are no longer the same. Enough talk, let's go on.

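In the article "Hadoop cluster setup" (Hadoop集群搭建), a Hadoop cluster for development and testing was already set up. This article describes how to use Eclipse as the development environment for developing and testing programs against that cluster.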

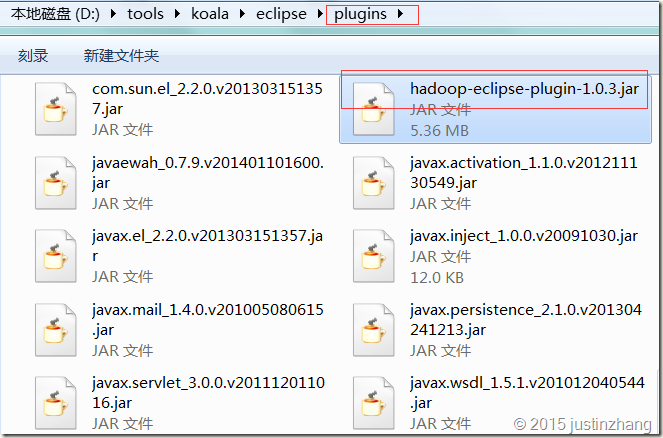

1.) Download the Eclipse plugin hadoop-eclipse-plugin-1.0.3.jar from http://download.csdn.net/detail/uestczhangchao/8409179. This article uses Eclipse Java EE IDE for Web Developers, Version: Luna Release (4.4.0), as the IDE. Put the downloaded hadoop-eclipse-plugin-1.0.3.jar file into Eclipse's plugins directory (for MyEclipse, put it into the D:\program_files\MyEclipse\MyEclipse 10\dropins\svn\plugins directory).

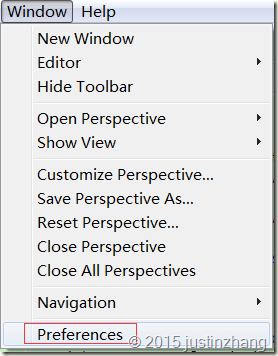

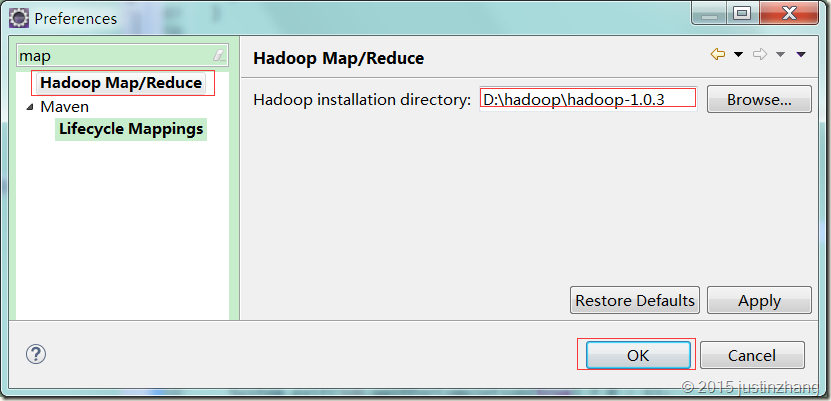

2.) In Eclipse, open Window -> Preferences, select Hadoop Map/Reduce, and set the Hadoop installation directory. Here I used a copy of /home/hadoop/hadoop-1.0.3 taken directly from the Linux machine. Click OK:

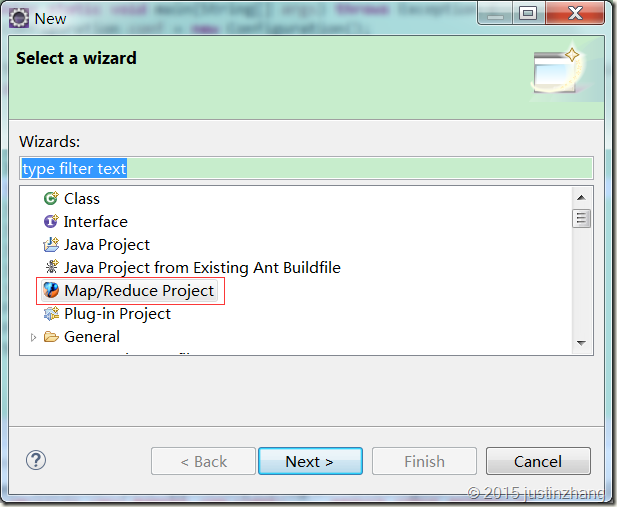

3.) Create a new Map/Reduce Project:

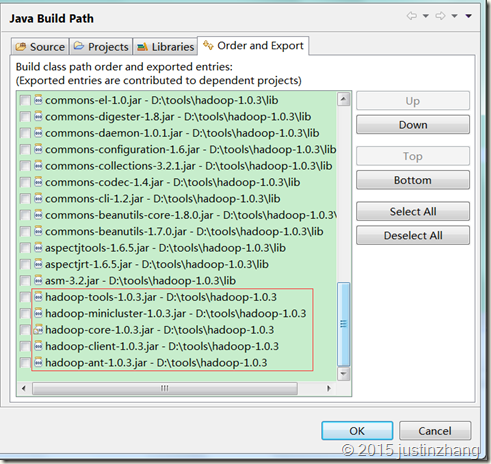

4.) After the Map/Reduce Project is created, two entries appear: DFS Locations and a Java project named suse. The Hadoop JARs are added to the Java project as dependencies automatically:

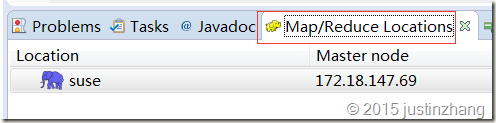

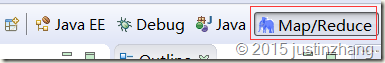

5.) Projects created with this plugin have a dedicated view that corresponds to them:

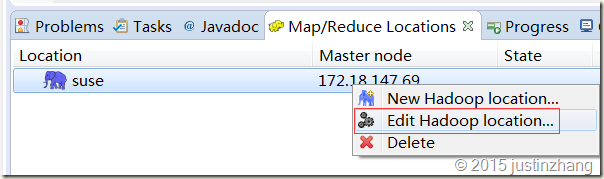

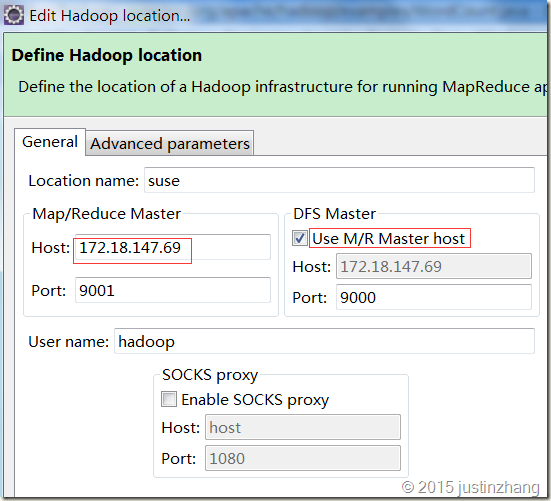

6.) In Map/Reduce Locations, choose Edit Hadoop Location… and configure the Map/Reduce Master and the DFS Master:

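7.) In Advanced parameters, set the Hadoop configuration options. Set dfs.data.dir to the same value as in the Linux environment; in general, set every path-related option in Advanced parameters to the corresponding Linux path: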

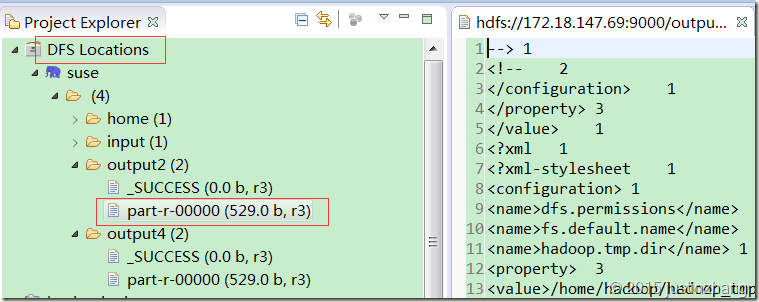
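To make the "use Linux paths everywhere" rule concrete, here is a minimal plain-Java sketch (it does not use the Hadoop API) that scans a set of settings and reports directory options that are not absolute Linux paths. Only dfs.data.dir comes from the text above; the other keys are Hadoop 1.x option names chosen for illustration, and every host name, port, and path value is a hypothetical placeholder.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LocationSettingsSketch {

    // Returns the keys of directory-style settings (names ending in ".dir")
    // whose value is not an absolute Linux path.
    static List<String> nonLinuxPathKeys(Map<String, String> settings) {
        List<String> bad = new ArrayList<>();
        for (Map.Entry<String, String> entry : settings.entrySet()) {
            boolean isPathSetting = entry.getKey().endsWith(".dir");
            if (isPathSetting && !entry.getValue().startsWith("/")) {
                bad.add(entry.getKey());
            }
        }
        return bad;
    }

    // Hypothetical example values; replace them with the cluster's real ones.
    static Map<String, String> exampleSettings() {
        Map<String, String> settings = new LinkedHashMap<>();
        settings.put("fs.default.name", "hdfs://namenode-host:9000");
        settings.put("mapred.job.tracker", "namenode-host:9001");
        settings.put("dfs.data.dir", "/home/hadoop/hadoop-data/data");
        // A Windows-style value left over by mistake, to show what gets flagged.
        settings.put("hadoop.tmp.dir", "C:\\tmp\\hadoop");
        return settings;
    }

    public static void main(String[] args) {
        System.out.println("Settings to fix: " + nonLinuxPathKeys(exampleSettings()));
    }
}
```

With the example values above, only hadoop.tmp.dir is reported, because it is the one directory option still pointing at a Windows path.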

8.) Once the Hadoop cluster settings are in place, the files on the Hadoop cluster are visible under DFS Locations, where they can be added and deleted:

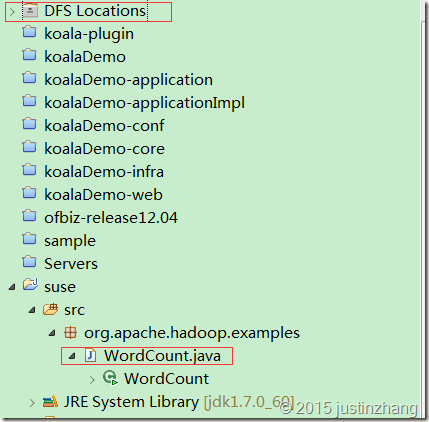

9.) Add a Map/Reduce program to the generated Java project. Here I added a WordCount program as a test:

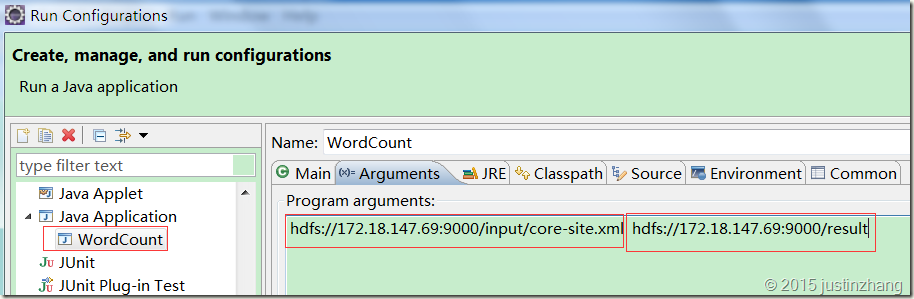
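For readers who have not seen WordCount before, the logic it performs can be sketched in plain Java without any Hadoop classes. This is only a single-process stand-in for the idea (a "map" step that splits lines into words and a "reduce" step that sums per word), not the program shown in the screenshot; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

class WordCountSketch {

    // "Map" step: split one input line on whitespace and emit each token.
    static List<String> mapLine(String line) {
        List<String> words = new ArrayList<>();
        StringTokenizer tokens = new StringTokenizer(line);
        while (tokens.hasMoreTokens()) {
            words.add(tokens.nextToken());
        }
        return words;
    }

    // "Reduce" step: sum the occurrences of each word.
    // A TreeMap keeps the keys sorted, similar to how job output is ordered by key.
    static Map<String, Integer> countWords(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : mapLine(line)) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello hadoop", "hello eclipse");
        for (Map.Entry<String, Integer> entry : countWords(lines).entrySet()) {
            System.out.println(entry.getKey() + "\t" + entry.getValue());
        }
    }
}
```

On the cluster, the two steps run as separate mapper and reducer tasks spread across nodes; the sketch only shows what is being computed.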

10.) In the Java project's Run Configurations, set the Arguments for WordCount. The first argument is the HDFS path of the input file and the second is the HDFS output path:

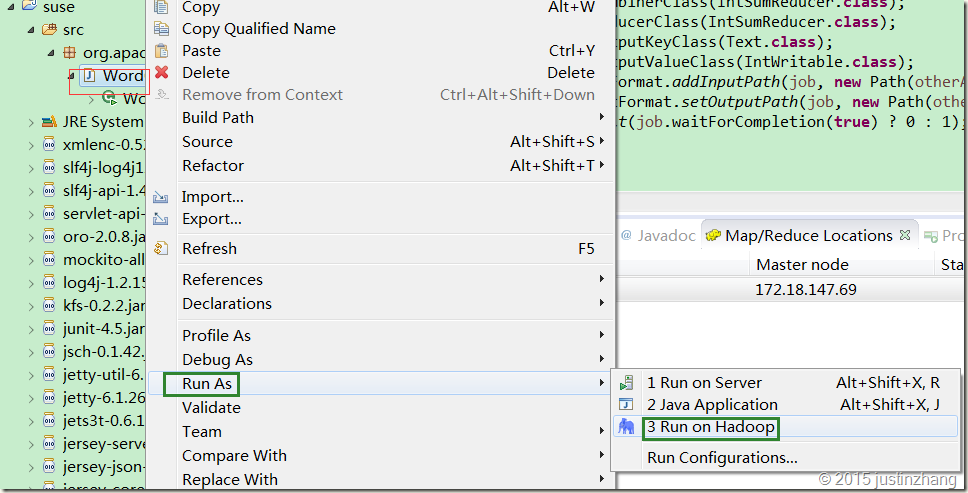
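The two-argument convention can be illustrated with a small plain-Java sketch that validates the arguments before a job would be submitted. The class name and the example HDFS URIs (host, port, and directories) are hypothetical placeholders, not the values used in the screenshot.

```java
class WordCountArgsSketch {
    final String inputPath;
    final String outputPath;

    private WordCountArgsSketch(String inputPath, String outputPath) {
        this.inputPath = inputPath;
        this.outputPath = outputPath;
    }

    // Expects exactly two arguments: <in> (HDFS input) and <out> (HDFS output).
    static WordCountArgsSketch parse(String[] args) {
        if (args.length != 2) {
            throw new IllegalArgumentException("Usage: wordcount <in> <out>");
        }
        if (args[0].equals(args[1])) {
            throw new IllegalArgumentException("Input and output paths must differ");
        }
        return new WordCountArgsSketch(args[0], args[1]);
    }

    public static void main(String[] args) {
        // Hypothetical values of the kind entered in the Arguments tab.
        String[] example = {
            "hdfs://namenode-host:9000/user/hadoop/input",
            "hdfs://namenode-host:9000/user/hadoop/result"
        };
        WordCountArgsSketch parsed = parse(example);
        System.out.println("input  = " + parsed.inputPath);
        System.out.println("output = " + parsed.outputPath);
    }
}
```

Note that a MapReduce job normally refuses to start if the output directory already exists, so the second argument should point at a directory that has not been created yet.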

11.) After setting up the Run Configuration for WordCount, choose Run As -> Run on Hadoop:

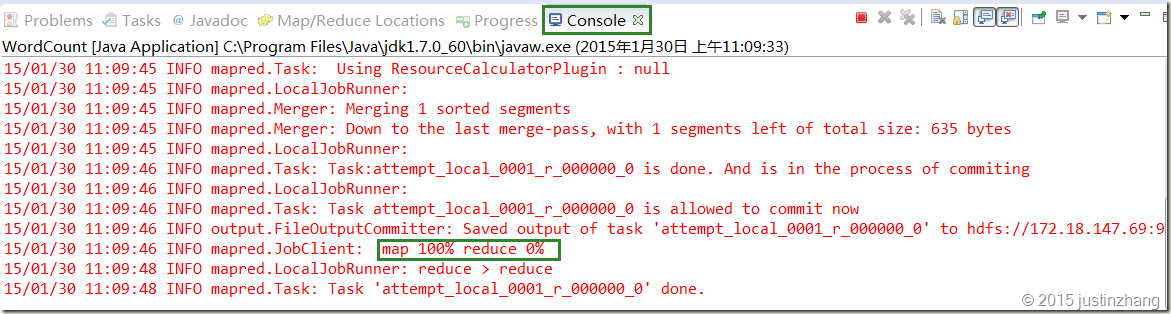

12.) The Console shows the log output of the WordCount run:

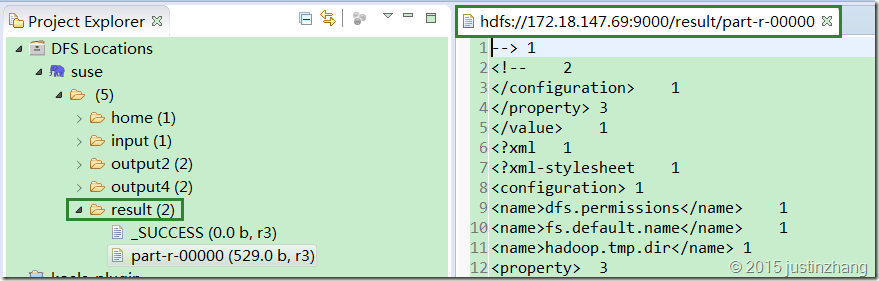

13.) Under DFS Locations you can see the results that WordCount generated in the result directory:

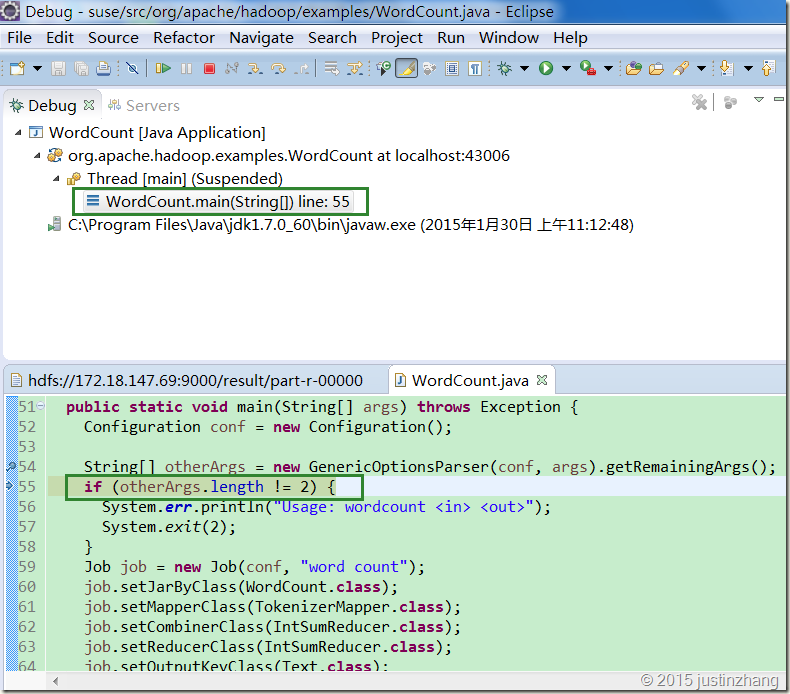
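If you copy a result file out of the result directory and want to check it programmatically, a plain-Java sketch like the one below may help. It assumes the default text output layout of one `word<TAB>count` pair per line; the class name and the sample lines are hypothetical, and no Hadoop classes are involved.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ResultFileSketch {

    // Parses lines of the form "<word>\t<count>" into a map, preserving file order.
    static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.isEmpty()) {
                continue; // skip blank lines
            }
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                throw new IllegalArgumentException("Expected <word>TAB<count>: " + line);
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Sample lines standing in for the content of a downloaded result file.
        List<String> sample = Arrays.asList("eclipse\t1", "hadoop\t1", "hello\t2");
        System.out.println(parse(sample));
    }
}
```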

14.) To debug the WordCount program, set a breakpoint in WordCount.java and click the Debug button:

At this point, the Hadoop + Eclipse development environment is complete.

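15.) Handling errors during setup. The trickiest problem I ran into while setting up the environment is shown below: it reports that the Windows user does not have permission. How to handle this exception is covered in the article "Modifying hadoop FileUtil.java to solve the permission check problem" (修改hadoop FileUtil.java,解决权限检查的问题); the fix requires modifying the Hadoop source code and recompiling: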

15/01/30 10:08:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/01/30 10:08:17 ERROR security.UserGroupInformation: PriviledgedActionException as:zhangchao3 cause:java.io.IOException: Failed to set permissions of path: \tmp\hadoop-zhangchao3\mapred\staging\zhangchao3502228304\.staging to 0700

Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-zhangchao3\mapred\staging\zhangchao3502228304\.staging to 0700

at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:689)

at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:662)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:509)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:344)

at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:189)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:116)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:856)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:850)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1121)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:850)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:500)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:530)

at org.apache.hadoop.examples.WordCount.main(WordCount.java:68)
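The top frames of the trace (FileUtil.setPermission calling FileUtil.checkReturnValue) suggest that the exception is raised when a permission-setting call reports failure. The sketch below is a simplified plain-Java stand-in for that kind of check, not Hadoop's actual source. The lenient variant, which logs a warning instead of throwing, is an assumption about what a patched FileUtil.java might do; the real change is described in the separate article mentioned above.

```java
import java.io.IOException;

class PermissionCheckSketch {

    // Builds a message in the same shape as the one in the log above.
    static String message(String path, int mode) {
        return "Failed to set permissions of path: " + path
                + " to " + String.format("%04o", mode);
    }

    // Strict variant: a failed permission call aborts with an IOException.
    static void checkStrict(boolean succeeded, String path, int mode) throws IOException {
        if (!succeeded) {
            throw new IOException(message(path, mode));
        }
    }

    // Lenient variant (assumption): report the failure and keep going,
    // which is acceptable only on a local development machine.
    static boolean checkLenient(boolean succeeded, String path, int mode) {
        if (!succeeded) {
            System.err.println("WARN " + message(path, mode));
        }
        return succeeded;
    }

    public static void main(String[] args) {
        // "staging-dir" is a placeholder path; 0700 is an octal literal.
        checkLenient(false, "staging-dir", 0700);
        try {
            checkStrict(false, "staging-dir", 0700);
        } catch (IOException e) {
            System.out.println("Strict check threw: " + e.getMessage());
        }
    }
}
```

Relaxing the check this way hides real permission problems, so it is best limited to the Windows client used for development and never applied to the cluster nodes.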