Re-joining a node to a K8s cluster

1. Reset the node environment (run on the slave node)

    [root@node2 ~]# kubeadm reset
    [reset] WARNING: changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.
    [reset] are you sure you want to proceed? [y/N]: y
    [preflight] running pre-flight checks
    [reset] stopping the kubelet service
    [reset] unmounting mounted directories in "/var/lib/kubelet"
    [reset] removing kubernetes-managed containers
    [reset] cleaning up running containers using crictl with socket /var/run/dockershim.sock
    [reset] failed to list running pods using crictl: exit status 1. Trying to use docker instead
    [reset] no etcd manifest found in "/etc/kubernetes/manifests/etcd.yaml". Assuming external etcd
    [reset] deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]
    [reset] deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
    [reset] deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

2. Disable swap on the slave node

    [root@node3 ~]# swapoff -a
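Note that `swapoff -a` only lasts until the next reboot, and by default the kubelet refuses to start while swap is enabled. A minimal sketch of making the change persistent by commenting out the swap entries in an fstab-style file follows. The function name `disable_swap_entries` is hypothetical, and the file path is passed as an argument so the function can be tried on a copy first.

```shell
#!/usr/bin/env bash
# disable_swap_entries is a hypothetical helper name, not a standard tool.
# It comments out every active swap entry in the fstab-style file given as $1.
disable_swap_entries() {
    local fstab="$1"
    # Skip lines that are already comments; on the remaining lines, prefix '#'
    # when the line contains "swap" as a whitespace-delimited field.
    # A backup of the original file is written next to it with a .bak suffix.
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage on the node (not executed here):
#   disable_swap_entries /etc/fstab
#   free -m        # the Swap line should show 0 total after swapoff -a
```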

Handling an expired token and recomputing the CA certificate hash

1. Run on the master

    [root@k8s-master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
    5a949445e86e76a060f6c3a6c2f571df4361085387cde7068493b418d484bb14
    [root@k8s-master ~]# kubeadm token create
    lsv2rv.7q0or3flbjne8s0k
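The two values above (the CA hash and the new token) are what the join command on the slave needs. kubeadm can also print the complete command in one step with `kubeadm token create --print-join-command`. As a rough sketch of how the pieces fit together, the hypothetical helper `build_join_command` below assembles the command line and rejects values that are obviously malformed. The format checks are assumptions based on the values shown in this transcript (a 6.16 character token and a 64-character hex hash).

```shell
#!/usr/bin/env bash
# build_join_command is a hypothetical helper: it assembles the kubeadm join
# command from the API server endpoint, a bootstrap token and the CA hash.
build_join_command() {
    local endpoint="$1" token="$2" ca_hash="$3"
    # Bootstrap tokens look like abcdef.0123456789abcdef (6 chars, dot, 16 chars).
    local token_re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
    # The discovery hash is a hex-encoded SHA-256 digest (64 hex characters).
    local hash_re='^[0-9a-f]{64}$'

    if [[ ! $token =~ $token_re ]]; then
        echo "invalid token format: $token" >&2
        return 1
    fi
    if [[ ! $ca_hash =~ $hash_re ]]; then
        echo "invalid CA hash: $ca_hash" >&2
        return 1
    fi
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$ca_hash"
}

# Usage (values taken from the transcript above):
#   build_join_command 192.168.11.141:6443 lsv2rv.7q0or3flbjne8s0k \
#       5a949445e86e76a060f6c3a6c2f571df4361085387cde7068493b418d484bb14
```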

2. Run on the slave

    [root@node2 ~]# kubeadm join 192.168.11.141:6443 --token lsv2rv.7q0or3flbjne8s0k --discovery-token-ca-cert-hash sha256:5a949445e86e76a060f6c3a6c2f571df4361085387cde7068493b418d484bb14
    [preflight] running pre-flight checks
    [WARNING RequiredIPVSKernelModulesAvailable]: the IPVS proxier will not be used, because the following required kernel modules are not loaded: [ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh] or no builtin kernel ipvs support: map[ip_vs_wrr:{} ip_vs_sh:{} nf_conntrack_ipv4:{} ip_vs:{} ip_vs_rr:{}]
    you can solve this problem with following methods:
    1. Run 'modprobe -- ' to load missing kernel modules;
    2. Provide the missing builtin kernel ipvs support
    I0517 23:38:53.384827 2531 kernel_validator.go:81] Validating kernel version
    I0517 23:38:53.384903 2531 kernel_validator.go:96] Validating kernel config
    [WARNING Hostname]: hostname "node2" could not be reached
    [WARNING Hostname]: hostname "node2" lookup node2 on 192.168.11.2:53: no such host
    [discovery] Trying to connect to API Server "192.168.11.141:6443"
    [discovery] Created cluster-info discovery client, requesting info from "https://192.168.11.141:6443"
    [discovery] Requesting info from "https://192.168.11.141:6443" again to validate TLS against the pinned public key
    [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.11.141:6443"
    [discovery] Successfully established connection with API Server "192.168.11.141:6443"
    [kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.11" ConfigMap in the kube-system namespace
    [kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [preflight] Activating the kubelet service
    [tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
    [patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "node2" as an annotation
    This node has joined the cluster:
    * Certificate signing request was sent to master and a response was received.
    * The Kubelet was informed of the new secure connection details.
    Run 'kubectl get nodes' on the master to see this node join the cluster.

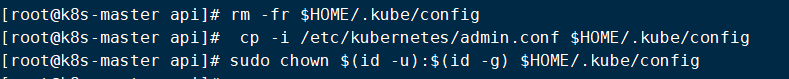
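To check the result from a script rather than by eye, the output of `kubectl get nodes --no-headers` can be filtered. The sketch below assumes the usual column order (node name first, status second); `node_is_ready` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# node_is_ready is a hypothetical helper. It reads the output of
# `kubectl get nodes --no-headers` on stdin and succeeds only if the node
# named in $1 is listed with a status that starts with "Ready".
node_is_ready() {
    local node="$1"
    awk -v n="$node" '
        $1 == n {
            # The status column may be a list such as "Ready,SchedulingDisabled".
            split($2, parts, ",")
            if (parts[1] == "Ready") found = 1
        }
        END { exit !found }
    '
}

# Usage on the master (not executed here):
#   kubectl get nodes --no-headers | node_is_ready node2 && echo "node2 is Ready"
```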

3. kubectl credentials expired (refresh the kubeconfig)

    # rm -fr $HOME/.kube/config
    # cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    # sudo chown $(id -u):$(id -g) $HOME/.kube/config
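A slightly more cautious variant keeps the old kubeconfig as a backup instead of deleting it. This is only a sketch: `refresh_kubeconfig` is a hypothetical helper, its default paths are the ones used above, and it leaves out the `chown` step, which is still needed when the copy is made as root for another user.

```shell
#!/usr/bin/env bash
# refresh_kubeconfig is a hypothetical helper. It replaces the kubeconfig at
# $2 with the admin config at $1, keeping the previous file as <dst>.bak.
refresh_kubeconfig() {
    local src="${1:-/etc/kubernetes/admin.conf}"
    local dst="${2:-$HOME/.kube/config}"

    mkdir -p "$(dirname "$dst")"
    # Keep the stale config around instead of removing it outright.
    if [ -f "$dst" ]; then
        mv "$dst" "$dst.bak"
    fi
    cp "$src" "$dst"
    # The file contains cluster-admin credentials, so restrict access to it.
    chmod 600 "$dst"
}

# Usage on the master (not executed here):
#   refresh_kubeconfig
```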

k8s command examples

Creating and deleting pods

    [root@k8s-master ~]# kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=2
    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS    RESTARTS   AGE    IP            NODE
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d   10.244.1.11   node2
    nginx-deploy-5b595999-sdfcz   1/1     Running   0          226d   10.244.1.10   node2
    [root@node2 ~]# curl 10.244.1.11
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    [root@k8s-master ~]# kubectl delete pods nginx-deploy-5b595999-sdfcz
    pod "nginx-deploy-5b595999-sdfcz" deleted
    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS              RESTARTS   AGE    IP            NODE
    nginx-deploy-5b595999-d7rpg   1/1     Running             0          226d   10.244.1.11   node2
    nginx-deploy-5b595999-s4cjv   0/1     ContainerCreating   0          4s     <none>        node3
    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS    RESTARTS   AGE    IP            NODE
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d   10.244.1.11   node2
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          25s    10.244.2.5    node3
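The deleted pod is replaced immediately because `kubectl run` with `--replicas=2` created a Deployment here, and the Deployment keeps two replicas running. To my knowledge, newer kubectl releases create only a single pod with `kubectl run` and no longer accept `--replicas`, so the same setup is usually expressed as a Deployment manifest. A minimal sketch, assuming the `apps/v1` API and an `app` label key chosen purely for illustration (`render_deployment` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# render_deployment is a hypothetical helper that prints a Deployment manifest.
# The "app" label key is an assumption made for this sketch; the pods created
# by kubectl run in the transcript above may be labelled differently.
render_deployment() {
    local name="$1" image="$2" replicas="$3" port="$4"
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        ports:
        - containerPort: ${port}
EOF
}

# Usage on the master (not executed here):
#   render_deployment nginx-deploy nginx:1.14-alpine 2 80 | kubectl apply -f -
```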

Managing services

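A service gives a set of pods a stable access endpoint, so clients do not depend on individual pod IPs, which change whenever a pod is recreated.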

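A service address is a virtual IP implemented with iptables or ipvs rules. It generally cannot be pinged directly, but the service behind it can still be reached through that address and port.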

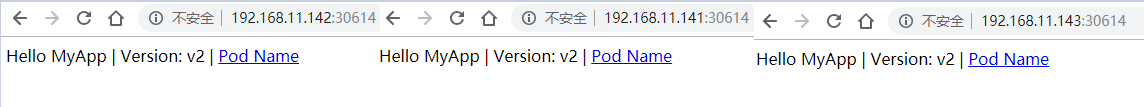

    [root@k8s-master ~]# kubectl run myapp --image=ikubernetes/myapp:v1 --replicas=2
    deployment.apps/myapp created
    [root@k8s-master ~]# kubectl get deployment
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    myapp          2         2         2            0           14s
    nginx-deploy   2         2         2            2           226d
    [root@k8s-master ~]# kubectl get deployment -w
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    myapp          2         2         2            0           23s
    nginx-deploy   2         2         2            2           226d
    myapp          2         2         2            1           55s
    myapp          2         2         2            2           1m
    ^C
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    myapp-848b5b879b-9kd6s        1/1     Running   0          1m
    myapp-848b5b879b-dvtgn        1/1     Running   0          1m
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          56m
    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS    RESTARTS   AGE    IP            NODE
    myapp-848b5b879b-9kd6s        1/1     Running   0          1m     10.244.2.6    node3
    myapp-848b5b879b-dvtgn        1/1     Running   0          1m     10.244.1.12   node2
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d   10.244.1.11   node2
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          57m    10.244.2.5    node3
    [root@k8s-master ~]# wget -O - -q 10.244.2.6
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master ~]# kubectl expose deployment myapp --name=myapp --port=80
    service/myapp exposed
    [root@k8s-master ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP   226d
    myapp        ClusterIP   10.101.171.64    <none>        80/TCP    8s
    nginx        ClusterIP   10.106.232.252   <none>        80/TCP    6m
    [root@k8s-master ~]# kubectl scale --replicas=5 deployment=myapp
    error: resource(s) were provided, but no name, label selector, or --all flag specified
    [root@k8s-master ~]# kubectl scale --replicas=5 deployment myapp
    deployment.extensions/myapp scaled
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS              RESTARTS   AGE
    client                        1/1     Running             0          5m
    myapp-848b5b879b-5vqx5        0/1     ContainerCreating   0          12s
    myapp-848b5b879b-98pht        0/1     ContainerCreating   0          12s
    myapp-848b5b879b-9kd6s        1/1     Running             0          10m
    myapp-848b5b879b-dvtgn        1/1     Running             0          10m
    myapp-848b5b879b-s6bjh        0/1     ContainerCreating   0          12s
    nginx-deploy-5b595999-d7rpg   1/1     Running             0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running             0          1h
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    client                        1/1     Running   0          5m
    myapp-848b5b879b-5vqx5        1/1     Running   0          15s
    myapp-848b5b879b-98pht        1/1     Running   0          15s
    myapp-848b5b879b-9kd6s        1/1     Running   0          10m
    myapp-848b5b879b-dvtgn        1/1     Running   0          10m
    myapp-848b5b879b-s6bjh        1/1     Running   0          15s
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          1h
    [root@k8s-master ~]# kubectl scale --replicas=3 deployment myapp
    deployment.extensions/myapp scaled
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    client                        1/1     Running   0          5m
    myapp-848b5b879b-5vqx5        1/1     Running   0          30s
    myapp-848b5b879b-9kd6s        1/1     Running   0          10m
    myapp-848b5b879b-dvtgn        1/1     Running   0          10m
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          1h
    [root@k8s-master ~]# kubectl set image deployment myapp myapp=ikubernetes/myapp:v2
    deployment.extensions/myapp image updated
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS              RESTARTS   AGE
    client                        1/1     Running             0          9m
    myapp-74c94dcb8c-mjjpw        0/1     ContainerCreating   0          14s
    myapp-848b5b879b-5vqx5        1/1     Running             0          4m
    myapp-848b5b879b-9kd6s        1/1     Running             0          14m
    myapp-848b5b879b-dvtgn        1/1     Running             0          14m
    nginx-deploy-5b595999-d7rpg   1/1     Running             0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running             0          1h
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS              RESTARTS   AGE
    client                        1/1     Running             0          10m
    myapp-74c94dcb8c-l2j8v        0/1     ContainerCreating   0          32s
    myapp-74c94dcb8c-mjjpw        1/1     Running             0          1m
    myapp-848b5b879b-9kd6s        1/1     Running             0          15m
    myapp-848b5b879b-dvtgn        1/1     Running             0          15m
    nginx-deploy-5b595999-d7rpg   1/1     Running             0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running             0          1h
    [root@k8s-master ~]# kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    client                        1/1     Running   0          11m
    myapp-74c94dcb8c-dhhln        1/1     Running   0          1m
    myapp-74c94dcb8c-l2j8v        1/1     Running   0          1m
    myapp-74c94dcb8c-mjjpw        1/1     Running   0          2m
    nginx-deploy-5b595999-d7rpg   1/1     Running   0          226d
    nginx-deploy-5b595999-s4cjv   1/1     Running   0          1h
    [root@k8s-master ~]# kubectl edit svc myapp
    (in the editor, change the service's type from ClusterIP to NodePort)
    service/myapp edited
    [root@k8s-master ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        226d
    myapp        NodePort    10.101.171.64    <none>        80:30614/TCP   19m
    nginx        ClusterIP   10.106.232.252   <none>        80/TCP         25m

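With a NodePort service, the application is exposed on the same port (30614 here) on every node in the cluster, so it can be reached through any node's address.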
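The node port is the number after the colon in the PORT(S) column (`80:30614/TCP`). As a small sketch, the hypothetical helper `node_port_of` below extracts it from such a value. On a live cluster the same number can be read directly with `kubectl get svc myapp -o jsonpath='{.spec.ports[0].nodePort}'`, and the type change can be made without an editor via `kubectl patch svc myapp -p '{"spec":{"type":"NodePort"}}'`.

```shell
#!/usr/bin/env bash
# node_port_of is a hypothetical helper. Given a PORT(S) value such as
# "80:30614/TCP" it prints the node port (30614). It fails for values
# without a colon, e.g. the "80/TCP" of a plain ClusterIP service.
node_port_of() {
    local ports="$1"
    if [[ $ports != *:* ]]; then
        echo "no node port in: $ports" >&2
        return 1
    fi
    local port="${ports#*:}"   # strip the service port and colon: 30614/TCP
    port="${port%%/*}"         # strip the protocol suffix: 30614
    printf '%s\n' "$port"
}

# Usage (the node address is any node of the cluster; not executed here):
#   curl "http://192.168.11.141:$(node_port_of 80:30614/TCP)/"
```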