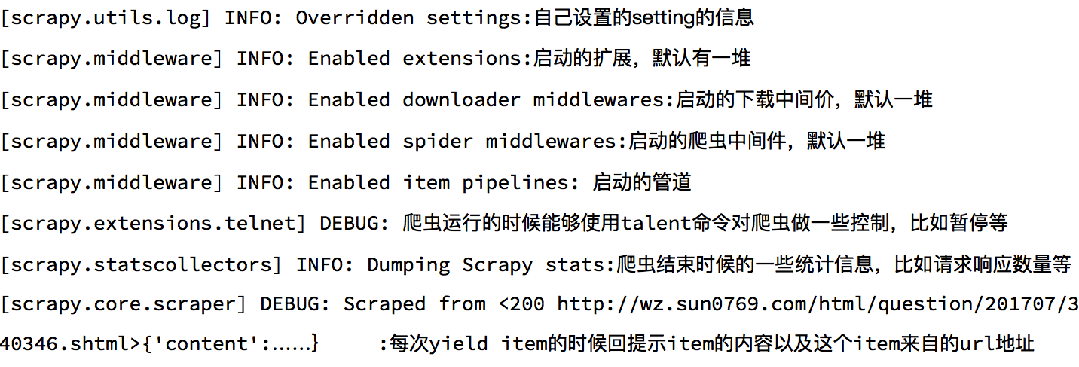

1. Understanding debug

2. Understanding scrapy shell

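Scrapy shell is an interactive console. It lets us try out and debug code without starting a spider, and it is also handy for testing XPath expressions.

Usage: `scrapy shell https://gosuncn.zhiye.com/social/`

- `response.url`: the URL of the current response
- `response.request.url`: the URL of the request that produced the current response
- `response.headers`: the response headers
- `response.body`: the response body, i.e. the HTML source, as `bytes` (`response.text` gives the decoded string)
- `response.request.headers`: the headers of the request that produced the current response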

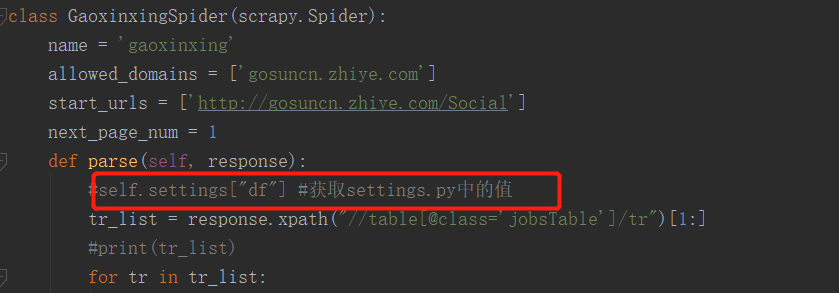

3. settings.py

```python
# -*- coding: utf-8 -*-

# Scrapy settings for gosuncn project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

# The settings file usually holds project-wide values,
# such as database usernames and passwords.

BOT_NAME = 'gosuncn'

SPIDER_MODULES = ['gosuncn.spiders']
NEWSPIDER_MODULE = 'gosuncn.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'gosuncn (+http://www.yourdomain.com)'

# Obey robots.txt rules: Scrapy requests robots.txt first
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# Download delay: wait about 3 seconds between requests
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
# (these limits are used together with DOWNLOAD_DELAY)
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default, i.e. cookies are sent with requests)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Middlewares
# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'gosuncn.middlewares.GosuncnSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'gosuncn.middlewares.GosuncnDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
# The number sets the order: pipelines with lower values run first
ITEM_PIPELINES = {
    'gosuncn.pipelines.GosuncnPipeline': 300,
}

# Log only WARNING and above, and write the log to a file
LOG_LEVEL = "WARNING"
LOG_FILE = "./log.log"

# Throttle the crawler
# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# HTTP cache
# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```

4. The open_spider and close_spider methods of pipelines

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
import re

from gosuncn.items import GosuncnItem


class GosuncnPipeline:
    def open_spider(self, spider):
        """
        Runs once when the spider is opened, e.g. to open a database connection.
        :param spider: the spider that was opened
        """
        # Attributes set here land on the spider object, which can read them as self.hello
        spider.hello = "open"

    def process_item(self, item, spider):
        if isinstance(item, GosuncnItem):
            item["content"] = self.process_content(item["content"])
            print(item)
        # Always return the item so that later pipelines receive it
        return item

    def process_content(self, content):
        # Remove all whitespace (spaces, tabs, newlines and \xa0 non-breaking spaces)
        content = [re.sub(r"\s", "", i) for i in content]
        # Drop strings that are now empty
        content = [i for i in content if len(i) > 0]
        return content

    def close_spider(self, spider):
        """
        Runs once when the spider is closed, e.g. to close the database connection.
        :param spider: the spider that was closed
        """
        spider.hello = "close"


# class GosuncnPipeline1(object):
#     def process_item(self, item, spider):
#         if isinstance(item, GosuncnItem):
#             print(item)
#         return item
```
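Scrapy calls `open_spider` once when the spider starts, `process_item` once per item, and `close_spider` once when the spider finishes. That is why the docstrings suggest opening and closing a database connection in those hooks. The sketch below fills in that idea, using the standard-library `sqlite3` module as a stand-in database. The `SQLitePipeline` class, the `jobs` table and its columns are hypothetical. A real crawl needs a project and network access, so the last lines call the three hooks by hand in the order Scrapy would, with a dummy object standing in for the spider.

```python
import sqlite3


class SQLitePipeline:
    """Hypothetical pipeline that stores items in SQLite via the open/close hooks."""

    def __init__(self, db_path=":memory:"):
        self.db_path = db_path
        self.conn = None

    def open_spider(self, spider):
        # Called once when the spider opens: acquire the connection here
        self.conn = sqlite3.connect(self.db_path)
        self.conn.execute("CREATE TABLE IF NOT EXISTS jobs (title TEXT, content TEXT)")
        spider.hello = "open"

    def process_item(self, item, spider):
        # Called once per item; return the item so later pipelines receive it
        self.conn.execute(
            "INSERT INTO jobs (title, content) VALUES (?, ?)",
            (item["title"], "".join(item["content"])),
        )
        return item

    def close_spider(self, spider):
        # Called once when the spider closes: commit, then release the connection
        self.conn.commit()
        spider.saved = self.conn.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
        self.conn.close()
        spider.hello = "close"


class DummySpider:
    """Stand-in for a real scrapy.Spider; the pipeline only sets attributes on it."""
    name = "gosuncn"


# Simulate the order in which Scrapy invokes the hooks
spider = DummySpider()
pipeline = SQLitePipeline()
pipeline.open_spider(spider)
print(spider.hello)  # open
for item in [{"title": "Engineer", "content": ["Python", "Scrapy"]},
             {"title": "Tester", "content": ["QA"]}]:
    pipeline.process_item(item, spider)
pipeline.close_spider(spider)
print(spider.hello, spider.saved)  # close 2
```

With a file path instead of `":memory:"`, the rows would survive after the crawl. To use a pipeline like this in a project, it would still need its own entry in `ITEM_PIPELINES`.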