1. Overview

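The Mimic release of Ceph made substantial improvements to MDS stability. Our Kubernetes cluster consumes Ceph through CephFS, which depends on the MDS, so MDS stability is a hard requirement; for that reason we deploy the Mimic release.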

The full list of changes in Ceph Mimic is available at the following link:

[Mimic release notes](http://docs.ceph.com/docs/master/releases/mimic/?highlight=backfill#upgrading-from-luminous)

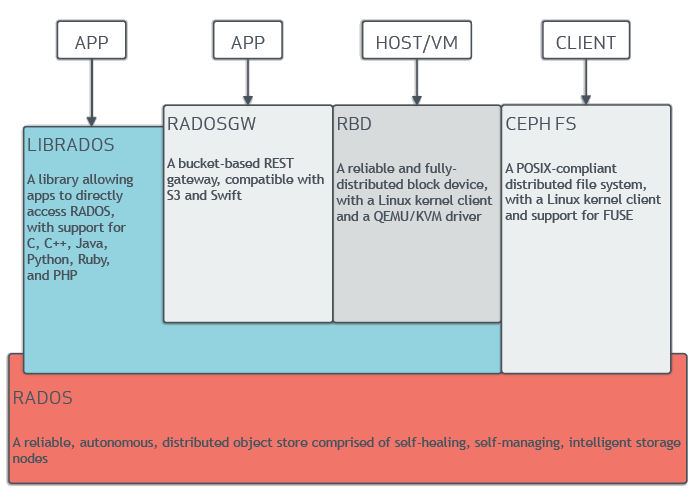

ceph的架构如下图所示:

2. Cluster Deployment

- With the Kubernetes cluster already deployed, we can now deploy and configure the Ceph cluster on the following hosts.

| Hostname | IP | Roles | OS |
|---|---|---|---|
| master-01 | 192.168.1.188 | mon, osd, mgr | CentOS 7.5 (minimal install) |
| master-02 | 192.168.1.189 | mon, osd, mgr | CentOS 7.5 (minimal install) |
| master-03 | 192.168.1.191 | mon, osd, mgr | CentOS 7.5 (minimal install) |
| node-01 | 192.168.1.193 | osd | CentOS 7.5 (minimal install) |

2.1 Base environment configuration

2.1.1 Disable the firewall and SELinux

Run on all nodes:

[root@master-01 ~]# systemctl stop firewalld

[root@master-01 ~]# systemctl disable firewalld

[root@master-01 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@master-01 ~]# setenforce 0

2.1.2 Configure NTP time synchronization on the servers

Run on all nodes:

[root@master-01 ~]# yum install ntp ntpdate -y

[root@master-01 ~]# timedatectl set-ntp yes

2.1.3 Configure /etc/hosts on all nodes

[root@master-01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.185 cluster.kube.com

192.168.1.188 master-01

192.168.1.189 master-02

192.168.1.191 master-03

192.168.1.193 node-01
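Because the same entries have to exist on every node, it can help to add them idempotently rather than editing the file by hand. The following is a minimal sketch, not part of the original procedure: `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names, and by default the script writes to a scratch file so nothing on the system is changed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP name" to a hosts file unless the name is already listed.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # Compare the name against every hostname field (column 2 onward) for an exact match.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Write to a scratch file by default; set HOSTS_FILE=/etc/hosts once the result looks right.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# Entries copied from the /etc/hosts listing above.
while read -r ip name; do
    add_host_entry "$HOSTS_FILE" "$ip" "$name"
done <<'EOF'
192.168.1.185 cluster.kube.com
192.168.1.188 master-01
192.168.1.189 master-02
192.168.1.191 master-03
192.168.1.193 node-01
EOF

cat "$HOSTS_FILE"
```

Running the script a second time against the same file adds nothing, since each name is checked before it is appended.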

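2.1.4 Configure passwordless SSH from the deployment node to all nodes

[root@master-01 ~]# ssh-keygen    # press Enter at every prompt to accept the defaults

[root@master-01 ~]# ssh-copy-id root@192.168.1.188    # repeat for each of the other nodes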
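The key has to reach every node in the cluster, not only 192.168.1.188. A minimal sketch of a loop over the four hostnames from /etc/hosts follows; `copy_key_to_nodes` and the `DRY_RUN` switch are hypothetical names introduced here, and with the default `DRY_RUN=1` the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Hostnames taken from the /etc/hosts entries in section 2.1.3.
NODES="master-01 master-02 master-03 node-01"

# DRY_RUN=1 (the default) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: install the local public key for root on every node.
copy_key_to_nodes() {
    local node
    for node in $NODES; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "ssh-copy-id root@${node}"
        else
            ssh-copy-id "root@${node}"
        fi
    done
}

copy_key_to_nodes
```

Setting `DRY_RUN=0` runs the real `ssh-copy-id`, which prompts for each node's root password once.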

2.2 Install Ceph [all nodes]

2.2.1 Configure the Ceph yum repository

[root@master-01 ~]# cat /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=http://download.ceph.com/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-mimic/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=http://download.ceph.com/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

2.2.2 Install epel-release

[root@master-01 ~]# yum install -y epel-release

2.2.3 Install ceph and ceph-deploy

[root@master-01 ~]# yum install -y ceph ceph-deploy

2.2.4 Check the Ceph version

[root@master-01 ~]# ceph -v

ceph version 13.2.4 (b10be4d44915a4d78a8e06aa31919e74927b142e) mimic (stable)

2.3 Deploy the Ceph mon service [run on the deployment node only]

[root@master-01 ~]# cd /etc/ceph

# Generate the initial cluster configuration with three mon nodes

[root@master-01 ceph]# ceph-deploy new master-01 master-02 master-03

# Deploy the initial monitors and gather their keys

[root@master-01 ceph]# ceph-deploy mon create-initial

2.4 Deploy the Ceph mgr service

[root@master-01 ~]# cd /etc/ceph

[root@master-01 ceph]# ceph-deploy mgr create master-01 master-02 master-03

# Push the configuration file and admin keyring to every node in the cluster

[root@master-01 ceph]# ceph-deploy admin master-01 master-02 master-03 node-01

2.5 Deploy the Ceph OSD service

[root@master-01 ~]# cd /etc/ceph

# Wipe the partition table and contents of the disk that will back the OSD

[root@master-01 ceph]# ceph-deploy disk zap master-01 /dev/sdb

[root@master-01 ceph]# ceph-deploy osd create --data /dev/sdb master-01
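The two commands above prepare a single disk on master-01, while the status output in section 2.9 reports 8 OSDs, so the same pair has to be repeated for every disk on every node. The sketch below loops over a host:disk list. The `OSD_TARGETS` layout is an assumption made up for illustration (the original does not list the disks), and `run`, `create_osds`, and `DRY_RUN` are hypothetical names. With the default `DRY_RUN=1` the commands are only printed.

```shell
#!/usr/bin/env bash
# ASSUMED host:disk layout for illustration only; replace with the real disks on each node.
OSD_TARGETS="master-01:/dev/sdb master-02:/dev/sdb master-03:/dev/sdb node-01:/dev/sdb"

# DRY_RUN=1 (the default) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Zap each disk and create an OSD on it, using the same ceph-deploy calls as above.
create_osds() {
    local target host disk
    for target in $OSD_TARGETS; do
        host="${target%%:*}"   # text before the first colon
        disk="${target#*:}"    # text after the first colon
        run ceph-deploy disk zap "$host" "$disk"
        run ceph-deploy osd create --data "$disk" "$host"
    done
}

create_osds
```

As with the manual commands, a real run (`DRY_RUN=0`) should be started from /etc/ceph on the deployment node. `disk zap` destroys all data on the target disk, so the list is worth checking in dry-run mode first.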

2.6 Enable the Ceph Dashboard

[root@master-01 ~]# ceph mgr module enable dashboard # enable the dashboard module

[root@master-01 ~]# ceph dashboard create-self-signed-cert

[root@master-01 ~]# ceph dashboard set-login-credentials admin admin # create the admin user

[root@master-01 ~]# ceph mgr services # verify that the dashboard URL is listed

2.7 Deploy the Ceph MDS service

[root@master-01 ~]# cd /etc/ceph

[root@master-01 ceph]# ceph-deploy mds create master-01 master-02 master-03

2.8 Create the Ceph file system

# Create the data pool

[root@master-01 ~]# ceph osd pool create datapool 256 256

# Create the metadata pool

[root@master-01 ~]# ceph osd pool create metapool 256 256

# Create the file system

[root@master-01 ~]# ceph fs new cephfs metapool datapool
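The PG counts chosen above determine how many PG replicas each OSD has to carry, which is worth checking before creating pools. A rough sketch of the arithmetic follows, assuming the pools use Ceph's default replicated size of 3 (the original does not state the pool size) and the 8 OSDs reported in section 2.9. `pgs_per_osd` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average number of PG replicas per OSD.
#   $1 = total PGs across all pools
#   $2 = replica size of the pools (assumed equal for every pool)
#   $3 = number of OSDs
pgs_per_osd() {
    local total_pgs="$1" replica_size="$2" osd_count="$3"
    echo $(( total_pgs * replica_size / osd_count ))
}

# datapool (256) + metapool (256), assumed size 3, 8 OSDs
pgs_per_osd $(( 256 + 256 )) 3 8   # prints 192
```

If the result approaches the monitors' per-OSD PG limit (`mon_max_pg_per_osd`) for the cluster, smaller `pg_num` values are the safer choice, since `pg_num` cannot be decreased on an existing pool in Mimic.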

2.9 Check the cluster status

[root@master-01 ~]# ceph -s

cluster:

id: 087099a6-b310-40ac-8631-9d3436141f66

health: HEALTH_OK

services:

mon: 3 daemons, quorum master-01,master-02,master-03

mgr: master-01(active), standbys: master-02, master-03

mds: cephfs-1/1/1 up {0=master-03=up:active}, 2 up:standby

osd: 8 osds: 8 up, 8 in

data:

pools: 2 pools, 512 pgs

objects: 22 objects, 2.2 KiB

usage: 8.0 GiB used, 272 GiB / 280 GiB avail

pgs: 512 active+clean
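The check on the `health:` line in the output above can be scripted, for example as a gate before mounting CephFS from Kubernetes. This is a minimal sketch: `status_is_healthy` is a hypothetical helper that reads status text on stdin, and the cluster is only queried when the `ceph` CLI is present on the host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the status text on stdin reports HEALTH_OK.
status_is_healthy() {
    grep -Eq 'health:[[:space:]]*HEALTH_OK'
}

# Only query the cluster when the ceph CLI is available on this host.
if command -v ceph >/dev/null 2>&1; then
    if ceph -s | status_is_healthy; then
        echo "cluster healthy"
    else
        echo "cluster not healthy; inspect 'ceph health detail'" >&2
    fi
fi
```

Taking the text on stdin keeps the helper usable against saved output as well, for example `status_is_healthy < status.txt`, where `status.txt` is a hypothetical file holding earlier `ceph -s` output.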