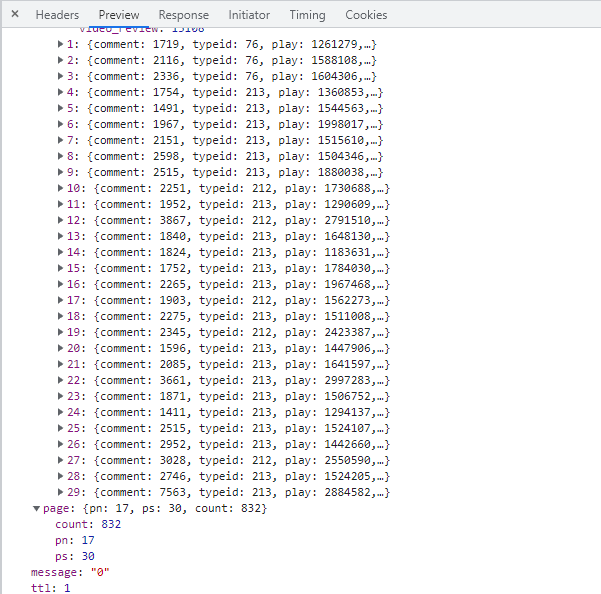

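The API returns JSON directly, so there is no need to `find_all` elements by class with BeautifulSoup. Just process the JSON and save the fields you need.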
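The sketch below shows what "just process the JSON" means in practice. The body is parsed into nested dicts and lists, and fields are read by key. The sample payload is hypothetical and trimmed to the three fields the scripts below use (`aid`, `pic`, `title`). Only the `data → list → vlist` nesting mirrors the path those scripts read.

```python
import json

# Hypothetical, trimmed response body: only the nesting and the three
# fields used later (aid, pic, title) mirror what the scripts below read.
raw = '''
{"code": 0,
 "data": {"list": {"vlist": [
   {"aid": 1001, "pic": "http://i0.hdslb.com/bfs/archive/a.jpg", "title": "first video"},
   {"aid": 1002, "pic": "http://i0.hdslb.com/bfs/archive/b.jpg", "title": "second video"}
 ]}}}
'''

payload = json.loads(raw)  # str -> dict, same result as response.json() in requests
vlist = payload['data']['list']['vlist']  # walk the keys instead of searching HTML by class

for video in vlist:
    print(video['aid'], video['title'])
```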

The request for page 17, as captured in Chrome DevTools:

```text
General
Request URL: https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0&pn=17&keyword=&order=pubdate&jsonp=jsonp
Request Method: GET
Status Code: 200
Remote Address: 123.6.7.66:443
Referrer Policy: no-referrer-when-downgrade

Response Headers
access-control-allow-credentials: true
access-control-allow-headers: Origin,No-Cache,X-Requested-With,If-Modified-Since,Pragma,Last-Modified,Cache-Control,Expires,Content-Type,Access-Control-Allow-Credentials,DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Cache-Webcdn
access-control-allow-methods: GET,POST,PUT,DELETE
access-control-allow-origin: https://space.bilibili.com
bili-status-code: 0
bili-trace-id: 4fb516b50d619c81
cache-control: no-cache
content-encoding: br
content-type: application/json; charset=utf-8
date: Tue, 23 Nov 2021 05:49:54 GMT
expires: Tue, 23 Nov 2021 05:49:53 GMT
idc: shjd
vary: Origin
x-bili-trace-id: 4fb516b50d619c81
x-cache-webcdn: BYPASS from blzone02

Request Headers
:authority: api.bilibili.com
:method: GET
:path: /x/space/arc/search?mid=390461123&ps=30&tid=0&pn=17&keyword=&order=pubdate&jsonp=jsonp
:scheme: https
accept: application/json, text/plain, */*
accept-encoding: gzip, deflate, br
accept-language: zh-CN,zh;q=0.9
cookie: buvid3=89EFA719-1D0F-BB2E-FE21-6C7BDCE8053B38280infoc; CURRENT_FNVAL=976; _uuid=210E48834-E65E-AD99-7F37-6771109799A8837281infoc; video_page_version=v_old_home_11; blackside_state=1; rpdid=|(k||)R|Y|)k0J'uYJ~um~kR|; PVID=1; innersign=0
origin: https://space.bilibili.com
referer: https://space.bilibili.com/390461123/video?tid=0&page=17&keyword=&order=pubdate
sec-ch-ua: "Google Chrome";v="95", "Chromium";v="95", ";Not A Brand";v="99"
sec-ch-ua-mobile: ?0
sec-ch-ua-platform: "Windows"
sec-fetch-dest: empty
sec-fetch-mode: cors
sec-fetch-site: same-site
user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36

Query String Parameters
mid: 390461123
ps: 30
tid: 0
pn: 17
keyword:
order: pubdate
jsonp: jsonp
```
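The query string at the end of the dump (`mid`, `ps`, `tid`, `pn`, `keyword`, `order`, `jsonp`) can be pulled out of the Request URL with the standard library. This makes it easier to see which parameter changes from page to page. A minimal sketch using `urllib.parse`:

```python
from urllib.parse import urlsplit, parse_qs

# The Request URL captured above (page 17)
captured = ('https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30'
            '&tid=0&pn=17&keyword=&order=pubdate&jsonp=jsonp')

parts = urlsplit(captured)
# keep_blank_values=True keeps "keyword=" (empty) instead of silently dropping it
params = parse_qs(parts.query, keep_blank_values=True)

print(parts.path)  # /x/space/arc/search
for name, values in params.items():
    print(name, '=', values[0])
```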

Case 1: Scraping a single page

```python
import csv

import requests  # HTTP client

# Page 2 would be:
# https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0&pn=2&keyword=&order=pubdate&jsonp=jsonp
url = 'https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0&pn=1&keyword=&order=pubdate&jsonp=jsonp'
fake_headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'
}

# Request the Bilibili space API
first_request = requests.get(url=url, headers=fake_headers)
first_request.raise_for_status()  # stop early on an HTTP error status
first_data = first_request.json()  # parse the JSON body into a Python dict
# Equivalent: first_data = json.loads(first_request.text)

item = first_data['data']['list']['vlist']  # take the vlist (video list) out of the full response

# Open the CSV in write mode. newline='' avoids blank rows on Windows;
# utf-8-sig writes a BOM so Excel displays Chinese titles correctly.
with open('bilibili.csv', 'w', encoding='utf-8-sig', newline='') as csv_obj:
    writer = csv.writer(csv_obj)
    writer.writerow(["aid", "image URL", "title"])  # header row
    for d in item:
        # Write the videos one row at a time
        print("=============== Writing aid: %s ===============" % d['aid'])
        writer.writerow([d['aid'], d['pic'], d['title']])
        # format() fills the {} placeholders in order
        print("====== aid={0} done: {1} ====".format(d['aid'], 'over'))

# Leaving the with block closes the file
print("finished")
```
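The script above indexes `first_data['data']['list']['vlist']` directly. If the API returns an error, this fails with an unhelpful `KeyError` or `TypeError`. The sketch below adds a guard. It assumes, as the `bili-status-code: 0` response header suggests, that the JSON body carries a `code` field where 0 means success, plus an optional `message` field. The helper name `extract_vlist` and the error payload in the demo are hypothetical.

```python
def extract_vlist(payload):
    """Return the video list from a parsed response, or raise if the API reported an error.

    Assumption: code == 0 means success (mirroring the bili-status-code header).
    'message' is read with .get() because it may be absent.
    """
    code = payload.get('code')
    if code != 0:
        raise RuntimeError('API returned code=%r: %s' % (code, payload.get('message', '')))
    data = payload.get('data') or {}
    # Treat a missing or null list as "no videos" rather than crashing
    return (data.get('list') or {}).get('vlist') or []


ok = {'code': 0, 'data': {'list': {'vlist': [{'aid': 1, 'pic': 'p', 'title': 't'}]}}}
print(extract_vlist(ok))

try:
    extract_vlist({'code': -400, 'message': 'request error'})  # hypothetical error payload
except RuntimeError as e:
    print(e)
```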

From the URL above, the pattern is clear: only the page number `pn` changes.

`https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0&pn=<page number>&keyword=&order=pubdate&jsonp=jsonp`
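The sketch below builds the page URL with `urllib.parse.urlencode` instead of string formatting. `page_url` is a hypothetical helper name. Two readings are interpretations rather than documented facts: `mid` as the uploader's ID (it matches the `space.bilibili.com/390461123` referer) and `ps` as the page size.

```python
from urllib.parse import urlencode

BASE = 'https://api.bilibili.com/x/space/arc/search'


def page_url(page, mid=390461123, page_size=30):
    """Build the search URL for one page; only pn changes between pages."""
    params = {
        'mid': mid,          # uploader ID (interpretation, matches the referer)
        'ps': page_size,     # assumed: videos per page
        'tid': 0,
        'pn': page,          # page number
        'keyword': '',
        'order': 'pubdate',  # sort by publish date
        'jsonp': 'jsonp',
    }
    return BASE + '?' + urlencode(params)


print(page_url(2))
```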

Case 2: Scraping page by page, saving the needed fields to CSV, and downloading the cover images locally
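The script below hardcodes the total page count. An alternative is to keep requesting pages until one comes back empty. This sketch assumes the API returns an empty or missing `vlist` once `pn` passes the last page, which is worth confirming in the browser before relying on it. `iter_pages` and `fake_fetch` are hypothetical names, and the demo runs offline against fake data.

```python
def iter_pages(fetch_vlist, max_pages=1000):
    """Yield (page, vlist) from page 1 onward, stopping at the first empty page.

    fetch_vlist: any callable that takes a page number and returns that page's
    list of videos (hypothetical; e.g. scrape_page plus the data/list/vlist lookup).
    max_pages: safety cap so a misbehaving API cannot cause an endless loop.
    """
    for page in range(1, max_pages + 1):
        vlist = fetch_vlist(page)
        if not vlist:
            break
        yield page, vlist


# Offline demo: a fake uploader with 65 videos, 30 per page
videos = list(range(65))


def fake_fetch(page):
    return videos[(page - 1) * 30: page * 30]


for page, vlist in iter_pages(fake_fetch):
    print(page, len(vlist))
```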

```python
import csv
import os
import time

import requests  # HTTP client

FAKE_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'
}
CSV_FILE = 'bilibili.csv'
PIC_DIR = 'picture'


# Generic fetch: request an API URL and return the parsed JSON
def scrape_api(url):
    response = requests.get(url=url, headers=FAKE_HEADERS)
    response.raise_for_status()  # stop early on an HTTP error status
    # response.json() parses the body into a Python dict
    # (equivalent to json.loads(response.text))
    return response.json()


# Generic pagination: build the URL for one page and fetch it
def scrape_page(page):
    # e.g. https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0&pn=2&keyword=&order=pubdate&jsonp=jsonp
    url = ('https://api.bilibili.com/x/space/arc/search?mid=390461123&ps=30&tid=0'
           '&pn={page}&keyword=&order=pubdate&jsonp=jsonp').format(page=page)
    return scrape_api(url)


# Write one page of videos to the already-open CSV writer
def scrape_csv(writer, item):
    for d in item:
        # Write the videos one row at a time
        print("=============== Writing aid: %s ===============" % d['aid'])
        writer.writerow([d['aid'], d['pic'], d['title']])
    print("finished")

# File modes for open():
# w:   write (truncates the file)
# a:   append (writes start at EOF; creates the file if needed)
# r+:  read/write
# w+:  read/write (truncates, like w)
# a+:  read/write (appends, like a)
# rb:  binary read
# wb:  binary write (like w)
# ab:  binary append (like a)
# rb+: binary read/write (like r+)
# wb+: binary read/write (like w+)
# ab+: binary read/write (like a+)


# Download the cover images to the local picture folder
def download_img(item):
    pic_l = [dd['pic'] for dd in item]  # collect every image URL
    for i in pic_l:
        # i looks like http://i0.hdslb.com/bfs/archive/c6490a18ce51d821b0edc9701bc8c16353fbea4a.jpg
        pic = requests.get(i, headers=FAKE_HEADERS)
        # split('/') cuts the string at each '/' and returns a list:
        # ['http:', '', 'i0.hdslb.com', 'bfs', 'archive', 'c6490a18ce51d821b0edc9701bc8c16353fbea4a.jpg']
        # The last element is the file name
        imgadres = i.split('/')[-1]
        print(imgadres)
        # os.path.join works on Windows and Linux, unlike 'picture\\' + name
        with open(os.path.join(PIC_DIR, imgadres), 'wb') as f:
            f.write(pic.content)
    print("imgdown_finished")


# Call the steps in order
def datacsv():
    pages = 28  # total number of pages
    os.makedirs(PIC_DIR, exist_ok=True)  # create the image folder if missing
    # Open the CSV once in write mode so the header is written only once
    # (appending with 'a+' and writing the header per page repeated it on every page)
    with open(CSV_FILE, 'w', encoding='utf-8-sig', newline='') as csv_obj:
        writer = csv.writer(csv_obj)
        writer.writerow(["aid", "image URL", "title"])  # header row
        for page in range(1, pages + 1):  # +1 so the last page is included
            print("=========== Page %s ===========" % page)
            indexdata = scrape_page(page)
            allres = indexdata.get('data')
            item = allres.get('list').get('vlist')  # take vlist out of the full response
            scrape_csv(writer, item)  # write this page to the CSV
            time.sleep(1)
            download_img(item)  # download this page's images
            time.sleep(1)


if __name__ == '__main__':
    datacsv()
```
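`download_img` takes the file name from the image URL. Splitting only the URL path, rather than the whole URL, keeps the name clean if an image URL ever carries a query string. The sketch below does this with the standard library; `image_filename` is a hypothetical helper.

```python
import os
from urllib.parse import urlsplit


def image_filename(url):
    """Return the last path segment of an image URL, ignoring any query string."""
    path = urlsplit(url).path        # '/bfs/archive/<hash>.jpg'
    return path.rsplit('/', 1)[-1]   # '<hash>.jpg'


url = 'http://i0.hdslb.com/bfs/archive/c6490a18ce51d821b0edc9701bc8c16353fbea4a.jpg'
name = image_filename(url)
print(name)
# picture/<name> on Linux/macOS, picture\<name> on Windows
print(os.path.join('picture', name))
```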