1.Spider code

```python
# -*- coding: utf-8 -*-
import scrapy
from yszd.items import YszdItem


class YszdSpiderSpider(scrapy.Spider):
    # Spider name; required when launching the spider
    name = 'yszd_spider'
    # Allowed domains: the spider only crawls within these domains (optional)
    allowed_domains = ['itcast.cn']
    # Start URL list: the first batch of requests is taken from this list
    start_urls = ['http://www.itcast.cn/channel/teacher.shtml']

    def parse(self, response):
        # // matches descendants at any depth, so an expression starting with // searches the whole document
        # div[@class='li_txt']: select div elements whose class attribute equals 'li_txt'
        node_list = response.xpath("//div[@class='li_txt']")
        # Collect all items
        # items = []
        for node in node_list:
            # Create an item object to hold the scraped fields
            item = YszdItem()
            # extract(): convert the selector results into a list of Unicode strings
            name = node.xpath("./h3/text()").extract()
            title = node.xpath("./h4/text()").extract()
            info = node.xpath("./p/text()").extract()

            item['name'] = name[0]
            item['title'] = title[0]
            item['info'] = info[0]

            yield item
            # items.append(item)
```
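To see how the two-step lookup in `parse` works (first select every `div` with `class='li_txt'`, then read `h3`, `h4` and `p` relative to each node), here is a minimal sketch that runs without Scrapy. It swaps in the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath (no `text()` step, so `findtext` is used instead). The sample markup and the `parse_teachers` helper are hypothetical and are not taken from the real page.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the teacher listing page.
SAMPLE = """
<html><body>
  <div class="li_txt"><h3>Teacher A</h3><h4>Senior Lecturer</h4><p>Teaches Python.</p></div>
  <div class="li_txt"><h3>Teacher B</h3><h4>Lecturer</h4><p>Teaches Java.</p></div>
  <div class="other"><h3>Not a teacher</h3></div>
</body></html>
"""


def parse_teachers(markup):
    """Return one dict per <div class="li_txt"> block (illustrative helper)."""
    root = ET.fromstring(markup.strip())
    teachers = []
    # .// searches all descendants of the root, like the leading // in the spider
    for node in root.findall(".//div[@class='li_txt']"):
        # ./h3, ./h4 and ./p are resolved relative to the current div
        teachers.append({
            "name": node.findtext("./h3"),
            "title": node.findtext("./h4"),
            "info": node.findtext("./p"),
        })
    return teachers


teachers = parse_teachers(SAMPLE)
for teacher in teachers:
    print(teacher)
```

The `div` with `class="other"` is skipped because the attribute predicate does not match, which is the same filtering the spider relies on.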

2.Items code

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class YszdItem(scrapy.Item):
    # One Field per value the spider extracts for each teacher
    name = scrapy.Field()
    title = scrapy.Field()
    info = scrapy.Field()
```

3.Pipelines code

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import json


class YszdPipeline(object):
    def __init__(self):
        # Open the output file as UTF-8 so non-ASCII text is written correctly (Python 3)
        self.f = open("yszd.json", "w", encoding="utf-8")

    def process_item(self, item, spider):
        # ensure_ascii defaults to True, which escapes non-ASCII characters as \uXXXX;
        # False writes the original characters instead
        text = json.dumps(dict(item), ensure_ascii=False) + " "
        self.f.write(text)
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes; release the file handle
        self.f.close()
```

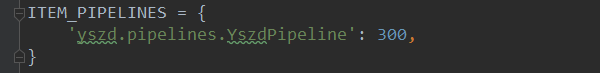
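The effect of `ensure_ascii` in the pipeline is easy to check in isolation. The sketch below uses only the standard `json` module; the `record` dict is a hypothetical stand-in for one scraped item, not real output.

```python
import json

# Hypothetical record with the same fields as YszdItem.
record = {"name": "张老师", "title": "高级讲师", "info": "Python"}

# Default (ensure_ascii=True): non-ASCII characters become \uXXXX escapes.
escaped = json.dumps(record)
# ensure_ascii=False: characters are kept as-is, so the file stays readable.
readable = json.dumps(record, ensure_ascii=False)

print(escaped)
print(readable)

# Both forms decode back to the same data; only the on-disk text differs.
same_data = json.loads(escaped) == json.loads(readable) == record
print(same_data)
```

Either form is valid JSON. The choice only matters if people will read the output file directly.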

4.settings code (enable the pipeline; 300 is its priority, and a lower number means higher priority, so it runs earlier)

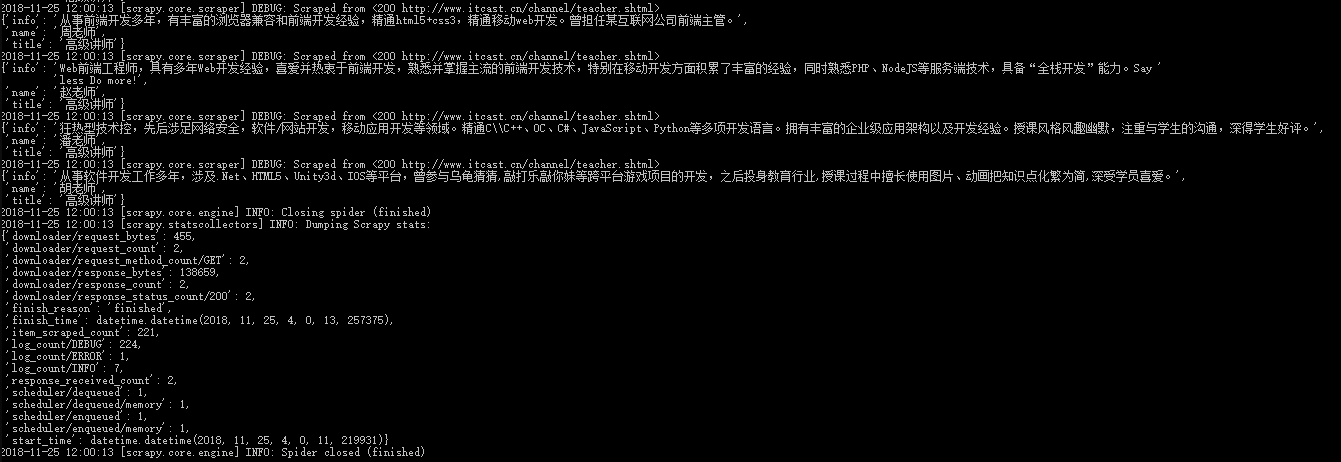
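For readers who cannot see the screenshot, the setting would look roughly like the fragment below in `settings.py`. The dotted path `yszd.pipelines.YszdPipeline` is an assumption based on the default Scrapy project layout and the `from yszd.items import YszdItem` import in the spider; adjust it if your pipeline lives elsewhere.

```python
# settings.py (fragment)
# Assumed module path: <project>.pipelines.<PipelineClass>
ITEM_PIPELINES = {
    # Values are conventionally in the 0-1000 range; pipelines with lower values run first.
    'yszd.pipelines.YszdPipeline': 300,
}
```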

5.Run the spider

Run the command: scrapy crawl yszd_spider

Note: yszd_spider is the name you gave the spider; it must match the `name` attribute defined on line 8 of the code in section 1!

6.Results