利用hadoop自带的测试程序测试集群性能

使用TestDFSIO、mrbench、nnbench、Terasort 、sort 几个使用较广的基准测试程序

测试程序在:

${HADOOP_HOME}/share/hadoop/mapreduce/

一、查看工具

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar

An example program must be given as the first argument.

Valid program names are:

DFSCIOTest: Distributed i/o benchmark of libhdfs.

DistributedFSCheck: Distributed checkup of the file system consistency.

JHLogAnalyzer: Job History Log analyzer.

MRReliabilityTest: A program that tests the reliability of the MR framework by injecting faults/failures

NNdataGenerator: Generate the data to be used by NNloadGenerator

NNloadGenerator: Generate load on Namenode using NN loadgenerator run WITHOUT MR

NNloadGeneratorMR: Generate load on Namenode using NN loadgenerator run as MR job

NNstructureGenerator: Generate the structure to be used by NNdataGenerator

SliveTest: HDFS Stress Test and Live Data Verification.

TestDFSIO: Distributed i/o benchmark.

fail: a job that always fails

filebench: Benchmark SequenceFile(Input|Output)Format (block,record compressed and uncompressed), Text(Input|Output)Format (compressed and uncompressed)

gsleep: A sleep job whose mappers create 1MB buffer for every record.

largesorter: Large-Sort tester

loadgen: Generic map/reduce load generator

mapredtest: A map/reduce test check.

minicluster: Single process HDFS and MR cluster.

mrbench: A map/reduce benchmark that can create many small jobs

nnbench: A benchmark that stresses the namenode w/ MR.

nnbenchWithoutMR: A benchmark that stresses the namenode w/o MR.

sleep: A job that sleeps at each map and reduce task.

testbigmapoutput: A map/reduce program that works on a very big non-splittable file and does identity map/reduce

testfilesystem: A test for FileSystem read/write.

testmapredsort: A map/reduce program that validates the map-reduce framework's sort.

testsequencefile: A test for flat files of binary key value pairs.

testsequencefileinputformat: A test for sequence file input format.

testtextinputformat: A test for text input format.

threadedmapbench: A map/reduce benchmark that compares the performance of maps with multiple spills over maps with 1 spill

timelineperformance: A job that launches mappers to test timline service performance.

二、TestDFSIO

改程序主要用于测试集群的io性能

查看参数选项

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar TestDFSIO 20/05/27 14:11:42 INFO fs.TestDFSIO: TestDFSIO.1.8 Missing arguments. Usage: TestDFSIO [genericOptions] -read [-random | -backward | -skip [-skipSize Size]] | -write | -append | -truncate | -clean [-compression codecClassName] [-nrFiles N] [-size Size[B|KB|MB|GB|TB]] [-resFile resultFileName] [-bufferSize Bytes] [-rootDir][hduser@yjt mapreduce]$

1、测试HDFS写性能

向hdfs集群写10个128M的文件

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar TestDFSIO -write -nrFiles 10 -size 128MB -resFile /home/hduser/TestDFSIO_result.txt

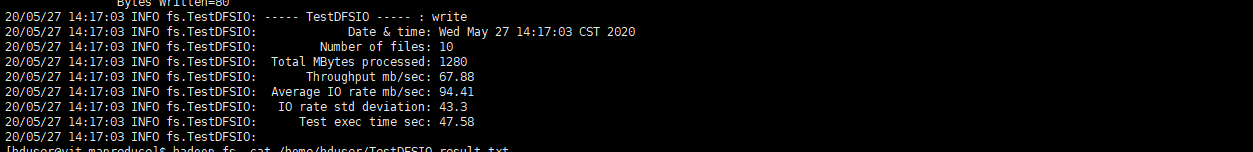

输出结果截取的最后一段,这段信息也就是最终的结果,或者可以查看本地系统的/home/hduser/TestDFSIO_result.txt文件,改文件也是最终的测试结果

从上图看:

写入是个10件,

吞吐量:67.88 mb/sec

平均IO速率:94.41 mb/sec

IO rate std deviation(IO速率标准偏差): 43.3mb/sec

总的执行时间:47.58s

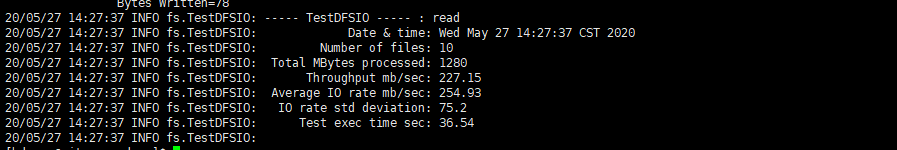

2、测试hdfs读性能

从hdfs读取10个128m的文件

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar TestDFSIO -read -nrFiles 10 -size 128MB -resFile /home/hduser/TestDFSIO_read_result.txt

结果如下:

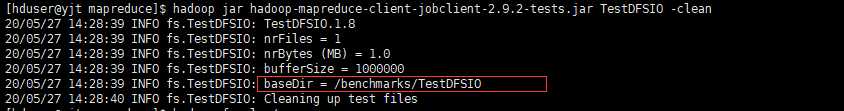

3、清除测试数据

hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar TestDFSIO -clean

测试数据默认存储在集群的/benchmarks目录下:

三、nnbech

nnbench用于测试NameNode的负载,它会生成很多与HDFS相关的请求,给NameNode施加较大的压力。这个测试能在HDFS上模拟创建、读取、重命名和删除文件等操作。

用法:

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar nnbench --help Usage: nnbench <options> Options: -operation <Available operations are create_write open_read rename delete. This option is mandatory> * NOTE: The open_read, rename and delete operations assume that the files they operate on, are already available. The create_write operation must be run before running the other operations. -maps <number of maps. default is 1. This is not mandatory> -reduces <number of reduces. default is 1. This is not mandatory> -startTime <time to start, given in seconds from the epoch. Make sure this is far enough into the future, so all maps (operations) will start at the same time. default is launch time + 2 mins. This is not mandatory> -blockSize <Block size in bytes. default is 1. This is not mandatory> -bytesToWrite <Bytes to write. default is 0. This is not mandatory> -bytesPerChecksum <Bytes per checksum for the files. default is 1. This is not mandatory> -numberOfFiles <number of files to create. default is 1. This is not mandatory> -replicationFactorPerFile <Replication factor for the files. default is 1. This is not mandatory> -baseDir <base DFS path. default is /benchmarks/NNBench. This is not mandatory> -readFileAfterOpen <true or false. if true, it reads the file and reports the average time to read. This is valid with the open_read operation. default is false. This is not mandatory> -help: Display the help statement

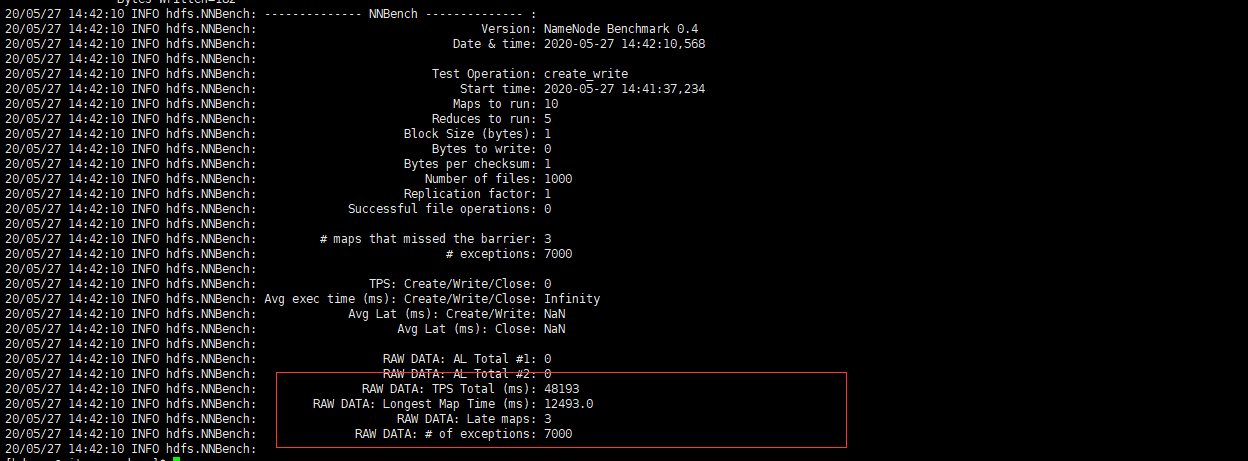

测试:使用10个map,5个reduce,创建1000个文件,并且写入数据以及打开和读取数据

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar nnbench -operation create_write -maps 10 -reduces 5 -numberOfFiles 1000 -readFileAfterOpen true

测试结果:

四、mrbench

mrbench会多次重复执行一个小作业,用于检查在机群上小作业的运行是否可重复以及运行是否高效。

用法:

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar mrbench --help MRBenchmark.0.0.2 Usage: mrbench [-baseDir <base DFS path for output/input, default is /benchmarks/MRBench>] [-jar <local path to job jar file containing Mapper and Reducer implementations, default is current jar file>] [-numRuns <number of times to run the job, default is 1>] [-maps <number of maps for each run, default is 2>] [-reduces <number of reduces for each run, default is 1>] [-inputLines <number of input lines to generate, default is 1>] [-inputType <type of input to generate, one of ascending (default), descending, random>] [-verbose]

测试:

$ hadoop jar hadoop-mapreduce-client-jobclient-2.9.2-tests.jar mrbench -numRuns 10 -maps 10 -reduces 5 -inputLines 10 -inputType descending

结果:

五、Terasort

Terasort是测试Hadoop的一个有效的排序程序。通过Hadoop自带的Terasort排序程序,测试不同的Map任务和Reduce任务数量,对Hadoop性能的影响。 实验数据由程序中的teragen程序生成,数量为1G和10G。

一个TeraSort测试需要按三步:

1. TeraGen生成随机数据

2. TeraSort对数据排序

3. TeraValidate来验证TeraSort输出的数据是否有序,如果检测到问题,将乱序的key输出到目录

用法:

$ hadoop jar hadoop-mapreduce-examples-2.9.2.jar --help Unknown program '--help' chosen. Valid program names are: aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files. aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files. bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi. dbcount: An example job that count the pageview counts from a database. distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi. grep: A map/reduce program that counts the matches of a regex in the input. join: A job that effects a join over sorted, equally partitioned datasets multifilewc: A job that counts words from several files. pentomino: A map/reduce tile laying program to find solutions to pentomino problems. pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method. randomtextwriter: A map/reduce program that writes 10GB of random textual data per node. randomwriter: A map/reduce program that writes 10GB of random data per node. secondarysort: An example defining a secondary sort to the reduce. sort: A map/reduce program that sorts the data written by the random writer. sudoku: A sudoku solver. teragen: Generate data for the terasort terasort: Run the terasort teravalidate: Checking results of terasort wordcount: A map/reduce program that counts the words in the input files. wordmean: A map/reduce program that counts the average length of the words in the input files. wordmedian: A map/reduce program that counts the median length of the words in the input files. wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files

测试:

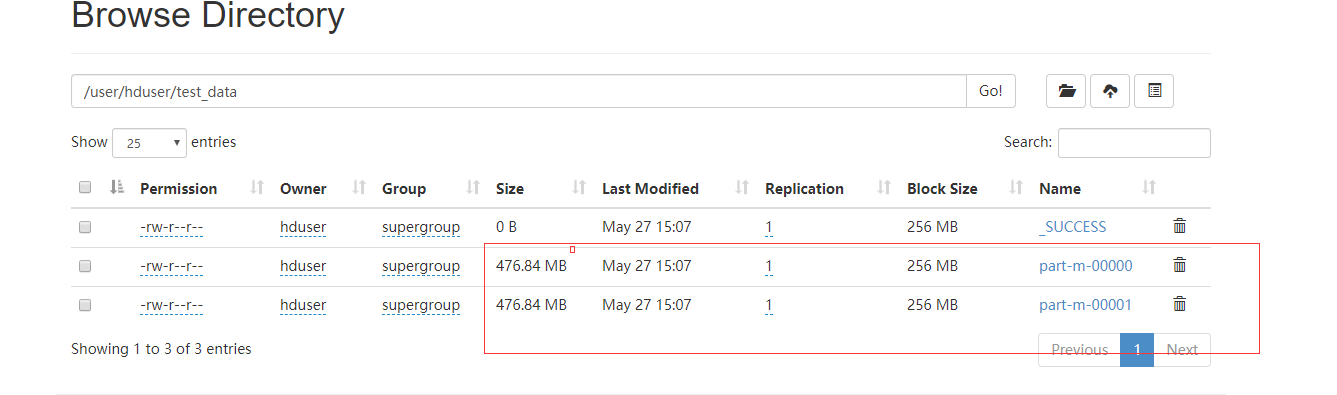

1. TeraGen生成随机数,生成1G的随机数据,结果存放在/user/hduser/test_data

$ hadoop jar hadoop-mapreduce-examples-2.9.2.jar teragen 10000000 test_data # 注意,这个测试数据大小不能写成1g或者1t等这样的格式,在测试的时候使用这种格式,发现生成的数据大小为0

2、TeraSort排序,将结果输出到目录/user/hduser/terasort-output

$ hadoop jar hadoop-mapreduce-examples-2.9.2.jar terasort test_data terasort-output

查看hdfs上的数据:

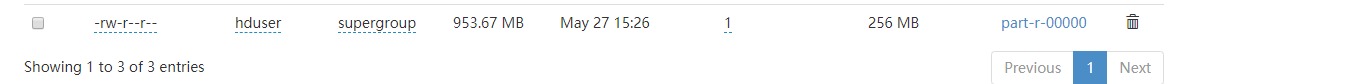

3、使用teravalidate检查排序的结果是否正确

$ hadoop jar hadoop-mapreduce-examples-2.9.2.jar teravalidate terasort-output terasort-validate

查看terasort-validate/part-r-0000,以确定发生了哪些错误

$ hadoop fs -cat terasort-validate/part-r-00000 checksum 4c49607ac53602

本文借鉴:

https://blog.csdn.net/lingeio/article/details/93869306

https://www.cnblogs.com/zhaohz/p/12117079.html

$ hadoop fs -cat terasort-validate/part-r-00000checksum4c49607ac53602