Deploying a standalone Spark history server

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: This is an original work; reproduction is not permitted. Violations will be pursued legally.

I. Hands-on deployment of Spark in Standalone mode

Recommended reading from the author:

https://www.cnblogs.com/yinzhengjie2020/p/13122259.html

II. JobHistoryServer configuration

1>. Start HDFS and Spark, then create the directory for Spark event logs

[root@hadoop101.yinzhengjie.org.cn ~]# zookeeper.sh start
Starting services
========== zookeeper101.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== zookeeper102.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== zookeeper103.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# start-dfs.sh
Starting namenodes on [hadoop101.yinzhengjie.org.cn hadoop106.yinzhengjie.org.cn]
hadoop101.yinzhengjie.org.cn: starting namenode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-namenode-hadoop101.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting namenode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-namenode-hadoop106.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop106.yinzhengjie.org.cn.out
hadoop101.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop101.yinzhengjie.org.cn.out
hadoop105.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop105.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop102.yinzhengjie.org.cn.out
hadoop103.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop103.yinzhengjie.org.cn.out
hadoop104.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop104.yinzhengjie.org.cn.out
Starting journal nodes [hadoop103.yinzhengjie.org.cn hadoop104.yinzhengjie.org.cn hadoop102.yinzhengjie.org.cn]
hadoop104.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop104.yinzhengjie.org.cn.out
hadoop103.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop103.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop102.yinzhengjie.org.cn.out
Starting ZK Failover Controllers on NN hosts [hadoop101.yinzhengjie.org.cn hadoop106.yinzhengjie.org.cn]
hadoop101.yinzhengjie.org.cn: starting zkfc, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-zkfc-hadoop101.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting zkfc, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-zkfc-hadoop106.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop105.yinzhengjie.org.cn ~]# /yinzhengjie/softwares/spark/sbin/start-all.sh
starting org.apache.spark.deploy.master.Master, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-hadoop105.yinzhengjie.org.cn.out
hadoop104.yinzhengjie.org.cn: starting org.apache.spark.deploy.worker.Worker, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop104.yinzhengjie.org.cn.out
hadoop101.yinzhengjie.org.cn: starting org.apache.spark.deploy.worker.Worker, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop101.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting org.apache.spark.deploy.worker.Worker, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop106.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting org.apache.spark.deploy.worker.Worker, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop102.yinzhengjie.org.cn.out
hadoop103.yinzhengjie.org.cn: starting org.apache.spark.deploy.worker.Worker, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop103.yinzhengjie.org.cn.out
[root@hadoop105.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5750 JournalNode
5687 DataNode
5546 Worker
5902 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5667 QuorumPeerMain
5811 JournalNode
5971 Jps
5524 Worker
5748 DataNode
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5776 Jps
5447 Master
5643 DataNode
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5859 Worker
6388 DataNode
6108 QuorumPeerMain
6892 Jps
6271 NameNode
6687 DFSZKFailoverController
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5968 Jps
5747 DataNode
5811 JournalNode
5673 QuorumPeerMain
5530 Worker
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
5684 NameNode
5751 DataNode
5545 Worker
5865 DFSZKFailoverController
6028 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#
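With six nodes, checking the jps output by eye gets tedious. The sketch below checks a jps listing for the daemons you expect on one node, for example `NameNode`, `DataNode` and `Worker` on hadoop101 in the output above. The function name `require_daemons` is my own; it is not a Hadoop or Spark tool.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read a jps listing from stdin and check that
# every daemon name given as an argument appears in it.
# Returns 0 if all are present; otherwise prints the missing ones to stderr
# and returns 1.
require_daemons() {
  local listing name missing=0
  listing=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>". Compare against field 2 only, so that
    # "NameNode" does not also match "SecondaryNameNode".
    if ! printf '%s\n' "$listing" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Examples:
#   jps | require_daemons NameNode DataNode Worker
#   ansible hadoop105.yinzhengjie.org.cn -m shell -a 'jps' | require_daemons Master
```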

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -mkdir -p /yinzhengjie/spark/jobhistory    # create the Spark event log directory (Spark does not create it automatically); once created, it can also be browsed in the HDFS web UI

2>. Configure spark-defaults.conf

[root@hadoop101.yinzhengjie.org.cn ~]# cp /yinzhengjie/softwares/spark/conf/spark-defaults.conf.template /yinzhengjie/softwares/spark/conf/spark-defaults.conf    # create the configuration file
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/spark/conf/spark-defaults.conf    # enable event logging and set the log directory to an HDFS path
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# egrep -v "^*#|^$" /yinzhengjie/softwares/spark/conf/spark-defaults.conf
spark.eventLog.enabled true
spark.eventLog.dir hdfs://hadoop101.yinzhengjie.org.cn:9000/yinzhengjie/spark/jobhistory
[root@hadoop101.yinzhengjie.org.cn ~]#

Parameter notes:
  spark.eventLog.enabled: whether event logging is enabled.
  spark.eventLog.dir: where event logs are stored. Everything an application records while it runs is written under the path given by this property.
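The `egrep -v "^*#|^$"` filter above works with the GNU grep used here. However, POSIX leaves the behavior of a `*` placed directly after `^` in an extended regex undefined, so other grep implementations may behave differently. Below is a more portable sketch, plus a helper that reads a single key. Both function names are hypothetical. The helper assumes the `key value` layout used in this file; the `key=value` form that Java properties files also accept is not handled.

```shell
#!/usr/bin/env bash
# Sketch: print only the effective lines of a Spark-style config file,
# skipping blank lines and lines whose first non-blank character is '#'.
show_effective() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Sketch (hypothetical helper): print the value of KEY from a
# spark-defaults.conf-style file written as "key<whitespace>value".
# If the key appears more than once, the last occurrence wins.
# Returns 1 if the key is not found.
conf_get() {
  awk -v k="$2" '
    /^[ \t]*#/ { next }                        # skip comment lines
    $1 == k {
      line = $0
      sub(/^[ \t]*[^ \t]+[ \t]+/, "", line)    # drop the key and separator
      val = line; found = 1
    }
    END { if (found) print val; else exit 1 }
  ' "$1"
}

# Example:
#   conf_get /yinzhengjie/softwares/spark/conf/spark-defaults.conf spark.eventLog.dir
```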

3>. Configure spark-env.sh

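[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/spark/conf/spark-env.sh
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# egrep -v "^*#|^$" /yinzhengjie/softwares/spark/conf/spark-env.sh
SPARK_MASTER_HOST=hadoop105.yinzhengjie.org.cn
SPARK_MASTER_PORT=6000
export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=30 -Dspark.history.fs.logDirectory=hdfs://hadoop101.yinzhengjie.org.cn:9000/yinzhengjie/spark/jobhistory"
[root@hadoop101.yinzhengjie.org.cn ~]#

Parameter notes:
  -Dspark.history.ui.port: the port of the history server web UI, here 18080.
  -Dspark.history.retainedApplications: the number of applications whose UI data is cached. When this limit is exceeded, the oldest applications are evicted from the cache; if one of them is opened again in the UI, it is reloaded from its event log. This counts applications held in memory, not applications listed on the page.
  -Dspark.history.fs.logDirectory: the directory the history server reads event logs from. With this property set, the path no longer has to be passed explicitly to start-history-server.sh. The Spark History Server page only shows applications found under this path.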
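The history server only lists what it finds under `spark.history.fs.logDirectory`. That path should therefore point to the same location as the `spark.eventLog.dir` that applications write to; otherwise the web UI stays empty. Below is a hedged sketch of a consistency check between the two files. The function `check_log_dirs` is hypothetical. It assumes the file layouts shown above, compares the two URIs as plain strings, and ignores a trailing slash.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): compare spark.eventLog.dir from
# spark-defaults.conf with -Dspark.history.fs.logDirectory from spark-env.sh.
# Returns 0 if they match, 1 on a mismatch, 2 if either setting is missing.
check_log_dirs() {
  local defaults=$1 env=$2 write_dir read_dir
  # last "spark.eventLog.dir <value>" line wins, as with a properties file
  write_dir=$(awk '$1 == "spark.eventLog.dir" { v = $2 } END { print v }' "$defaults")
  # take the value after "spark.history.fs.logDirectory=" up to a space or quote
  read_dir=$(grep -o 'spark\.history\.fs\.logDirectory=[^" ]*' "$env" | head -n 1 | cut -d= -f2-)
  write_dir=${write_dir%/}
  read_dir=${read_dir%/}
  if [ -z "$write_dir" ] || [ -z "$read_dir" ]; then
    echo "could not find both settings" >&2
    return 2
  fi
  if [ "$write_dir" != "$read_dir" ]; then
    echo "mismatch: eventLog.dir=$write_dir history logDirectory=$read_dir" >&2
    return 1
  fi
  echo "ok: $write_dir"
}

# Example:
#   check_log_dirs /yinzhengjie/softwares/spark/conf/spark-defaults.conf \
#                  /yinzhengjie/softwares/spark/conf/spark-env.sh
```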

4>. Distribute the modified configuration files

[root@hadoop101.yinzhengjie.org.cn ~]# rsync-hadoop.sh /yinzhengjie/softwares/spark/conf/spark-defaults.conf
******* [hadoop102.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/spark/conf/spark-defaults.conf] *******
Command executed successfully
******* [hadoop103.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/spark/conf/spark-defaults.conf] *******
Command executed successfully
******* [hadoop104.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/spark/conf/spark-defaults.conf] *******
Command executed successfully
******* [hadoop105.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/spark/conf/spark-defaults.conf] *******
Command executed successfully
******* [hadoop106.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/spark/conf/spark-defaults.conf] *******
Command executed successfully
[root@hadoop101.yinzhengjie.org.cn ~]#


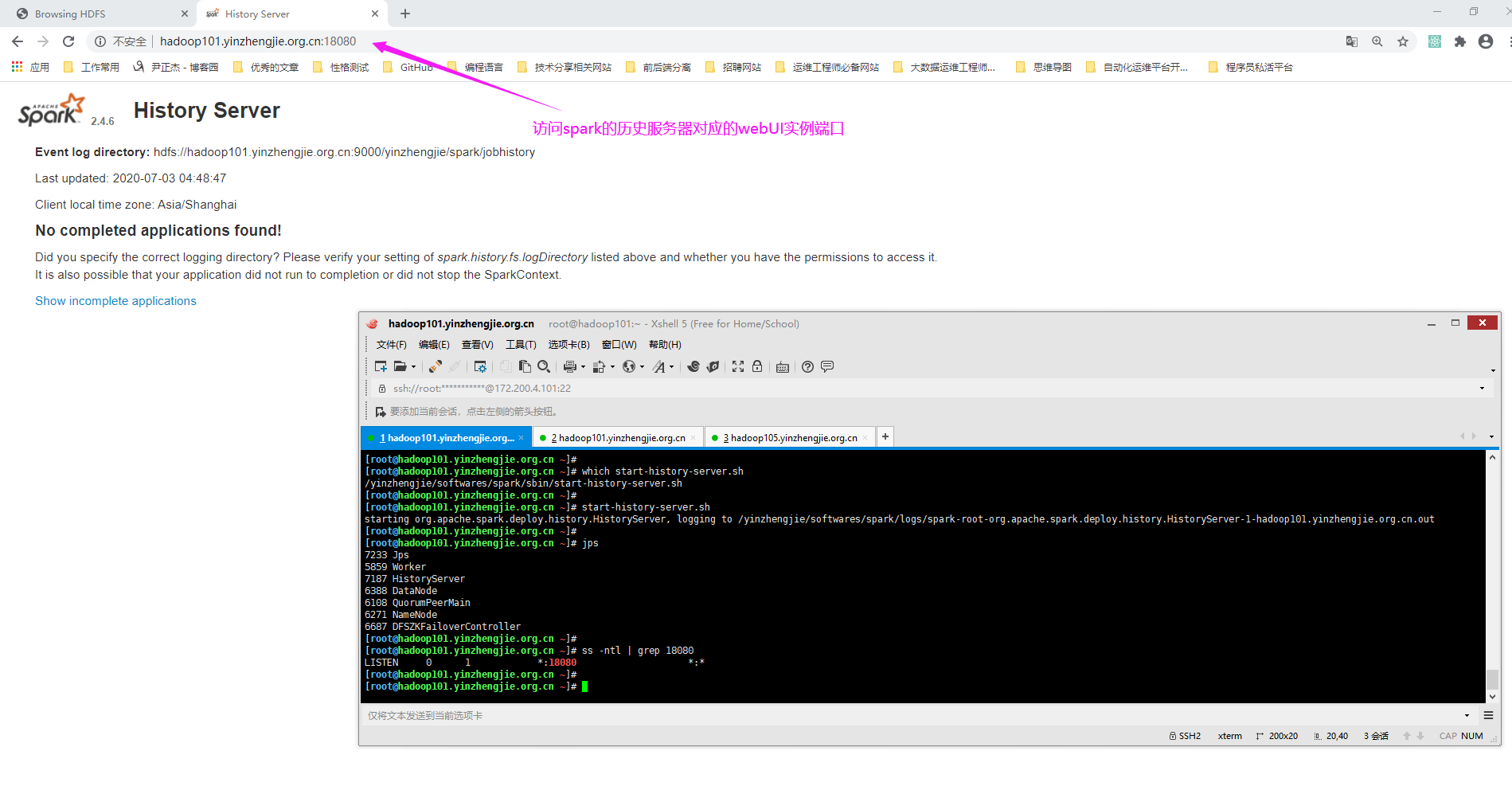

5>. Start the history server

[root@hadoop101.yinzhengjie.org.cn ~]# which start-history-server.sh
/yinzhengjie/softwares/spark/sbin/start-history-server.sh
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# start-history-server.sh
starting org.apache.spark.deploy.history.HistoryServer, logging to /yinzhengjie/softwares/spark/logs/spark-root-org.apache.spark.deploy.history.HistoryServer-1-hadoop101.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# jps
7233 Jps
5859 Worker
7187 HistoryServer
6388 DataNode
6108 QuorumPeerMain
6271 NameNode
6687 DFSZKFailoverController
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# ss -ntl | grep 18080
LISTEN 0 1 *:18080 *:*
[root@hadoop101.yinzhengjie.org.cn ~]#
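`ss` only shows that the port is open. As an extra check, here is a sketch that polls the history server's REST endpoint `/api/v1/applications` (part of Spark's monitoring REST API) until it answers. The function name, the `CURL_CMD` override and the default of 30 attempts one second apart are my own assumptions, not part of Spark.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): wait until the history server answers HTTP
# requests. CURL_CMD can be overridden, e.g. for testing without a server.
CURL_CMD=${CURL_CMD:-curl}

wait_for_history_server() {
  local base_url=${1%/} attempts=${2:-30} i=1
  while [ "$i" -le "$attempts" ]; do
    # -s: no progress output; -f: treat HTTP errors (4xx/5xx) as failures
    if "$CURL_CMD" -sf -o /dev/null "$base_url/api/v1/applications"; then
      echo "history server is up: $base_url"
      return 0
    fi
    # pause between attempts, but not after the last one
    if [ "$i" -lt "$attempts" ]; then
      sleep 1
    fi
    i=$((i + 1))
  done
  echo "history server not reachable: $base_url" >&2
  return 1
}

# Example:
#   wait_for_history_server http://hadoop101.yinzhengjie.org.cn:18080
```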

III. Submit a job again and check the Spark history server web UI

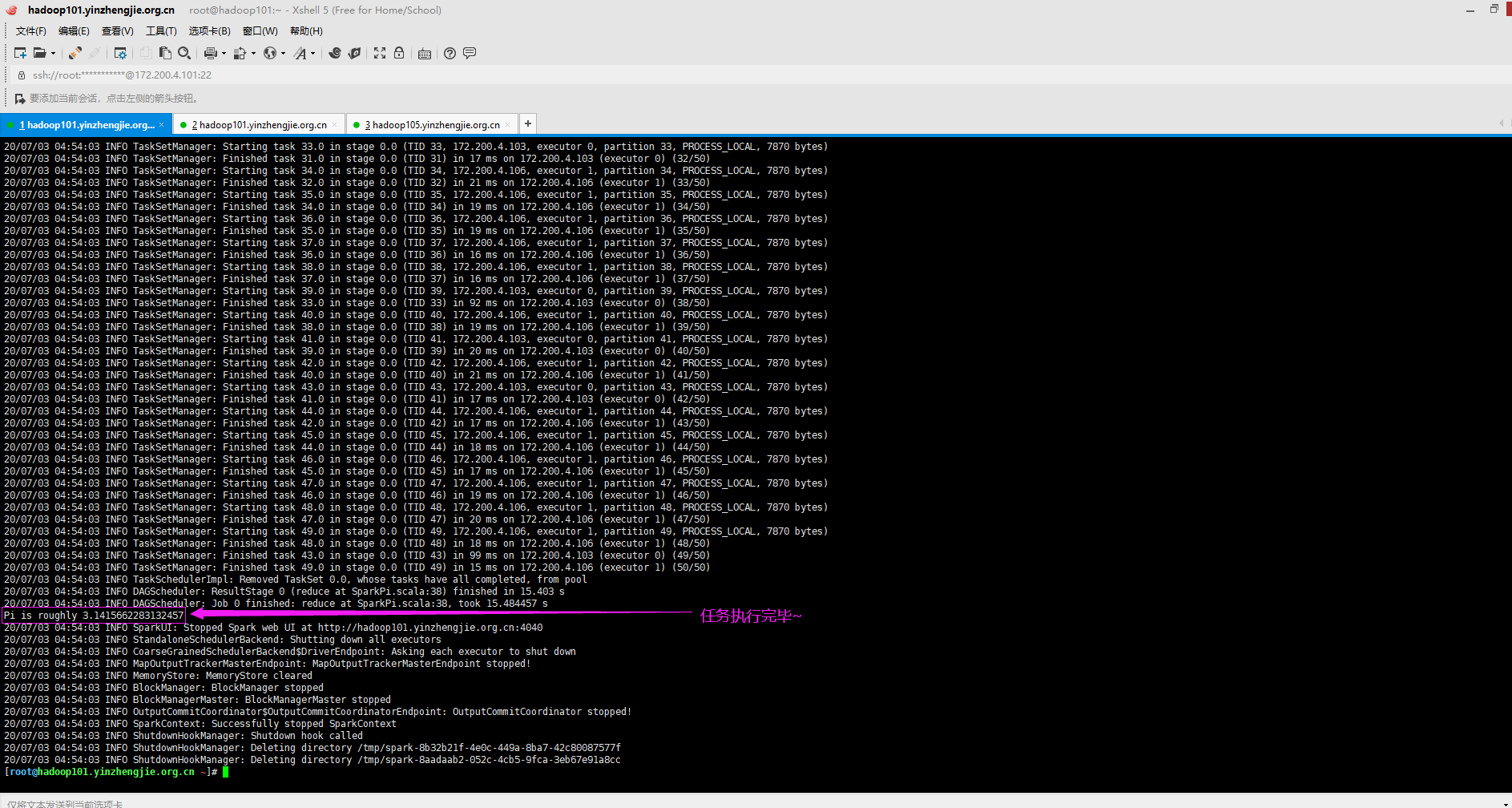

1>. Run a Spark job (again using the official Pi example)

[root@hadoop101.yinzhengjie.org.cn ~]# spark-submit \
> --class org.apache.spark.examples.SparkPi \
> --master spark://hadoop105.yinzhengjie.org.cn:6000 \
> --executor-memory 1G \
> --total-executor-cores 2 \
> /yinzhengjie/softwares/spark/examples/jars/spark-examples_2.11-2.4.6.jar \
> 50
20/07/03 04:53:38 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
20/07/03 04:53:39 INFO SparkContext: Running Spark version 2.4.6
20/07/03 04:53:39 INFO SparkContext: Submitted application: Spark Pi
20/07/03 04:53:39 INFO SecurityManager: Changing view acls to: root
20/07/03 04:53:39 INFO SecurityManager: Changing modify acls to: root
20/07/03 04:53:39 INFO SecurityManager: Changing view acls groups to:
20/07/03 04:53:39 INFO SecurityManager: Changing modify acls groups to:
20/07/03 04:53:39 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()
20/07/03 04:53:40 INFO Utils: Successfully started service 'sparkDriver' on port 18213.
20/07/03 04:53:40 INFO SparkEnv: Registering MapOutputTracker
20/07/03 04:53:40 INFO SparkEnv: Registering BlockManagerMaster
20/07/03 04:53:40 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
20/07/03 04:53:40 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
20/07/03 04:53:40 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-0568623f-b300-40b7-92b4-b31fbbcf4cc9
20/07/03 04:53:40 INFO MemoryStore: MemoryStore started with capacity 366.3 MB
20/07/03 04:53:40 INFO SparkEnv: Registering OutputCommitCoordinator
20/07/03 04:53:41 INFO Utils: Successfully started service 'SparkUI' on port 4040.
20/07/03 04:53:41 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hadoop101.yinzhengjie.org.cn:4040
20/07/03 04:53:41 INFO SparkContext: Added JAR file:/yinzhengjie/softwares/spark/examples/jars/spark-examples_2.11-2.4.6.jar at spark://hadoop101.yinzhengjie.org.cn:18213/jars/spark-examples_2.11-2.4.6.jar with timestamp 1593723221137
20/07/03 04:53:41 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://hadoop105.yinzhengjie.org.cn:6000...
20/07/03 04:53:41 INFO TransportClientFactory: Successfully created connection to hadoop105.yinzhengjie.org.cn/172.200.4.105:6000 after 27 ms (0 ms spent in bootstraps)
20/07/03 04:53:42 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20200703045341-0000
20/07/03 04:53:42 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 27931.
20/07/03 04:53:42 INFO NettyBlockTransferService: Server created on hadoop101.yinzhengjie.org.cn:27931
20/07/03 04:53:42 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
20/07/03 04:53:42 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 27931, None)
20/07/03 04:53:42 INFO BlockManagerMasterEndpoint: Registering block manager hadoop101.yinzhengjie.org.cn:27931 with 366.3 MB RAM, BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 27931, None)
20/07/03 04:53:42 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20200703045341-0000/0 on worker-20200703042422-172.200.4.103-34000 (172.200.4.103:34000) with 1 core(s)
20/07/03 04:53:42 INFO StandaloneSchedulerBackend: Granted executor ID app-20200703045341-0000/0 on hostPort 172.200.4.103:34000 with 1 core(s), 1024.0 MB RAM
20/07/03 04:53:42 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20200703045341-0000/1 on worker-20200703042422-172.200.4.106-36143 (172.200.4.106:36143) with 1 core(s)
20/07/03 04:53:42 INFO StandaloneSchedulerBackend: Granted executor ID app-20200703045341-0000/1 on hostPort 172.200.4.106:36143 with 1 core(s), 1024.0 MB RAM
20/07/03 04:53:42 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 27931, None)
20/07/03 04:53:42 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 27931, None)
20/07/03 04:53:44 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20200703045341-0000/0 is now RUNNING
20/07/03 04:53:44 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20200703045341-0000/1 is now RUNNING
20/07/03 04:53:47 INFO EventLoggingListener: Logging events to hdfs://hadoop101.yinzhengjie.org.cn:9000/yinzhengjie/spark/jobhistory/app-20200703045341-0000
20/07/03 04:53:47 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0
20/07/03 04:53:47 INFO SparkContext: Starting job: reduce at SparkPi.scala:38
20/07/03 04:53:47 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 50 output partitions
20/07/03 04:53:47 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)
20/07/03 04:53:47 INFO DAGScheduler: Parents of final stage: List()
20/07/03 04:53:47 INFO DAGScheduler: Missing parents: List()
20/07/03 04:53:47 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents
20/07/03 04:53:48 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.0 KB, free 366.3 MB)
20/07/03 04:53:48 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1381.0 B, free 366.3 MB)
20/07/03 04:53:48 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop101.yinzhengjie.org.cn:27931 (size: 1381.0 B, free: 366.3 MB)
20/07/03 04:53:48 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1163
20/07/03 04:53:48 INFO DAGScheduler: Submitting 50 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))
20/07/03 04:53:48 INFO TaskSchedulerImpl: Adding task set 0.0 with 50 tasks
20/07/03 04:53:53 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.200.4.103:51801) with ID 0
20/07/03 04:53:53 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.200.4.103, executor 0, partition 0, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:53:53 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.200.4.106:46019) with ID 1
20/07/03 04:53:53 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 172.200.4.106, executor 1, partition 1, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:53:55 INFO BlockManagerMasterEndpoint: Registering block manager 172.200.4.103:13366 with 366.3 MB RAM, BlockManagerId(0, 172.200.4.103, 13366, None)
20/07/03 04:53:56 INFO BlockManagerMasterEndpoint: Registering block manager 172.200.4.106:18965 with 366.3 MB RAM, BlockManagerId(1, 172.200.4.106, 18965, None)
20/07/03 04:54:00 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.200.4.103:13366 (size: 1381.0 B, free: 366.3 MB)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, 172.200.4.103, executor 0, partition 2, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 9389 ms on 172.200.4.103 (executor 0) (1/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, 172.200.4.103, executor 0, partition 3, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 35 ms on 172.200.4.103 (executor 0) (2/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, 172.200.4.103, executor 0, partition 4, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 21 ms on 172.200.4.103 (executor 0) (3/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, 172.200.4.103, executor 0, partition 5, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 23 ms on 172.200.4.103 (executor 0) (4/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, 172.200.4.103, executor 0, partition 6, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 28 ms on 172.200.4.103 (executor 0) (5/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, 172.200.4.103, executor 0, partition 7, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 24 ms on 172.200.4.103 (executor 0) (6/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, 172.200.4.103, executor 0, partition 8, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 21 ms on 172.200.4.103 (executor 0) (7/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, 172.200.4.103, executor 0, partition 9, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 21 ms on 172.200.4.103 (executor 0) (8/50)
20/07/03 04:54:02 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.200.4.106:18965 (size: 1381.0 B, free: 366.3 MB)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 10.0 in stage 0.0 (TID 10, 172.200.4.103, executor 0, partition 10, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 21 ms on 172.200.4.103 (executor 0) (9/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 11.0 in stage 0.0 (TID 11, 172.200.4.103, executor 0, partition 11, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 10.0 in stage 0.0 (TID 10) in 22 ms on 172.200.4.103 (executor 0) (10/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 12.0 in stage 0.0 (TID 12, 172.200.4.103, executor 0, partition 12, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 11.0 in stage 0.0 (TID 11) in 22 ms on 172.200.4.103 (executor 0) (11/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 13.0 in stage 0.0 (TID 13, 172.200.4.103, executor 0, partition 13, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 12.0 in stage 0.0 (TID 12) in 16 ms on 172.200.4.103 (executor 0) (12/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 14.0 in stage 0.0 (TID 14, 172.200.4.103, executor 0, partition 14, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 13.0 in stage 0.0 (TID 13) in 16 ms on 172.200.4.103 (executor 0) (13/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 15.0 in stage 0.0 (TID 15, 172.200.4.103, executor 0, partition 15, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 14.0 in stage 0.0 (TID 14) in 23 ms on 172.200.4.103 (executor 0) (14/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 16.0 in stage 0.0 (TID 16, 172.200.4.103, executor 0, partition 16, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 15.0 in stage 0.0 (TID 15) in 30 ms on 172.200.4.103 (executor 0) (15/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 17.0 in stage 0.0 (TID 17, 172.200.4.103, executor 0, partition 17, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 16.0 in stage 0.0 (TID 16) in 21 ms on 172.200.4.103 (executor 0) (16/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 18.0 in stage 0.0 (TID 18, 172.200.4.106, executor 1, partition 18, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 19.0 in stage 0.0 (TID 19, 172.200.4.103, executor 0, partition 19, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 9076 ms on 172.200.4.106 (executor 1) (17/50)
20/07/03 04:54:02 INFO TaskSetManager: Finished task 17.0 in stage 0.0 (TID 17) in 19 ms on 172.200.4.103 (executor 0) (18/50)
20/07/03 04:54:02 INFO TaskSetManager: Starting task 20.0 in stage 0.0 (TID 20, 172.200.4.103, executor 0, partition 20, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 19.0 in stage 0.0 (TID 19) in 16 ms on 172.200.4.103 (executor 0) (19/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 21.0 in stage 0.0 (TID 21, 172.200.4.106, executor 1, partition 21, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 18.0 in stage 0.0 (TID 18) in 27 ms on 172.200.4.106 (executor 1) (20/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 22.0 in stage 0.0 (TID 22, 172.200.4.103, executor 0, partition 22, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 20.0 in stage 0.0 (TID 20) in 16 ms on 172.200.4.103 (executor 0) (21/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 23.0 in stage 0.0 (TID 23, 172.200.4.106, executor 1, partition 23, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 21.0 in stage 0.0 (TID 21) in 22 ms on 172.200.4.106 (executor 1) (22/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 24.0 in stage 0.0 (TID 24, 172.200.4.103, executor 0, partition 24, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 22.0 in stage 0.0 (TID 22) in 23 ms on 172.200.4.103 (executor 0) (23/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 25.0 in stage 0.0 (TID 25, 172.200.4.106, executor 1, partition 25, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 23.0 in stage 0.0 (TID 23) in 26 ms on 172.200.4.106 (executor 1) (24/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 26.0 in stage 0.0 (TID 26, 172.200.4.103, executor 0, partition 26, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 24.0 in stage 0.0 (TID 24) in 20 ms on 172.200.4.103 (executor 0) (25/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 27.0 in stage 0.0 (TID 27, 172.200.4.103, executor 0, partition 27, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 26.0 in stage 0.0 (TID 26) in 15 ms on 172.200.4.103 (executor 0) (26/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 28.0 in stage 0.0 (TID 28, 172.200.4.106, executor 1, partition 28, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 25.0 in stage 0.0 (TID 25) in 26 ms on 172.200.4.106 (executor 1) (27/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 29.0 in stage 0.0 (TID 29, 172.200.4.103, executor 0, partition 29, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 27.0 in stage 0.0 (TID 27) in 18 ms on 172.200.4.103 (executor 0) (28/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 30.0 in stage 0.0 (TID 30, 172.200.4.106, executor 1, partition 30, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 28.0 in stage 0.0 (TID 28) in 21 ms on 172.200.4.106 (executor 1) (29/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 31.0 in stage 0.0 (TID 31, 172.200.4.103, executor 0, partition 31, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 29.0 in stage 0.0 (TID 29) in 19 ms on 172.200.4.103 (executor 0) (30/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 32.0 in stage 0.0 (TID 32, 172.200.4.106, executor 1, partition 32, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 30.0 in stage 0.0 (TID 30) in 19 ms on 172.200.4.106 (executor 1) (31/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 33.0 in stage 0.0 (TID 33, 172.200.4.103, executor 0, partition 33, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 31.0 in stage 0.0 (TID 31) in 17 ms on 172.200.4.103 (executor 0) (32/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 34.0 in stage 0.0 (TID 34, 172.200.4.106, executor 1, partition 34, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 32.0 in stage 0.0 (TID 32) in 21 ms on 172.200.4.106 (executor 1) (33/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 35.0 in stage 0.0 (TID 35, 172.200.4.106, executor 1, partition 35, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 34.0 in stage 0.0 (TID 34) in 19 ms on 172.200.4.106 (executor 1) (34/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 36.0 in stage 0.0 (TID 36, 172.200.4.106, executor 1, partition 36, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 35.0 in stage 0.0 (TID 35) in 19 ms on 172.200.4.106 (executor 1) (35/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 37.0 in stage 0.0 (TID 37, 172.200.4.106, executor 1, partition 37, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 36.0 in stage 0.0 (TID 36) in 16 ms on 172.200.4.106 (executor 1) (36/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 38.0 in stage 0.0 (TID 38, 172.200.4.106, executor 1, partition 38, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 37.0 in stage 0.0 (TID 37) in 16 ms on 172.200.4.106 (executor 1) (37/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 39.0 in stage 0.0 (TID 39, 172.200.4.103, executor 0, partition 39, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 33.0 in stage 0.0 (TID 33) in 92 ms on 172.200.4.103 (executor 0) (38/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 40.0 in stage 0.0 (TID 40, 172.200.4.106, executor 1, partition 40, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 38.0 in stage 0.0 (TID 38) in 19 ms on 172.200.4.106 (executor 1) (39/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 41.0 in stage 0.0 (TID 41, 172.200.4.103, executor 0, partition 41, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 39.0 in stage 0.0 (TID 39) in 20 ms on 172.200.4.103 (executor 0) (40/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 42.0 in stage 0.0 (TID 42, 172.200.4.106, executor 1, partition 42, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 40.0 in stage 0.0 (TID 40) in 21 ms on 172.200.4.106 (executor 1) (41/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 43.0 in stage 0.0 (TID 43, 172.200.4.103, executor 0, partition 43, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 41.0 in stage 0.0 (TID 41) in 17 ms on 172.200.4.103 (executor 0) (42/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 44.0 in stage 0.0 (TID 44, 172.200.4.106, executor 1, partition 44, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 42.0 in stage 0.0 (TID 42) in 17 ms on 172.200.4.106 (executor 1) (43/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 45.0 in stage 0.0 (TID 45, 172.200.4.106, executor 1, partition 45, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 44.0 in stage 0.0 (TID 44) in 18 ms on 172.200.4.106 (executor 1) (44/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 46.0 in stage 0.0 (TID 46, 172.200.4.106, executor 1, partition 46, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 45.0 in stage 0.0 (TID 45) in 17 ms on 172.200.4.106 (executor 1) (45/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 47.0 in stage 0.0 (TID 47, 172.200.4.106, executor 1, partition 47, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 46.0 in stage 0.0 (TID 46) in 19 ms on 172.200.4.106 (executor 1) (46/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 48.0 in stage 0.0 (TID 48, 172.200.4.106, executor 1, partition 48, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 47.0 in stage 0.0 (TID 47) in 20 ms on 172.200.4.106 (executor 1) (47/50)
20/07/03 04:54:03 INFO TaskSetManager: Starting task 49.0 in stage 0.0 (TID 49, 172.200.4.106, executor 1, partition 49, PROCESS_LOCAL, 7870 bytes)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 48.0 in stage 0.0 (TID 48) in 18 ms on 172.200.4.106 (executor 1) (48/50)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 43.0 in stage 0.0 (TID 43) in 99 ms on 172.200.4.103 (executor 0) (49/50)
20/07/03 04:54:03 INFO TaskSetManager: Finished task 49.0 in stage 0.0 (TID 49) in 15 ms on 172.200.4.106 (executor 1) (50/50)
20/07/03 04:54:03 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
20/07/03 04:54:03 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 15.403 s
20/07/03 04:54:03 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 15.484457 s
Pi is roughly 3.1415662283132457
20/07/03 04:54:03 INFO SparkUI: Stopped Spark web UI at http://hadoop101.yinzhengjie.org.cn:4040
20/07/03 04:54:03 INFO StandaloneSchedulerBackend: Shutting down all executors
20/07/03 04:54:03 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down
20/07/03 04:54:03 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
20/07/03 04:54:03 INFO MemoryStore: MemoryStore cleared
20/07/03 04:54:03 INFO BlockManager: BlockManager stopped
20/07/03 04:54:03 INFO BlockManagerMaster: BlockManagerMaster stopped
20/07/03 04:54:03 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
20/07/03 04:54:03 INFO SparkContext: Successfully stopped SparkContext
20/07/03 04:54:03 INFO ShutdownHookManager: Shutdown hook called
20/07/03 04:54:03 INFO ShutdownHookManager: Deleting directory /tmp/spark-8b32b21f-4e0c-449a-8ba7-42c80087577f
20/07/03 04:54:03 INFO ShutdownHookManager: Deleting directory /tmp/spark-8aadaab2-052c-4cb5-9fca-3eb67e91a8cc
[root@hadoop101.yinzhengjie.org.cn ~]#
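The line `EventLoggingListener: Logging events to ...` in the output above is the quickest way to see where this run's event log went. The sketch below pulls that path out of a saved driver log. The function name is my own. It relies on INFO-level messages being shown and on the message text seen in this Spark 2.4.6 run; if either differs, it prints nothing.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read driver log text on stdin and print the
# event log location(s) from "EventLoggingListener: Logging events to <path>"
# lines (normally one per application).
event_log_path() {
  sed -n 's/.*EventLoggingListener: Logging events to \([^ ]*\).*/\1/p'
}

# Example: with the default log4j profile, spark-submit logs to stderr,
# hence 2>&1. The file name /tmp/sparkpi.log is only an illustration.
#   spark-submit --class org.apache.spark.examples.SparkPi ... 2>&1 | tee /tmp/sparkpi.log
#   event_log_path < /tmp/sparkpi.log
```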

2>. Check whether data has been written to the Spark log directory on HDFS
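A plain listing works: `hdfs dfs -ls /yinzhengjie/spark/jobhistory`. To check one specific application, here is a sketch built on `hdfs dfs -test -e`. It assumes uncompressed event logs (the default). It also assumes that Spark 2.x writes a running application's log as `<appId>.inprogress` and renames it to `<appId>` when the application stops cleanly. `event_log_state` and the `HDFS_CMD` override are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): report whether an application's event log is
# present in an HDFS directory and whether Spark has finished writing it.
# Prints complete / inprogress / missing; returns 1 only for "missing".
HDFS_CMD=${HDFS_CMD:-hdfs}

event_log_state() {
  local dir=${1%/} app_id=$2
  if "$HDFS_CMD" dfs -test -e "$dir/$app_id"; then
    echo "complete"
  elif "$HDFS_CMD" dfs -test -e "$dir/$app_id.inprogress"; then
    echo "inprogress"
  else
    echo "missing"
    return 1
  fi
}

# Example, with the application ID from the spark-submit output above:
#   event_log_state /yinzhengjie/spark/jobhistory app-20200703045341-0000
```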

3>. Refresh the history server web UI again: the application's log now shows up
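Besides the browser, the history server's REST API at `/api/v1/applications` returns the same application list. Below is a sketch that checks whether a given application ID is listed. The function name and the `CURL_CMD` override are hypothetical. It greps the JSON text instead of parsing it, which is enough for a quick check; a JSON tool such as `jq` would be more robust.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): succeed if the history server's application
# list contains an entry whose "id" equals APP_ID.
CURL_CMD=${CURL_CMD:-curl}

history_lists_app() {
  local base_url=${1%/} app_id=$2
  # match e.g.  "id" : "app-20200703045341-0000"  with flexible spacing
  "$CURL_CMD" -sf "$base_url/api/v1/applications" |
    grep -q "\"id\" *: *\"$app_id\""
}

# Example:
#   history_lists_app http://hadoop101.yinzhengjie.org.cn:18080 app-20200703045341-0000 && echo listed
```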