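Deploying Spark in YARN Mode: A Hands-On Walkthrough

Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued legally.

I. Start the Hadoop cluster

1>. Modify the YARN configuration file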

[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml
<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>
    <!-- Site specific YARN configuration properties -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
        <description>How reducers fetch map output</description>
    </property>
    <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
        <description>Enable ResourceManager HA</description>
    </property>
    <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>yinzhengjie-yarn-ha</value>
        <description>Identifies the cluster, so that an RM never takes over as active for a different cluster</description>
    </property>
    <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm101,rm105</value>
        <description>List of logical ResourceManager IDs</description>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname.rm101</name>
        <value>hadoop101.yinzhengjie.org.cn</value>
        <description>Real host behind the logical ID rm101, i.e. the ID-to-host mapping</description>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname.rm105</name>
        <value>hadoop105.yinzhengjie.org.cn</value>
        <description>Real host behind the logical ID rm105, i.e. the ID-to-host mapping</description>
    </property>
    <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>hadoop101.yinzhengjie.org.cn:2181,hadoop102.yinzhengjie.org.cn:2181,hadoop103.yinzhengjie.org.cn:2181</value>
        <description>Addresses of the ZooKeeper ensemble</description>
    </property>
    <property>
        <name>yarn.resourcemanager.recovery.enabled</name>
        <value>true</value>
        <description>Enable ResourceManager state recovery, so that a restarted or newly active RM restores application state</description>
    </property>
    <property>
        <name>yarn.resourcemanager.store.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
        <description>Store the ResourceManager state in the ZooKeeper ensemble</description>
    </property>
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
        <description>Whether log aggregation is enabled. The default is false (disabled); true turns it on</description>
    </property>
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
        <description>How long to keep aggregated logs before deleting them, in seconds. The default of -1 disables deletion. Setting this too small floods the NameNode with requests</description>
    </property>
    <property>
        <name>yarn.log-aggregation.retain-check-interval-seconds</name>
        <value>3600</value>
        <description>Interval in seconds between aggregated-log retention checks. If set to 0 or a negative value, it is computed as one tenth of the log retention time. Setting this too small floods the NameNode with requests</description>
    </property>
    <!-- Settings for Spark on YARN -->
    <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>false</value>
        <description>Whether to run a thread that checks how much physical memory each task uses and kills any task exceeding its allocation. The default is true</description>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
        <description>Whether to run a thread that checks how much virtual memory each task uses and kills any task exceeding its allocation. The default is true</description>
    </property>
</configuration>
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# rsync-hadoop.sh /yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml
******* [hadoop102.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml] *******
Command executed successfully
******* [hadoop103.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml] *******
Command executed successfully
******* [hadoop104.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml] *******
Command executed successfully
******* [hadoop105.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml] *******
Command executed successfully
******* [hadoop106.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml] *******
Command executed successfully
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]#
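Once the file has been distributed, the HA settings can be spot-checked on any node. The following is a minimal sketch rather than part of the original walkthrough: the function name `get_yarn_prop` is hypothetical, and it assumes each `<name>` and `<value>` element sits on its own line, as in the usual Hadoop file layout.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop-style XML file. Assumes one <name>/<value> element per line.
get_yarn_prop() {
  local file="$1" name="$2"
  awk -v want="$name" '
    # Remember that the wanted <name> line has just been seen.
    index($0, "<name>" want "</name>") { found = 1; next }
    # The first <value> line after it holds the setting.
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$file"
}

# Path taken from the walkthrough; skipped when the file does not exist.
conf=/yinzhengjie/softwares/ha/etc/hadoop/yarn-site.xml
if [ -f "$conf" ]; then
  get_yarn_prop "$conf" yarn.resourcemanager.ha.rm-ids
  get_yarn_prop "$conf" yarn.resourcemanager.zk-address
fi
```

Running the same check on hadoop105 would confirm that the second ResourceManager host received the identical settings. An unknown property name simply prints nothing.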

2>. Start the ZooKeeper cluster

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8239 Jps
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8745 Jps
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9677 Jps
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6486 Jps
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8208 Jps
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6730 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# zookeeper.sh start
Starting service
========== zookeeper101.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== zookeeper102.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
========== zookeeper103.yinzhengjie.org.cn zkServer.sh start ================
/yinzhengjie/softwares/jdk1.8.0_201/bin/java
ZooKeeper JMX enabled by default
Using config: /yinzhengjie/softwares/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10992 QuorumPeerMain
11133 Jps
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9063 Jps
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8630 QuorumPeerMain
8716 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8754 Jps
8661 QuorumPeerMain
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6918 Jps
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
7296 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#

3>. Start the HDFS cluster


[root@hadoop101.yinzhengjie.org.cn ~]# start-dfs.sh
Starting namenodes on [hadoop101.yinzhengjie.org.cn hadoop106.yinzhengjie.org.cn]
hadoop106.yinzhengjie.org.cn: starting namenode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-namenode-hadoop106.yinzhengjie.org.cn.out
hadoop101.yinzhengjie.org.cn: starting namenode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-namenode-hadoop101.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop102.yinzhengjie.org.cn.out
hadoop104.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop104.yinzhengjie.org.cn.out
hadoop105.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop105.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop106.yinzhengjie.org.cn.out
hadoop101.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop101.yinzhengjie.org.cn.out
hadoop103.yinzhengjie.org.cn: starting datanode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-datanode-hadoop103.yinzhengjie.org.cn.out
Starting journal nodes [hadoop103.yinzhengjie.org.cn hadoop104.yinzhengjie.org.cn hadoop102.yinzhengjie.org.cn]
hadoop103.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop103.yinzhengjie.org.cn.out
hadoop104.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop104.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting journalnode, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-journalnode-hadoop102.yinzhengjie.org.cn.out
Starting ZK Failover Controllers on NN hosts [hadoop101.yinzhengjie.org.cn hadoop106.yinzhengjie.org.cn]
hadoop101.yinzhengjie.org.cn: starting zkfc, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-zkfc-hadoop101.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting zkfc, logging to /yinzhengjie/softwares/ha/logs/hadoop-root-zkfc-hadoop106.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8630 QuorumPeerMain
8982 Jps
8760 DataNode
8861 JournalNode
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6962 DataNode
7063 JournalNode
7179 Jps
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9108 DataNode
9238 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8899 JournalNode
9011 Jps
8661 QuorumPeerMain
8798 DataNode
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10992 QuorumPeerMain
11873 Jps
11273 NameNode
11390 DataNode
11710 DFSZKFailoverController
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
7568 DFSZKFailoverController
7685 Jps
7417 DataNode
7340 NameNode
[root@hadoop101.yinzhengjie.org.cn ~]#

4>. Start the YARN cluster


[root@hadoop101.yinzhengjie.org.cn ~]# start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-resourcemanager-hadoop101.yinzhengjie.org.cn.out
hadoop102.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop102.yinzhengjie.org.cn.out
hadoop103.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop103.yinzhengjie.org.cn.out
hadoop104.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop104.yinzhengjie.org.cn.out
hadoop105.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop105.yinzhengjie.org.cn.out
hadoop106.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop106.yinzhengjie.org.cn.out
hadoop101.yinzhengjie.org.cn: starting nodemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-nodemanager-hadoop101.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8899 JournalNode
9060 NodeManager
8661 QuorumPeerMain
9242 Jps
8798 DataNode
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8630 QuorumPeerMain
9031 NodeManager
8760 DataNode
9209 Jps
8861 JournalNode
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9108 DataNode
9286 NodeManager
9467 Jps
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6962 DataNode
7063 JournalNode
7228 NodeManager
7406 Jps
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10992 QuorumPeerMain
12083 NodeManager
11959 ResourceManager
12519 Jps
11273 NameNode
11390 DataNode
11710 DFSZKFailoverController
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
7568 DFSZKFailoverController
7417 DataNode
7739 NodeManager
7340 NameNode
7917 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop105.yinzhengjie.org.cn ~]# jps
9108 DataNode
9286 NodeManager
9481 Jps
[root@hadoop105.yinzhengjie.org.cn ~]#
[root@hadoop105.yinzhengjie.org.cn ~]# yarn-daemon.sh start resourcemanager
starting resourcemanager, logging to /yinzhengjie/softwares/ha/logs/yarn-root-resourcemanager-hadoop105.yinzhengjie.org.cn.out
[root@hadoop105.yinzhengjie.org.cn ~]#
[root@hadoop105.yinzhengjie.org.cn ~]# jps
9520 ResourceManager
9108 DataNode
9286 NodeManager
9582 Jps
[root@hadoop105.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8899 JournalNode
9060 NodeManager
8661 QuorumPeerMain
9302 Jps
8798 DataNode
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9520 ResourceManager
9108 DataNode
9286 NodeManager
9647 Jps
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10992 QuorumPeerMain
12083 NodeManager
11959 ResourceManager
11273 NameNode
12651 Jps
11390 DataNode
11710 DFSZKFailoverController
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
6962 DataNode
7063 JournalNode
7465 Jps
7228 NodeManager
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9267 Jps
8630 QuorumPeerMain
9031 NodeManager
8760 DataNode
8861 JournalNode
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
7568 DFSZKFailoverController
7417 DataNode
7978 Jps
7739 NodeManager
7340 NameNode
[root@hadoop101.yinzhengjie.org.cn ~]#
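Reading the `jps` listing by eye gets tedious on six nodes, so the same check could be scripted. This is a rough sketch under stated assumptions: `count_daemon` is a hypothetical helper that counts matching process names in `jps` output read from stdin, and the live part only runs where both `ansible` and `yarn` are installed. `yarn rmadmin -getServiceState` is the standard YARN HA command for asking whether a ResourceManager is active or standby; the IDs `rm101` and `rm105` come from `yarn-site.xml` above.

```shell
#!/bin/sh
# Hypothetical helper: count lines of `jps` output (read from stdin) whose
# second field is exactly the given daemon name.
count_daemon() {
  awk -v d="$1" '$2 == d { n++ } END { print n + 0 }'
}

# Only query the live cluster when the tools are actually installed.
if command -v ansible >/dev/null 2>&1 && command -v yarn >/dev/null 2>&1; then
  out=$(ansible all -m shell -a 'jps')
  echo "ResourceManager: $(printf '%s\n' "$out" | count_daemon ResourceManager)"
  echo "NodeManager:     $(printf '%s\n' "$out" | count_daemon NodeManager)"
  # Ask YARN which ResourceManager is currently active.
  for id in rm101 rm105; do
    echo "$id: $(yarn rmadmin -getServiceState "$id")"
  done
fi
```

For the cluster in this walkthrough one would expect two ResourceManager processes and six NodeManager processes, with one of the two IDs reporting `active` and the other `standby`.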

II. Configure Spark on YARN

1>. Modify the Spark configuration file

[root@hadoop101.yinzhengjie.org.cn ~]# cp /yinzhengjie/softwares/spark/conf/spark-env.sh.template /yinzhengjie/softwares/spark/conf/spark-env.sh    # copy the default configuration template
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# egrep -v "^*#|^$" /yinzhengjie/softwares/spark/conf/spark-env.sh
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/spark/conf/spark-env.sh    # add the path to the YARN configuration directory; if Spark is installed on several hosts, distribute this file to each of them
[root@hadoop101.yinzhengjie.org.cn ~]#
[root@hadoop101.yinzhengjie.org.cn ~]# egrep -v "^*#|^$" /yinzhengjie/softwares/spark/conf/spark-env.sh
YARN_CONF_DIR=/yinzhengjie/softwares/ha/etc/hadoop
[root@hadoop101.yinzhengjie.org.cn ~]#
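The manual `vim` edit above could also be done non-interactively, which helps when the same change has to be repeated on several Spark hosts. The sketch below is an assumption-laden illustration, not the author's method: `ensure_line` is a hypothetical helper that appends a line only when an identical line is not already in the file, so running it twice does not duplicate the setting.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if it is not already
# present as an exact, whole-line match.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Paths from the walkthrough; skipped when Spark is not installed there.
spark_conf=/yinzhengjie/softwares/spark/conf
if [ -d "$spark_conf" ]; then
  ensure_line "$spark_conf/spark-env.sh" \
    "YARN_CONF_DIR=/yinzhengjie/softwares/ha/etc/hadoop"
  # Show the active (non-comment, non-empty) lines, as the egrep above does.
  grep -Ev '^[[:space:]]*#|^$' "$spark_conf/spark-env.sh"
fi
```

Spark reads the client-side Hadoop configuration from the directory named by `YARN_CONF_DIR` (or `HADOOP_CONF_DIR`), which is how `--master yarn` finds the ResourceManager without an explicit address.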

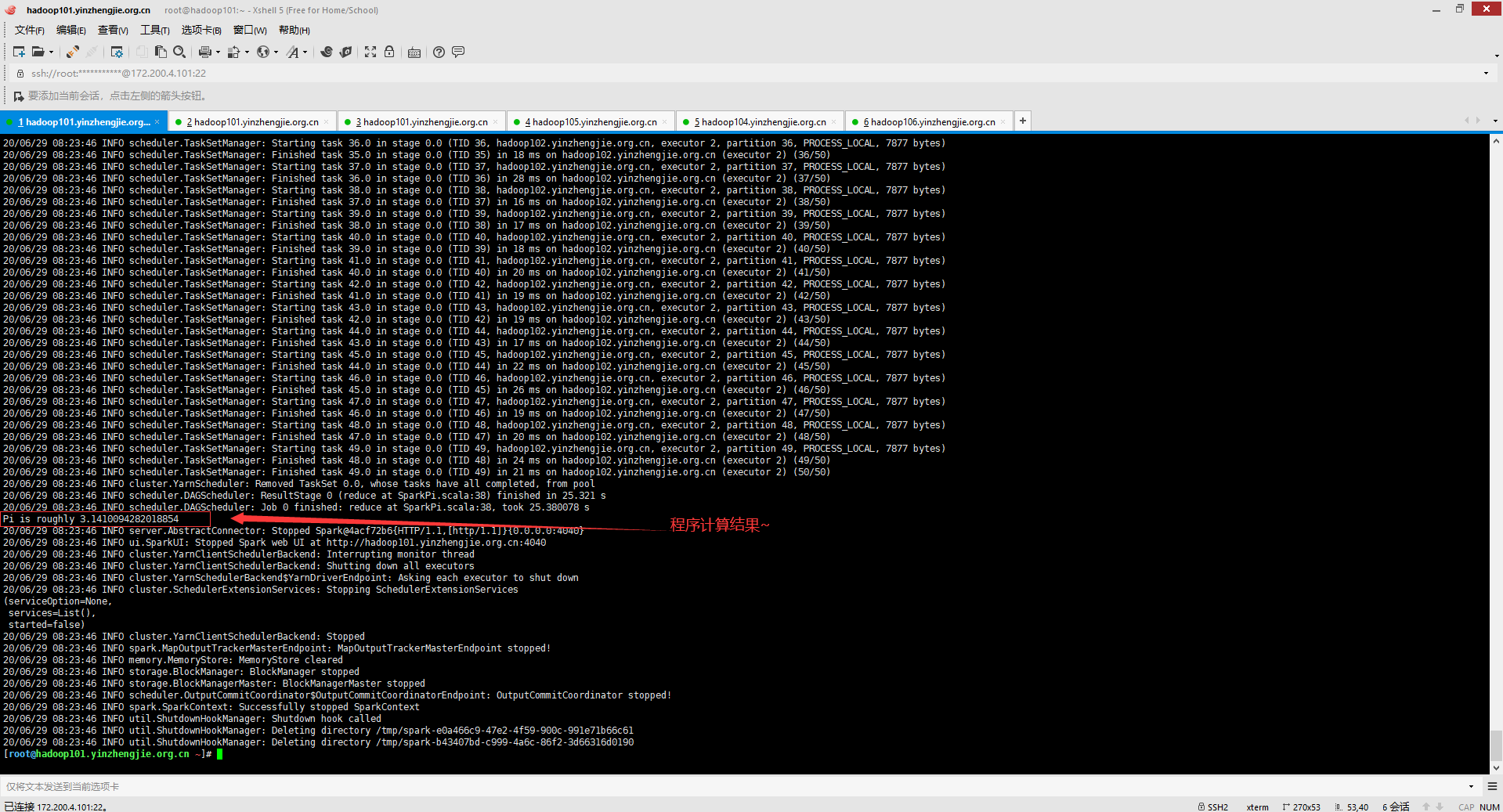

2>. Submit the SparkPi example from the command line
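One detail of the submission in this step is worth flagging: `spark-submit --help` lists `--total-executor-cores` under "Spark standalone and Mesos only", so on YARN it most likely has no effect. A YARN-oriented variant would size the job with `--num-executors` and `--executor-cores` instead. The sketch below only prints such a command; `build_submit_cmd` is a hypothetical wrapper, and the values 2 executors with 1 core each are illustrative assumptions, not figures from the original run.

```shell
#!/bin/sh
# Hypothetical wrapper: print a spark-submit command line for SparkPi on
# YARN. Arguments: number of executors, cores per executor, SparkPi slices.
build_submit_cmd() {
  local executors="$1" cores="$2" slices="$3"
  local jar=/yinzhengjie/softwares/spark/examples/jars/spark-examples_2.11-2.4.6.jar
  echo spark-submit \
    --class org.apache.spark.examples.SparkPi \
    --master yarn \
    --deploy-mode client \
    --executor-memory 1G \
    --num-executors "$executors" \
    --executor-cores "$cores" \
    "$jar" "$slices"
}

# Print the command for review. To run it on a node with Spark installed:
#   eval "$(build_submit_cmd 2 1 50)"
build_submit_cmd 2 1 50
```

Printing before running keeps the sketch harmless on machines without a cluster, and makes it easy to compare the flags against the command actually used in this step.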

[root@hadoop101.yinzhengjie.org.cn ~]# spark-submit \
> --class org.apache.spark.examples.SparkPi \
> --master yarn \
> --deploy-mode client \
> --executor-memory 1G \
> --total-executor-cores 2 \
> /yinzhengjie/softwares/spark/examples/jars/spark-examples_2.11-2.4.6.jar \
> 50
20/06/29 08:21:54 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
20/06/29 08:21:54 INFO spark.SparkContext: Running Spark version 2.4.6
20/06/29 08:21:54 INFO spark.SparkContext: Submitted application: Spark Pi
20/06/29 08:21:54 INFO spark.SecurityManager: Changing view acls to: root
20/06/29 08:21:54 INFO spark.SecurityManager: Changing modify acls to: root
20/06/29 08:21:54 INFO spark.SecurityManager: Changing view acls groups to:
20/06/29 08:21:54 INFO spark.SecurityManager: Changing modify acls groups to:
20/06/29 08:21:54 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()
20/06/29 08:21:56 INFO util.Utils: Successfully started service 'sparkDriver' on port 26581.
20/06/29 08:21:56 INFO spark.SparkEnv: Registering MapOutputTracker
20/06/29 08:21:56 INFO spark.SparkEnv: Registering BlockManagerMaster
20/06/29 08:21:56 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
20/06/29 08:21:56 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
20/06/29 08:21:56 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-ec685ccb-b618-4c52-aa7e-bf28e18ff9d8
20/06/29 08:21:56 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB
20/06/29 08:21:56 INFO spark.SparkEnv: Registering OutputCommitCoordinator
20/06/29 08:21:56 INFO util.log: Logging initialized @3426ms
20/06/29 08:21:56 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown
20/06/29 08:21:56 INFO server.Server: Started @3493ms
20/06/29 08:21:56 INFO server.AbstractConnector: Started ServerConnector@4acf72b6{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}
20/06/29 08:21:56 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@11ee02f8{/jobs,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2e6ee0bc{/jobs/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4201a617{/jobs/job,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1bb9aa43{/jobs/job/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@420bc288{/stages,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@df5f5c0{/stages/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@308a6984{/stages/stage,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@58cd06cb{/stages/stage/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3be8821f{/stages/pool,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@64b31700{/stages/pool/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b65e559{/storage,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@bae47a0{/storage/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@74a9c4b0{/storage/rdd,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@85ec632{/storage/rdd/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1c05a54d{/environment,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@65ef722a{/environment/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5fd9b663{/executors,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@214894fc{/executors/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@10567255{/executors/threadDump,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@e362c57{/executors/threadDump/json,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1c4ee95c{/static,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@492fc69e{/,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@117632cf{/api,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1a1d3c1a{/jobs/job/kill,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1c65121{/stages/stage/kill,null,AVAILABLE,@Spark}
20/06/29 08:21:56 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hadoop101.yinzhengjie.org.cn:4040
20/06/29 08:21:56 INFO spark.SparkContext: Added JAR file:/yinzhengjie/softwares/spark/examples/jars/spark-examples_2.11-2.4.6.jar at spark://hadoop101.yinzhengjie.org.cn:26581/jars/spark-examples_2.11-2.4.6.jar with timestamp 1593390116380
20/06/29 08:22:00 INFO yarn.Client: Requesting a new application from cluster with 6 NodeManagers
20/06/29 08:22:00 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
20/06/29 08:22:00 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead
20/06/29 08:22:00 INFO yarn.Client: Setting up container launch context for our AM
20/06/29 08:22:00 INFO yarn.Client: Setting up the launch environment for our AM container
20/06/29 08:22:00 INFO yarn.Client: Preparing resources for our AM container
20/06/29 08:22:00 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
20/06/29 08:22:14 INFO yarn.Client: Uploading resource file:/tmp/spark-b43407bd-c999-4a6c-86f2-3d66316d0190/__spark_libs__4287260190968696284.zip -> hdfs://yinzhengjie-hdfs-ha/user/root/.sparkStaging/application_1593389957653_0001/__spark_libs__4287260190968696284.zip
20/06/29 08:22:50 INFO yarn.Client: Uploading resource file:/tmp/spark-b43407bd-c999-4a6c-86f2-3d66316d0190/__spark_conf__2844283093964797340.zip -> hdfs://yinzhengjie-hdfs-ha/user/root/.sparkStaging/application_1593389957653_0001/__spark_conf__.zip
20/06/29 08:22:50 INFO spark.SecurityManager: Changing view acls to: root
20/06/29 08:22:50 INFO spark.SecurityManager: Changing modify acls to: root
20/06/29 08:22:50 INFO spark.SecurityManager: Changing view acls groups to:
20/06/29 08:22:50 INFO spark.SecurityManager: Changing modify acls groups to:
20/06/29 08:22:50 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()
20/06/29 08:22:51 INFO yarn.Client: Submitting application application_1593389957653_0001 to ResourceManager
20/06/29 08:22:51 INFO impl.YarnClientImpl: Submitted application application_1593389957653_0001
20/06/29 08:22:51 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1593389957653_0001 and attemptId None
20/06/29 08:22:52 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:52 INFO yarn.Client:
     client token: N/A
     diagnostics: [Mon Jun 29 08:22:51 +0800 2020] Scheduler has assigned a container for AM, waiting for AM container to be launched
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1593390171580
     final status: UNDEFINED
     tracking URL: http://hadoop101.yinzhengjie.org.cn:8088/proxy/application_1593389957653_0001/
     user: root
20/06/29 08:22:53 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:54 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:55 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:56 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:57 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:58 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:22:59 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:00 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:01 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:02 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:03 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:04 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:05 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:06 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:07 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:08 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:09 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:10 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:11 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:12 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:13 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:14 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:15 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:16 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:17 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:18 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:19 INFO yarn.Client: Application report for application_1593389957653_0001 (state: ACCEPTED)
20/06/29 08:23:20 INFO yarn.Client: Application report for application_1593389957653_0001 (state: RUNNING)
20/06/29 08:23:20 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 172.200.4.102
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1593390171580
     final status: UNDEFINED
     tracking URL: http://hadoop101.yinzhengjie.org.cn:8088/proxy/application_1593389957653_0001/
     user: root
20/06/29 08:23:20 INFO cluster.YarnClientSchedulerBackend: Application application_1593389957653_0001 has started running.
20/06/29 08:23:20 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 23197.
20/06/29 08:23:20 INFO netty.NettyBlockTransferService: Server created on hadoop101.yinzhengjie.org.cn:23197
20/06/29 08:23:20 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
20/06/29 08:23:20 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 23197, None)
20/06/29 08:23:20 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop101.yinzhengjie.org.cn:23197 with 366.3 MB RAM, BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 23197, None)
20/06/29 08:23:20 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 23197, None)
20/06/29 08:23:20 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hadoop101.yinzhengjie.org.cn, 23197, None)
20/06/29 08:23:21 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5438fa43{/metrics/json,null,AVAILABLE,@Spark}
20/06/29 08:23:21 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> hadoop102.yinzhengjie.org.cn, PROXY_URI_BASES -> http://hadoop102.yinzhengjie.org.cn:8088/proxy/application_1593389957653_0001, RM_HA_URLS -> null,null), /proxy/application_1593389957653_0001
20/06/29 08:23:21 INFO cluster.YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)
20/06/29 08:23:21 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark-client://YarnAM)
20/06/29 08:23:21 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 50 output partitions
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Parents of final stage: List()
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Missing parents: List()
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents
20/06/29 08:23:21 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.0 KB, free 366.3 MB)
20/06/29 08:23:21 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1381.0 B, free 366.3 MB)
20/06/29 08:23:21 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop101.yinzhengjie.org.cn:23197 (size: 1381.0 B, free: 366.3 MB)
20/06/29 08:23:21 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1163
20/06/29 08:23:21 INFO scheduler.DAGScheduler: Submitting 50 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))
20/06/29 08:23:21 INFO cluster.YarnScheduler: Adding task set 0.0 with 50 tasks
20/06/29 08:23:35 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.200.4.102:12103) with ID 2
20/06/29 08:23:35 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hadoop102.yinzhengjie.org.cn, executor 2, partition 0, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:36 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop102.yinzhengjie.org.cn:26493 with 366.3 MB RAM, BlockManagerId(2, hadoop102.yinzhengjie.org.cn, 26493, None)
20/06/29 08:23:44 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop102.yinzhengjie.org.cn:26493 (size: 1381.0 B, free: 366.3 MB)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, hadoop102.yinzhengjie.org.cn, executor 2, partition 1, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 8994 ms on hadoop102.yinzhengjie.org.cn (executor 2) (1/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, hadoop102.yinzhengjie.org.cn, executor 2, partition 2, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 40 ms on hadoop102.yinzhengjie.org.cn (executor 2) (2/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, hadoop102.yinzhengjie.org.cn, executor 2, partition 3, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 38 ms on hadoop102.yinzhengjie.org.cn (executor 2) (3/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, hadoop102.yinzhengjie.org.cn, executor 2, partition 4, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 69 ms on hadoop102.yinzhengjie.org.cn (executor 2) (4/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, hadoop102.yinzhengjie.org.cn, executor 2, partition 5, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 34 ms on hadoop102.yinzhengjie.org.cn (executor 2) (5/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, hadoop102.yinzhengjie.org.cn, executor 2, partition 6, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 60 ms on hadoop102.yinzhengjie.org.cn (executor 2) (6/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, hadoop102.yinzhengjie.org.cn, executor 2, partition 7, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 218 ms on hadoop102.yinzhengjie.org.cn (executor 2) (7/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, hadoop102.yinzhengjie.org.cn, executor 2, partition 8, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 69 ms on hadoop102.yinzhengjie.org.cn (executor 2) (8/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, hadoop102.yinzhengjie.org.cn, executor 2, partition 9, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 88 ms on hadoop102.yinzhengjie.org.cn (executor 2) (9/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 10.0 in stage 0.0 (TID 10, hadoop102.yinzhengjie.org.cn, executor 2, partition 10, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 35 ms on hadoop102.yinzhengjie.org.cn (executor 2) (10/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 11.0 in stage 0.0 (TID 11, hadoop102.yinzhengjie.org.cn, executor 2, partition 11, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 10.0 in stage 0.0 (TID 10) in 41 ms on hadoop102.yinzhengjie.org.cn (executor 2) (11/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 12.0 in stage 0.0 (TID 12, hadoop102.yinzhengjie.org.cn, executor 2, partition 12, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 11.0 in stage 0.0 (TID 11) in 31 ms on hadoop102.yinzhengjie.org.cn (executor 2) (12/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 13.0 in stage 0.0 (TID 13, hadoop102.yinzhengjie.org.cn, executor 2, partition 13, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 12.0 in stage 0.0 (TID 12) in 35 ms on hadoop102.yinzhengjie.org.cn (executor 2) (13/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 14.0 in stage 0.0 (TID 14, hadoop102.yinzhengjie.org.cn, executor 2, partition 14, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 13.0 in stage 0.0 (TID 13) in 29 ms on hadoop102.yinzhengjie.org.cn (executor 2) (14/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Starting task 15.0 in stage 0.0 (TID 15, hadoop102.yinzhengjie.org.cn, executor 2, partition 15, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 14.0 in stage 0.0 (TID 14) in 33 ms on hadoop102.yinzhengjie.org.cn (executor 2) (15/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 16.0 in stage 0.0 (TID 16, hadoop102.yinzhengjie.org.cn, executor 2, partition 16, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 15.0 in stage 0.0 (TID 15) in 23 ms on hadoop102.yinzhengjie.org.cn (executor 2) (16/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 17.0 in stage 0.0 (TID 17, hadoop102.yinzhengjie.org.cn, executor 2, partition 17, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 16.0 in stage 0.0 (TID 16) in 20 ms on hadoop102.yinzhengjie.org.cn (executor 2) (17/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 18.0 in stage 0.0 (TID 18, hadoop102.yinzhengjie.org.cn, executor 2, partition 18, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 17.0 in stage 0.0 (TID 17) in 21 ms on hadoop102.yinzhengjie.org.cn (executor 2) (18/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 19.0 in stage 0.0 (TID 19, hadoop102.yinzhengjie.org.cn, executor 2, partition 19, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 18.0 in stage 0.0 (TID 18) in 28 ms on hadoop102.yinzhengjie.org.cn (executor 2) (19/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 20.0 in stage 0.0 (TID 20, hadoop102.yinzhengjie.org.cn, executor 2, partition 20, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 19.0 in stage 0.0 (TID 19) in 22 ms on hadoop102.yinzhengjie.org.cn (executor 2) (20/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 21.0 in stage 0.0 (TID 21, hadoop102.yinzhengjie.org.cn, executor 2, partition 21, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 20.0 in stage 0.0 (TID 20) in 22 ms on hadoop102.yinzhengjie.org.cn (executor 2) (21/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 22.0 in stage 0.0 (TID 22, hadoop102.yinzhengjie.org.cn, executor 2, partition 22, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 21.0 in stage 0.0 (TID 21) in 22 ms on hadoop102.yinzhengjie.org.cn (executor 2) (22/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 23.0 in stage 0.0 (TID 23, hadoop102.yinzhengjie.org.cn, executor 2, partition 23, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 22.0 in stage 0.0 (TID 22) in 42 ms on hadoop102.yinzhengjie.org.cn (executor 2) (23/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 24.0 in stage 0.0 (TID 24, hadoop102.yinzhengjie.org.cn, executor 2, partition 24, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 23.0 in stage 0.0 (TID 23) in 26 ms on hadoop102.yinzhengjie.org.cn (executor 2) (24/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 25.0 in stage 0.0 (TID 25, hadoop102.yinzhengjie.org.cn, executor 2, partition 25, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 24.0 in stage 0.0 (TID 24) in 27 ms on hadoop102.yinzhengjie.org.cn (executor 2) (25/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 26.0 in stage 0.0 (TID 26, hadoop102.yinzhengjie.org.cn, executor 2, partition 26, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 25.0 in stage 0.0 (TID 25) in 30 ms on hadoop102.yinzhengjie.org.cn (executor 2) (26/50)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Starting task 27.0 in stage 0.0 (TID 27, hadoop102.yinzhengjie.org.cn, executor 2, partition 27, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:45 INFO scheduler.TaskSetManager: Finished task 26.0 in stage 0.0 (TID 26) in 21 ms on hadoop102.yinzhengjie.org.cn (executor 2) (27/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 28.0 in stage 0.0 (TID 28, hadoop102.yinzhengjie.org.cn, executor 2, partition 28, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 27.0 in stage 0.0 (TID 27) in 1169 ms on hadoop102.yinzhengjie.org.cn (executor 2) (28/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 29.0 in stage 0.0 (TID 29, hadoop102.yinzhengjie.org.cn, executor 2, partition 29, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 28.0 in stage 0.0 (TID 28) in 17 ms on hadoop102.yinzhengjie.org.cn (executor 2) (29/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 30.0 in stage 0.0 (TID 30, hadoop102.yinzhengjie.org.cn, executor 2, partition 30, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 29.0 in stage 0.0 (TID 29) in 16 ms on hadoop102.yinzhengjie.org.cn (executor 2) (30/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 31.0 in stage 0.0 (TID 31, hadoop102.yinzhengjie.org.cn, executor 2, partition 31, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 30.0 in stage 0.0 (TID 30) in 16 ms on hadoop102.yinzhengjie.org.cn (executor 2) (31/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 32.0 in stage 0.0 (TID 32, hadoop102.yinzhengjie.org.cn, executor 2, partition 32, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 31.0 in stage 0.0 (TID 31) in 21 ms on hadoop102.yinzhengjie.org.cn (executor 2) (32/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 33.0 in stage 0.0 (TID 33, hadoop102.yinzhengjie.org.cn, executor 2, partition 33, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 32.0 in stage 0.0 (TID 32) in 17 ms on hadoop102.yinzhengjie.org.cn (executor 2) (33/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 34.0 in stage 0.0 (TID 34, hadoop102.yinzhengjie.org.cn, executor 2, partition 34, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 33.0 in stage 0.0 (TID 33) in 17 ms on hadoop102.yinzhengjie.org.cn (executor 2) (34/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 35.0 in stage 0.0 (TID 35, hadoop102.yinzhengjie.org.cn, executor 2, partition 35, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 34.0 in stage 0.0 (TID 34) in 18 ms on hadoop102.yinzhengjie.org.cn (executor 2) (35/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 36.0 in stage 0.0 (TID 36, hadoop102.yinzhengjie.org.cn, executor 2, partition 36, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 35.0 in stage 0.0 (TID 35) in 18 ms on hadoop102.yinzhengjie.org.cn (executor 2) (36/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 37.0 in stage 0.0 (TID 37, hadoop102.yinzhengjie.org.cn, executor 2, partition 37, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 36.0 in stage 0.0 (TID 36) in 28 ms on hadoop102.yinzhengjie.org.cn (executor 2) (37/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 38.0 in stage 0.0 (TID 38, hadoop102.yinzhengjie.org.cn, executor 2, partition 38, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 37.0 in stage 0.0 (TID 37) in 16 ms on hadoop102.yinzhengjie.org.cn (executor 2) (38/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 39.0 in stage 0.0 (TID 39, hadoop102.yinzhengjie.org.cn, executor 2, partition 39, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 38.0 in stage 0.0 (TID 38) in 17 ms on hadoop102.yinzhengjie.org.cn (executor 2) (39/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 40.0 in stage 0.0 (TID 40, hadoop102.yinzhengjie.org.cn, executor 2, partition 40, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 39.0 in stage 0.0 (TID 39) in 18 ms on hadoop102.yinzhengjie.org.cn (executor 2) (40/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 41.0 in stage 0.0 (TID 41, hadoop102.yinzhengjie.org.cn, executor 2, partition 41, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 40.0 in stage 0.0 (TID 40) in 20 ms on hadoop102.yinzhengjie.org.cn (executor 2) (41/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 42.0 in stage 0.0 (TID 42, hadoop102.yinzhengjie.org.cn, executor 2, partition 42, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 41.0 in stage 0.0 (TID 41) in 19 ms on hadoop102.yinzhengjie.org.cn (executor 2) (42/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 43.0 in stage 0.0 (TID 43, hadoop102.yinzhengjie.org.cn, executor 2, partition 43, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 42.0 in stage 0.0 (TID 42) in 19 ms on hadoop102.yinzhengjie.org.cn (executor 2) (43/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 44.0 in stage 0.0 (TID 44, hadoop102.yinzhengjie.org.cn, executor 2, partition 44, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 43.0 in stage 0.0 (TID 43) in 17 ms on hadoop102.yinzhengjie.org.cn (executor 2) (44/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 45.0 in stage 0.0 (TID 45, hadoop102.yinzhengjie.org.cn, executor 2, partition 45, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 44.0 in stage 0.0 (TID 44) in 22 ms on hadoop102.yinzhengjie.org.cn (executor 2) (45/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 46.0 in stage 0.0 (TID 46, hadoop102.yinzhengjie.org.cn, executor 2, partition 46, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 45.0 in stage 0.0 (TID 45) in 26 ms on hadoop102.yinzhengjie.org.cn (executor 2) (46/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 47.0 in stage 0.0 (TID 47, hadoop102.yinzhengjie.org.cn, executor 2, partition 47, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 46.0 in stage 0.0 (TID 46) in 19 ms on hadoop102.yinzhengjie.org.cn (executor 2) (47/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 48.0 in stage 0.0 (TID 48, hadoop102.yinzhengjie.org.cn, executor 2, partition 48, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 47.0 in stage 0.0 (TID 47) in 20 ms on hadoop102.yinzhengjie.org.cn (executor 2) (48/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Starting task 49.0 in stage 0.0 (TID 49, hadoop102.yinzhengjie.org.cn, executor 2, partition 49, PROCESS_LOCAL, 7877 bytes)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 48.0 in stage 0.0 (TID 48) in 24 ms on hadoop102.yinzhengjie.org.cn (executor 2) (49/50)
20/06/29 08:23:46 INFO scheduler.TaskSetManager: Finished task 49.0 in stage 0.0 (TID 49) in 21 ms on hadoop102.yinzhengjie.org.cn (executor 2) (50/50)
20/06/29 08:23:46 INFO cluster.YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool
20/06/29 08:23:46 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 25.321 s
20/06/29 08:23:46 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 25.380078 s
Pi is roughly 3.1410094282018854
20/06/29 08:23:46 INFO server.AbstractConnector: Stopped Spark@4acf72b6{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}
20/06/29 08:23:46 INFO ui.SparkUI: Stopped Spark web UI at http://hadoop101.yinzhengjie.org.cn:4040
20/06/29 08:23:46 INFO cluster.YarnClientSchedulerBackend: Interrupting monitor thread
20/06/29 08:23:46 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors
20/06/29 08:23:46 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down
20/06/29 08:23:46 INFO cluster.SchedulerExtensionServices: Stopping SchedulerExtensionServices
(serviceOption=None,
 services=List(),
 started=false)
20/06/29 08:23:46 INFO cluster.YarnClientSchedulerBackend: Stopped
20/06/29 08:23:46 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
20/06/29 08:23:46 INFO memory.MemoryStore: MemoryStore cleared
20/06/29 08:23:46 INFO storage.BlockManager: BlockManager stopped
20/06/29 08:23:46 INFO storage.BlockManagerMaster: BlockManagerMaster stopped
20/06/29 08:23:46 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
20/06/29 08:23:46 INFO spark.SparkContext: Successfully stopped SparkContext
20/06/29 08:23:46 INFO util.ShutdownHookManager: Shutdown hook called
20/06/29 08:23:46 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-e0a466c9-47e2-4f59-900c-991e71b66c61
20/06/29 08:23:46 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-b43407bd-c999-4a6c-86f2-3d66316d0190
[root@hadoop101.yinzhengjie.org.cn ~]#
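
The driver log above is long, so it can help to condense the "Finished task ... in N ms" entries into one summary line. The following is only a sketch: the function name `summarize_tasks` is hypothetical, and it assumes the driver output has been captured as text and is piped in on stdin. The two sample entries in the demo are copied from the log above.

```shell
#!/usr/bin/env bash
# summarize_tasks (hypothetical helper): read Spark driver log text on stdin
# and report how many tasks finished, their summed duration, and the slowest one.
summarize_tasks() {
  awk '
    / Finished task / {
      # Scan every field so that several entries on one physical line still count.
      for (i = 1; i < NF; i++) {
        if ($i == "in" && $(i + 2) == "ms") {
          ms = $(i + 1) + 0
          n++
          total += ms
          if (ms > max) max = ms
        }
      }
    }
    END { printf "tasks=%d total_ms=%d max_ms=%d\n", n, total, max }
  '
}

# Demo with two entries taken from the log above.
summarize_tasks <<'EOF'
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 8994 ms on hadoop102.yinzhengjie.org.cn (executor 2) (1/50)
20/06/29 08:23:44 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 40 ms on hadoop102.yinzhengjie.org.cn (executor 2) (2/50)
EOF
```

In this run the first task took 8994 ms while most later tasks took a few tens of milliseconds, so a summary like this makes the one-off startup cost on the executor easy to spot.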

3>.Hands-on example with the interactive shell

[root@hadoop101.yinzhengjie.org.cn ~]# ll /tmp/data/
total 8
-rw-r--r-- 1 root root 46 Jun 28 03:14 1.txt
-rw-r--r-- 1 root root 60 Jun 28 03:14 2.txt
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# cat /tmp/data/1.txt
hello java java python kafka shell spark java
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# cat /tmp/data/2.txt
hello golang bigdata shell java python world java spark c++
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 5 items
drwxr-xr-x   - root supergroup          0 2020-05-30 11:57 /hbase
drwxr-xr-x   - root supergroup          0 2020-05-29 18:55 /input_fruit
drwx------   - root supergroup          0 2020-05-29 18:32 /tmp
drwxr-xr-x   - root supergroup          0 2020-06-29 08:22 /user
drwxr-xr-x   - root supergroup          0 2020-05-30 04:46 /yinzhengjie
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp
Found 2 items
drwx------   - root supergroup          0 2020-05-29 18:29 /tmp/hadoop-yarn
drwxrwxrwt   - root root                0 2020-05-29 18:32 /tmp/logs
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -put /tmp/data/ /tmp/
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp
Found 3 items
drwxr-xr-x   - root supergroup          0 2020-06-29 08:43 /tmp/data
drwx------   - root supergroup          0 2020-05-29 18:29 /tmp/hadoop-yarn
drwxrwxrwt   - root root                0 2020-05-29 18:32 /tmp/logs
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/data/
Found 2 items
-rw-r--r--   3 root supergroup         46 2020-06-29 08:43 /tmp/data/1.txt
-rw-r--r--   3 root supergroup         60 2020-06-29 08:43 /tmp/data/2.txt
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# spark-shell --master yarn
20/06/29 08:39:01 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
20/06/29 08:39:18 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
Spark context Web UI available at http://hadoop101.yinzhengjie.org.cn:4040
Spark context available as 'sc' (master = yarn, app id = application_1593389957653_0002).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.6
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_201)
Type in expressions to have them evaluated.
Type :help for more information.

scala> 
scala> sc.textFile("/tmp/data/").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect
res1: Array[(String, Int)] = Array((kafka,1), (world,1), (python,2), (golang,1), (hello,2), (java,5), (spark,2), (c++,1), (bigdata,1), (shell,2))

scala>
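
As a sanity check on the word count returned by spark-shell above, the same tally can be reproduced on the local copies of the files with standard Unix tools. This is a minimal sketch, not part of the original walkthrough: the function name `local_wordcount` is hypothetical, and the demo recreates the two sample files in a temporary directory instead of reading /tmp/data. Unlike `split(" ")` in the Spark job, `tr -s` collapses runs of spaces, which makes no difference for this sample data.

```shell
#!/usr/bin/env bash
# local_wordcount (hypothetical helper): count space-separated words across the
# given local files and print one "word count" pair per line.
local_wordcount() {
  cat "$@" \
    | tr -s ' ' '\n' \
    | grep -v '^$' \
    | sort \
    | uniq -c \
    | awk '{ print $2, $1 }'
}

# Demo: recreate the contents of 1.txt and 2.txt shown earlier in a temp directory.
demo_dir=$(mktemp -d)
printf 'hello java java python kafka shell spark java\n' > "$demo_dir/1.txt"
printf 'hello golang bigdata shell java python world java spark c++\n' > "$demo_dir/2.txt"
local_wordcount "$demo_dir"/1.txt "$demo_dir"/2.txt
rm -rf "$demo_dir"
```

The pairs should agree with the `res1` array from spark-shell, for example `java 5` and `hello 2`. Only the ordering differs, since `collect` returns the pairs in no particular order.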

III.Configure Spark logging

1>.Modify Spark's default configuration file

[root@hadoop101.yinzhengjie.org.cn ~]# cp /yinzhengjie/softwares/spark/conf/spark-defaults.conf.template /yinzhengjie/softwares/spark/conf/spark-defaults.conf
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/spark/conf/spark-defaults.conf
[root@hadoop101.yinzhengjie.org.cn ~]# 
[root@hadoop101.yinzhengjie.org.cn ~]# egrep -v "^*#|^$" /yinzhengjie/softwares/spark/conf/spark-defaults.conf
spark.yarn.historyServer.address=hadoop101.yinzhengjie.org.cn:18080
spark.history.ui.port=18080
[root@hadoop101.yinzhengjie.org.cn ~]#
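
The `egrep -v "^*#|^$"` filter used above depends on how the regex engine treats a `*` placed directly after `^`, which POSIX leaves undefined for extended regular expressions. Recent GNU grep releases also mark `egrep` as obsolescent in favor of `grep -E`. A more explicit variant might look like the sketch below. The function name `effective_conf` is hypothetical, and the demo writes a small temporary file rather than touching the real spark-defaults.conf.

```shell
#!/usr/bin/env bash
# effective_conf (hypothetical helper): print only the active settings of a
# properties-style file, dropping blank lines and lines whose first
# non-blank character is '#'.
effective_conf() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Demo on a throwaway file that mimics the shape of spark-defaults.conf.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
# Default system properties included when running spark-submit.
   # an indented comment

spark.yarn.historyServer.address=hadoop101.yinzhengjie.org.cn:18080
spark.history.ui.port=18080
EOF
effective_conf "$demo_conf"
rm -f "$demo_conf"
```

On the real file this would be called as `effective_conf /yinzhengjie/softwares/spark/conf/spark-defaults.conf`, and it should list the same two settings shown in the transcript.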

2>.

3>.