Apache Hadoop: Configuring the History Server, a Hands-On Walkthrough

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted; violations will be pursued legally.

I. Setting up a fully distributed cluster

Recommended reading from the author: https://www.cnblogs.com/yinzhengjie2020/p/12424192.html

II. Configuring the history server: worked example

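1>. What the history server is for

The ResourceManager WebUI shows how a job ran, but that record does not survive a restart: once the YARN service is restarted, the earlier job history is cleared. The history server keeps completed-job records in HDFS and serves them from its own WebUI, independently of the ResourceManager.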

2>. Edit the configuration file and distribute it to all nodes

[root@hadoop101.yinzhengjie.org.cn ~]# echo ${HADOOP_HOME}
/yinzhengjie/softwares/hadoop-2.10.0
[root@hadoop101.yinzhengjie.org.cn ~]# vim ${HADOOP_HOME}/etc/hadoop/mapred-site.xml
[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/etc/hadoop/mapred-site.xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at
    http://www.apache.org/licenses/LICENSE-2.0
  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Run MapReduce on YARN</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop106.yinzhengjie.org.cn:10020</value>
        <description>JobHistory server address; the default port is 10020</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop106.yinzhengjie.org.cn:19888</value>
        <description>JobHistory server WebUI address; the default port is 19888</description>
    </property>
</configuration>
[root@hadoop101.yinzhengjie.org.cn ~]#
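Before distributing the file, it can help to read a property back out of it and confirm the value is what you intended. The helper below is only a sketch: `get_prop` is a hypothetical name, not a Hadoop tool, and it assumes every `<name>` and `<value>` element sits on its own line, as in the file above.

```shell
#!/usr/bin/env bash
# get_prop FILE NAME
# Print the <value> that follows <name>NAME</name> in a Hadoop *-site.xml file.
# Hypothetical helper; assumes one <name> or <value> element per line.
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$1"
}
```

For example, `get_prop "${HADOOP_HOME}/etc/hadoop/mapred-site.xml" mapreduce.jobhistory.webapp.address` should print the host and port configured above. An unknown property name prints nothing.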

[root@hadoop101.yinzhengjie.org.cn ~]# rsync-hadoop.sh /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml
******* [hadoop102.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml] *******
命令执行成功
******* [hadoop103.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml] *******
命令执行成功
******* [hadoop104.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml] *******
命令执行成功
******* [hadoop105.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml] *******
命令执行成功
******* [hadoop106.yinzhengjie.org.cn] node starts synchronizing [/yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml] *******
命令执行成功
[root@hadoop101.yinzhengjie.org.cn ~]#
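The contents of `rsync-hadoop.sh` are not shown in this post. As a rough idea of what such a distribution script might do, here is a hypothetical stand-in: `sync_file` and the `DRY_RUN` switch are my own names, and the real script may well work differently. It copies one file to the same absolute path on each listed host, and by default only prints the `rsync` commands instead of running them.

```shell
#!/usr/bin/env bash
# sync_file FILE HOST...
# Hypothetical stand-in for rsync-hadoop.sh (the original script is not shown).
# Copies FILE to the same absolute path on every HOST as root.
# DRY_RUN defaults to 1, in which case the rsync commands are only printed.
sync_file() {
  file=$1
  shift
  for host in "$@"; do
    echo "******* [${host}] node starts synchronizing [${file}] *******"
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "rsync -a ${file} root@${host}:${file}"
    else
      rsync -a "${file}" "root@${host}:${file}"
    fi
  done
}
```

Running it with `DRY_RUN=0` would perform the copy for real, which assumes passwordless SSH from the sending node to each target.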

3>. Start the history service

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8772 Jps
8456 SecondaryNameNode
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8182 DataNode
8648 NodeManager
8844 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8944 Jps
8737 NodeManager
8198 DataNode
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8245 DataNode
9141 Jps
8935 NodeManager
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
14834 Jps
13214 NameNode
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
12806 Jps
12427 ResourceManager
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible rm -m shell -a 'mr-jobhistory-daemon.sh start historyserver'
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
starting historyserver, logging to /yinzhengjie/softwares/hadoop-2.10.0/logs/mapred-root-historyserver-hadoop106.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
14994 Jps
13214 NameNode
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9201 Jps
8245 DataNode
8935 NodeManager
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8835 Jps
8456 SecondaryNameNode
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8737 NodeManager
8198 DataNode
9003 Jps
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8182 DataNode
8648 NodeManager
8904 Jps
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
12427 ResourceManager
12893 JobHistoryServer
13006 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#
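Scanning `jps` listings by eye gets tedious once there are several nodes. A small filter like the one below could turn the check into an exit status. It is a sketch only; `has_daemon` is a hypothetical name and it assumes the usual two-column `jps` output (PID, then class name).

```shell
#!/usr/bin/env bash
# has_daemon NAME
# Reads a jps listing on stdin and exits 0 if some line's second column
# equals NAME, 1 otherwise. Hypothetical helper, not part of Hadoop.
has_daemon() {
  awk -v d="$1" '$2 == d { found = 1 } END { exit !found }'
}
```

On the history server node, `jps | has_daemon JobHistoryServer && echo "history server is up"` would then print the message only while the daemon is running.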

4>. Run a MapReduce test job

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -mkdir /inputDir
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 2 items
drwxr-xr-x - root supergroup 0 2020-03-12 16:18 /inputDir
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# vim wc.txt
[root@hadoop101.yinzhengjie.org.cn ~]# cat wc.txt
yinzhengjie 18 bigdata bigdata java python java golang java
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /inputDir
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -put wc.txt /inputDir
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /inputDir
Found 1 items
-rw-r--r-- 3 root supergroup 60 2020-03-12 16:20 /inputDir/wc.txt
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /inputDir
Found 1 items
-rw-r--r-- 3 root supergroup 60 2020-03-12 16:20 /inputDir/wc.txt
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 2 items
drwxr-xr-x - root supergroup 0 2020-03-12 16:20 /inputDir
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
[root@hadoop101.yinzhengjie.org.cn ~]# hadoop jar ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar wordcount /inputDir /outputDir
20/03/12 16:22:48 INFO client.RMProxy: Connecting to ResourceManager at hadoop106.yinzhengjie.org.cn/172.200.4.106:8032
20/03/12 16:22:49 INFO input.FileInputFormat: Total input files to process : 1
20/03/12 16:22:49 INFO mapreduce.JobSubmitter: number of splits:1
20/03/12 16:22:49 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled
20/03/12 16:22:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1583999614207_0001
20/03/12 16:22:49 INFO conf.Configuration: resource-types.xml not found
20/03/12 16:22:49 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
20/03/12 16:22:49 INFO resource.ResourceUtils: Adding resource type - name = memory-mb, units = Mi, type = COUNTABLE
20/03/12 16:22:49 INFO resource.ResourceUtils: Adding resource type - name = vcores, units = , type = COUNTABLE
20/03/12 16:22:50 INFO impl.YarnClientImpl: Submitted application application_1583999614207_0001
20/03/12 16:22:50 INFO mapreduce.Job: The url to track the job: http://hadoop106.yinzhengjie.org.cn:8088/proxy/application_1583999614207_0001/
20/03/12 16:22:50 INFO mapreduce.Job: Running job: job_1583999614207_0001
20/03/12 16:22:57 INFO mapreduce.Job: Job job_1583999614207_0001 running in uber mode : false
20/03/12 16:22:57 INFO mapreduce.Job: map 0% reduce 0%
20/03/12 16:23:04 INFO mapreduce.Job: map 100% reduce 0%
20/03/12 16:23:11 INFO mapreduce.Job: map 100% reduce 100%
20/03/12 16:23:11 INFO mapreduce.Job: Job job_1583999614207_0001 completed successfully
20/03/12 16:23:11 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=84
        FILE: Number of bytes written=411203
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=181
        HDFS: Number of bytes written=54
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=3817
        Total time spent by all reduces in occupied slots (ms)=4790
        Total time spent by all map tasks (ms)=3817
        Total time spent by all reduce tasks (ms)=4790
        Total vcore-milliseconds taken by all map tasks=3817
        Total vcore-milliseconds taken by all reduce tasks=4790
        Total megabyte-milliseconds taken by all map tasks=3908608
        Total megabyte-milliseconds taken by all reduce tasks=4904960
    Map-Reduce Framework
        Map input records=3
        Map output records=9
        Map output bytes=96
        Map output materialized bytes=84
        Input split bytes=121
        Combine input records=9
        Combine output records=6
        Reduce input groups=6
        Reduce shuffle bytes=84
        Reduce input records=6
        Reduce output records=6
        Spilled Records=12
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=462
        CPU time spent (ms)=1900
        Physical memory (bytes) snapshot=481079296
        Virtual memory (bytes) snapshot=4315381760
        Total committed heap usage (bytes)=284688384
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=60
    File Output Format Counters
        Bytes Written=54
[root@hadoop101.yinzhengjie.org.cn ~]#

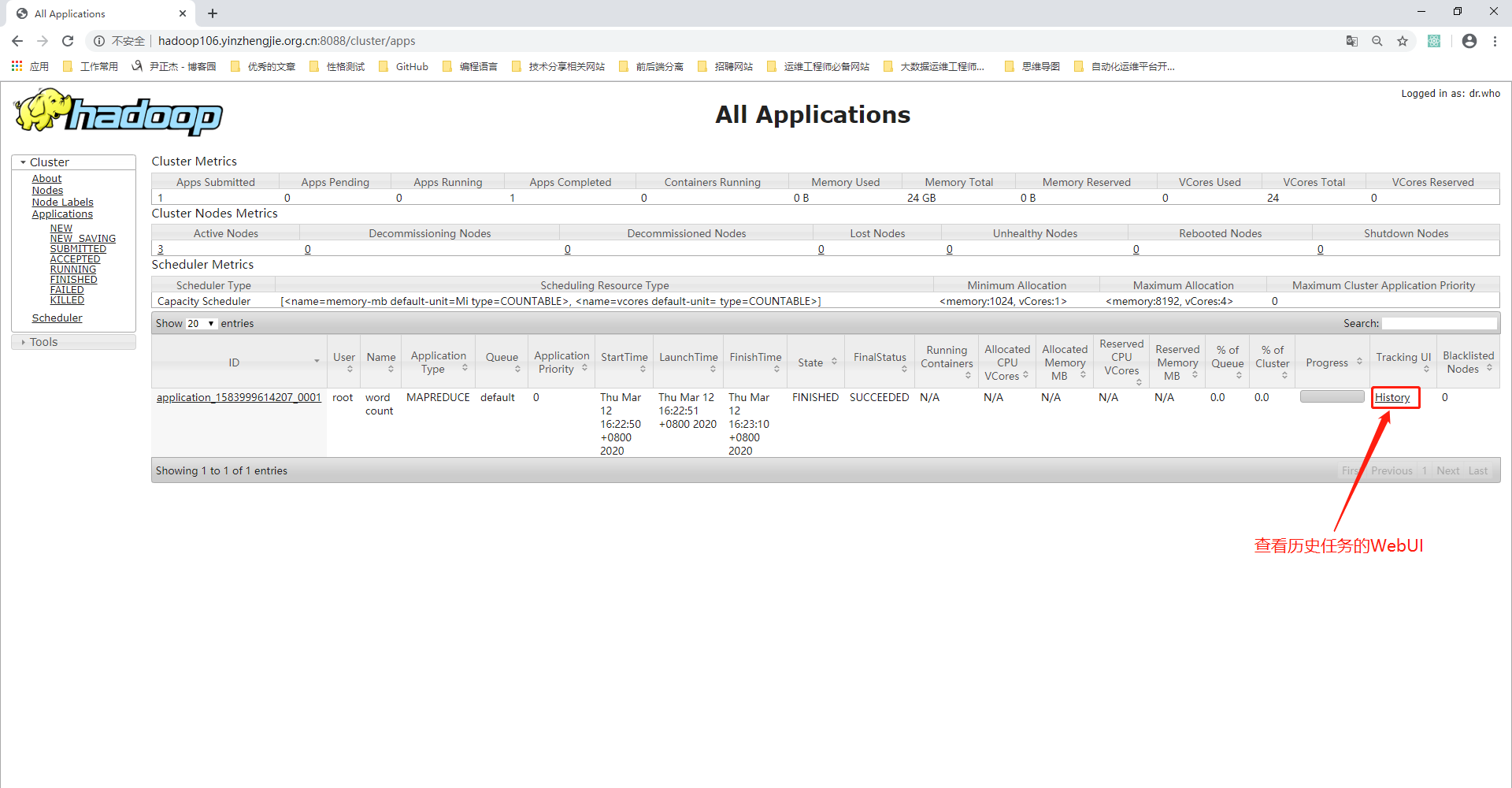

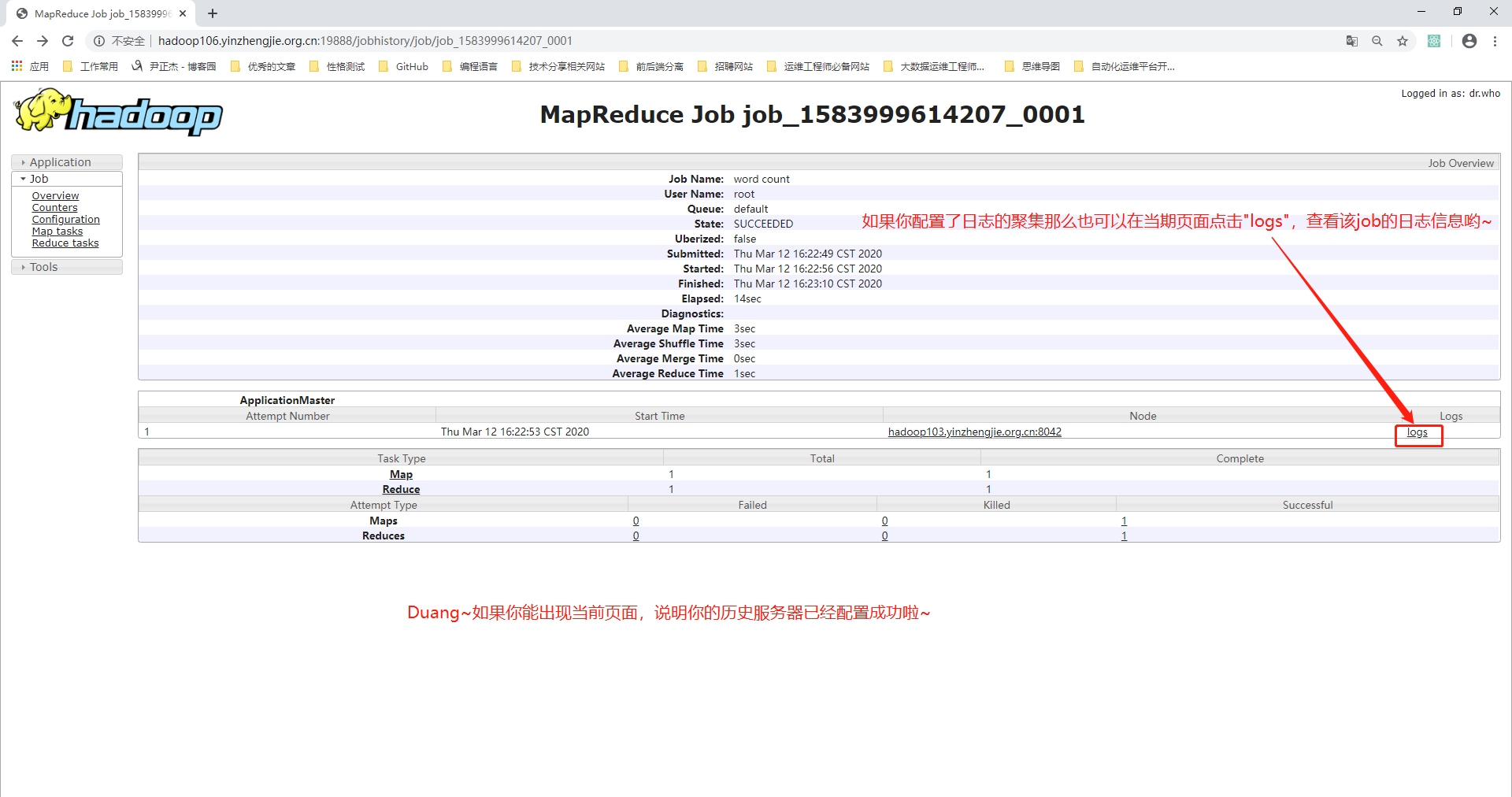

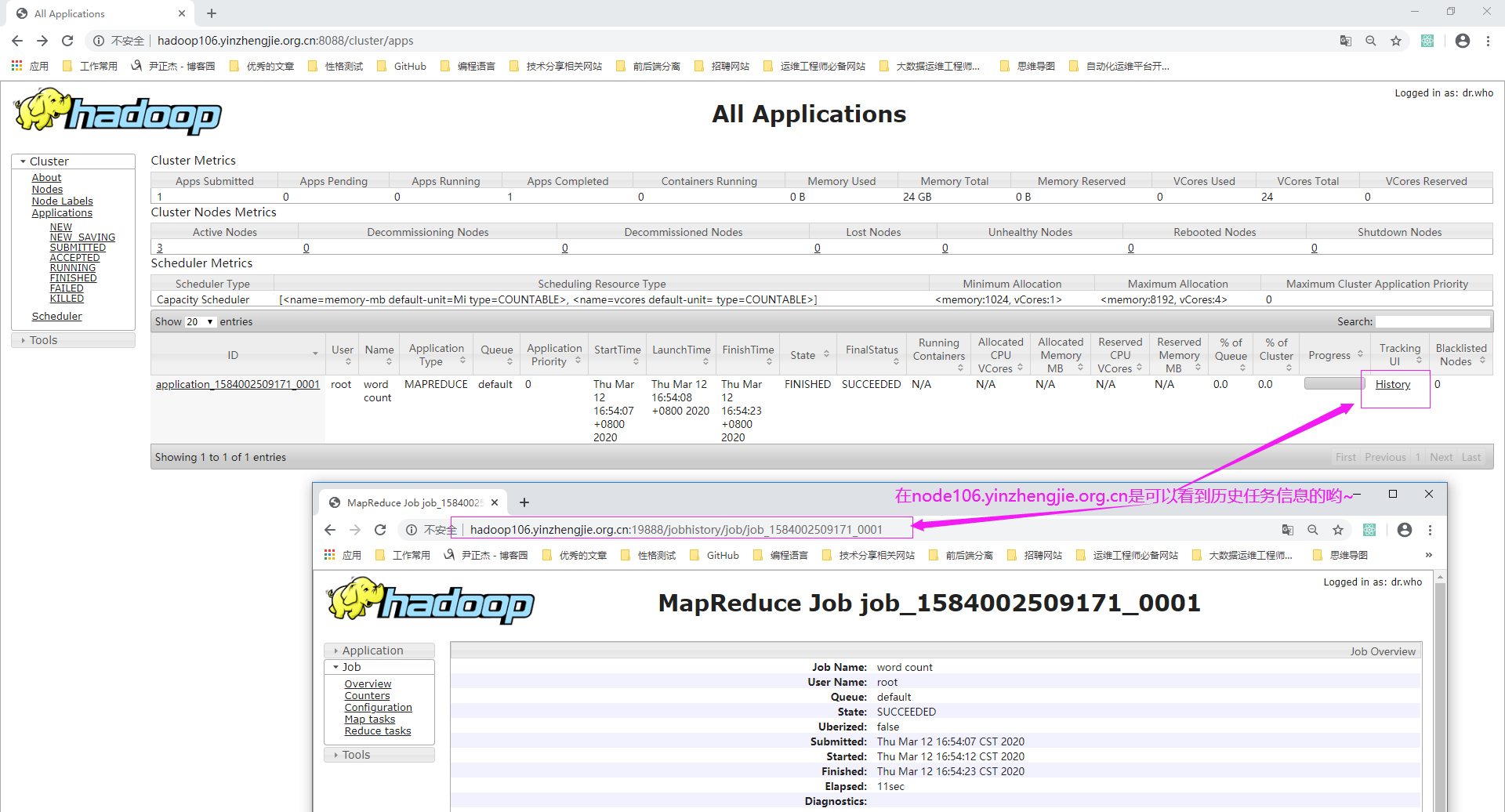

5>. View finished jobs in the history server WebUI

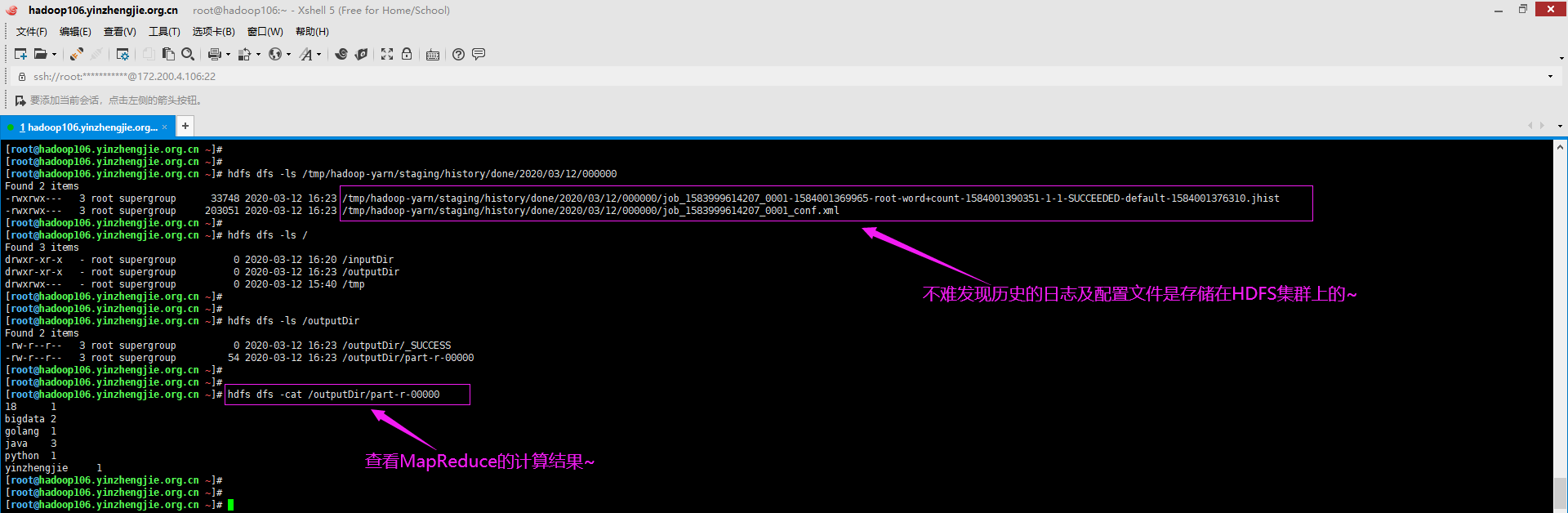
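The same job list shown in the WebUI is also exposed over the history server's REST API, under the path `/ws/v1/history/mapreduce/jobs` on the web port. The snippet below only builds that URL from a `host:port` pair; `history_jobs_url` is a hypothetical helper name, and the address you pass should be the value of `mapreduce.jobhistory.webapp.address`.

```shell
#!/usr/bin/env bash
# history_jobs_url HOST:PORT
# Print the JobHistoryServer REST URL that lists finished MapReduce jobs.
# HOST:PORT is the value of mapreduce.jobhistory.webapp.address.
history_jobs_url() {
  echo "http://$1/ws/v1/history/mapreduce/jobs"
}
```

With the history server running, something like `curl -s -H 'Accept: application/json' "$(history_jobs_url hadoop106.yinzhengjie.org.cn:19888)"` should return the job list as JSON.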

6>. Inspect the MapReduce results

[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp/hadoop-yarn
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:22 /tmp/hadoop-yarn/staging
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging
Found 2 items
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp/hadoop-yarn/staging/history
drwx------ - root supergroup 0 2020-03-12 16:22 /tmp/hadoop-yarn/staging/root
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging/history
Found 2 items
drwxrwx--- - root supergroup 0 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done
drwxrwxrwt - root supergroup 0 2020-03-12 16:22 /tmp/hadoop-yarn/staging/history/done_intermediate
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging/history/done
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done/2020
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging/history/done/2020
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done/2020/03
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging/history/done/2020/03/12
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done/2020/03/12/000000
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /tmp/hadoop-yarn/staging/history/done/2020/03/12/000000
Found 2 items
-rwxrwx--- 3 root supergroup 33748 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done/2020/03/12/000000/job_1583999614207_0001-1584001369965-root-word+count-1584001390351-1-1-SUCCEEDED-default-1584001376310.jhist
-rwxrwx--- 3 root supergroup 203051 2020-03-12 16:23 /tmp/hadoop-yarn/staging/history/done/2020/03/12/000000/job_1583999614207_0001_conf.xml
[root@hadoop106.yinzhengjie.org.cn ~]#
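The `.jhist` file name itself carries a summary of the job. As far as I can tell from the listing above, the dash-separated fields are: job ID, submit time, user, job name, finish time, number of maps, number of reduces, status, queue, and start time, with the times in epoch milliseconds and the space in the job name written as `+`. Treat that field order as my reading rather than a documented contract. Under that assumption, a hypothetical `parse_jhist_name` helper could unpack it like this:

```shell
#!/usr/bin/env bash
# parse_jhist_name PATH
# Split a .jhist file name into its dash-separated fields and print a summary.
# Assumed field order: jobId-submit-user-jobName-finish-maps-reduces-status-queue-start.
# Assumes no field contains a literal "-".
parse_jhist_name() {
  basename "$1" .jhist | awk -F'-' '{
    name = $4
    gsub(/\+/, " ", name)          # "+" stands for a space in the job name
    printf "job_id=%s\nuser=%s\njob_name=%s\nstatus=%s\nqueue=%s\nelapsed_ms=%d\n",
           $1, $3, name, $8, $9, $5 - $10
  }'
}
```

For the file listed above, the elapsed time works out to 1584001390351 - 1584001376310 = 14041 ms, which lines up with the roughly 14 seconds between "running in uber mode" and "completed successfully" in the job log.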

[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 3 items
drwxr-xr-x - root supergroup 0 2020-03-12 16:20 /inputDir
drwxr-xr-x - root supergroup 0 2020-03-12 16:23 /outputDir
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -ls /outputDir
Found 2 items
-rw-r--r-- 3 root supergroup 0 2020-03-12 16:23 /outputDir/_SUCCESS
-rw-r--r-- 3 root supergroup 54 2020-03-12 16:23 /outputDir/part-r-00000
[root@hadoop106.yinzhengjie.org.cn ~]# hdfs dfs -cat /outputDir/part-r-00000
18 1
bigdata 2
golang 1
java 3
python 1
yinzhengjie 1
[root@hadoop106.yinzhengjie.org.cn ~]#
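For an input this small, the result is easy to cross-check locally without the cluster. The pipeline below is a sketch of that check with standard text tools; `local_wordcount` is a hypothetical name, and it is not how the Hadoop example is implemented. It splits on whitespace and sorts in byte order, which is why `18` comes before the lowercase words, as in the job output.

```shell
#!/usr/bin/env bash
# local_wordcount
# Count whitespace-separated words on stdin and print "word<TAB>count",
# sorted by word in byte order. A local cross-check, not the Hadoop job itself.
local_wordcount() {
  tr -s '[:space:]' '\n' | sed '/^$/d' | LC_ALL=C sort | uniq -c | awk '{ print $2 "\t" $1 }'
}
```

Running `local_wordcount < wc.txt` on the node that holds the file should reproduce the six word and count pairs in `part-r-00000`.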

7>. Stop the history service

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8245 DataNode
10345 NodeManager
10879 Jps
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9984 Jps
8456 SecondaryNameNode
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8182 DataNode
10646 Jps
10142 NodeManager
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10194 NodeManager
10562 Jps
8198 DataNode
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
20405 Jps
13214 NameNode
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
15089 JobHistoryServer
15379 Jps
14749 ResourceManager
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible rm -m shell -a 'mr-jobhistory-daemon.sh stop historyserver'
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
stopping historyserver
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8245 DataNode
10345 NodeManager
10938 Jps
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
20637 Jps
13214 NameNode
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10194 NodeManager
8198 DataNode
10620 Jps
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
10704 Jps
8182 DataNode
10142 NodeManager
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8456 SecondaryNameNode
10047 Jps
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
15504 Jps
14749 ResourceManager
[root@hadoop101.yinzhengjie.org.cn ~]#

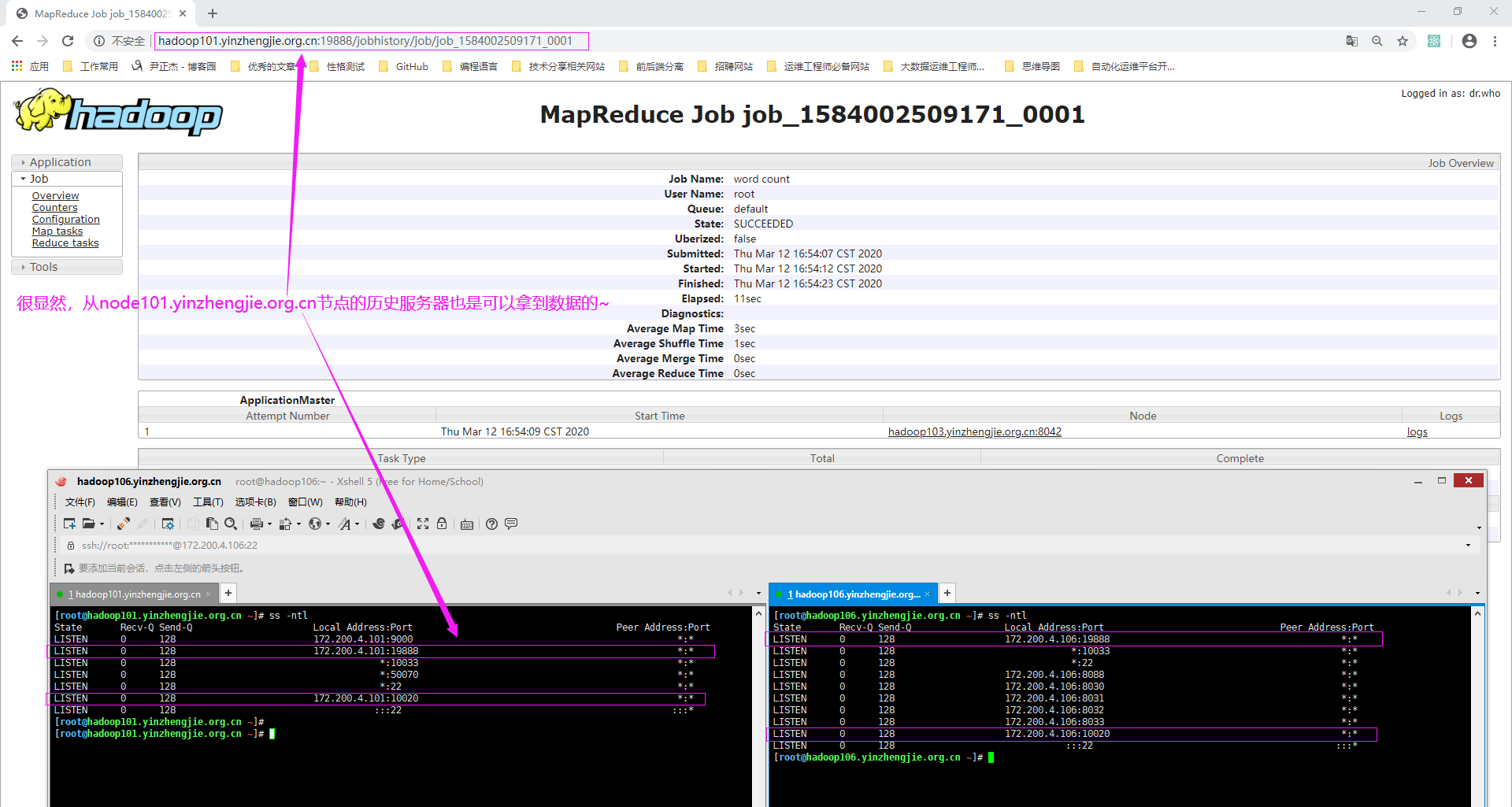

III. Configuring high availability for the history server

1>. Modify the configuration on hadoop101.yinzhengjie.org.cn

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8963 Jps
8456 SecondaryNameNode
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8182 DataNode
8648 NodeManager
9103 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8737 NodeManager
8198 DataNode
9194 Jps
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8245 DataNode
8935 NodeManager
9483 Jps
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
15602 Jps
13214 NameNode
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
12427 ResourceManager
13115 Jps
12893 JobHistoryServer
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/etc/hadoop/mapred-site.xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at
    http://www.apache.org/licenses/LICENSE-2.0
  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Run MapReduce on YARN</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop101.yinzhengjie.org.cn:10020</value>
        <description>JobHistory server address; the default port is 10020</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop101.yinzhengjie.org.cn:19888</value>
        <description>JobHistory server WebUI address; the default port is 19888</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/yinzhengjie/jobhistory/tmp</value>
        <description>HDFS directory where MapReduce jobs write their history files</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/yinzhengjie/jobhistory/manager</value>
        <description>HDFS directory where the MR JobHistory server manages history files</description>
    </property>
</configuration>
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop106.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/etc/hadoop/mapred-site.xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at
    http://www.apache.org/licenses/LICENSE-2.0
  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Run MapReduce on YARN</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop106.yinzhengjie.org.cn:10020</value>
        <description>JobHistory server address; the default port is 10020</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop106.yinzhengjie.org.cn:19888</value>
        <description>JobHistory server WebUI address; the default port is 19888</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/yinzhengjie/jobhistory/tmp</value>
        <description>HDFS directory where MapReduce jobs write their history files</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/yinzhengjie/jobhistory/manager</value>
        <description>HDFS directory where the MR JobHistory server manages history files</description>
    </property>
</configuration>
[root@hadoop106.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# mr-jobhistory-daemon.sh start historyserver
starting historyserver, logging to /yinzhengjie/softwares/hadoop-2.10.0/logs/mapred-root-historyserver-hadoop101.yinzhengjie.org.cn.out
[root@hadoop101.yinzhengjie.org.cn ~]# jps
15664 JobHistoryServer
15734 Jps
13214 NameNode
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ansible all -m shell -a 'jps'
hadoop105.yinzhengjie.org.cn | SUCCESS | rc=0 >>
9029 Jps
8456 SecondaryNameNode
hadoop103.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8245 DataNode
8935 NodeManager
9543 Jps
hadoop104.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8182 DataNode
8648 NodeManager
9165 Jps
hadoop102.yinzhengjie.org.cn | SUCCESS | rc=0 >>
8737 NodeManager
9253 Jps
8198 DataNode
hadoop101.yinzhengjie.org.cn | SUCCESS | rc=0 >>
15664 JobHistoryServer
15862 Jps
13214 NameNode
hadoop106.yinzhengjie.org.cn | SUCCESS | rc=0 >>
12427 ResourceManager
12893 JobHistoryServer
13182 Jps
[root@hadoop101.yinzhengjie.org.cn ~]#

2>. Run the MapReduce job again

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -rm -r /outputDir
[root@hadoop101.yinzhengjie.org.cn ~]# hadoop jar ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar wordcount /inputDir /outputDir
20/03/12 16:54:05 INFO client.RMProxy: Connecting to ResourceManager at hadoop106.yinzhengjie.org.cn/172.200.4.106:8032
20/03/12 16:54:06 INFO input.FileInputFormat: Total input files to process : 1
20/03/12 16:54:06 INFO mapreduce.JobSubmitter: number of splits:1
20/03/12 16:54:06 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled
20/03/12 16:54:06 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1584002509171_0001
20/03/12 16:54:07 INFO conf.Configuration: resource-types.xml not found
20/03/12 16:54:07 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
20/03/12 16:54:07 INFO resource.ResourceUtils: Adding resource type - name = memory-mb, units = Mi, type = COUNTABLE
20/03/12 16:54:07 INFO resource.ResourceUtils: Adding resource type - name = vcores, units = , type = COUNTABLE
20/03/12 16:54:07 INFO impl.YarnClientImpl: Submitted application application_1584002509171_0001
20/03/12 16:54:07 INFO mapreduce.Job: The url to track the job: http://hadoop106.yinzhengjie.org.cn:8088/proxy/application_1584002509171_0001/
20/03/12 16:54:07 INFO mapreduce.Job: Running job: job_1584002509171_0001
20/03/12 16:54:13 INFO mapreduce.Job: Job job_1584002509171_0001 running in uber mode : false
20/03/12 16:54:13 INFO mapreduce.Job: map 0% reduce 0%
20/03/12 16:54:19 INFO mapreduce.Job: map 100% reduce 0%
20/03/12 16:54:24 INFO mapreduce.Job: map 100% reduce 100%
20/03/12 16:54:25 INFO mapreduce.Job: Job job_1584002509171_0001 completed successfully
20/03/12 16:54:25 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=84
        FILE: Number of bytes written=411085
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=181
        HDFS: Number of bytes written=54
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=3060
        Total time spent by all reduces in occupied slots (ms)=2894
        Total time spent by all map tasks (ms)=3060
        Total time spent by all reduce tasks (ms)=2894
        Total vcore-milliseconds taken by all map tasks=3060
        Total vcore-milliseconds taken by all reduce tasks=2894
        Total megabyte-milliseconds taken by all map tasks=3133440
        Total megabyte-milliseconds taken by all reduce tasks=2963456
    Map-Reduce Framework
        Map input records=3
        Map output records=9
        Map output bytes=96
        Map output materialized bytes=84
        Input split bytes=121
        Combine input records=9
        Combine output records=6
        Reduce input groups=6
        Reduce shuffle bytes=84
        Reduce input records=6
        Reduce output records=6
        Spilled Records=12
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=171
        CPU time spent (ms)=1190
        Physical memory (bytes) snapshot=475779072
        Virtual memory (bytes) snapshot=4305944576
        Total committed heap usage (bytes)=292028416
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=60
    File Output Format Counters
        Bytes Written=54
[root@hadoop101.yinzhengjie.org.cn ~]#


[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 4 items
drwxr-xr-x - root supergroup 0 2020-03-12 16:20 /inputDir
drwxr-xr-x - root supergroup 0 2020-03-12 16:54 /outputDir
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
drwxrwx--- - root supergroup 0 2020-03-12 16:51 /yinzhengjie
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:51 /yinzhengjie/jobhistory
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/jobhistory
Found 2 items
drwxrwx--- - root supergroup 0 2020-03-12 16:54 /yinzhengjie/jobhistory/manager
drwxrwxrwt - root supergroup 0 2020-03-12 16:54 /yinzhengjie/jobhistory/tmp
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/jobhistory/manager
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:54 /yinzhengjie/jobhistory/manager/2020
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/jobhistory/manager/2020/03/12
Found 1 items
drwxrwx--- - root supergroup 0 2020-03-12 16:54 /yinzhengjie/jobhistory/manager/2020/03/12/000000
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/jobhistory/manager/2020/03/12/000000
Found 2 items
-rwxrwx--- 3 root supergroup 33731 2020-03-12 16:54 /yinzhengjie/jobhistory/manager/2020/03/12/000000/job_1584002509171_0001-1584003247341-root-word+count-1584003263906-1-1-SUCCEEDED-default-1584003252477.jhist
-rwxrwx--- 3 root supergroup 202992 2020-03-12 16:54 /yinzhengjie/jobhistory/manager/2020/03/12/000000/job_1584002509171_0001_conf.xml
[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /
Found 4 items
drwxr-xr-x - root supergroup 0 2020-03-12 16:20 /inputDir
drwxr-xr-x - root supergroup 0 2020-03-12 16:54 /outputDir
drwxrwx--- - root supergroup 0 2020-03-12 15:40 /tmp
drwxrwx--- - root supergroup 0 2020-03-12 16:51 /yinzhengjie
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /outputDir
Found 2 items
-rw-r--r-- 3 root supergroup 0 2020-03-12 16:54 /outputDir/_SUCCESS
-rw-r--r-- 3 root supergroup 54 2020-03-12 16:54 /outputDir/part-r-00000
[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -cat /outputDir/part-r-00000
18 1
bigdata 2
golang 1
java 3
python 1
yinzhengjie 1
[root@hadoop101.yinzhengjie.org.cn ~]#

3>. Open the history server WebUI on the hadoop101.yinzhengjie.org.cn node