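Hadoop Basics: Deploying YARN Log Aggregation in Fully Distributed Mode

Author: 尹正杰

Copyright notice: this is an original work. Reproduction is not permitted; violations will be pursued legally.

Even without any extra configuration, the relevant logs can be read from the command line on the servers. To make them easier to inspect, however, the cluster can be configured so that logs are reachable through the web UI, and this post walks through the steps hands-on. If your cluster is low on resources, enabling log aggregation is not recommended. Servers in a typical big-data cluster are usually well provisioned, but the decision should still be based on your actual situation.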

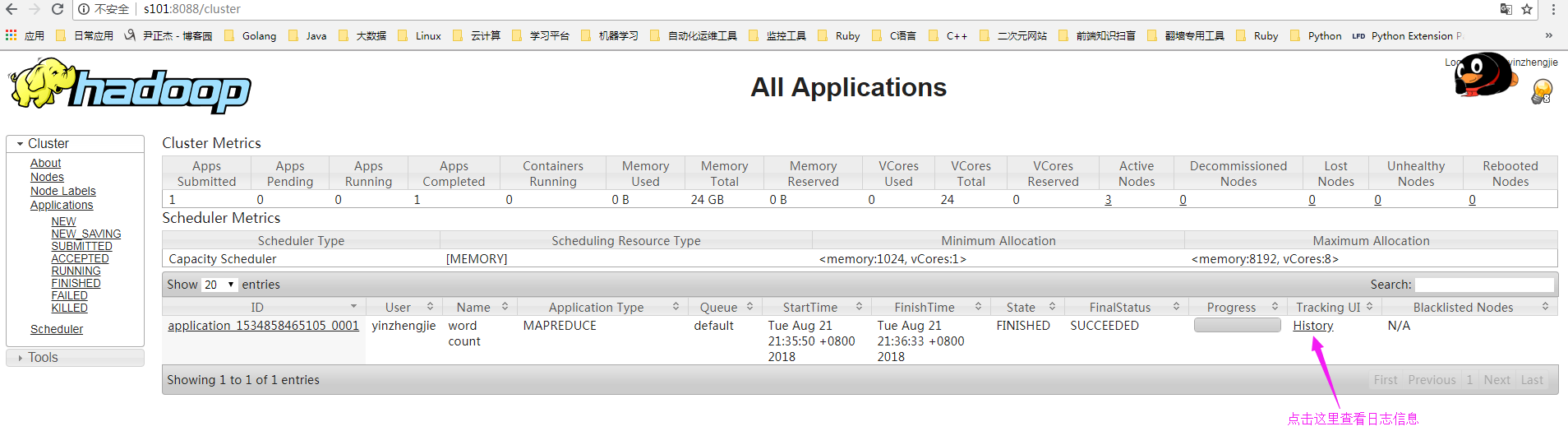

I. Viewing log information

1>. Viewing logs through the web interface

2>. By default, the web UI cannot display the logs

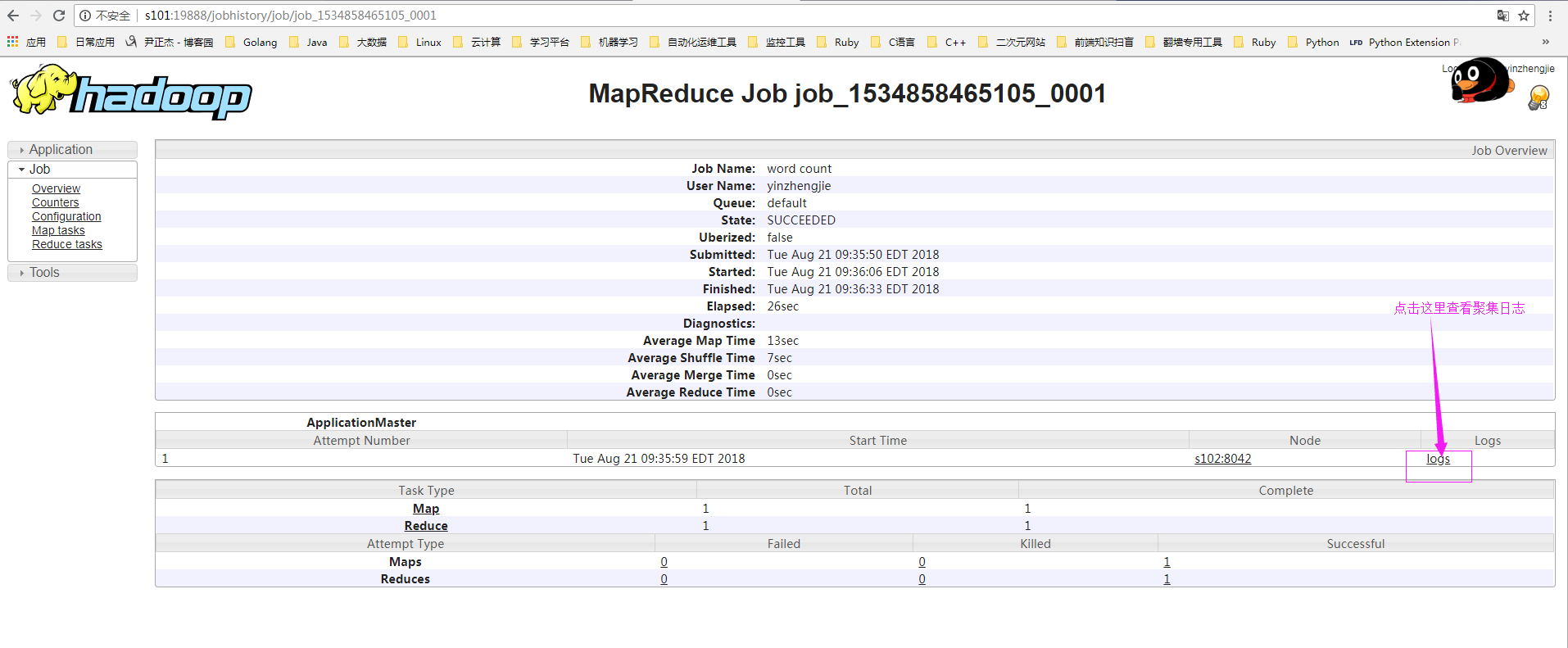

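3>. Viewing logs from the command line

Logs are stored by default in the logs folder under the Hadoop installation directory, so they can be read without configuring the web pages at all. The original screenshot for this step shows logs being viewed from the command line.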
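A minimal sketch of the command-line approach, assuming the daemon logs sit in a `logs` directory under the Hadoop install path (`/soft/hadoop/logs` is inferred from the paths used later in this post) and that daemon log files end in `.log`. `newest_log` is a hypothetical helper name, not part of Hadoop.

```shell
#!/bin/bash
# Hypothetical helper: print the most recently modified file in a log
# directory whose name matches a glob pattern (e.g. '*nodemanager*.log').
newest_log() {
    local dir=$1 pattern=$2
    # ls -t sorts by modification time, newest first; head keeps the first hit.
    # $pattern is deliberately unquoted so the shell expands the glob.
    ls -t "$dir"/$pattern 2>/dev/null | head -n 1
}

# Example (assumed install path): last 50 lines of the newest NodeManager log.
# tail -n 50 "$(newest_log /soft/hadoop/logs '*nodemanager*.log')"
```

This only reads the logs of the node you are logged in to, which is the inconvenience that log aggregation is meant to remove.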

II. Configuring log aggregation

1>. Stop the Hadoop cluster

    [yinzhengjie@s101 ~]$ stop-dfs.sh
    Stopping namenodes on [s101 s105]
    s101: stopping namenode
    s105: stopping namenode
    s103: stopping datanode
    s104: stopping datanode
    s102: stopping datanode
    Stopping journal nodes [s102 s103 s104]
    s102: stopping journalnode
    s104: stopping journalnode
    s103: stopping journalnode
    Stopping ZK Failover Controllers on NN hosts [s101 s105]
    s101: stopping zkfc
    s105: stopping zkfc
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ stop-yarn.sh
    stopping yarn daemons
    s101: stopping resourcemanager
    s105: no resourcemanager to stop
    s102: stopping nodemanager
    s104: stopping nodemanager
    s103: stopping nodemanager
    s102: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
    s104: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
    s103: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
    no proxyserver to stop
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ mr-jobhistory-daemon.sh stop historyserver
    stopping historyserver
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ more `which xcall.sh`
    #!/bin/bash
    #@author :yinzhengjie
    #blog:http://www.cnblogs.com/yinzhengjie
    #EMAIL:y1053419035@qq.com

    # Check that the user passed a command
    if [ $# -lt 1 ];then
        echo "Please supply a command"
        exit
    fi

    # Capture the command to run
    cmd=$@

    for (( i=101;i<=105;i++ ))
    do
        # Turn the terminal text green
        tput setaf 2
        echo ============= s$i $cmd ============
        # Restore the default light-gray text color
        tput setaf 7
        # Run the command on the remote host
        ssh s$i $cmd
        # Report whether the command succeeded
        if [ $? == 0 ];then
            echo "Command succeeded"
        fi
    done
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ xcall.sh jps
    ============= s101 jps ============
    7189 Jps
    Command succeeded
    ============= s102 jps ============
    4080 Jps
    2335 QuorumPeerMain
    Command succeeded
    ============= s103 jps ============
    4247 Jps
    2333 QuorumPeerMain
    Command succeeded
    ============= s104 jps ============
    2328 QuorumPeerMain
    4187 Jps
    Command succeeded
    ============= s105 jps ============
    4431 Jps
    Command succeeded
    [yinzhengjie@s101 ~]$
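The point of running `jps` on every node is to confirm that no Hadoop daemon survived the shutdown (ZooKeeper's QuorumPeerMain is expected to stay up). That check could be automated roughly as follows. `leftover_daemons` is a hypothetical helper, and the daemon list is only the set of process names that appear in this post's `jps` output.

```shell
#!/bin/bash
# Hypothetical helper: read `jps` output on stdin and print the names of any
# Hadoop daemons still running. Jps itself and QuorumPeerMain are ignored.
leftover_daemons() {
    awk '$2 ~ /^(NameNode|DataNode|JournalNode|DFSZKFailoverController|ResourceManager|NodeManager|JobHistoryServer)$/ { print $2 }'
}

# Example on a cluster node (not run here): prints nothing when the node is clean.
# ssh s102 jps | leftover_daemons
```

An empty result on all five hosts means it is safe to edit and redistribute the configuration.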

2>. Edit the "yarn-site.xml" configuration file

    [yinzhengjie@s101 ~]$ more /soft/hadoop/etc/hadoop/yarn-site.xml
    <?xml version="1.0"?>
    <configuration>
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>s101</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>

        <property>
            <name>yarn.nodemanager.pmem-check-enabled</name>
            <value>false</value>
        </property>

        <property>
            <name>yarn.nodemanager.vmem-check-enabled</name>
            <value>false</value>
        </property>

        <property>
            <name>yarn.log-aggregation-enable</name>
            <value>true</value>
        </property>

        <!-- Retain aggregated logs for 7 days -->
        <property>
            <name>yarn.log-aggregation.retain-seconds</name>
            <value>604800</value>
        </property>

    </configuration>

    <!--

    Purpose of yarn-site.xml:
        Mainly holds the YARN (scheduler-level) parameters.

    yarn.resourcemanager.hostname:
        Host name of the ResourceManager.

    yarn.nodemanager.aux-services:
        Auxiliary service run by each NodeManager; mapreduce_shuffle enables the MapReduce shuffle.

    yarn.nodemanager.pmem-check-enabled:
        Whether to start a thread that checks the physical memory each task is using
        and kills the task if it exceeds its allocation. Default: true.

    yarn.nodemanager.vmem-check-enabled:
        Whether to start a thread that checks the virtual memory each task is using
        and kills the task if it exceeds its allocation. Default: true.

    yarn.log-aggregation-enable:
        Whether to enable log aggregation, which makes the logs viewable from the web UI. Default: false.

    yarn.log-aggregation.retain-seconds:
        How long aggregated logs are kept, in seconds.
    -->
    [yinzhengjie@s101 ~]$
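Because `yarn.log-aggregation.retain-seconds` is expressed in seconds, it is easy to mistype the value for a retention period given in days. A small sketch of the arithmetic follows; `days_to_seconds` is a hypothetical helper, not a Hadoop command.

```shell
#!/bin/bash
# Hypothetical helper: convert a retention period in days into the number of
# seconds expected by yarn.log-aggregation.retain-seconds.
days_to_seconds() {
    echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7    # prints 604800, the 7-day value configured in yarn-site.xml
```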

3>. Distribute the configuration files to every node

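    [yinzhengjie@s101 ~]$ more `which xrsync.sh`
    #!/bin/bash
    #@author :yinzhengjie
    #blog:http://www.cnblogs.com/yinzhengjie
    #EMAIL:y1053419035@qq.com

    # Check that the user passed a path
    if [ $# -lt 1 ];then
        echo "Please supply a path";
        exit
    fi

    # Path to synchronize
    file=$@

    # Last path component (file or directory name)
    filename=`basename $file`

    # Parent directory
    dirpath=`dirname $file`

    # Resolve the parent directory to an absolute physical path
    cd $dirpath
    fullpath=`pwd -P`

    # Sync the files to the other nodes
    for (( i=102;i<=105;i++ ))
    do
        # Turn the terminal text green
        tput setaf 2
        # Note: %file is printed literally (see the output below); $file was probably intended
        echo =========== s$i %file ===========
        # Restore the default light-gray text color
        tput setaf 7
        # Copy to the same path on the remote host
        rsync -lr $filename `whoami`@s$i:$fullpath
        # Report whether the command succeeded
        if [ $? == 0 ];then
            echo "Command succeeded"
        fi
    done
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ xrsync.sh /soft/hadoop-2.7.3/
    =========== s102 %file ===========
    Command succeeded
    =========== s103 %file ===========
    Command succeeded
    =========== s104 %file ===========
    Command succeeded
    =========== s105 %file ===========
    Command succeeded
    [yinzhengjie@s101 ~]$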

4>. Start the Hadoop cluster

    [yinzhengjie@s101 ~]$ start-dfs.sh
    Starting namenodes on [s101 s105]
    s105: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s105.out
    s101: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s101.out
    s103: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s103.out
    s102: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s102.out
    s104: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s104.out
    Starting journal nodes [s102 s103 s104]
    s102: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s102.out
    s103: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s103.out
    s104: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s104.out
    Starting ZK Failover Controllers on NN hosts [s101 s105]
    s105: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s105.out
    s101: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s101.out
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ start-yarn.sh
    starting yarn daemons
    s101: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s101.out
    s105: starting resourcemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-resourcemanager-s105.out
    s102: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s102.out
    s103: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s103.out
    s104: starting nodemanager, logging to /soft/hadoop-2.7.3/logs/yarn-yinzhengjie-nodemanager-s104.out
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ mr-jobhistory-daemon.sh start historyserver
    starting historyserver, logging to /soft/hadoop-2.7.3/logs/mapred-yinzhengjie-historyserver-s101.out
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ xcall.sh jps
    ============= s101 jps ============
    7648 DFSZKFailoverController
    8210 Jps
    7333 NameNode
    8123 JobHistoryServer
    7836 ResourceManager
    Command succeeded
    ============= s102 jps ============
    4131 DataNode
    4217 JournalNode
    4363 NodeManager
    4491 Jps
    2335 QuorumPeerMain
    Command succeeded
    ============= s103 jps ============
    4384 JournalNode
    4529 NodeManager
    4661 Jps
    4298 DataNode
    2333 QuorumPeerMain
    Command succeeded
    ============= s104 jps ============
    4324 JournalNode
    4470 NodeManager
    2328 QuorumPeerMain
    4601 Jps
    4238 DataNode
    Command succeeded
    ============= s105 jps ============
    4482 NameNode
    4778 Jps
    4590 DFSZKFailoverController
    Command succeeded
    [yinzhengjie@s101 ~]$

5>. Run a MapReduce program on YARN

    [yinzhengjie@s101 ~]$ hdfs dfs -rm -R /yinzhengjie/data/output
    18/08/21 09:22:52 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.
    Deleted /yinzhengjie/data/output
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ hadoop jar /soft/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /yinzhengjie/data/input /yinzhengjie/data/output
    18/08/21 09:23:10 INFO client.RMProxy: Connecting to ResourceManager at s101/172.30.1.101:8032
    18/08/21 09:23:11 INFO input.FileInputFormat: Total input paths to process : 1
    18/08/21 09:23:11 INFO mapreduce.JobSubmitter: number of splits:1
    18/08/21 09:23:12 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1534857666985_0001
    18/08/21 09:23:12 INFO impl.YarnClientImpl: Submitted application application_1534857666985_0001
    18/08/21 09:23:12 INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1534857666985_0001/
    18/08/21 09:23:12 INFO mapreduce.Job: Running job: job_1534857666985_0001
    18/08/21 09:23:29 INFO mapreduce.Job: Job job_1534857666985_0001 running in uber mode : false
    18/08/21 09:23:29 INFO mapreduce.Job:  map 0% reduce 0%
    18/08/21 09:23:45 INFO mapreduce.Job:  map 100% reduce 0%
    18/08/21 09:23:56 INFO mapreduce.Job:  map 100% reduce 100%
    18/08/21 09:23:56 INFO mapreduce.Job: Job job_1534857666985_0001 completed successfully
    18/08/21 09:23:56 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=4469
            FILE: Number of bytes written=249687
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=3931
            HDFS: Number of bytes written=3315
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=13048
            Total time spent by all reduces in occupied slots (ms)=7445
            Total time spent by all map tasks (ms)=13048
            Total time spent by all reduce tasks (ms)=7445
            Total vcore-milliseconds taken by all map tasks=13048
            Total vcore-milliseconds taken by all reduce tasks=7445
            Total megabyte-milliseconds taken by all map tasks=13361152
            Total megabyte-milliseconds taken by all reduce tasks=7623680
        Map-Reduce Framework
            Map input records=104
            Map output records=497
            Map output bytes=5733
            Map output materialized bytes=4469
            Input split bytes=114
            Combine input records=497
            Combine output records=288
            Reduce input groups=288
            Reduce shuffle bytes=4469
            Reduce input records=288
            Reduce output records=288
            Spilled Records=576
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=98
            CPU time spent (ms)=1180
            Physical memory (bytes) snapshot=442728448
            Virtual memory (bytes) snapshot=4217950208
            Total committed heap usage (bytes)=290455552
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=3817
        File Output Format Counters
            Bytes Written=3315
    [yinzhengjie@s101 ~]$

6>. View the YARN application records

7>. View the job history

8>. View the log information
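Besides the web UI, aggregated logs of a finished application can also be fetched with the `yarn logs -applicationId` command once log aggregation is enabled. The sketch below derives the application ID from the job ID printed in the job output above. `job_to_app_id` is a hypothetical helper, and the `yarn logs` call is left commented out because it needs a running cluster.

```shell
#!/bin/bash
# Hypothetical helper: derive the YARN application ID from a MapReduce job ID.
# Both share the same cluster timestamp and sequence number.
job_to_app_id() {
    echo "$1" | sed 's/^job_/application_/'
}

app_id=$(job_to_app_id job_1534857666985_0001)
echo "$app_id"    # prints application_1534857666985_0001

# On a cluster node (not run here): dump the aggregated container logs.
# yarn logs -applicationId "$app_id" | less
```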