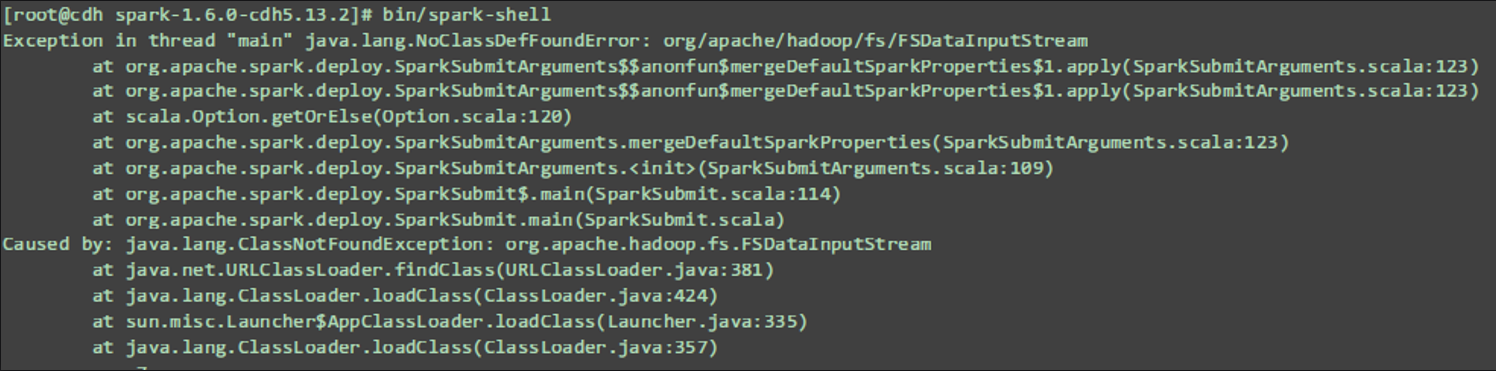

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream

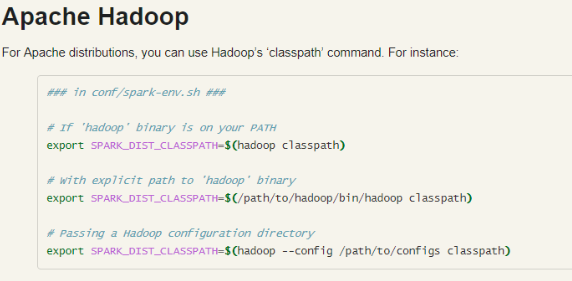

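Fix: set `SPARK_DIST_CLASSPATH` so that Spark picks up Hadoop's jars, for example in `$SPARK_HOME/conf/spark-env.sh`:

```shell
export SPARK_DIST_CLASSPATH=$(hadoop classpath)
```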

You also need to configure the following:

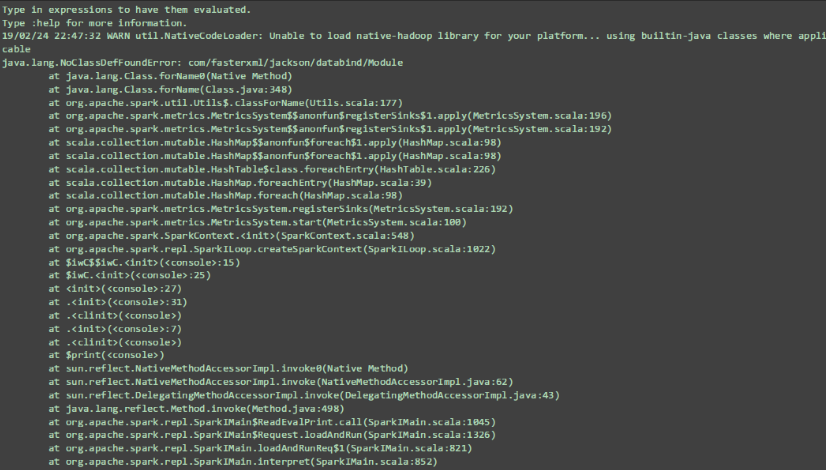
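To check the setting, you can confirm that a hadoop-common jar is reachable from the classpath Spark will use. That jar contains `org.apache.hadoop.fs.FSDataInputStream`. Below is a minimal POSIX shell sketch. The function name `find_hadoop_common` is hypothetical, and the helper only inspects jar file names, not the contents of the jars.

```shell
# Hypothetical helper: print each hadoop-common jar reachable from a
# colon-separated classpath such as the output of `hadoop classpath`.
# It matches file names only; it does not open the jars.
find_hadoop_common() {
  rest="$1:"
  while [ -n "$rest" ]; do
    entry=${rest%%:*}   # first classpath entry
    rest=${rest#*:}     # remaining entries
    case "$entry" in
      */\*)
        # Wildcard entry like /usr/lib/hadoop/*: strip the trailing '*'
        # and look for hadoop-common jars in that directory
        dir=${entry%?}
        for jar in "$dir"hadoop-common-*.jar; do
          if [ -e "$jar" ]; then echo "$jar"; fi
        done
        ;;
      */hadoop-common-*.jar)
        # Explicit jar entry
        if [ -e "$entry" ]; then echo "$entry"; fi
        ;;
    esac
  done
}

# Usage (assumes SPARK_DIST_CLASSPATH was exported as shown above):
#   find_hadoop_common "$SPARK_DIST_CLASSPATH"
```

If the output is empty, Spark will most likely still fail to load `FSDataInputStream`.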

Spark fails to start with java.lang.NoClassDefFoundError: com/fasterxml/jackson/databind/Module

Use Maven to download the following dependencies: jackson-databind-xxx.jar, jackson-core-xxx.jar, and jackson-annotations-xxx.jar.

Put them in `$HADOOP_HOME/share/hadoop/common`.

```xml
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.4.4</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-core</artifactId>
    <version>2.4.4</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-annotations</artifactId>
    <version>2.4.4</version>
</dependency>
```
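The download-and-copy step can be scripted. This sketch assumes the following:

* `mvn` is on `PATH`.
* Maven uses its default local repository (`~/.m2/repository`).
* `HADOOP_HOME` is set.

It fetches each artifact with the `dependency:get` goal of the Maven Dependency Plugin. `m2_jar` and `install_jackson` are hypothetical helper names.

```shell
# Hypothetical helper: path of a jar in Maven's default local repository.
# groupId dots become directories, e.g. com.fasterxml.jackson.core -> com/fasterxml/jackson/core
m2_jar() {
  echo "$HOME/.m2/repository/$(echo "$1" | tr . /)/$2/$3/$2-$3.jar"
}

# Hypothetical helper: fetch the three Jackson jars (default version 2.4.4,
# matching the POM snippet above) and copy them into Hadoop's common directory.
install_jackson() {
  version=${1:-2.4.4}
  for artifact in jackson-databind jackson-core jackson-annotations; do
    mvn -q dependency:get -Dartifact="com.fasterxml.jackson.core:$artifact:$version" || return 1
    cp "$(m2_jar com.fasterxml.jackson.core "$artifact" "$version")" \
       "$HADOOP_HOME/share/hadoop/common/" || return 1
  done
}

# Usage: install_jackson            (or: install_jackson <version>)
```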

Spark fails to start with java.lang.NoClassDefFoundError: parquet/hadoop/ParquetOutputCommitter

The fix is to download the jar below, put it on Spark's startup classpath, and then start Spark.

```xml
<dependency>
    <groupId>com.twitter</groupId>
    <artifactId>parquet-hadoop</artifactId>
    <version>1.4.3</version>
</dependency>
```

Put the jar above into /opt/app/spark-1.6.0-cdh5.13.2/sbin. If sbin does not work, also put a copy of the same jar in the lib directory.
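The first fix already adds extra jars to Spark's launch classpath through `SPARK_DIST_CLASSPATH`. Another option worth trying, though not the one used in this note, is to append the parquet-hadoop jar to that same variable. This is a minimal sketch. It assumes the jar was copied to the lib directory named above, and it assumes both the helper and the export go in `conf/spark-env.sh`. `append_cp` is a hypothetical helper.

```shell
# Hypothetical helper: append a jar to a colon-separated classpath, skipping it
# if already present and avoiding a leading ':' when the classpath is empty.
append_cp() {
  case ":$1:" in
    *":$2:"*) echo "$1" ;;        # already on the classpath
    *) echo "${1:+$1:}$2" ;;      # add a ':' separator only if $1 is non-empty
  esac
}

# Jar location from this note; adjust to your installation.
PARQUET_JAR=/opt/app/spark-1.6.0-cdh5.13.2/lib/parquet-hadoop-1.4.3.jar

# In conf/spark-env.sh (assumes the `hadoop` CLI is on PATH):
#   export SPARK_DIST_CLASSPATH=$(append_cp "$(hadoop classpath)" "$PARQUET_JAR")
```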