一、分词器

Elasticsearch中,内置了很多分词器(analyzers),例如standard(标准分词器)、english(英文分词)和chinese(中文分词),默认是standard.

standard tokenizer:以单词边界进行切分

standard token filter:什么都不做

lowercase token filter:将所有字母转换为小写

stop token filer(默认被禁用):移除停用词,比如a the it等等

启用english,停用词token filter

PUT /my_index { "settings": { "analysis": { "analyzer": { "es_std":{ "type":"standard", "stopwords":"_english_" } } } } }

三、标准分词测试代码

GET /my_index/_analyze { "analyzer": "standard", "text":"a dog is in the house" }

结果:

{ "tokens": [ { "token": "a", "start_offset": 0, "end_offset": 1, "type": "<ALPHANUM>", "position": 0 }, { "token": "dog", "start_offset": 2, "end_offset": 5, "type": "<ALPHANUM>", "position": 1 }, { "token": "is", "start_offset": 6, "end_offset": 8, "type": "<ALPHANUM>", "position": 2 }, { "token": "in", "start_offset": 9, "end_offset": 11, "type": "<ALPHANUM>", "position": 3 }, { "token": "the", "start_offset": 12, "end_offset": 15, "type": "<ALPHANUM>", "position": 4 }, { "token": "house", "start_offset": 16, "end_offset": 21, "type": "<ALPHANUM>", "position": 5 } ] }

四、设置的英文分词测试代码

GET /my_index/_analyze { "analyzer": "es_std", "text":"a dog is in the house" }

结果:

{ "tokens": [ { "token": "dog", "start_offset": 2, "end_offset": 5, "type": "<ALPHANUM>", "position": 1 }, { "token": "house", "start_offset": 16, "end_offset": 21, "type": "<ALPHANUM>", "position": 5 } ] }

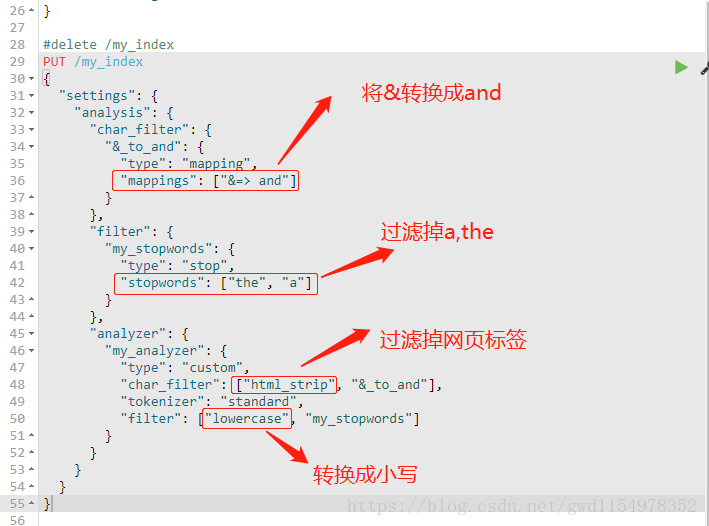

五、自定义分词器

PUT /my_index { "settings": { "analysis": { "char_filter": { "&_to_and": { "type": "mapping", "mappings": ["&=> and"] } }, "filter": { "my_stopwords": { "type": "stop", "stopwords": ["the", "a"] } }, "analyzer": { "my_analyzer": { "type": "custom", "char_filter": ["html_strip", "&_to_and"], "tokenizer": "standard", "filter": ["lowercase", "my_stopwords"] } } } } }

测试:

GET /my_index/_analyze { "text": "tom&jerry are a friend in the house, <a>, HAHA!!", "analyzer": "my_analyzer" }

结果:

{ "tokens": [ { "token": "tomandjerry", "start_offset": 0, "end_offset": 9, "type": "<ALPHANUM>", "position": 0 }, { "token": "are", "start_offset": 10, "end_offset": 13, "type": "<ALPHANUM>", "position": 1 }, { "token": "friend", "start_offset": 16, "end_offset": 22, "type": "<ALPHANUM>", "position": 3 }, { "token": "in", "start_offset": 23, "end_offset": 25, "type": "<ALPHANUM>", "position": 4 }, { "token": "house", "start_offset": 30, "end_offset": 35, "type": "<ALPHANUM>", "position": 6 }, { "token": "haha", "start_offset": 42, "end_offset": 46, "type": "<ALPHANUM>", "position": 7 } ] }

六、type中的使用

PUT /my_index/_mapping/my_type { "properties": { "content": { "type": "text", "analyzer": "my_analyzer" } } }