一、数据准备

现准备原始json数据(test.json)如下:

{"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"}

{"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"}

{"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"}

{"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"}

{"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"}

{"movie":"1197","rate":"3","timeStamp":"978302268","uid":"1"}

{"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"}

{"movie":"2804","rate":"5","timeStamp":"978300719","uid":"1"}

{"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"}

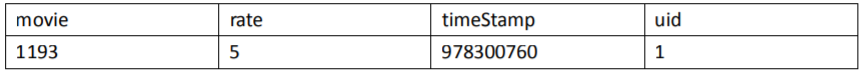

现在将数据导入到hive中,并且最终想要得到这么一个结果:

可以使用:内置函数(get_json_object)或者自定义函数完成

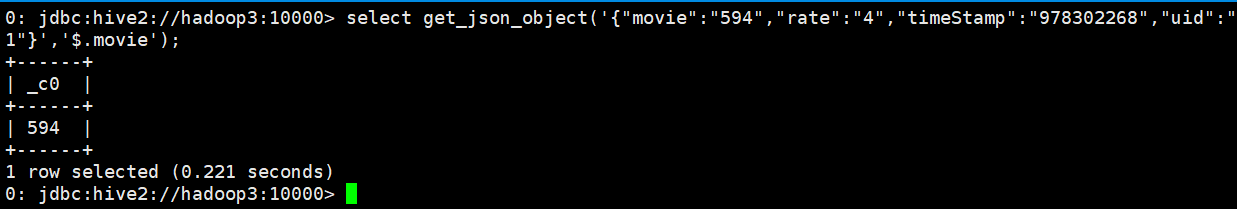

二、get_json_object(string json_string, string path)

返回值:String

说明:解析json的字符串json_string,返回path指定的内容。如果输入的json字符串无效,那么返回NUll,这个函数每次只能返回一个数据项。

0: jdbc:hive2://hadoop3:10000> select get_json_object('{"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"}','$.movie');

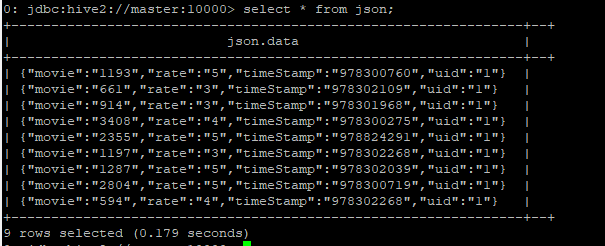

1、创建json表并将数据导入

0: jdbc:hive2://master:10000> create table json(data string); No rows affected (0.572 seconds)

0: jdbc:hive2://master:10000> load data local inpath '/home/hadoop/json.txt' into table json;

No rows affected (1.046 seconds)

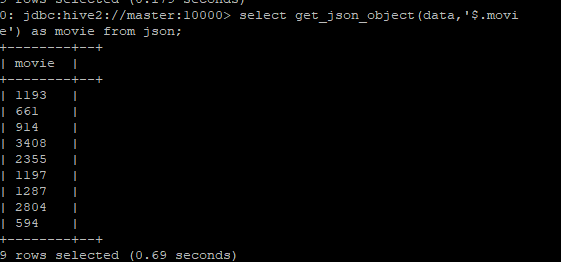

0: jdbc:hive2://master:10000> select get_json_object(data,'$.movie') as movie from json;

三、json_tuple(jsonStr, k1, k2, ...)

参数为一组键k1,k2,。。。。。和json字符串,返回值的元组。该方法比get_json_object高效,因此可以在一次调用中输入多次键

0: jdbc:hive2://master:10000> select b.b_movie,b.b_rate,b.b_timeStamp,b.b_uid from json a lateral view

json_tuple(a.data,'movie','rate','timeStamp','uid') b as b_movie,b_rate,b_timeStamp,b_uid;

注意点:

json_tuple相当于get_json_object的优势就是一次可以解析多个Json字段。但是如果我们有个Json数组,这两个函数都无法处理

四、Json数组解析

1、使用Hive自带的函数解析Json数组

Hive的内置的explode函数,explode()函数接收一个 array或者map 类型的数据作为输入,然后将 array 或 map 里面的元素按照每行的形式输出。其可以配合 LATERAL VIEW 一起使用。

hive> select explode(array('A','B','C')); OK A B C Time taken: 4.879 seconds, Fetched: 3 row(s) hive> select explode(map('A',10,'B',20,'C',30)); OK A 10 B 20 C 30 Time taken: 0.261 seconds, Fetched: 3 row(s)

这个explode函数和我们解析json数据是有关系的,我们可以使用explode函数将json数组里面的元素按照一行一行的形式输出:

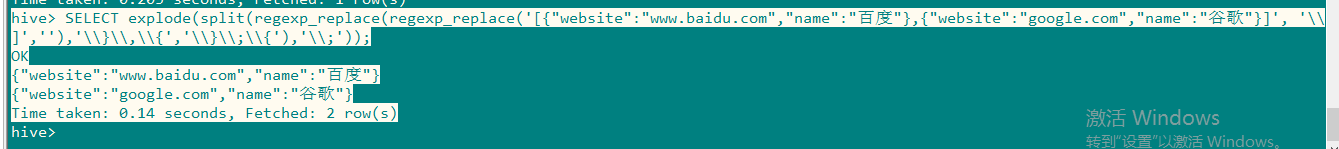

hive> SELECT explode(split(regexp_replace(regexp_replace('[{"website":"www.baidu.com","name":"百度"},{"website":"google.com","name":"谷歌"}]', '\]',''),'\}\,\{','\}\;\{'),'\;')); OK {"website":"www.baidu.com","name":"百度"} {"website":"google.com","name":"谷歌"} Time taken: 0.14 seconds, Fetched: 2 row(s)

说明:

SELECT explode(split( regexp_replace( regexp_replace( '[ {"website":"www.baidu.com","name":"百度"}, {"website":"google.com","name":"谷歌"} ]', '\[|\]',''), --将 Json 数组两边的中括号去掉 '\}\,\{' --将 Json 数组元素之间的逗号换成分号 ,'\}\;\{'), '\;')); --以分号作为分隔符

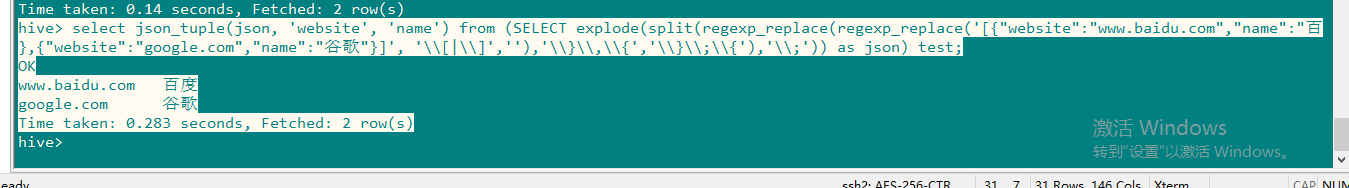

结合 get_json_object 或 json_tuple 来解析里面的字段:

hive> select json_tuple(json, 'website', 'name') from (SELECT explode(split(regexp_replace(regexp_replace('[{"website":"www.baidu.com","name":"百},{"website":"google.com","name":"谷歌"}]', '\[|\]',''),'\}\,\{','\}\;\{'),'\;')) as json) test; OK www.baidu.com 百度 google.com 谷歌 Time taken: 0.283 seconds, Fetched: 2 row(s)

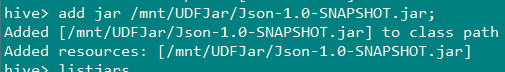

2、自定义函数解析JSON数组

虽然可以使用Hive自带的函数类解析Json数组,但是使用起来有些麻烦。Hive提供了强大的自定义函数(UDF)的接口,我们可以使用这个功能来编写解析JSON数组的UDF。具体测试过程如下:

<dependencies>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.1.1</version>

</dependency>

</dependencies>

import org.apache.hadoop.hive.ql.exec.Description; import org.apache.hadoop.hive.ql.exec.UDF; import org.json.JSONArray; import org.json.JSONException; import java.util.ArrayList; @Description(name = "json_array", value = "_FUNC_(array_string) - Convert a string of a JSON-encoded array to a Hive array of strings.") public class JsonArray extends UDF{ public ArrayList<String> evaluate(String jsonString) { if (jsonString == null) { return null; } try { JSONArray extractObject = new JSONArray(jsonString); ArrayList<String> result = new ArrayList<String>(); for (int ii = 0; ii < extractObject.length(); ++ii) { result.add(extractObject.get(ii).toString()); } return result; } catch (JSONException e) { return null; } catch (NumberFormatException e) { return null; } } }

将上面的代码进行编译打包,jar包名为:HiveJsonTest-1.0-SNAPSHOT.jar

hive> add jar /mnt/HiveJsonTest-1.0-SNAPSHOT.jar; Added [/mnt/HiveJsonTest-1.0-SNAPSHOT.jar] to class path Added resources: [/mnt/HiveJsonTest-1.0-SNAPSHOT.jar]

hive> create temporary function json_array as 'JsonArray'; OK Time taken: 0.111 seconds

hive> select explode(json_array('[{"website":"www.baidu.com","name":"百度"},{"website":"google.com"name":"谷歌"}]')); OK {"website":"www.baidu.com","name":"百度"} {"website":"google.com","name":"谷歌"} Time taken: 10.427 seconds, Fetched: 2 row(s)

hive> select json_tuple(json, 'website', 'name') from (SELECT explode(json_array('[{"website":"www.baidu.com","name":"百度"},{"website":"google.com","name":"谷歌"}]')) as json) test; OK www.baidu.com 百度 google.com 谷歌 Time taken: 0.265 seconds, Fetched: 2 row(s)

3、自定义函数解析json对象

package com.laotou; import org.apache.commons.lang3.StringUtils; import org.apache.hadoop.hive.ql.exec.UDF; import org.json.JSONException; import org.json.JSONObject; import org.json.JSONTokener; /** * * add jar jar/bdp_udf_demo-1.0.0.jar; * create temporary function getJsonObject as 'com.laotou.JsonObjectParsing'; * Json对象解析UDF * @Author: * @Date: 2019/8/9 */ public class JsonObjectParsing extends UDF { public static String evaluate(String jsonStr, String keyName) throws JSONException { if(StringUtils.isBlank(jsonStr) || StringUtils.isBlank(keyName)){ return null; } JSONObject jsonObject = new JSONObject(new JSONTokener(jsonStr)); Object objValue = jsonObject.get(keyName); if(objValue==null){ return null; } return objValue.toString(); } }

3、1准备数据

3、2测试