I. Introduction

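Filebeat is a lightweight, single-purpose log collector. It is meant for servers that do not have Java installed, where its only job is to collect logs, and it can forward them to Logstash, Elasticsearch, Redis and similar destinations for further processing.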

Flow: Filebeat collects logs and sends them to Logstash ===> Logstash receives the logs and writes them to Redis or Kafka ===> Logstash reads the logs from Redis or Kafka and writes them to ELK

II. Collecting logs with Filebeat

1.1.1 Installing Filebeat

Download location: https://artifacts.elastic.co/downloads/beats/filebeat/

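Install the downloaded package:

    yum -y install filebeat-5.6.5-x86_64.rpm

Edit the configuration file:

    vim /etc/filebeat/filebeat.yml

The relevant settings are shown below. The `filebeat.prospectors:` key and the first `- input_type: log` line are not shown in the original excerpt; they are assumed here because the default 5.x configuration file already contains them.

    filebeat.prospectors:
    - input_type: log                     # collect system logs
      paths:                              # log paths to collect
        - /var/log/*.log
        - /var/log/messages
      exclude_lines: ["^DBG"]             # skip lines that start with DBG
      #include_lines: ["^ERR", "^WARN"]   # collect only lines that start with ERR or WARN
      exclude_files: [".gz$"]             # skip files that end in .gz
      document_type: "system-log-dev-filebeat"   # sets the type field of each event

    - input_type: log                     # collect Nginx logs
      paths:
        - /var/log/nginx/access_json.log  # log path to collect
      exclude_lines: ["^DBG"]
      exclude_files: [".gz$"]
      document_type: "nginx-log-dev-filebeat"    # defines the type

    # Write collected events to a file (for testing only)
    output.file:
      path: "/tmp"
      filename: "filebeat.txt"

    # Send collected events to Logstash
    output.logstash:
      hosts: ["192.168.10.167:5400"]      # Logstash server address; several may be listed
      enabled: true                       # whether to send to Logstash (on by default)
      worker: 1                           # number of workers
      compression_level: 3                # compression level
      #loadbalance: true                  # enable load balancing when several hosts are listed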
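
Before restarting Filebeat, it can help to try the line and file patterns on the command line. The following is only a rough sketch that approximates the three filter options with `grep -E`; the sample log lines and file names are made up for illustration, and Filebeat's own regular expression engine may behave differently from grep on more complex patterns.

```shell
#!/usr/bin/env bash
# Rough emulation of the filters configured above, using grep -E.
# The sample log lines and file names are hypothetical.

sample_lines() {
  printf '%s\n' \
    'DBG cache warm-up' \
    'ERR disk full' \
    'WARN slow query' \
    'INFO service started'
}

sample_files() {
  printf '%s\n' \
    '/var/log/messages' \
    '/var/log/yum.log' \
    '/var/log/messages-20171201.gz'
}

# exclude_lines: ["^DBG"] -> drop every line that starts with DBG
kept_lines=$(sample_lines | grep -Ev '^DBG')

# include_lines: ["^ERR", "^WARN"] -> keep only lines matching one of the patterns
included_lines=$(sample_lines | grep -E '^ERR|^WARN')

# exclude_files: [".gz$"] -> skip files whose path matches the pattern
kept_files=$(sample_files | grep -Ev '.gz$')

echo "--- after exclude_lines ---";  echo "$kept_lines"
echo "--- after include_lines ---";  echo "$included_lines"
echo "--- after exclude_files ---";  echo "$kept_files"
```

Note that the dot in `.gz$` is unescaped, so it matches any character followed by `gz` at the end of the path; `\.gz$` would be the stricter form.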

1.1.2 Restart and verify

    [root@localhost tmp]# systemctl restart filebeat.service
    [root@localhost tmp]# ls filebeat.txt
    filebeat.txt
    [root@DNS-Server tools]# /tools/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.10.10:2181,192.168.10.167:2181,192.168.10.171:2181
    __consumer_offsets
    nginx-access-kafkaceshi
    nginx-accesslog-kafka-test

II. Writing to Kafka and verifying

    [root@DNS-Server ~]# cat /etc/logstash/conf.d/filebeat.conf
    input {
      beats {
        port => "5400"                              # port that Filebeat sends to
        codec => "json"
      }
    }

    output {
      if [type] == "system-log-dev-filebeat" {      # type defined in Filebeat
        kafka {
          bootstrap_servers => "192.168.10.10:9092"
          topic_id => "system-log-filebe-dev"       # Kafka topic
          codec => "json"
        }
      }
      if [type] == "nginx-log-dev-filebeat" {
        kafka {
          bootstrap_servers => "192.168.10.10:9092"
          topic_id => "nginx-log-filebe-dev"
          codec => "json"
        }
      }
    }

    [root@DNS-Server ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/filebeat.conf -t
    WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults
    Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console
    Configuration OK

    [root@DNS-Server ~]# /tools/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.10.10:2181,192.168.10.167:2181,192.168.10.171:2181
    __consumer_offsets
    nginx-access-kafkaceshi
    nginx-accesslog-kafka-test
    nginx-log-filebe-dev
    system-log-filebe-dev
    [root@DNS-Server ~]# systemctl restart logstash.service
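
Reading the topic list by eye gets error-prone once there are many topics. As a small sketch, the check can be scripted: the listing below is pasted from the output above so the example is self-contained, and `has_topic` is a hypothetical helper name. On a live system the listing could instead come from the `kafka-topics.sh --list` command shown earlier.

```shell
#!/usr/bin/env bash
# Check that the topics written by the Logstash pipeline appear in a topic listing.
# The listing is copied from the session above rather than queried live.

topic_list='__consumer_offsets
nginx-access-kafkaceshi
nginx-accesslog-kafka-test
nginx-log-filebe-dev
system-log-filebe-dev'

# has_topic NAME: succeeds only if NAME appears as a whole line in the listing.
has_topic() {
  printf '%s\n' "$topic_list" | grep -Fxq -- "$1"
}

missing=0
for topic in system-log-filebe-dev nginx-log-filebe-dev; do
  if has_topic "$topic"; then
    echo "found:   $topic"
  else
    echo "missing: $topic"
    missing=$((missing + 1))
  fi
done

echo "missing topics: $missing"
```

Matching whole lines with `grep -Fx` avoids false positives from topics that merely share a prefix, such as `nginx-access-kafkaceshi` and `nginx-accesslog-kafka-test`.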

III. Writing from Logstash to ELK

3.1.1 Write the configuration file, verify it, and restart

    [root@DNS-Server ~]# cat /etc/logstash/conf.d/filebeat_elk.conf
    input {
      kafka {
        bootstrap_servers => "192.168.10.10:9092"
        topics => "system-log-filebe-dev"           # Kafka topic
        group_id => "system-log-filebeat"
        codec => "json"
        consumer_threads => 1
        decorate_events => true
      }
      kafka {
        bootstrap_servers => "192.168.10.10:9092"
        topics => "nginx-log-filebe-dev"
        group_id => "nginx-log-filebeat"
        codec => "json"
        consumer_threads => 1
        decorate_events => true
      }
    }

    output {
      if [type] == "system-log-dev-filebeat" {
        elasticsearch {
          hosts => ["192.168.10.10:9200"]
          index => "systemlog-filebeat-dev-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "nginx-log-dev-filebeat" {       # type defined in Filebeat
        elasticsearch {
          hosts => ["192.168.10.10:9200"]
          index => "logstash-nginxlog-filebeatdev-%{+YYYY.MM.dd}"
        }
      }
    }
    [root@DNS-Server ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/filebeat_elk.conf -t
    WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults
    Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console
    Configuration OK
    [root@DNS-Server ~]# systemctl restart logstash.service
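
When looking for the resulting indices, it helps to know which daily index name to expect. The `%{+YYYY.MM.dd}` suffix is filled in from the event's `@timestamp`, which Logstash keeps in UTC. The sketch below reproduces that naming with GNU `date`; `index_for` is a hypothetical helper, the timestamps are example values, and the `-d` option is assumed to be available (it is GNU-specific).

```shell
#!/usr/bin/env bash
# Compute the daily index name that "%{+YYYY.MM.dd}" would yield for a timestamp.
# Assumes GNU date (the -d option); index_for is a hypothetical helper.

index_for() {
  local prefix=$1 timestamp=$2
  # The date part is taken in UTC, hence date -u.
  printf '%s%s\n' "$prefix" "$(date -u -d "$timestamp" '+%Y.%m.%d')"
}

# An event stamped in UTC.
index_for 'systemlog-filebeat-dev-' '2017-12-25T10:15:00Z'

# An event stamped early in the morning in a UTC+8 zone.
index_for 'logstash-nginxlog-filebeatdev-' '2017-12-26T07:30:00+08:00'
```

The second call lands in the index for 2017.12.25, because 07:30 at UTC+8 is still 23:30 on the previous day in UTC. Events from the early local morning can therefore appear in the previous day's index.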

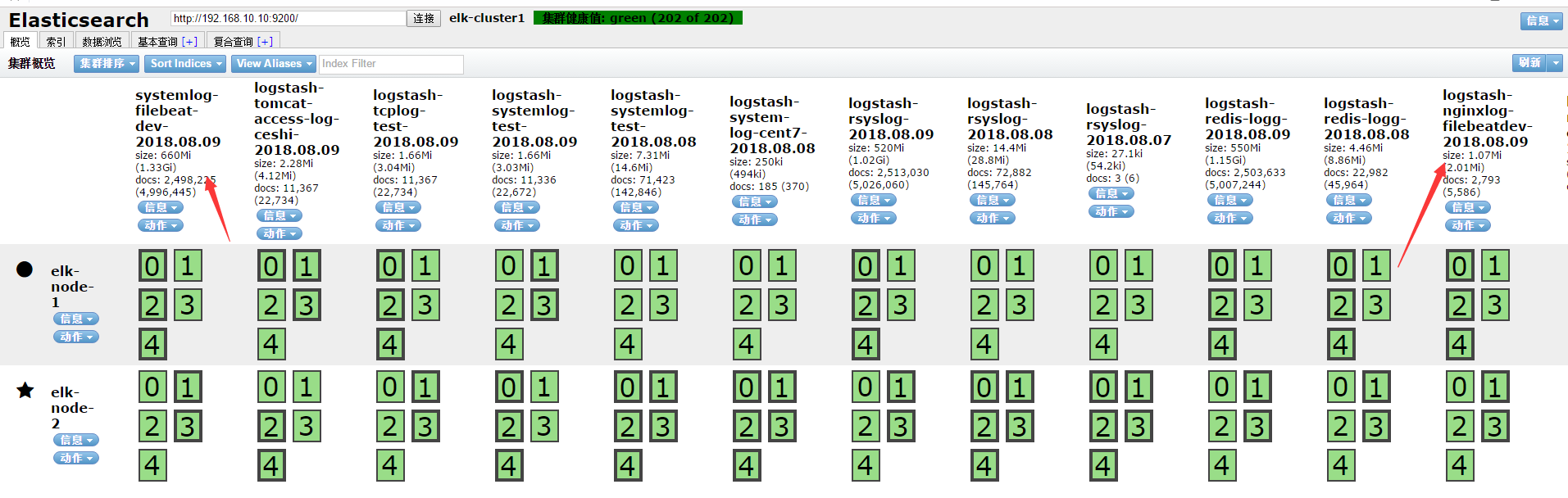

3.1.2 Verify with elasticsearch-head