1 Service Concepts

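Applications running in Pods, such as nginx, tomcat and etcd, are daemons that serve clients. Pods are resource objects managed by controllers and they have a lifecycle. Once a Pod is interrupted, voluntarily or involuntarily, it can only be replaced by a newly built Pod object; a Pod itself cannot be revived. Under this dynamic, elastic management model, a Service gives such Pods a fixed, unified access point plus load balancing. Did all of that go over your head?

Put simply: Pods have a lifecycle. They are destroyed and recreated, so on their own they cannot offer clients a stable access point. To provide that stable access point, and to let a group of identical Pods share the workload, Kubernetes introduced the Service resource. A Service gives a group of Pods a fixed access point and load balancing, much like Alibaba Cloud's load balancer or LVS.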

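Note that both addresses are reachable only from inside the cluster: the Service IP is a virtual address and the Pod IP is a Pod address. Neither can receive traffic from outside the cluster. There are several ways around this:

- Expose a port on a single node (hostPort).
- Let the Pod share the node's network namespace (hostNetwork).
- Use a Service of type NodePort or LoadBalancer.
- Use an Ingress resource, which adds layer-7 load balancing.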
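The two node-level options mentioned above (hostPort and hostNetwork) look roughly like this in a Pod manifest. This is for illustration only. The Pod names and the nginx:alpine image are hypothetical. Both approaches tie clients to whichever node the Pod happens to run on, and that is exactly the limitation a Service removes.

```yaml
# Option 1: hostPort. Port 8080 on the node is forwarded to port 80 of the container.
apiVersion: v1
kind: Pod
metadata:
  name: demo-hostport          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:alpine        # hypothetical image choice
    ports:
    - containerPort: 80
      hostPort: 8080
---
# Option 2: hostNetwork. The Pod shares the node's network namespace,
# so nginx listens directly on port 80 of the node's own IP addresses.
apiVersion: v1
kind: Pod
metadata:
  name: demo-hostnetwork       # hypothetical name
spec:
  hostNetwork: true
  containers:
  - name: nginx
    image: nginx:alpine
```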

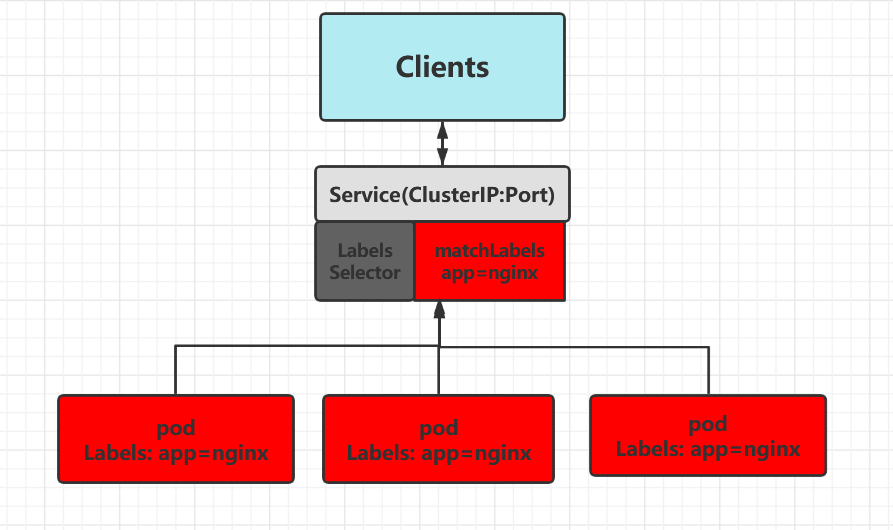

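The Service is one of Kubernetes' core resource types. A Service uses a label selector to group a set of Pods into one logical unit. It accepts requests on its own IP address and port and proxies them to the Pods in that group, as shown in the figure. The client never sees the real Pods that handle its requests, so from the client's side it looks as if the Service itself handled and answered each request. Rather like a load balancer, isn't it?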
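Like many load balancers, a Service can also pin a client to a single backend. Here is a minimal sketch that assumes a hypothetical Service named web-sticky in front of Pods labeled app=web. With sessionAffinity set to ClientIP, requests from the same client IP keep going to the same Pod until the timeout expires. The default value, None, means there is no stickiness.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-sticky             # hypothetical name
spec:
  selector:
    app: web                   # hypothetical Pod label
  sessionAffinity: ClientIP    # default is None
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 3600     # stickiness window; Kubernetes defaults to 10800 (3 hours)
  ports:
  - name: http
    port: 80
    targetPort: 80
```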

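A Service's IP address is also called its Cluster IP. It is a virtual IP address taken from the dedicated range configured for the cluster's Services. It stays the same for as long as the Service exists, and any Pod in the same cluster can reach it. The Service port accepts client requests and forwards them to the matching port of the backend Pod application. This mechanism is also called port proxying, and it works at the transport layer of the TCP/IP stack.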
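Here is a sketch that makes the port mapping concrete. The names web-svc and web-0 and the port name web-http are hypothetical. Clients connect to the Service's ClusterIP on port 8080, and the proxy forwards the traffic to port 80 of the selected Pod. targetPort can also refer to a container port by name, so the Pod can change its port number without any edit to the Service.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-svc                # hypothetical name
spec:
  selector:
    app: web                   # matches the Pod label below
  ports:
  - name: http
    protocol: TCP
    port: 8080                 # port clients use on the ClusterIP
    targetPort: web-http       # resolved to the container port with this name (80)
---
apiVersion: v1
kind: Pod
metadata:
  name: web-0                  # hypothetical name
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: nginx:alpine
    ports:
    - name: web-http           # port names: lowercase alphanumerics and '-', at most 15 characters
      containerPort: 80
```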

2 kube-proxy Proxy Models

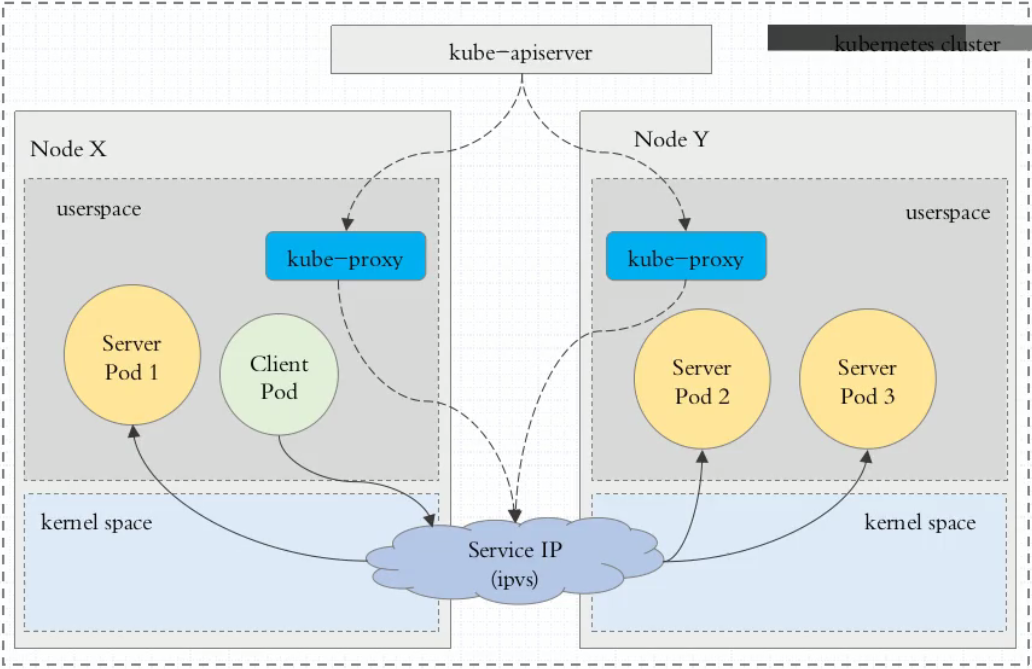

Every Node in a Kubernetes cluster runs a kube-proxy process. kube-proxy implements a virtual IP (VIP) for every Service type except ExternalName. The proxy modes arrived over several releases:

- In Kubernetes v1.0, the proxy ran entirely in userspace.
- v1.1 added the iptables proxy, but not as the default mode.
- From v1.2 onward, iptables has been the default.
- Kubernetes v1.8.0-beta.0 added the ipvs proxy.

In v1.0 a Service was a "layer 4" (TCP/UDP over IP) concept. v1.1 added the Ingress API (beta) to represent "layer 7" (HTTP) services.

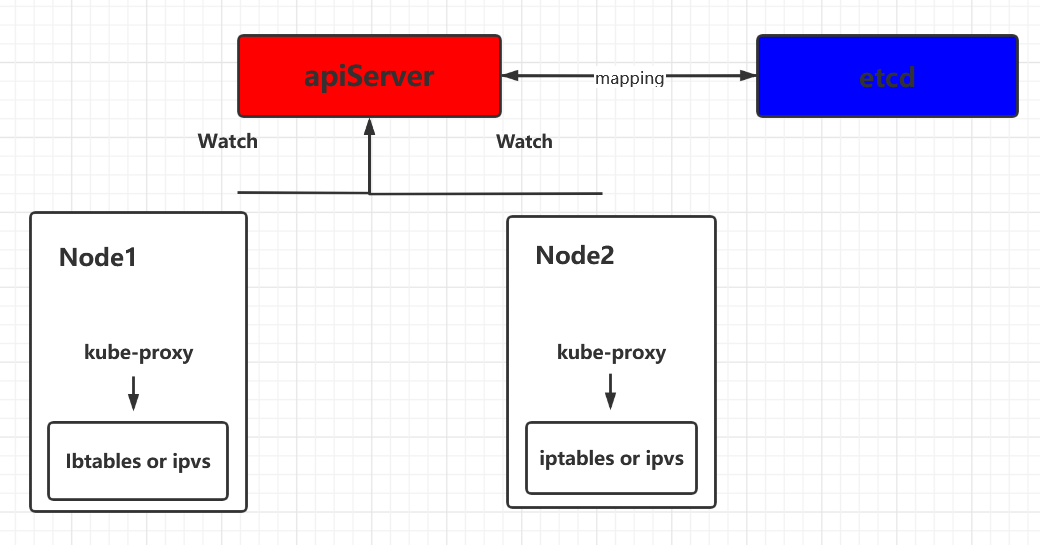

kube-proxy constantly watches the API server for changes to Service resources. Whenever a Service is created or changed, kube-proxy translates that change into traffic-scheduling rules (for example iptables or ipvs rules) on the local node.

How kube-proxy works

2.1 userspace proxy mode

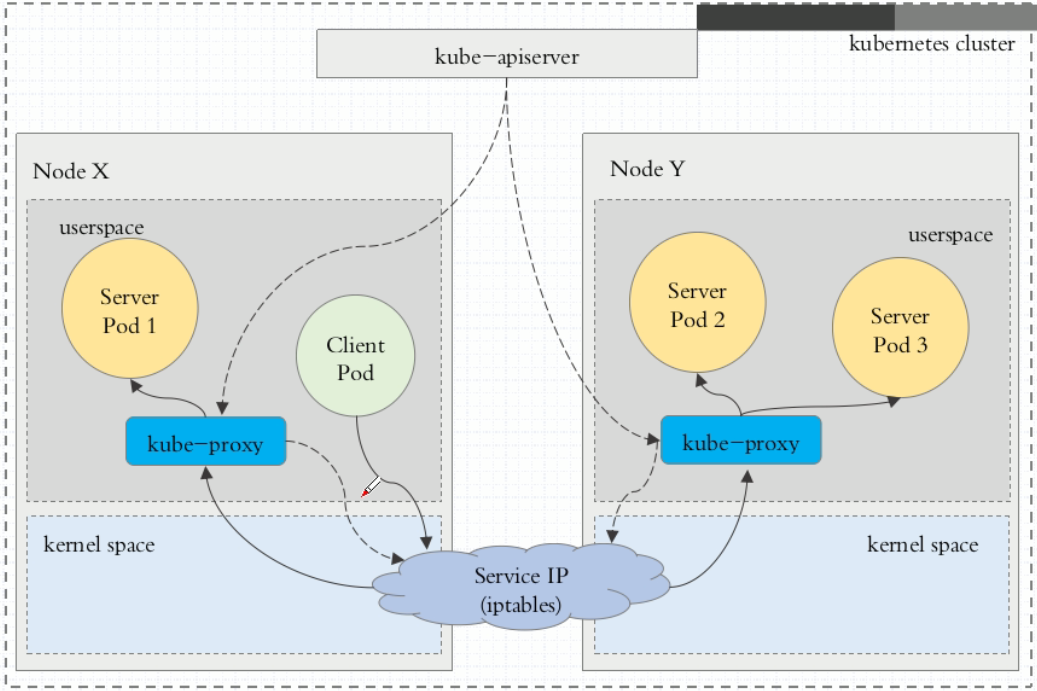

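In this mode, a client Pod's request to the Service IP first hits the Service's iptables rules in kernel space. These rules redirect it to a port that kube-proxy listens on in user space. kube-proxy then picks a backend Pod and opens a connection to it, so the traffic goes back through kernel space before it reaches one of the Service's Pods on some node.

This design has an obvious problem. Every request enters kernel space, climbs up to user space for kube-proxy, and then drops back into the kernel to be delivered to a Pod. The cost of moving traffic in and out of user space is unacceptable. userspace was the default proxy mode up to and including Kubernetes 1.1.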

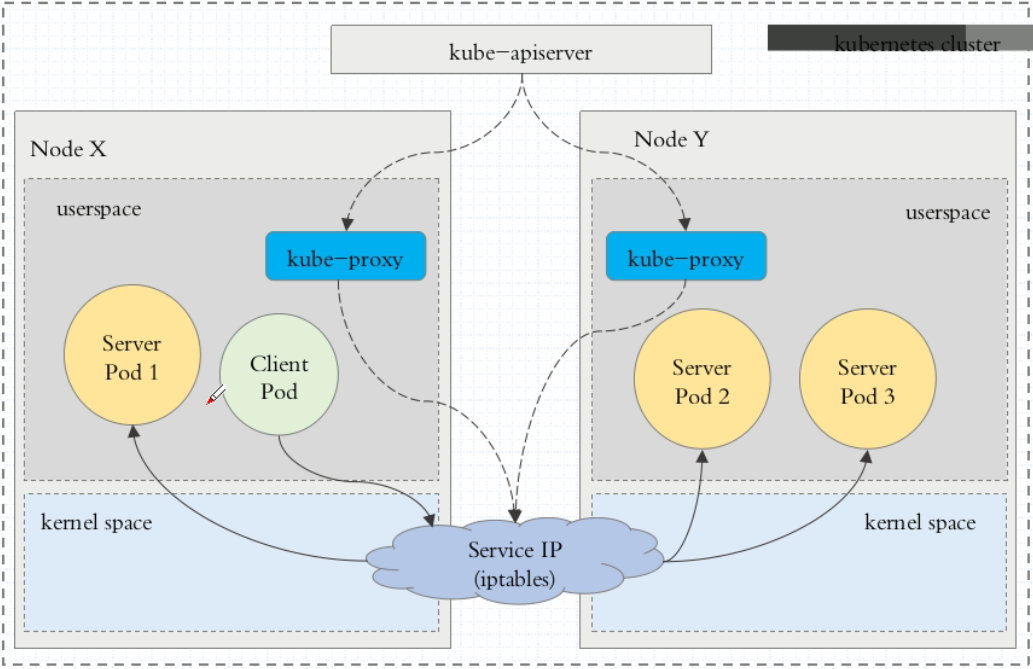

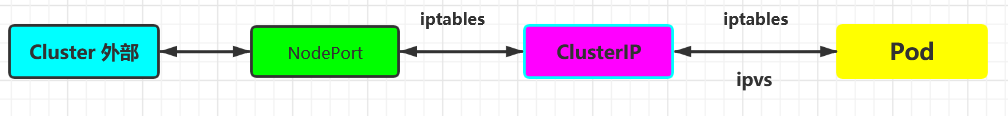

2.2 iptables proxy mode

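A client's request goes straight to the Service IP in the local kernel, and iptables rules forward it directly to one of the Pods. Forwarding relies on iptables NAT, so there is still a noticeable performance cost. Worse, if the cluster has tens of thousands of Services/Endpoints, the iptables rule set on every Node becomes huge and performance drops further. The iptables mode was introduced in Kubernetes 1.1 and became the default in 1.2.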

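2.3 ipvs proxy mode

The ipvs proxy mode arrived as alpha in Kubernetes 1.8, reached beta in 1.9 and became generally available (GA) in 1.11. iptables remains kube-proxy's default mode on Linux. When a client request reaches kernel space, ipvs rules dispatch it directly to a Pod. kube-proxy watches Service and Endpoints objects and calls the netlink interface to create matching ipvs rules. It also resyncs those rules with the Service and Endpoints objects periodically, so the ipvs state stays as desired. When the Service is accessed, traffic is redirected to one of the backend Pods.

Like iptables, ipvs is built on netfilter hooks, but it works in kernel space with hash tables as its underlying data structure. As a result, ipvs redirects traffic faster and syncs proxy rules more efficiently. ipvs also offers more load-balancing algorithms, for example: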

- rr: round robin
- lc: least connection
- dh: destination hashing
- sh: source hashing
- sed: shortest expected delay
- nq: never queue

Note: ipvs mode assumes the IPVS kernel modules are installed on the node before kube-proxy runs. When kube-proxy starts in ipvs mode, it checks for the IPVS modules. If they are missing, it falls back to iptables mode.
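Here is a minimal sketch of switching to ipvs and picking one of the schedulers listed above. It assumes kube-proxy reads its settings from a KubeProxyConfiguration file, either passed with --config or stored in the kube-proxy ConfigMap in kube-system on kubeadm-built clusters. The kube-proxy Pods must be restarted to pick up the change. The matching kernel modules (for example ip_vs and ip_vs_rr) must also be loaded, or kube-proxy falls back to iptables as the note says.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"        # left empty, Linux uses the default "iptables"
ipvs:
  scheduler: "rr"   # any scheduler from the list above, e.g. "lc", "sh", "sed"
```

On a node, `ipvsadm -Ln` then lists one virtual server for each Service IP:port, with the Pod IPs as its real servers.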

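Suppose a Service's backends change, for example because one more Pod now matches the label selector. The change shows up in the API server immediately. kube-proxy sees it through its watch on the API server (which keeps the data in etcd) and turns it straight into ipvs or iptables rules. All of this is dynamic and happens in real time, and deleting a Pod works the same way. As shown in the figure: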

3 Defining a Service

3.1 Creating a Service from a manifest

[root@master ~]# kubectl explain svc
KIND:     Service
VERSION:  v1

DESCRIPTION:
     Service is a named abstraction of software service (for example, mysql)
     consisting of local port (for example 3306) that the proxy listens on, and
     the selector that determines which pods will answer requests sent through
     the proxy.

FIELDS:
   apiVersion   <string>
     APIVersion defines the versioned schema of this representation of an
     object. Servers should convert recognized schemas to the latest internal
     value, and may reject unrecognized values. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

   kind   <string>
     Kind is a string value representing the REST resource this object
     represents. Servers may infer this from the endpoint the client submits
     requests to. Cannot be updated. In CamelCase. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

   metadata   <Object>
     Standard object's metadata. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

   spec   <Object>
     Spec defines the behavior of a service.
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

   status   <Object>
     Most recently observed status of the service. Populated by the system.
     Read-only. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

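The four key fields are:

apiVersion:
kind:
metadata:
spec:
  clusterIP:   # set it explicitly or let Kubernetes allocate one
  ports:       # mapped to the ports of the backend containers
  selector:    # which Pods the Service attaches to
  type:        # the Service type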
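Putting the fields above together gives a skeleton Service manifest like the one below. The names and port 8080 are hypothetical. The explicit clusterIP assumes the 10.254.0.0/16 Service range that this cluster appears to use. Omit clusterIP to let Kubernetes allocate an address.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-svc                 # hypothetical name
  namespace: default
spec:
  type: ClusterIP              # ClusterIP (default), NodePort, LoadBalancer or ExternalName
  clusterIP: 10.254.100.100    # optional; must be an unused address inside the Service CIDR
  selector:
    app: my-app                # Pods carrying this label become the backends
  ports:
  - name: http
    protocol: TCP
    port: 80                   # port exposed on the ClusterIP
    targetPort: 8080           # port the backend containers listen on
```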

3.2 Service types

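Some parts of an application (such as a frontend) may need to be exposed on an IP address that is reachable from outside the Kubernetes cluster.

Kubernetes ServiceTypes let you choose the kind of Service you need. The default is ClusterIP.

The possible values of type and their behavior:

- ClusterIP: exposes the Service on a cluster-internal IP, so it can only be reached from inside the cluster. This is the default ServiceType.
- NodePort: exposes the Service on each Node's IP at a static port (the NodePort). A NodePort Service routes to a ClusterIP Service, which is created automatically. From outside the cluster, you reach a NodePort Service by requesting NodeIP:NodePort.
- LoadBalancer: exposes the Service externally through a cloud provider's load balancer. The external load balancer routes to the NodePort and ClusterIP Services.
- ExternalName: maps the Service to the contents of the externalName field (for example foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up. Only kube-dns in Kubernetes 1.7 or later supports this.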
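The two types that get no demo below can be sketched as follows. The names web-lb and external-db are hypothetical. The LoadBalancer Service only receives an external IP if the cluster has a cloud provider (or another load-balancer controller) to provide one. Without one, its EXTERNAL-IP stays pending. The ExternalName Service reuses the foo.bar.example.com example from the list above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-lb                 # hypothetical name
spec:
  type: LoadBalancer           # a NodePort and a ClusterIP are allocated as well
  selector:
    app: web                   # hypothetical Pod label
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: external-db            # hypothetical name
  namespace: default
spec:
  type: ExternalName
  externalName: foo.bar.example.com   # DNS answers with a CNAME to this name; no proxying, no selector
```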

3.2.1 ClusterIP Service demo:

[root@master ~]# cat Svc_ClusterIP.yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-clusterip
  namespace: default
spec:
  selector:                # label selector
    app: nginx-pod         # selects Pods labeled app=nginx-pod
  type: ClusterIP          # Service type: ClusterIP
  ports:
  - name: http
    port: 80               # port the Service listens on
    targetPort: 80         # port exposed by the Pod's container

[root@master ~]# kubectl get svc --show-labels
NAME            TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE     LABELS
kubernetes      ClusterIP   10.254.0.1       <none>        443/TCP        3d5h    component=apiserver,provider=kubernetes
svc-clusterip   ClusterIP   10.254.160.167   <none>        80/TCP         3m16s   <none>
svc-demo        NodePort    10.254.0.200     <none>        80:30001/TCP   6h18m   <none>

[root@master ~]# kubectl describe svc svc-clusterip
Name:              svc-clusterip
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=nginx-pod
Type:              ClusterIP
IP Families:       <none>
IP:                10.254.160.167
IPs:               10.254.160.167
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         172.7.166.146:80
Session Affinity:  None
Events:            <none>

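The demo shows that a Service does not point at Pods directly. It points at an Endpoints resource, which is a list of IP address and port pairs, and the Endpoints object is what ties the Service to the Pods.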
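For reference, here is roughly what the control plane's Endpoints object for svc-clusterip looks like in YAML form. It is trimmed by hand: real output (for example from kubectl get endpoints svc-clusterip -o yaml) also includes fields such as nodeName, targetRef and resourceVersion. The object has the same name as the Service, and that shared name is what links the two. Section 3.2.4 relies on this when it creates an Endpoints object by hand.

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: svc-clusterip          # same name as the Service
  namespace: default
subsets:
- addresses:
  - ip: 172.7.166.146          # ready Pod matched by app=nginx-pod
  ports:
  - name: http                 # same name as the Service port
    port: 80
    protocol: TCP
```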

[root@master ~]# kubectl get ep
NAME            ENDPOINTS                         AGE
kubernetes      192.168.1.242:6443                3d5h
svc-clusterip   172.7.166.146:80                  7m50s
svc-demo        172.7.104.7:80,172.7.166.147:80   6h23m

[root@master ~]# kubectl describe ep svc-clusterip
Name:         svc-clusterip
Namespace:    default
Labels:       <none>
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2021-08-06T11:27:13Z
Subsets:
  Addresses:          172.7.166.146
  NotReadyAddresses:  <none>    # IPs of Pods whose readinessProbe has not passed go here; they receive no traffic
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>

Let's verify that a Pod whose readiness probe is not ready receives no traffic:

kind: Service
apiVersion: v1
metadata:
  name: services-readiness-demo
  namespace: default
spec:
  selector:
    app: demoapp-with-readiness
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demoapp2
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demoapp-with-readiness
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: demoapp-with-readiness
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.0
        name: demoapp
        imagePullPolicy: IfNotPresent
        readinessProbe:
          httpGet:
            path: '/readyz'
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 10

[root@master ~]# kubectl apply -f svc-redness.yaml
service/services-readiness-demo created
deployment.apps/demoapp2 created

[root@master ~]# kubectl describe ep services-readiness-demo
Name:         services-readiness-demo
Namespace:    default
Labels:       <none>
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2021-08-06T12:09:24Z
Subsets:
  Addresses:          172.7.166.148,172.7.166.149    # both backend Pods are ready endpoints and can receive traffic
  NotReadyAddresses:  <none>
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>

[root@master ~]# curl -XPOST -d 'readyz=failed' http://10.254.250.237/readyz    # sent via the Service; flips the readiness state of the Pod that receives it

[root@master ~]# kubectl describe ep services-readiness-demo
Name:         services-readiness-demo
Namespace:    default
Labels:       <none>
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2021-08-06T12:12:24Z
Subsets:
  Addresses:          172.7.166.149
  NotReadyAddresses:  172.7.166.148    # one Pod has been removed from the ready endpoints
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>

[root@master ~]# kubectl get pod
NAME                        READY   STATUS    RESTARTS   AGE
demoapp2-677db795b4-nhdrv   1/1     Running   0          5m26s
demoapp2-677db795b4-nx64n   0/1     Running   0          5m26s

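As soon as a Service is created, a resource record for it is added to the cluster DNS, and the name resolves right away. The record format is SVC_NAME.NS_NAME.DOMAIN.LTD., where DOMAIN.LTD. stands for the cluster's Service domain suffix.

The cluster's default Service domain suffix: svc.cluster.local.

The A record created for this Service: svc-clusterip.default.svc.cluster.local.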

[root@master ~]# kubectl exec -it mypod -- sh
[root@mypod /]# curl svc-clusterip
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>

[root@mypod /]# cat /etc/resolv.conf
nameserver 10.254.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
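The search list and ndots:5 explain why the bare name svc-clusterip resolves. A name with fewer than 5 dots is tried with each search suffix in turn before it is tried as-is, so svc-clusterip.default.svc.cluster.local is queried first. The downside is that external names such as www.example.com also go through the whole search list before the plain name is tried. If that extra DNS traffic matters, a Pod can lower ndots. Here is a sketch with a hypothetical Pod name and image:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-ndots            # hypothetical name
spec:
  dnsConfig:
    options:
    - name: ndots
      value: "2"               # names with 2 or more dots are tried as absolute names first
  containers:
  - name: app
    image: busybox:1.36        # hypothetical image choice
    command: ["sleep", "3600"]
```

With the default dnsPolicy (ClusterFirst), these options are merged into the resolv.conf that Kubernetes generates for the Pod.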

3.2.2 NodePort Service demo:

NodePort means a port on the node. When a Kubernetes cluster is deployed, a port range is normally reserved for NodePorts, by default 30000-32767. A NodePort Service must set .spec.type explicitly.

kind: Service
apiVersion: v1
metadata:
  name: demoapp-nodeport-svc
spec:
  type: NodePort
  clusterIP: 10.254.56.1
  selector:
    app: nginx-ds
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31398
  # externalTrafficPolicy: Local   # deliver external traffic only to Pods on the node that received it

[root@master ~]# kubectl apply -f svc_nodeport.yaml
service/demoapp-nodeport-svc created

[root@master ~]# kubectl get svc
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
demoapp-nodeport-svc   NodePort    10.254.56.1      <none>        80:31398/TCP   4s
kubernetes             ClusterIP   10.254.0.1       <none>        443/TCP        3d5h
svc-clusterip          ClusterIP   10.254.160.167   <none>        80/TCP         33m
svc-demo               NodePort    10.254.0.200     <none>        80:30001/TCP   6h48m

[root@master ~]# curl 192.168.1.243:31398
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>

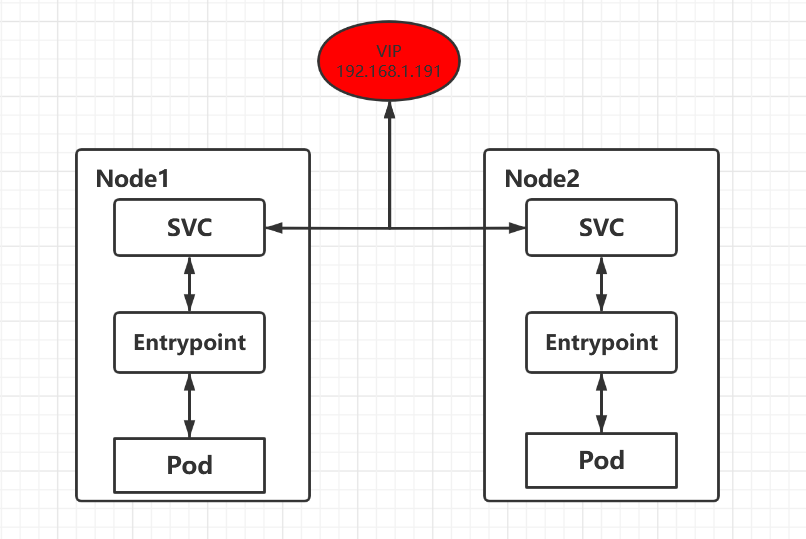
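The commented-out externalTrafficPolicy line in the manifest above changes how NodePort traffic is handled. Here is a sketch of the same Service with that line enabled; it is illustrative, not a manifest applied in this demo. With Local, a node forwards only to backend Pods running on that node and drops the traffic if it has none, but the Pods see the real client source IP. With the default, Cluster, any node forwards to any Pod, and the client source IP is replaced by a SNAT address.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: demoapp-nodeport-svc
spec:
  type: NodePort
  clusterIP: 10.254.56.1
  externalTrafficPolicy: Local   # default: Cluster
  selector:
    app: nginx-ds
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31398
```

With Local, curl 192.168.1.243:31398 only succeeds if an nginx-ds Pod is running on 192.168.1.243 itself.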

3.2.3 Externalip

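Used for internal services: traffic that reaches the specified IP on the Service port is forwarded to the Service just as traffic to its ClusterIP would be. Kubernetes does not manage the IP itself, so the IP must be routed to a node. This is often done together with LVS.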

[root@master service]# cat externalip.yaml
kind: Service
apiVersion: v1
metadata:
  name: demoapp-externalip-svc
  namespace: default
spec:
  type: ClusterIP
  selector:
    app: demoapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  externalIPs:
  - 192.168.1.191

[root@master service]# kubectl apply -f externalip.yaml
service/demoapp-externalip-svc configured
[root@master service]# ip address add 192.168.1.191 dev eth0

[root@master service]# kubectl get svc
NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP     PORT(S)   AGE
demoapp-externalip-svc    ClusterIP   10.254.4.145     192.168.1.191   80/TCP    2m19s
kubernetes                ClusterIP   10.254.0.1       <none>          443/TCP   4d
services-readiness-demo   ClusterIP   10.254.250.237   <none>          80/TCP    18h

[root@master service]# curl 192.168.1.191
iKubernetes demoapp v1.0 !! ClientIP: 172.7.219.64, ServerName: demoapp2-677db795b4-nhdrv, ServerIP: 172.7.166.149!

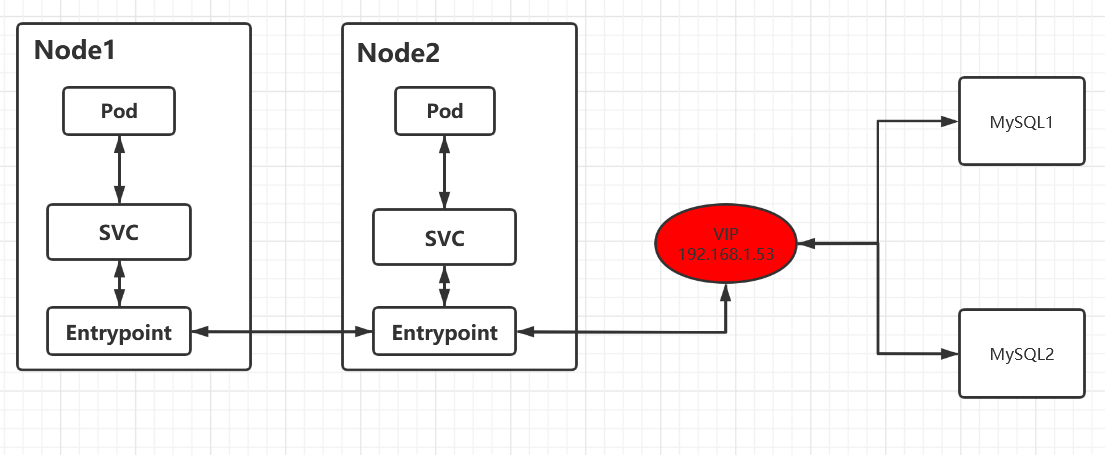

3.2.4 Endpoints

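A hand-made Endpoints object gives a resource outside the cluster an entry point for in-cluster traffic. Such a resource is not a cluster object and carries no labels, so a Service cannot select it with a label selector.

Instead, create an Endpoints object with the same name as the Service and list the IP addresses in it. The external resource cannot be checked by a readiness probe, so if it fails you must remove its address from the Endpoints object by hand.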

apiVersion: v1
kind: Endpoints
metadata:
  name: mysql-external
  namespace: default
subsets:
- addresses:
  - ip: 192.168.1.53     # a list; add one "- ip:" entry per backend
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-external
  namespace: default
spec:
  type: ClusterIP
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
    protocol: TCP

[root@master service]# kubectl apply -f mysql_endpoint.yaml
endpoints/mysql-external created
service/mysql-external created

[root@master service]# kubectl get svc
NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
mysql-external   ClusterIP   10.254.85.148   <none>        3306/TCP   6s

[root@master service]# kubectl describe svc mysql-external
Name:              mysql-external
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP Families:       <none>
IP:                10.254.85.148
IPs:               10.254.85.148
Port:              mysql  3306/TCP
TargetPort:        3306/TCP
Endpoints:         192.168.1.53:3306
Session Affinity:  None
Events:            <none>

[root@master service]# kubectl describe endpoints mysql-external
Name:         mysql-external
Namespace:    default
Labels:       <none>
Annotations:  <none>
Subsets:
  Addresses:          192.168.1.53
  NotReadyAddresses:  <none>
  Ports:
    Name   Port  Protocol
    ----   ----  --------
    mysql  3306  TCP

Events:  <none>

[root@master service]# mysql -h 10.254.85.148 -u root -p    # test the connection
Enter password:
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 7
Server version: 5.5.68-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

3.2.5 Headless service

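Sometimes you do not need or want load balancing or a separate Service IP. In that case, create a headless Service by setting the Cluster IP (spec.clusterIP) to "None".

A headless Service gets no Cluster IP. kube-proxy does not handle it, and the platform does no load balancing or routing for it. How DNS is configured automatically depends on whether the Service defines a selector.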

[root@k8s-master mainfests]# vim myapp-svc-headless.yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-headless
  namespace: default
spec:
  selector:
    app: myapp
    release: canary
  clusterIP: "None"    # a headless Service sets clusterIP to None
  ports:
  - port: 80
    targetPort: 80

[root@master service]# kubectl apply -f headless_service.yaml
service/myapp-headless created

[root@master service]# kubectl get svc
NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
myapp-headless   ClusterIP   None         <none>        80/TCP    5s

[root@master service]# kubectl get pod -l app=demoapp -o wide
NAME                        READY   STATUS    RESTARTS   AGE     IP              NODE    NOMINATED NODE   READINESS GATES
demoapp2-677db795b4-9vf7x   1/1     Running   0          3d21h   172.7.104.25    node2   <none>           <none>
demoapp2-677db795b4-nhdrv   1/1     Running   1          4d15h   172.7.166.177   node1   <none>           <none>

[root@master service]# dig -t A myapp-headless.default.svc.cluster.local. @10.254.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.5 <<>> -t A myapp-headless.default.svc.cluster.local. @10.254.0.10
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 45241
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;myapp-headless.default.svc.cluster.local. IN A

;; ANSWER SECTION:
myapp-headless.default.svc.cluster.local. 30 IN A 172.7.104.25
myapp-headless.default.svc.cluster.local. 30 IN A 172.7.166.177

;; Query time: 1 msec
;; SERVER: 10.254.0.10#53(10.254.0.10)
;; WHEN: Wed Aug 11 11:11:15 CST 2021
;; MSG SIZE  rcvd: 181
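The sentence at the start of this subsection says that DNS behavior depends on whether the Service defines a selector. The sketch below covers the no-selector case by combining a headless Service with the manual Endpoints technique from 3.2.4. The name legacy-db-headless is hypothetical, and the IP reuses the MySQL host from that example. Kubernetes generates no Endpoints for a headless Service without a selector, so you supply one with the same name. The Service's DNS name should then resolve straight to 192.168.1.53 rather than to a Cluster IP.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: legacy-db-headless     # hypothetical name
  namespace: default
spec:
  clusterIP: None              # headless: no virtual IP, no kube-proxy rules
  ports:                       # no selector, so Kubernetes creates no Endpoints for it
  - name: mysql
    port: 3306
    targetPort: 3306
---
apiVersion: v1
kind: Endpoints
metadata:
  name: legacy-db-headless     # must match the Service name
  namespace: default
subsets:
- addresses:
  - ip: 192.168.1.53
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
```

A query like the one above, dig -t A legacy-db-headless.default.svc.cluster.local. @10.254.0.10, would then be expected to return that address.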

Project example

Mysql -> endpoint -> svc -> pod -> endpoint -> svc

Mysql Svc

[root@master service]# cat mysql_endpoint.yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: mysql
  namespace: default
subsets:
- addresses:
  - ip: 192.168.1.53
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: default
spec:
  type: ClusterIP
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
    protocol: TCP

Wordpress Pod

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  containers:
  - name: nginx-proxy
    image: rongshangtianxia/nginx:proxy
    ports:
    - containerPort: 80
      hostPort: 80
    readinessProbe:
      httpGet:
        path: /wp-login.php
        port: 80
        scheme: HTTP
    env:
    - name: HOST
      value: "127.0.0.1"
    - name: PORT
      value: "9000"
  - name: wordpress
    image: wordpress:php7.4-fpm-alpine
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 9000
      hostPort: 9000
    livenessProbe:
      tcpSocket:
        port: 9000
    env:
    - name: WORDPRESS_DB_HOST
      value: "mysql"
    - name: WORDPRESS_DB_USER
      value: "root"
    - name: WORDPRESS_DB_PASSWORD
      value: "123456"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"

Wordpress Svc

apiVersion: v1
kind: Service
metadata:
  name: wp-svc
spec:
  type: NodePort
  selector:
    app: wordpress
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31001
  externalTrafficPolicy: Local    # send external traffic only to Pods on the receiving node (keeps the client source IP)

Mysql -> wordpress php -> wp-php-svc -> wordpress nginx -> wp-nginx-svc Nodeport

Mysql Svc

Same as the mysql Endpoints and Service in mysql_endpoint.yaml above.

wordpress php pod

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-php
  labels:
    app: wordpress-php        # must match the selector of wp-php-svc
spec:
  containers:
  - name: wordpress
    image: wordpress:php7.4-fpm-alpine
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 9000
      hostPort: 9000
    livenessProbe:
      tcpSocket:
        port: 9000
    env:
    - name: WORDPRESS_DB_HOST
      value: "mysql"
    - name: WORDPRESS_DB_USER
      value: "root"
    - name: WORDPRESS_DB_PASSWORD
      value: "123456"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"

wordpress php svc

[root@master service]# cat wordpress-php-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: wp-php-svc
spec:
  type: ClusterIP
  selector:
    app: wordpress-php
  ports:
  - name: http
    protocol: TCP
    port: 9000
    targetPort: 9000

wordpress nginx pod

[root@master service]# cat wordpress-nginx.yaml
apiVersion: v1
kind: Pod
metadata:
  name: wordpress-nginx
  labels:
    app: wordpress-nginx
spec:
  containers:
  - name: nginx-proxy
    image: rongshangtianxia/nginx:proxy
    ports:
    - containerPort: 80
      hostPort: 80
    readinessProbe:
      httpGet:
        path: /wp-login.php
        port: 80
        scheme: HTTP
    env:
    - name: HOST
      value: "wp-php-svc"
    - name: PORT
      value: "9000"

Wordpress Nginx Svc NodePort

[root@master service]# cat wordpress-nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: wp-nginx-svc
spec:
  type: NodePort
  selector:
    app: wordpress-nginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31001