Train a seq2seq model:

parlai train_model --task cornell_movie --model seq2seq --model-file tmp/model_s2s --batchsize 8 --rnn-class gru --hiddensize 200 --numlayers 2 --bidirectional True --attention dot --attention-time post --lookuptable enc_dec --num-epochs 6 --optimizer adam --learningrate 1e-3

Local interactive mode:

parlai interactive --model-file zoo:dodecadialogue/empathetic_dialogues_ft/model --inference beam --beam-size 5 --beam-min-length 10 --beam-block-ngram 3 --beam-context-block-ngram 3

Convert a dataset to ParlAI format:

python parlai/scripts/convert_data_to_parlai_format.py --task cornell_movie --outfile tmp.txt

Build the dictionary

parlai build_dict -t cornell_movie --dict-file temp_dict_by_nltk.txt --dict-lower True --dict-tokenizer nltk
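To make the effect of `--dict-lower True` concrete, here is a minimal sketch of a lower-cased frequency dictionary. It does not use ParlAI: `build_frequency_dict` is a hypothetical helper, and a simple regex split stands in for the nltk tokenizer, so the token boundaries may differ from what `build_dict` actually produces.

```python
import re
from collections import Counter


def build_frequency_dict(lines, lower=True):
    """Count token frequencies; a regex split stands in for the nltk tokenizer."""
    counts = Counter()
    for line in lines:
        if lower:
            line = line.lower()
        # Words become one token each; every punctuation mark is its own token.
        counts.update(re.findall(r"\w+|[^\w\s]", line))
    return counts


corpus = ["Hello there!", "hello again, there."]
freq = build_frequency_dict(corpus)
for token, count in freq.most_common():
    print(f"{token}\t{count}")
```

With lower-casing on, "Hello" and "hello" are counted as the same entry; with `lower=False` they stay separate.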

Message passing process:

query = teacher.act()

student.observe(query)

reply = student.act()

teacher.observe(reply)
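The four calls above can be tried out with two toy agents. This is only a sketch: `EchoTeacher`, `UppercaseStudent`, and `parley` are hypothetical names written for this note and are not ParlAI classes; they just follow the same act/observe order.

```python
class EchoTeacher:
    """Hypothetical teacher: asks fixed questions and records replies."""

    def __init__(self, questions):
        self.questions = list(questions)
        self.index = 0
        self.replies = []

    def act(self):
        # Emit the next query as a message dict.
        text = self.questions[self.index]
        self.index += 1
        done = self.index == len(self.questions)
        return {"id": "teacher", "text": text, "episode_done": done}

    def observe(self, reply):
        # Store the student's reply (a real teacher would score it here).
        self.replies.append(reply["text"])


class UppercaseStudent:
    """Hypothetical student: replies with the upper-cased query."""

    def __init__(self):
        self.last_observation = None

    def observe(self, query):
        self.last_observation = query

    def act(self):
        return {"id": "student", "text": self.last_observation["text"].upper()}


def parley(teacher, student):
    """One exchange, in the same order as the four calls above."""
    query = teacher.act()
    student.observe(query)
    reply = student.act()
    teacher.observe(reply)
    return query, reply


teacher = EchoTeacher(["hello", "how are you"])
student = UppercaseStudent()
exchanges = [parley(teacher, student) for _ in range(2)]
print(teacher.replies)
```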

Print the call stack in Python

import traceback

traceback.print_stack()
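A small self-contained sketch of how this is used inside a function you want to trace. The functions `inner` and `outer` are made up for illustration; the stack is written to a string buffer through the `file` argument so it can be inspected, whereas a bare `traceback.print_stack()` writes to stderr.

```python
import io
import traceback


def inner():
    # Write the current call stack into a buffer instead of stderr.
    buffer = io.StringIO()
    traceback.print_stack(file=buffer)
    return buffer.getvalue()


def outer():
    return inner()


stack_text = outer()
print(stack_text)
```

The frames are listed from the outermost caller down to the function that called `print_stack`, which is the same order as the stack dumps in these notes.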

list of metrics

accuracy: Exact match text accuracy

bleu-4: BLEU-4 of the generation, under a standardized (model-independent) tokenizer

clen: Average length of context in number of tokens

clip: Fraction of batches with clipped gradients

ctpb: Context tokens per batch

ctps: Context tokens per second

ctrunc: Fraction of samples with some context truncation

ctrunclen: Average length of context tokens truncated

exps: Examples per second

exs: Number of examples processed since last print

f1: Unigram F1 overlap, under a standardized (model-independent) tokenizer

gnorm: Gradient norm

gpu_mem: Fraction of GPU memory used. May slightly underestimate true value.

hits@1: Fraction of correct choices in 1 guess. (Similar to recall@K)

hits@5: Fraction of correct choices in 5 guesses. (Similar to recall@K)

interdistinct-1: Fraction of n-grams unique across all generations

interdistinct-2: Fraction of n-grams unique across all generations

intradictinct-2: Fraction of n-grams unique within each utterance

intradistinct-1: Fraction of n-grams unique within each utterance

jga: Joint Goal Accuracy

llen: Average length of label in number of tokens

loss: Loss

lr: The most recent learning rate applied

ltpb: Label tokens per batch

ltps: Label tokens per second

ltrunc: Fraction of samples with some label truncation

ltrunclen: Average length of label tokens truncated

rouge-1: ROUGE metrics

rouge-2: ROUGE metrics

rouge-L: ROUGE metrics

token_acc: Token-wise accuracy (generative only)

token_em: Utterance-level token accuracy. Roughly corresponds to perfection under greedy search (generative only)

total_train_updates: Number of SGD steps taken across all batches

tpb: Total tokens (context + label) per batch

tps: Total tokens (context + label) per second

ups: Updates per second (approximate)

print(train_report)

{

'exs': SumMetric(400),

'clen': AverageMetric(32.88),

'ctrunc': AverageMetric(0),

'ctrunclen': AverageMetric(0),

'llen': AverageMetric(13.43),

'ltrunc': AverageMetric(0),

'ltrunclen': AverageMetric(0),

'loss': AverageMetric(9.64),

'ppl': PPLMetric(1.536e+04),

'token_acc': AverageMetric(0.1015),

'token_em': AverageMetric(0),

'exps': GlobalTimerMetric(107.3),

'ltpb': GlobalAverageMetric(107.4),

'ltps': GlobalTimerMetric(1441),

'ctpb': GlobalAverageMetric(263.1),

'ctps': GlobalTimerMetric(3527),

'tpb': GlobalAverageMetric(370.5),

'tps': GlobalTimerMetric(4968),

'ups': GlobalTimerMetric(13.41),

'gnorm': GlobalAverageMetric(3.744),

'clip': GlobalAverageMetric(1),

'lr': GlobalAverageMetric(1),

'gpu_mem': GlobalAverageMetric(0.2491),

'total_train_updates': GlobalFixedMetric(50)

}
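Some of the numbers in this report can be cross-checked against each other. The sketch below copies a few values from the printout into a plain dict (it does not touch ParlAI's metric classes) and checks two relations that the definitions suggest: perplexity as the exponential of the average loss, and total tokens as context tokens plus label tokens. The printed values are rounded, so only approximate agreement should be expected.

```python
import math

# Values copied from the printed train_report above.
report = {
    "loss": 9.64,
    "ppl": 1.536e04,
    "ctpb": 263.1,
    "ltpb": 107.4,
    "tpb": 370.5,
    "ctps": 3527,
    "ltps": 1441,
    "tps": 4968,
}

# Perplexity is the exponential of the average token loss.
ppl_from_loss = math.exp(report["loss"])

# Total tokens are context tokens plus label tokens.
tpb_from_parts = report["ctpb"] + report["ltpb"]
tps_from_parts = report["ctps"] + report["ltps"]

print(f"exp(loss) = {ppl_from_loss:.0f} vs ppl = {report['ppl']:.0f}")
print(f"ctpb + ltpb = {tpb_from_parts:.1f} vs tpb = {report['tpb']}")
print(f"ctps + ltps = {tps_from_parts} vs tps = {report['tps']}")
```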

Call stack of batch_act in torch_agent.py

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 939, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 903, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 804, in train_steps

world.parley()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 867, in parley

batch_act = self.batch_act(agent_idx, batch_observations[agent_idx])

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 835, in batch_act

batch_actions = a.batch_act(batch_observation)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/torch_agent.py", line 2128, in batch_act

traceback.print_stack()

print(batch_reply)

[

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(285), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(20), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(10.44), 'ppl': PPLMetric(3.43e+04), 'token_acc': AverageMetric(0), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(10), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(33), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(10.62), 'ppl': PPLMetric(4.108e+04), 'token_acc': AverageMetric(0.06061), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(52), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(4), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(9.659), 'ppl': PPLMetric(1.565e+04), 'token_acc': AverageMetric(0), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(60), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(3), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(8.532), 'ppl': PPLMetric(5076), 'token_acc': AverageMetric(0.3333), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(9), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(24), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(10.06), 'ppl': PPLMetric(2.339e+04), 'token_acc': AverageMetric(0.125), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(14), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(20), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(8.522), 'ppl': PPLMetric(5024), 'token_acc': AverageMetric(0.4), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(5), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(21), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(10.05), 'ppl': PPLMetric(2.314e+04), 'token_acc': AverageMetric(0.1905), 'token_em': AverageMetric(0)}},

{'id': 'Seq2Seq', 'episode_done': False, 'metrics': {'clen': AverageMetric(12), 'ctrunc': AverageMetric(0), 'ctrunclen': AverageMetric(0), 'llen': AverageMetric(3), 'ltrunc': AverageMetric(0), 'ltrunclen': AverageMetric(0), 'loss': AverageMetric(10.15), 'ppl': PPLMetric(2.56e+04), 'token_acc': AverageMetric(0), 'token_em': AverageMetric(0)}}

]
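Each entry of batch_reply carries per-example metrics, which are later combined into the batch-level numbers of the training report. The sketch below shows one way such averages can be pooled, under the assumption that an average metric keeps a numerator and a denominator and that adding two metrics adds both. `SimpleAverageMetric` is a simplified stand-in written for this note, not ParlAI's `AverageMetric`; the correct-token counts are recovered by rounding `token_acc * llen`.

```python
class SimpleAverageMetric:
    """Simplified stand-in for an average metric: keeps numerator and denominator."""

    def __init__(self, numer, denom=1):
        self.numer = numer
        self.denom = denom

    def __add__(self, other):
        # Adding two averages pools their numerators and denominators.
        return SimpleAverageMetric(self.numer + other.numer, self.denom + other.denom)

    def value(self):
        return self.numer / self.denom


# (llen, token_acc) pairs read off the eight entries of batch_reply above.
examples = [(20, 0), (33, 0.06061), (4, 0), (3, 0.3333),
            (24, 0.125), (20, 0.4), (21, 0.1905), (3, 0)]

# Recover the per-example count of correct tokens, then pool across the batch.
per_example = [SimpleAverageMetric(round(acc * llen), llen) for llen, acc in examples]
total = per_example[0]
for metric in per_example[1:]:
    total = total + metric

unweighted = sum(acc for _, acc in examples) / len(examples)
print(f"pooled token_acc = {total.value():.4f}")
print(f"unweighted mean  = {unweighted:.4f}")
```

Pooling weights each example by its label length, so the result differs from the plain mean of the eight per-example accuracies.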

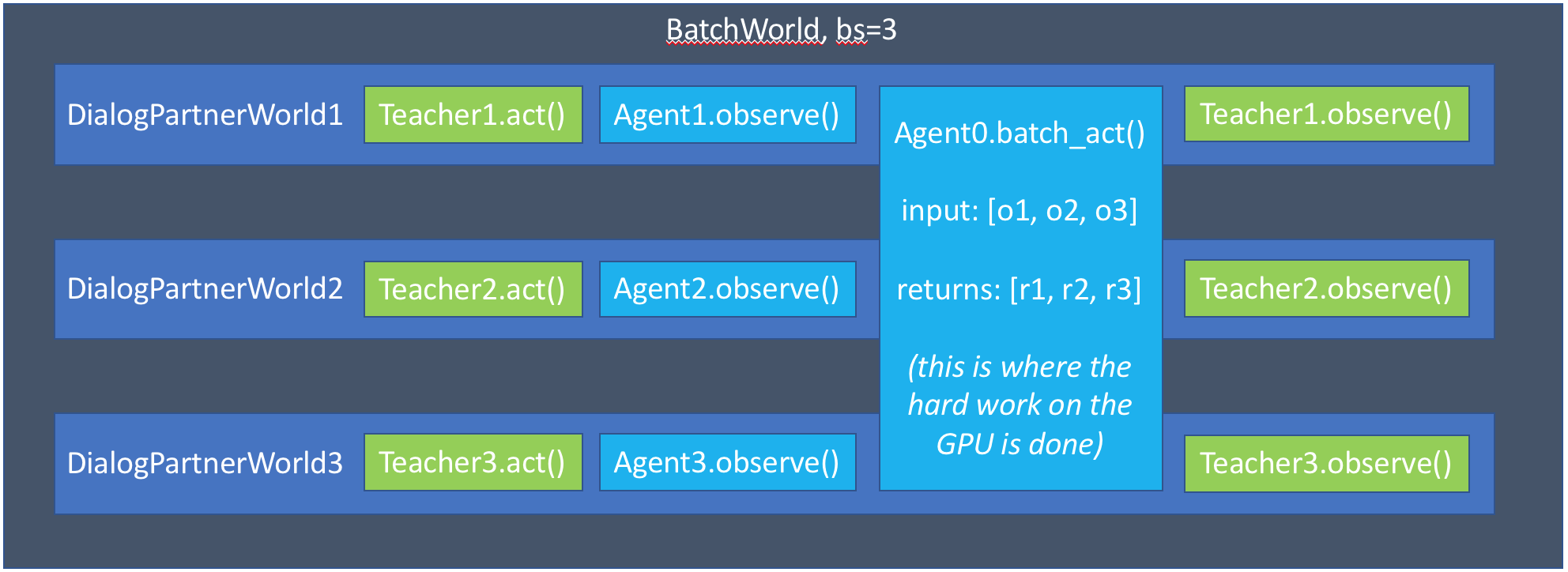

Batch interaction process:

Call stack of metrics.report

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 941, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 905, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 842, in train_steps

yield self.log()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 751, in log

train_report = self.world.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 963, in report

return self.world.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 392, in report

m = a.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/teachers.py", line 225, in report

return self.metrics.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/metrics.py", line 914, in report

traceback.print_stack()

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 941, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 905, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 842, in train_steps

yield self.log()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 751, in log

train_report = self.world.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 963, in report

return self.world.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 392, in report

m = a.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/torch_agent.py", line 1172, in report

report = self.global_metrics.report()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/metrics.py", line 914, in report

traceback.print_stack()

Call stack of clearing metrics (metrics.clear):

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 941, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 905, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 842, in train_steps

yield self.log()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 753, in log

self.world.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 977, in reset_metrics

self.world.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 272, in reset_metrics

a.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/teachers.py", line 239, in reset_metrics

self.metrics.clear()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/metrics.py", line 934, in clear

traceback.print_stack()

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 941, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 905, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 842, in train_steps

yield self.log()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/scripts/train_model.py", line 753, in log

self.world.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 977, in reset_metrics

self.world.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/worlds.py", line 272, in reset_metrics

a.reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/torch_generator_agent.py", line 638, in reset_metrics

super().reset_metrics()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/torch_agent.py", line 2103, in reset_metrics

self.global_metrics.clear()

File "/mnt/hdd2/yanghh/ParlAI-master/parlai/core/metrics.py", line 934, in clear

traceback.print_stack()

Call stack of eval_step():

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 935, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 899, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 850, in train_steps

stop_training = self.validate()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 500, in validate

self.valid_worlds, opt, 'valid', opt['validation_max_exs']

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 627, in _run_eval

task_report = self._run_single_eval(opt, v_world, max_exs_per_worker)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 593, in _run_single_eval

valid_world.parley()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/worlds.py", line 865, in parley

batch_act = self.batch_act(agent_idx, batch_observations[agent_idx])

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/worlds.py", line 833, in batch_act

batch_actions = a.batch_act(batch_observation)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/torch_agent.py", line 2208, in batch_act

traceback.print_stack()

Call stack of _set_text_vec:

File "/home/lee/anaconda3/envs/pariai/bin/parlai", line 33, in <module>

sys.exit(load_entry_point('parlai', 'console_scripts', 'parlai')())

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/__main__.py", line 14, in main

superscript_main()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/script.py", line 324, in superscript_main

return SCRIPT_REGISTRY[cmd].klass._run_from_parser_and_opt(opt, parser)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/script.py", line 107, in _run_from_parser_and_opt

return script.run()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 935, in run

return self.train_loop.train()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 899, in train

for _train_log in self.train_steps():

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/scripts/train_model.py", line 802, in train_steps

world.parley()

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/worlds.py", line 873, in parley

obs = self.batch_observe(other_index, batch_act, agent_idx)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/worlds.py", line 817, in batch_observe

observation = agents[index].observe(observation)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/torch_agent.py", line 1855, in observe

label_truncate=self.label_truncate,

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/torch_generator_agent.py", line 656, in vectorize

return super().vectorize(*args, **kwargs)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/torch_agent.py", line 1590, in vectorize

self._set_text_vec(obs, history, text_truncate)

File "/mnt/hdd2/yanghh/experiments/ParlAI-master/parlai/core/torch_agent.py", line 1439, in _set_text_vec

traceback.print_stack()

Parameters of one Transformer layer:

"encoder.layers.6.attention.q_lin.weight", "encoder.layers.6.attention.q_lin.bias", "encoder.layers.6.attention.k_lin.weight", "encoder.layers.6.attention.k_lin.bias", "encoder.layers.6.attention.v_lin.weight", "encoder.layers.6.attention.v_lin.bias", "encoder.layers.6.attention.out_lin.weight", "encoder.layers.6.attention.out_lin.bias",

"encoder.layers.6.norm1.weight", "encoder.layers.6.norm1.bias",

"encoder.layers.6.ffn.lin1.weight", "encoder.layers.6.ffn.lin1.bias", "encoder.layers.6.ffn.lin2.weight", "encoder.layers.6.ffn.lin2.bias",

"encoder.layers.6.norm2.weight", "encoder.layers.6.norm2.bias",

"decoder.layers.6.self_attention.q_lin.weight", "decoder.layers.6.self_attention.q_lin.bias", "decoder.layers.6.self_attention.k_lin.weight", "decoder.layers.6.self_attention.k_lin.bias", "decoder.layers.6.self_attention.v_lin.weight", "decoder.layers.6.self_attention.v_lin.bias", "decoder.layers.6.self_attention.out_lin.weight", "decoder.layers.6.self_attention.out_lin.bias",

"decoder.layers.6.norm1.weight", "decoder.layers.6.norm1.bias",

"decoder.layers.6.encoder_attention.q_lin.weight", "decoder.layers.6.encoder_attention.q_lin.bias", "decoder.layers.6.encoder_attention.k_lin.weight", "decoder.layers.6.encoder_attention.k_lin.bias", "decoder.layers.6.encoder_attention.v_lin.weight", "decoder.layers.6.encoder_attention.v_lin.bias", "decoder.layers.6.encoder_attention.out_lin.weight", "decoder.layers.6.encoder_attention.out_lin.bias",

"decoder.layers.6.norm2.weight", "decoder.layers.6.norm2.bias",

"decoder.layers.6.ffn.lin1.weight", "decoder.layers.6.ffn.lin1.bias", "decoder.layers.6.ffn.lin2.weight", "decoder.layers.6.ffn.lin2.bias",

"decoder.layers.6.norm3.weight", "decoder.layers.6.norm3.bias",

To be continued...