1 Word frequency counting, sorted in descending order

    # encoding=utf-8
    import jieba

    # read the file to analyse
    with open("freq_wrod_test.txt", "r", encoding="utf-8") as f:
        article = f.read()

    # segment the text into words (accurate mode)
    words = jieba.cut(article, cut_all=False)

    # count word frequencies
    word_freq = {}
    for word in words:
        if word in word_freq:
            word_freq[word] += 1
        else:
            word_freq[word] = 1

    # sort by frequency, highest first
    freq_word = []
    for word, freq in word_freq.items():
        freq_word.append((word, freq))
    freq_word.sort(key=lambda x: x[1], reverse=True)

    # ask how many top words to show (built-in input(), Python 3)
    max_number = int(input("How many top high-frequency words? "))

    # display
    for word, freq in freq_word[:max_number]:
        print(word, freq)

Result

How many top high-frequency words? 10

, 59

的 53

。 46

- 33

26

编程 16

: 14

python 13

了 12

Python 12

One problem with the output above: punctuation marks and other useless tokens are counted too.
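The punctuation problem is easy to reproduce on a tiny hand-made token list. The sketch below also condenses the counting and sorting steps with `collections.Counter` from the standard library. The helper name `top_words` and the sample tokens are assumptions made for illustration; in the real script the tokens would come from `jieba.cut`.

```python
from collections import Counter


def top_words(tokens, n):
    """Return the n most frequent tokens as (token, count) pairs, highest count first."""
    return Counter(tokens).most_common(n)


# Hand-made token list standing in for the output of jieba.cut (an assumption).
sample_tokens = ["编程", ",", "python", ",", "编程", ","]

for word, freq in top_words(sample_tokens, 3):
    print(word, freq)
```

The comma comes out on top, just as in the real run, because nothing filters it before counting.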

2 Removing stop words manually

    # encoding=utf-8
    import jieba

    # load stop words, one per line
    # stopwords = []
    # for word in open("stopwords.txt", "r"):
    #     stopwords.append(word.strip())
    with open("stopwords.txt", "r", encoding="utf-8") as f:
        stopwords = [line.strip() for line in f]

    # add extra stop words: the empty string and a plain space
    stopwords.append('')
    stopwords.append(' ')

    # read the file to analyse
    with open("freq_wrod_test.txt", "r", encoding="utf-8") as f:
        article = f.read()

    words = jieba.cut(article, cut_all=False)

    # count word frequencies
    word_freq = {}
    for word in words:
        # skip stop words and whitespace-only tokens such as newlines
        if word in stopwords or not word.strip():
            continue
        if word in word_freq:
            word_freq[word] += 1
        else:
            word_freq[word] = 1

    # sort by frequency, highest first
    freq_word = []
    for word, freq in word_freq.items():
        freq_word.append((word, freq))
    freq_word.sort(key=lambda x: x[1], reverse=True)

    # ask how many top words to show (built-in input(), Python 3)
    max_number = int(input("How many top high-frequency words? "))

    # display
    for word, freq in freq_word[:max_number]:
        print(word, freq)

Result:

How many top high-frequency words? 10

编程 16

python 13

Python 12

教程 9

写 9

项目 7

代码 7

程序 6

经验 5

找 5
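The filtering step can be tried in isolation. This is a minimal sketch, assuming the stop words are kept in a `set` (faster membership tests than a list) and that whitespace-only tokens should be dropped as well. The helper names `load_stopwords` and `remove_stopwords` and the sample data are hypothetical, not part of the script above.

```python
def load_stopwords(lines):
    """Build a stop-word set from an iterable of lines, one word per line."""
    return {line.strip() for line in lines}


def remove_stopwords(tokens, stopwords):
    """Drop tokens that are stop words or consist only of whitespace."""
    return [t for t in tokens if t not in stopwords and t.strip()]


# In-memory stand-in for the lines of stopwords.txt (hypothetical contents).
stopword_lines = [",\n", "的\n", "。\n"]
stopwords = load_stopwords(stopword_lines)

# Hand-made tokens standing in for the output of jieba.cut.
tokens = ["编程", ",", "的", " ", "python", "\n", "。", "编程"]
print(remove_stopwords(tokens, stopwords))
```

With a real file, `load_stopwords` could be passed the open file object directly, since iterating over a file yields its lines.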

Note that python and Python mean the same thing but are counted separately. How can synonyms be counted together?

3 Merging synonyms

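The rough idea: first build a synonym dictionary file in which the words on each line are separated by tabs, and every word on a line is treated as meaning the same as the first word on that line.

1 Read the synonym dictionary line by line. Every word on a line is stored as a key in a dict, and its value is the first word of that line.

2 Check whether a word is in the dict; if it is, replace the current word with the corresponding dict value.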
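The two steps can be sketched on in-memory data before reading the full script. The helper names `build_synonym_map` and `normalize` and the sample dictionary lines are assumptions for illustration; the real dictionary file may contain different groups.

```python
def build_synonym_map(lines):
    """Map every word after the first on each tab-separated line to that line's first word."""
    mapping = {}
    for line in lines:
        words = line.strip().split("\t")
        for word in words[1:]:
            mapping[word] = words[0]
    return mapping


def normalize(tokens, mapping):
    """Replace each token with its canonical form if the mapping has one."""
    return [mapping.get(t, t) for t in tokens]


# Hypothetical dictionary lines, one synonym group per line.
synonym_lines = ["Python\tpython\tPYTHON\n", "编程\t写代码\n"]
mapping = build_synonym_map(synonym_lines)

print(normalize(["python", "编程", "写代码", "Python", "教程"], mapping))
```

Words that appear in no group, such as 教程 here, pass through unchanged, so the mapping only needs to list words that actually have synonyms.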

    # encoding=utf-8
    import jieba

    # load stop words, one per line
    # stopwords = []
    # for word in open("C:\Users\Luo Chen\Desktop\stop_words.txt", "r"):
    #     stopwords.append(word.strip())
    with open("stopwords.txt", "r", encoding="utf-8") as f:
        stopwords = [line.strip() for line in f]

    # add extra stop words: the empty string and a plain space
    stopwords.append('')
    stopwords.append(' ')

    # synonyms (tongyici): map every word on a line to the line's first word
    combine_dict = {}
    with open("tongyici.txt", "r", encoding="utf-8") as f:
        for line in f:
            # words on a line are separated by tabs
            seperate_word = line.strip().split("\t")
            num = len(seperate_word)
            for i in range(1, num):
                combine_dict[seperate_word[i]] = seperate_word[0]

    # read the file to analyse
    with open("freq_wrod_test.txt", "r", encoding="utf-8") as f:
        article = f.read()

    words = jieba.cut(article, cut_all=False)

    # count word frequencies
    word_freq = {}
    for word in words:
        # skip stop words and whitespace-only tokens such as newlines
        if word in stopwords or not word.strip():
            continue
        # replace a synonym with the first word of its group
        if word in combine_dict:
            word = combine_dict[word]
        if word in word_freq:
            word_freq[word] += 1
        else:
            word_freq[word] = 1

    # sort by frequency, highest first
    freq_word = []
    for word, freq in word_freq.items():
        freq_word.append((word, freq))
    freq_word.sort(key=lambda x: x[1], reverse=True)

    # ask how many top words to show (built-in input(), Python 3)
    max_number = int(input("How many top high-frequency words? "))

    # display
    for word, freq in freq_word[:max_number]:
        print(word, freq)

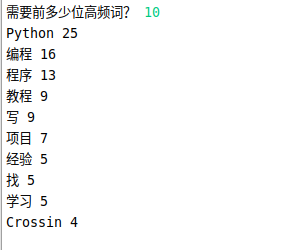

Result:

Synonym dictionary