```java
package com.liveyc.common.listener;

import javax.servlet.ServletContextEvent;
import javax.servlet.ServletContextListener;

import org.springframework.web.context.WebApplicationContext;
import org.springframework.web.context.support.WebApplicationContextUtils;

import com.liveyc.common.utils.ApplicationContextHelper;
import com.liveyc.common.utils.Constants;
import com.liveyc.common.utils.GetConnection;
import com.liveyc.common.utils.ServiceHelper;

public class ApplicantListener implements ServletContextListener {

    private static WebApplicationContext webApplicationContext;
    private static ApplicationContextHelper helper = new ApplicationContextHelper();

    @Override
    public void contextInitialized(ServletContextEvent sce) {
        // Look up the root Spring context. This returns null unless Spring's
        // ContextLoaderListener has already run for this web application.
        webApplicationContext = WebApplicationContextUtils.getWebApplicationContext(sce.getServletContext());
        helper.setApplicationContext(webApplicationContext);
        Constants.WEB_APP_CONTEXT = webApplicationContext;

        // Build both HDFS configurations once at startup and cache them.
        GetConnection getConnection = ServiceHelper.getgetConnection();
        Constants.CONN1 = getConnection.conn1();
        Constants.CONN2 = getConnection.conn2();
    }

    @Override
    public void contextDestroyed(ServletContextEvent sce) {
        // Nothing to release on shutdown.
    }
}
```
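The listener stores whatever `WebApplicationContextUtils.getWebApplicationContext` returns, and that method returns `null` when no root context is registered. A minimal sketch of a fail-fast holder for such startup values follows. `StartupHolder` and `StartupHolderDemo` are hypothetical names used only for illustration and are not part of the original project.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical holder: stores a startup value once and fails fast on misuse. */
final class StartupHolder<T> {
    private final String name;
    private final AtomicReference<T> ref = new AtomicReference<>();

    StartupHolder(String name) {
        this.name = name;
    }

    /** Rejects null values and repeated initialization. */
    void set(T value) {
        if (value == null) {
            throw new IllegalStateException(name + " was null at startup");
        }
        if (!ref.compareAndSet(null, value)) {
            throw new IllegalStateException(name + " is already initialized");
        }
    }

    /** Returns the stored value, or throws if set() has not been called yet. */
    T get() {
        T value = ref.get();
        if (value == null) {
            throw new IllegalStateException(name + " is not initialized yet");
        }
        return value;
    }
}

final class StartupHolderDemo {
    public static void main(String[] args) {
        StartupHolder<String> holder = new StartupHolder<>("WEB_APP_CONTEXT");
        holder.set("context placeholder"); // a real listener would pass the Spring context here
        System.out.println(holder.get());
    }
}
```

With a holder like this, a missing Spring context would surface as a clear error during startup rather than as a `NullPointerException` at the first use of the cached value.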

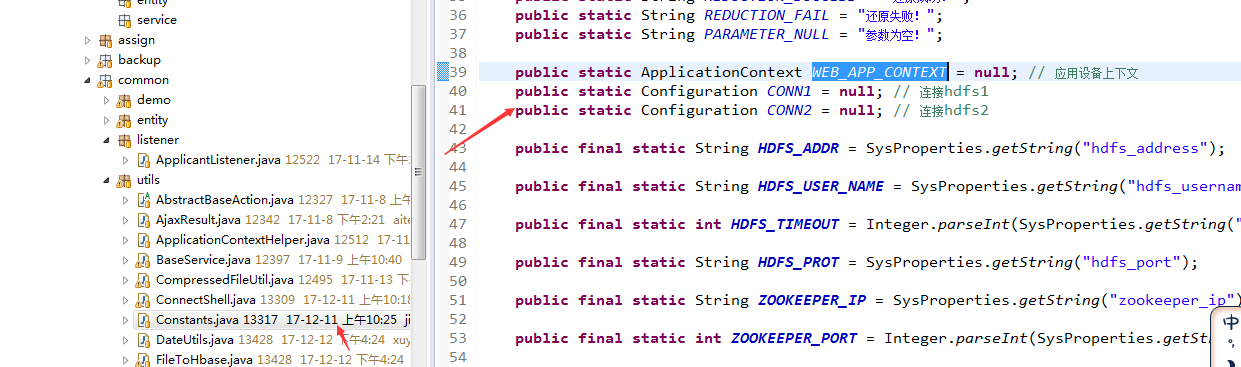

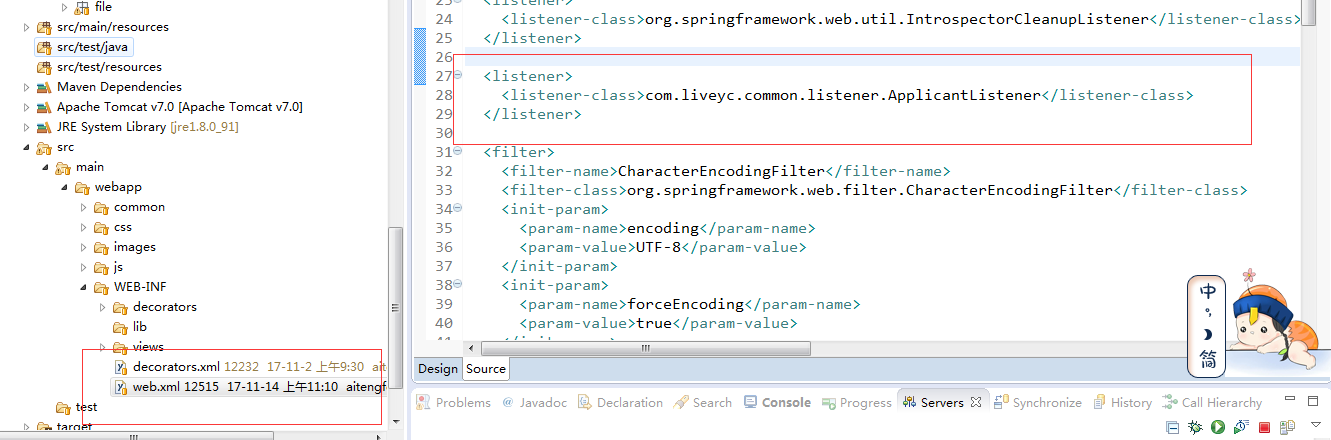

web.xml configuration

Spring injection

```java
package com.liveyc.common.utils;

import java.net.URI;

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hdfs.server.namenode.ha.proto.HAZKInfoProtos;
import org.apache.hadoop.ipc.Client;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;

import com.google.protobuf.InvalidProtocolBufferException;

public class GetConnection {

    private static final Log LOG = LogFactory.getLog(GetConnection.class);

    /** Configuration for the NameNode configured under "hostname1". */
    public Configuration conn1() {
        return buildConf("hostname1");
    }

    /** Configuration for the NameNode configured under "hostname2". */
    public Configuration conn2() {
        return buildConf("hostname2");
    }

    // Shared body of conn1() and conn2(); only the property key differs.
    private Configuration buildConf(String hostnameKey) {
        Configuration conf = new Configuration();
        System.setProperty("HADOOP_USER_NAME", Constants.HDFS_USER_NAME);
        // Alternative: ask ZooKeeper for the active NameNode.
        // String hostname = getHostname(Constants.ZOOKEEPER_IP, Constants.ZOOKEEPER_PORT, Constants.ZOOKEEPER_TIMEOUT, Constants.DATA_DIR);
        String hostname = SysProperties.getString(hostnameKey);
        // Compare string contents; `hostname != ""` only compares references.
        if (hostname != null && !hostname.isEmpty()) {
            // "fs.default.name" is the deprecated alias of "fs.defaultFS".
            conf.set("fs.default.name", "hdfs://" + hostname + ":" + Constants.HDFS_PROT); // active node
            // Or connect through the nameservice name directly, without asking
            // ZooKeeper for the active node:
            // conf.set("fs.default.name", "hdfs://" + nameservice1 + ":" + HDFS_PROT);
            conf.set("dfs.socket.timeout", "900000");
            conf.set("dfs.datanode.handler.count", "20");
            conf.set("dfs.namenode.handler.count", "30");
            conf.set("dfs.datanode.socket.write.timeout", "10800000");
        } else {
            conf.set("fs.default.name", Constants.HDFS_ADDR); // no HA configured: use the configured master
        }
        Client.setConnectTimeout(conf, Constants.HDFS_TIMEOUT); // connection timeout
        return conf;
    }

    /** Returns true if the HDFS root can be listed through CONN1. */
    public static boolean getActive() {
        Configuration conf = Constants.CONN1;
        try {
            FileSystem fs = FileSystem.get(URI.create(Constants.hdfsRootPath), conf);
            fs.listStatus(new Path(Constants.hdfsRootPath));
        } catch (Exception e) {
            LOG.warn("CONN1 is not usable, falling back to CONN2", e);
            return false;
        }
        return true;
    }

    /** Returns CONN1 if it is reachable, otherwise CONN2. */
    public static Configuration getconf() {
        return getActive() ? Constants.CONN1 : Constants.CONN2;
    }

    /**
     * Gets the active node (requires the cluster to be configured for HA).
     *
     * @param ZOOKEEPER_IP      ZooKeeper IPs, separated by ';'
     * @param ZOOKEEPER_PORT    ZooKeeper port
     * @param ZOOKEEPER_TIMEOUT session timeout
     * @param DATA_DIR          path of the HA znode in ZooKeeper
     * @return hostname of the active node, or null if it could not be determined
     */
    public String getHostname(String ZOOKEEPER_IP, int ZOOKEEPER_PORT, int ZOOKEEPER_TIMEOUT, String DATA_DIR) {
        Watcher watcher = new Watcher() {
            @Override
            public void process(org.apache.zookeeper.WatchedEvent event) {
                LOG.info("event:" + event.toString());
            }
        };
        for (String ip : ZOOKEEPER_IP.split(";")) {
            ZooKeeper zk = null;
            byte[] data = null;
            try {
                zk = new ZooKeeper(ip + ":" + ZOOKEEPER_PORT, ZOOKEEPER_TIMEOUT, watcher);
                data = zk.getData(DATA_DIR, true, new Stat());
            } catch (Exception e) {
                LOG.info("This ip is not active..." + ip);
            } finally {
                // Close the session so ZooKeeper connections are not leaked.
                if (zk != null) {
                    try {
                        zk.close();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }
            }
            if (data != null) {
                LOG.info("This ip is normal..." + ip);
                String hostname = null;
                try {
                    hostname = HAZKInfoProtos.ActiveNodeInfo.parseFrom(data).getHostname();
                } catch (InvalidProtocolBufferException e) {
                    LOG.error(e);
                }
                return hostname;
            }
        }
        return null;
    }
}
```
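The `getActive()` / `getconf()` pair amounts to a simple failover rule: probe the first configuration and, if the probe fails or throws, use the second one without probing it. The sketch below isolates that rule from Hadoop so it can be run on its own. `FailoverSelector`, `FailoverDemo`, and the `"nn1"` / `"nn2"` strings are hypothetical stand-ins for illustration, not names from the original project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical helper: picks the first candidate that passes a health probe. */
final class FailoverSelector<T> {
    private final List<T> candidates;
    private final Predicate<T> probe;

    FailoverSelector(List<T> candidates, Predicate<T> probe) {
        if (candidates.isEmpty()) {
            throw new IllegalArgumentException("at least one candidate is required");
        }
        this.candidates = new ArrayList<>(candidates);
        this.probe = probe;
    }

    /**
     * Probes every candidate except the last, in order, and returns the first
     * healthy one. The last candidate is the unprobed fallback, which mirrors
     * how getconf() returns CONN2 without testing it.
     */
    T select() {
        for (int i = 0; i < candidates.size() - 1; i++) {
            T candidate = candidates.get(i);
            if (isHealthy(candidate)) {
                return candidate;
            }
        }
        return candidates.get(candidates.size() - 1);
    }

    // A probe that throws counts as unhealthy, like the catch block in getActive().
    private boolean isHealthy(T candidate) {
        try {
            return probe.test(candidate);
        } catch (RuntimeException e) {
            return false;
        }
    }
}

final class FailoverDemo {
    public static void main(String[] args) {
        // Pretend the first NameNode is down: the probe rejects "nn1".
        FailoverSelector<String> selector =
                new FailoverSelector<>(Arrays.asList("nn1", "nn2"), name -> !name.equals("nn1"));
        System.out.println(selector.select()); // prints "nn2"
    }
}
```

As in the original code, the probe runs on every call, so each `select()` pays the cost of one health check. Caching the result for a short interval would be a possible refinement, at the price of reacting more slowly to a failover.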