第三课的主要内容有:

css例子

以下四个html页面在浏览器中打开即可看到效果。

css_background_color.html:

<html>

<head>

<style type="text/css">

body {background-color: yellow}

h1 {background-color: #00ff00}

h2 {background-color: transparent}

p {background-color: rgb(250,0,255)}

p.no2 {background-color: gray; padding: 20px;}

</style>

</head>

<body>

<h1>这是标题 1</h1>

<h2>这是标题 2</h2>

<p>这是段落</p>

<p class="no2">这个段落设置了内边距。</p>

</body>

</html>

css_board_color.html:

<html>

<head>

<style type="text/css">

p.one

{

border-style: solid;

border-color: #0000ff

}

p.two

{

border-style: solid;

border-color: #ff0000 #0000ff

}

p.three

{

border-style: solid;

border-color: #ff0000 #00ff00 #0000ff

}

p.four

{

border-style: solid;

border-color: #ff0000 #00ff00 #0000ff rgb(250,0,255)

}

</style>

</head>

<body>

<p class="one">One-colored border!</p>

<p class="two">Two-colored border!</p>

<p class="three">Three-colored border!</p>

<p class="four">Four-colored border!</p>

<p><b>注释:</b>"border-width" 属性如果单独使用的话是不会起作用的。请首先使用 "border-style" 属性来设置边框。</p>

</body>

</html>

css_font_family.html:

<html>

<head>

<style type="text/css">

p.serif{font-family:"Times New Roman",Georgia,Serif}

p.sansserif{font-family:Arial,Verdana,Sans-serif}

</style>

</head>

<body>

<h1>CSS font-family</h1>

<p class="serif">This is a paragraph, shown in the Times New Roman font.</p>

<p class="sansserif">This is a paragraph, shown in the Arial font.</p>

</body>

</html>

css_text_decoration.html:

<html>

<head>

<style type="text/css">

h1 {text-decoration: overline}

h2 {text-decoration: line-through}

h3 {text-decoration: underline}

h4 {text-decoration:blink}

a {text-decoration: none}

</style>

</head>

<body>

<h1>这是标题 1</h1>

<h2>这是标题 2</h2>

<h3>这是标题 3</h3>

<h4>这是标题 4</h4>

<p><a href="http://www.w3school.com.cn/index.html">这是一个链接</a></p>

</body>

</html>

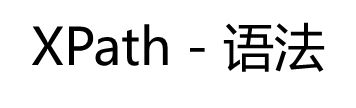

解析xml,下面是课程中使用到的book.xml:

<?xml version="1.0" encoding="ISO-8859-1"?>

<bookstore>

<book>

<title lang="eng">Harry Potter</title>

<price>29.99</price>

</book>

<book>

<title lang="eng">Learning XML</title>

<price>39.95</price>

</book>

</bookstore>

Python处理XML方法之DOM:

![]()

from xml.dom import minidom

doc = minidom.parse('book.xml')

root = doc.documentElement

# print(dir(root))

print(root.nodeName)

books = root.getElementsByTagName('book')

print(type(books))

for book in books:

titles = book.getElementsByTagName('title')

print(titles[0].childNodes[0].nodeValue)

#results

bookstore

<class 'xml.dom.minicompat.NodeList'>

Harry Potter

Learning XML

Python处理XML方法之SAX:

![]()

1 import string 2 from xml.parsers.expat import ParserCreate 3 4 class DefaultSaxHandler(object): 5 def start_element(self, name, attrs): 6 self.element = name 7 print('element: %s, attrs: %s' % (name, str(attrs))) 8 9 def end_element(self, name): 10 print('end element: %s' % name) 11 12 def char_data(self, text): 13 if text.strip(): 14 print("%s's text is %s" % (self.element, text)) 15 16 handler = DefaultSaxHandler() 17 parser = ParserCreate() 18 parser.StartElementHandler = handler.start_element 19 parser.EndElementHandler = handler.end_element 20 parser.CharacterDataHandler = handler.char_data 21 with open('book.xml', 'r') as f: 22 parser.Parse(f.read())

1 element: bookstore, attrs: {} 2 element: book, attrs: {} 3 element: title, attrs: {'lang': 'eng'} 4 title's text is Harry Potter 5 end element: title 6 element: price, attrs: {} 7 price's text is 29.99 8 end element: price 9 end element: book 10 element: book, attrs: {} 11 element: title, attrs: {'lang': 'eng'}

1 010-12345 2 0 9 3 分组 4 ('010', '12345') 5 010-12345 6 010 7 12345 8 分割 9 <class '_sre.SRE_Pattern'> 10 ['one', 'two', 'three', 'four', ''] 11 ('20', '15', '45')

12 title's text is Learning XML 13 end element: title 14 element: price, attrs: {} 15 price's text is 39.95 16 end element: price 17 end element: book 18 end element: bookstore

实例:

1 import re 2 3 m = re.match(r'd{3}-d{3,8}', '010-12345') 4 # print(dir(m)) 5 print(m.string) 6 print(m.pos, m.endpos) 7 8 # 分组 9 print('分组') 10 m = re.match(r'^(d{3})-(d{3,8})$', '010-12345') 11 print(m.groups()) 12 print(m.group(0)) 13 print(m.group(1)) 14 print(m.group(2)) 15 16 # 分割 17 print('分割') 18 p = re.compile(r'd+') 19 print(type(p)) 20 print(p.split('one1two3three3four4')) 21 22 t = '20:15:45' 23 m = re.match(r'^(0[0-9]|1[0-9]|2[0-3]|[0-9]):(0[0-9]|1[0-9]|2[0-9]|3[0-9]|4[0-9]|5[0-9]|[0-9]):(0[0-9]|1[0-9]|2[0-9]|3[0-9]|4[0-9]|5[0-9]|[0-9])$', t) 24 print(m.groups())

输出结果:

010-12345

0 9

分组

('010', '12345')

010-12345

010

12345

分割

<class '_sre.SRE_Pattern'>

['one', 'two', 'three', 'four', '']

('20', '15', '45')

电商网站数据爬取

selenium直接pip安装即可。pip install selenium

windows上需要使用使用浏览器的驱动,我使用的chrome浏览器,和课程中的一样。驱动是chromedriver。

我这里提供一个下载地址:http://docs.seleniumhq.org/download/

我的驱动是放在tools这个文件夹里的。

下载好驱动后,需要将这个驱动添加到系统属性变量中才行,不然会出错。

准备工作已经完成了。下面我们开始爬取17huo.com这个网站.我们要爬取大衣这个分类里的每个商品的标题、价格。课程的时间已经过去很久,

网站已经改版,我对课程中的代码自己进行了改动,实测可用,成功爬取前三页的信息。0、1、2共三页。

1 from selenium import webdriver 2 import time 3 4 browser = webdriver.Chrome() 5 browser.set_page_load_timeout(50) 6 browser.get('http://www.17huo.com/newsearch/?k=%E5%A4%A7%E8%A1%A3') 7 page_info = browser.find_element_by_css_selector('body > div.wrap > div.search_container > div.pagem.product_list_pager > div') 8 # print(page_info.text) 9 # 共 40 页,每页 60 条 10 pages = int((page_info.text.split(',')[0]).split(' ')[1]) 11 # print(pages) 12 for page in range(pages): 13 if page > 2: 14 break 15 url = 'http://www.17huo.com/newsearch/?k=大衣&page=' + str(page + 1) 16 browser.get(url) 17 browser.execute_script("window.scrollTo(0, document.body.scrollHeight);") 18 time.sleep(5) # 不然会load不完整 19 goods = browser.find_element_by_css_selector( 20 '.book-item-list').find_elements_by_tag_name('a') 21 print('%d页有%d件商品' % ((page + 1), len(goods))) 22 for good in goods: 23 try: 24 title = good.find_element_by_css_selector('a:nth-child(1) > p:nth-child(2)').text 25 #a:nth - child(2) > div:nth - child(3) > div:nth - child(2) 26 price = good.find_element_by_css_selector('span:nth - child(1)').text 27 #span:nth - child(1) 28 print(title, price) 29 except: 30 print(good.text)

部分结果:

1 1页有180件商品 2 3 ¥ 155.00 4 黄格子大衣 5 黄格子大衣 6 7 ¥ 350.00 8 中老年妈妈冬季仿貂绒大衣连帽女装宽松外套羊剪绒上衣 9 KXLCMML1308 10 11 ¥ 350.00 12 中老年女装冬新款羊剪绒加厚仿皮草宽松外套妈妈装大衣 13 KXLCMML1307