Overall architecture

192.168.199.223 (zookeeper, codis-proxy, codis-dashboard:18080, codis-fe:9090, codis-server)

192.168.199.224 (zookeeper, codis-server)

192.168.199.254 (zookeeper, codis-proxy, codis-server)

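I. ZooKeeper

Role: stores the data routing table.

Description: ZooKeeper is abbreviated zk. In production, the more zk servers you deploy, the more failures the ensemble can survive. A zk ensemble keeps serving only while a strict majority of its servers is up, so an odd number of servers is preferable: adding one server to an odd-sized ensemble does not let it tolerate any additional failure.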
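The majority rule can be made concrete with a little arithmetic. The Go sketch below (the function names are my own, not part of ZooKeeper) computes the quorum size and the number of failed servers an ensemble of n servers survives.

```go
package main

import "fmt"

// majority returns how many servers must be up for an ensemble of n
// servers to keep a quorum: strictly more than half.
func majority(n int) int {
	return n/2 + 1
}

// tolerated returns how many servers may fail while a quorum survives.
func tolerated(n int) int {
	return n - majority(n)
}

func main() {
	for n := 1; n <= 6; n++ {
		fmt.Printf("%d servers: quorum %d, survives %d failure(s)\n",
			n, majority(n), tolerated(n))
	}
}
```

Running it shows that 3 and 4 servers both survive only one failure, and 5 and 6 both survive two, which is why the fourth or sixth server adds cost without adding fault tolerance.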

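Deployment: following the deployment plan above, zk is deployed on these machines.

| No. | IP | Hostname | Program |
|---|---|---|---|
| 01 | 192.168.199.223 | test | zookeeper:2181 |
| 02 | 192.168.199.224 | jason_1 | zookeeper:2181 |
| 03 | 192.168.199.254 | jason_2 | zookeeper:2181 |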

1. Edit the hosts file

# This file must be identical on all three hosts

[root@test ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.199.223 node1
192.168.199.224 node2
192.168.199.254 node3
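Since zoo.cfg and dashboard.toml below refer to the machines as node1, node2 and node3, all three hosts must resolve those names to the same addresses. The helper below, parseHosts, is hypothetical. It reads hosts-file text and reports where each name points, so the three copies can be compared. It only parses text and does not query DNS.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// parseHosts maps each hostname or alias in /etc/hosts-style text to its
// address. This sketch keeps the first entry it sees for a given name.
func parseHosts(text string) map[string]string {
	addrs := make(map[string]string)
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		line := sc.Text()
		if i := strings.IndexByte(line, '#'); i >= 0 {
			line = line[:i] // drop comments
		}
		fields := strings.Fields(line)
		if len(fields) < 2 {
			continue // blank line or an address without names
		}
		for _, name := range fields[1:] {
			if _, seen := addrs[name]; !seen {
				addrs[name] = fields[0]
			}
		}
	}
	return addrs
}

func main() {
	hosts := `127.0.0.1 localhost localhost.localdomain
192.168.199.223 node1
192.168.199.224 node2
192.168.199.254 node3`
	addrs := parseHosts(hosts)
	for _, name := range []string{"node1", "node2", "node3"} {
		if ip, ok := addrs[name]; ok {
			fmt.Printf("%s -> %s\n", name, ip)
		} else {
			fmt.Printf("%s missing from hosts file\n", name)
		}
	}
}
```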

2. Java environment (see https://www.cnblogs.com/xiaoyou2018/p/9945272.html)

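3. ZooKeeper

wget http://ftp.wayne.edu/apache/zookeeper/zookeeper-3.4.13/zookeeper-3.4.13.tar.gz

Extract it into /usr/local/ and rename the directory, so that the /usr/local/zookeeper paths used below exist:

tar -zxvf zookeeper-3.4.13.tar.gz -C /usr/local/
mv /usr/local/zookeeper-3.4.13 /usr/local/zookeeper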

[root@jason tools]# cd /usr/local/zookeeper/conf
[root@jason conf]# cp zoo_sample.cfg zoo.cfg

[root@jason conf]# cat zoo.cfg
maxClientCnxns=50
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper
###dataLogDir=/data/zookeeper/log
clientPort=2181
server.1=node1:2888:3888
server.2=node2:2888:3888
server.3=node3:2888:3888

[root@jason conf]# mkdir -p /data/zookeeper

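Note: in server.A=B:C:D, A is a number identifying which server this is. B is the server's IP address (a hostname that resolves through /etc/hosts, such as node1, also works). C is the port this server uses to exchange information with the cluster's Leader. D is the election port: if the Leader goes down, the servers talk to each other on this port to elect a new Leader. In a pseudo-cluster (several instances on one machine), B is the same for every instance, so each ZooKeeper instance must be given its own port numbers.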
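The A/B/C/D breakdown can be expressed as a small parser. The Go sketch below is illustrative only, and the type and function names are hypothetical. It parses one server line into its four fields. It then flags the pseudo-cluster mistake described above: two entries on the same host that reuse a port.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Server is one "server.A=B:C:D" entry from zoo.cfg.
type Server struct {
	ID           int    // A: server number, must match that host's myid
	Host         string // B: IP address or hostname
	QuorumPort   int    // C: port for exchanging data with the Leader
	ElectionPort int    // D: port for leader election
}

// parseServer parses one server line. An optional role suffix such as
// ":observer" is not handled by this sketch.
func parseServer(line string) (Server, error) {
	kv := strings.SplitN(strings.TrimSpace(line), "=", 2)
	if len(kv) != 2 || !strings.HasPrefix(kv[0], "server.") {
		return Server{}, fmt.Errorf("not a server line: %q", line)
	}
	id, err := strconv.Atoi(strings.TrimPrefix(kv[0], "server."))
	if err != nil {
		return Server{}, fmt.Errorf("bad server number in %q", line)
	}
	parts := strings.Split(kv[1], ":")
	if len(parts) != 3 {
		return Server{}, fmt.Errorf("want host:port:port in %q", line)
	}
	c, errC := strconv.Atoi(parts[1])
	d, errD := strconv.Atoi(parts[2])
	if errC != nil || errD != nil {
		return Server{}, fmt.Errorf("bad port in %q", line)
	}
	return Server{ID: id, Host: parts[0], QuorumPort: c, ElectionPort: d}, nil
}

// portClash reports two entries that would bind the same host:port, which
// happens in a pseudo-cluster when every B is the same machine. Hosts are
// compared as literal strings, so "node1" and its IP are not matched.
func portClash(servers []Server) error {
	used := make(map[string]int) // "host:port" -> server number
	for _, s := range servers {
		for _, p := range []int{s.QuorumPort, s.ElectionPort} {
			key := fmt.Sprintf("%s:%d", s.Host, p)
			if other, ok := used[key]; ok {
				return fmt.Errorf("server.%d and server.%d both use %s", other, s.ID, key)
			}
			used[key] = s.ID
		}
	}
	return nil
}

func main() {
	lines := []string{
		"server.1=node1:2888:3888",
		"server.2=node2:2888:3888",
		"server.3=node3:2888:3888",
	}
	var servers []Server
	for _, l := range lines {
		s, err := parseServer(l)
		if err != nil {
			fmt.Println(err)
			return
		}
		servers = append(servers, s)
	}
	fmt.Printf("%+v\n", servers)
	fmt.Println("port clash:", portClash(servers))
}
```

For the three-host layout here, every B differs, so reusing 2888/3888 on all three lines is fine. Only a single-machine setup needs distinct ports.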

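Step 3: Other setup

Create the dataDir directory from step 2 and set this zk node's ID. [Note: the ID differs on 192.168.199.223, 224 and 254, and must equal the A of that host's server.A line in zoo.cfg.]

echo 1 > /data/zookeeper/myid (on 223)

echo 2 > /data/zookeeper/myid (on 224)

echo 3 > /data/zookeeper/myid (on 254)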
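Because each myid must equal the A of that host's own server.A line, the value can be derived from zoo.cfg instead of typed by hand. The Go sketch below is hypothetical. It takes the name the machine has in zoo.cfg (node1..node3) as a parameter instead of calling os.Hostname, because the machines' real hostnames here are test, jason_1 and jason_2. It writes into a temporary directory that stands in for /data/zookeeper.

```go
package main

import (
	"fmt"
	"io/ioutil"
	"os"
	"path/filepath"
	"strings"
)

// myidFor returns the N of the "server.N=host:..." line whose host equals
// hostname, or "" if no line matches.
func myidFor(cfg, hostname string) string {
	for _, line := range strings.Split(cfg, "\n") {
		line = strings.TrimSpace(line)
		if !strings.HasPrefix(line, "server.") {
			continue
		}
		kv := strings.SplitN(line, "=", 2)
		if len(kv) != 2 {
			continue
		}
		host := strings.SplitN(kv[1], ":", 2)[0]
		if host == hostname {
			return strings.TrimPrefix(kv[0], "server.")
		}
	}
	return ""
}

// writeMyid writes id plus a newline to dataDir/myid, as `echo N > myid` does.
func writeMyid(dataDir, id string) error {
	if err := os.MkdirAll(dataDir, 0755); err != nil {
		return err
	}
	return ioutil.WriteFile(filepath.Join(dataDir, "myid"), []byte(id+"\n"), 0644)
}

func main() {
	cfg := "server.1=node1:2888:3888\nserver.2=node2:2888:3888\nserver.3=node3:2888:3888\n"
	const self = "node3" // hypothetical: this machine's name as used in zoo.cfg
	id := myidFor(cfg, self)
	if id == "" {
		fmt.Println("no server entry for", self)
		return
	}
	dir, err := ioutil.TempDir("", "zookeeper") // stands in for /data/zookeeper
	if err != nil {
		fmt.Println(err)
		return
	}
	defer os.RemoveAll(dir)
	if err := writeMyid(dir, id); err != nil {
		fmt.Println(err)
		return
	}
	b, _ := ioutil.ReadFile(filepath.Join(dir, "myid"))
	fmt.Printf("%s/myid = %q\n", dir, b)
}
```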

Start ZooKeeper on each server in turn. Every host with the ZooKeeper role must be started, otherwise checking the status reports an error.

[root@test ~]# cd /usr/local/zookeeper/bin/
[root@test bin]# ./zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

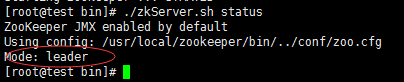

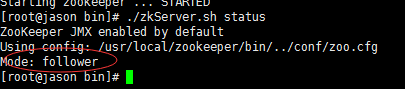

Check each node's role

[root@test bin]# ./zkServer.sh status

192.168.199.223

192.168.199.224
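As far as I know, zkServer.sh status in 3.4.x gets the role by sending ZooKeeper's `srvr` four-letter command to the client port and picking out the `Mode:` line. The Go sketch below performs the same check for all three nodes from any machine that can resolve node1..node3. It assumes `srvr` is permitted on the servers, which it should be since zkServer.sh relies on it. A node without a quorum answers without a Mode line, and the sketch reports that as an error.

```go
package main

import (
	"bufio"
	"fmt"
	"io/ioutil"
	"net"
	"strings"
	"time"
)

// parseMode extracts the value of the "Mode:" line (leader, follower or
// standalone) from a srvr reply, or "" if there is none.
func parseMode(reply string) string {
	sc := bufio.NewScanner(strings.NewReader(reply))
	for sc.Scan() {
		line := sc.Text()
		if strings.HasPrefix(line, "Mode:") {
			return strings.TrimSpace(strings.TrimPrefix(line, "Mode:"))
		}
	}
	return ""
}

// queryMode sends "srvr" to addr and returns the server's mode. The server
// closes the connection after replying, so the whole reply is read.
func queryMode(addr string) (string, error) {
	conn, err := net.DialTimeout("tcp", addr, 2*time.Second)
	if err != nil {
		return "", err
	}
	defer conn.Close()
	conn.SetDeadline(time.Now().Add(3 * time.Second))
	if _, err := conn.Write([]byte("srvr")); err != nil {
		return "", err
	}
	reply, err := ioutil.ReadAll(conn)
	if err != nil {
		return "", err
	}
	if mode := parseMode(string(reply)); mode != "" {
		return mode, nil
	}
	return "", fmt.Errorf("no Mode line in reply: %q", reply)
}

func main() {
	for _, addr := range []string{"node1:2181", "node2:2181", "node3:2181"} {
		mode, err := queryMode(addr)
		if err != nil {
			fmt.Printf("%s: %v\n", addr, err)
			continue
		}
		fmt.Printf("%s: %s\n", addr, mode)
	}
}
```

In a healthy three-node ensemble, exactly one address should report leader and the other two follower.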

If startup fails, see https://blog.csdn.net/weiyongle1996/article/details/73733228, or run ZooKeeper in the foreground so that errors are printed to the console:

./zkServer.sh start-foreground

Install the Go environment

wget https://dl.google.com/go/go1.11.2.linux-amd64.tar.gz

tar -zxvf go1.11.2.linux-amd64.tar.gz -C /usr/local/

Configure the environment variables (append to /etc/profile):

export JAVA_HOME=/usr/local/java/jdk1.8.0_191
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export GOROOT=/usr/local/go
export GOPATH=/usr/local/codis
export ZOOKEEPER_HOME=/usr/local/zookeeper
export PATH=$PATH:$GOROOT/bin:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin

[root@jason go]# source /etc/profile

[root@jason go]# go version
go version go1.11.2 linux/amd64

Download and build Codis

mkdir -p $GOPATH/src/github.com/CodisLabs
cd $_ && git clone https://github.com/CodisLabs/codis.git -b release3.2
cd $GOPATH/src/github.com/CodisLabs/codis
make

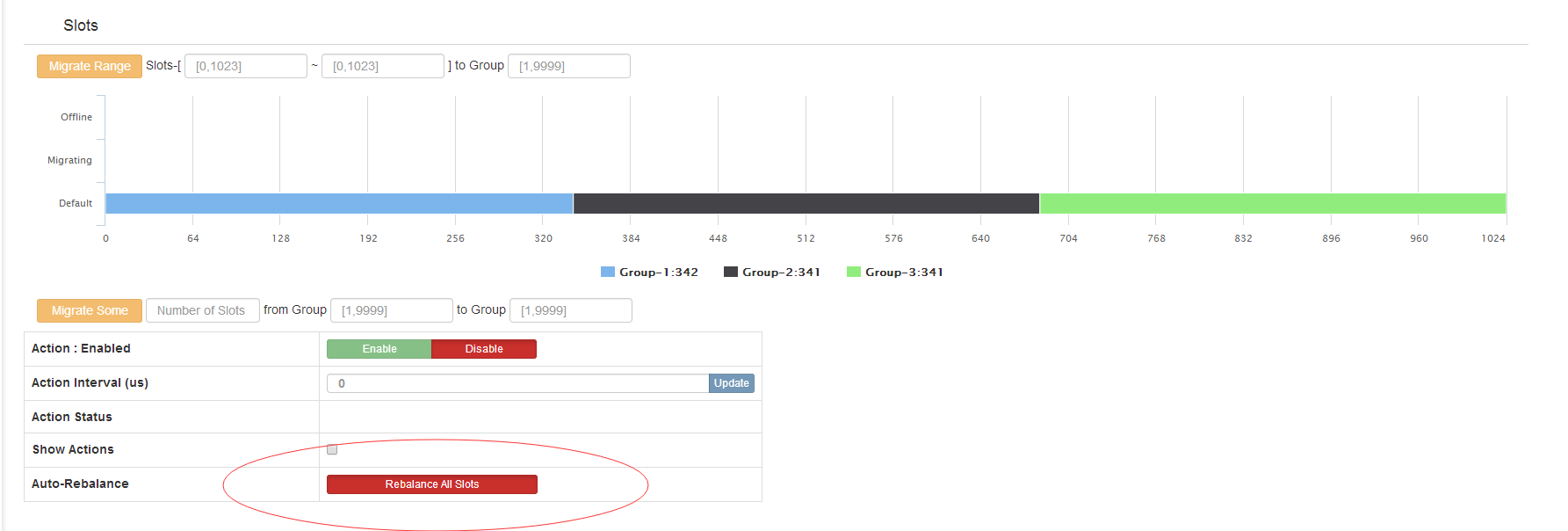

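A quick recap of the Codis components, to make clear which ones to start where in the cluster:

Codis Server: based on the redis-3.2.8 branch, with extra data structures that support slot-related operations and data-migration commands (for details see the Codis documentation on its Redis modifications). In the cluster it acts as the Redis instance.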
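The slots mentioned above are how Codis spreads keys across codis-server groups. My understanding of the Codis 3.x proxy is that it takes the CRC32 (IEEE) of the key, or of its {hash tag} if present, modulo 1024 slots. The Go sketch below reproduces that idea for illustration. The slot count and the tag rule are assumptions to check against the Codis source, and this sketch does not special-case an empty tag "{}".

```go
package main

import (
	"bytes"
	"fmt"
	"hash/crc32"
)

// numSlots is the slot count assumed here (1024 in Codis 3.x).
const numSlots = 1024

// hashKey returns the part of key that is hashed: if the key contains
// "{...}", only the text between the first '{' and the next '}' is used,
// so keys that share a tag land in the same slot.
func hashKey(key []byte) []byte {
	if beg := bytes.IndexByte(key, '{'); beg >= 0 {
		if end := bytes.IndexByte(key[beg+1:], '}'); end >= 0 {
			return key[beg+1 : beg+1+end]
		}
	}
	return key
}

// slotOf maps a key to a slot with CRC32 (IEEE) modulo numSlots.
func slotOf(key string) uint32 {
	return crc32.ChecksumIEEE(hashKey([]byte(key))) % numSlots
}

func main() {
	for _, k := range []string{"user:{1000}:name", "user:{1000}:age", "order:42"} {
		fmt.Printf("%-18s -> slot %d\n", k, slotOf(k))
	}
}
```

The two user:{1000} keys print the same slot. That is the point of hash tags: multi-key operations on them stay within one codis-server group.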

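Codis Proxy: the Redis proxy that clients connect to; it implements the Redis protocol. Apart from a few unsupported commands (see the list of unsupported commands), it behaves just like native Redis (much like Twemproxy). One business cluster can run several codis-proxy instances at the same time, and codis-dashboard keeps their state in sync.

Codis Dashboard: the cluster management tool. It adds and removes codis-proxy and codis-server instances and performs operations such as data migration. When the cluster state changes, codis-dashboard keeps the state of every codis-proxy in the cluster consistent.

Codis FE: the cluster management web UI.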

Start FE and the Dashboard on node1 to manage the cluster;

start codis-proxy on node1 and node3;

start codis-server on node1, node2 and node3.

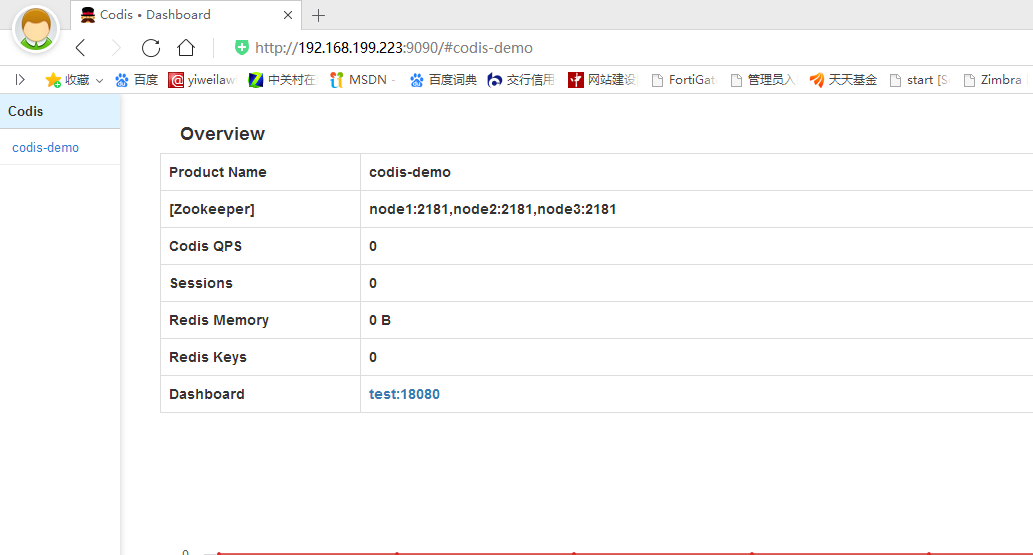

Start the Dashboard first and point it at the ZooKeeper ensemble, so that the dashboard's information is stored in ZooKeeper. FE reads that dashboard information from ZooKeeper to connect to the cluster it manages.

#Start the dashboard: set the coordinator to zookeeper mode and choose a custom product_name and product_auth

[root@test bin]# cd $GOPATH/src/github.com/CodisLabs/codis
[root@test codis]# cd config/
[root@test config]# cat dashboard.toml
##################################################
#                                                #
#                  Codis-Dashboard               #
#                                                #
##################################################

# Set Coordinator, only accept "zookeeper" & "etcd" & "filesystem".
# for zookeeper/etcd, coorinator_auth accept "user:password"
# Quick Start
#coordinator_name = "filesystem"
#coordinator_addr = "/tmp/codis"
coordinator_name = "zookeeper"
coordinator_addr = "node1:2181,node2:2181,node3:2181"
#coordinator_auth = ""

# Set Codis Product Name/Auth.
product_name = "codis-demo"
product_auth = "123456"

# Set bind address for admin(rpc), tcp only.
admin_addr = "0.0.0.0:18080"

# Set arguments for data migration (only accept 'sync' & 'semi-async').
migration_method = "semi-async"
migration_parallel_slots = 100
migration_async_maxbulks = 200
migration_async_maxbytes = "32mb"
migration_async_numkeys = 500
migration_timeout = "30s"

# Set configs for redis sentinel.
sentinel_client_timeout = "10s"
sentinel_quorum = 2
sentinel_parallel_syncs = 1
sentinel_down_after = "30s"
sentinel_failover_timeout = "5m"
sentinel_notification_script = ""
sentinel_client_reconfig_script = ""

Start the dashboard

cd $GOPATH/src/github.com/CodisLabs/codis/admin
[root@test admin]# ./codis-dashboard-admin.sh start

Start FE

cd $GOPATH/src/github.com/CodisLabs/codis

[root@test codis]# nohup ./bin/codis-fe --ncpu=1 --log=/usr/local/codis/src/github.com/CodisLabs/codis/log/fe.log --log-level=WARN --zookeeper=node1:2181 --listen=0.0.0.0:9090 &

[1] 3634
[root@test codis]# nohup: ignoring input and appending output to ‘nohup.out’

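If FE fails to start, check its log (the file given by --log above).

Open the FE management UI on the listening port 9090; the remaining cluster operations can be done in FE: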

http://192.168.199.223:9090

Start the two codis-proxy instances.

Edit proxy.toml on node1 and on node3:

[root@test config]# cat proxy.toml |grep -Ev '^$|^#'
product_name = "codis-demo"
product_auth = "123456"
session_auth = "56789"
admin_addr = "0.0.0.0:11080"
proxy_addr = "0.0.0.0:19000"
jodis_name = "zookeeper"
jodis_addr = "192.168.199.223:2181,192.168.199.224:2181,192.168.199.254:2181"
jodis_timeout = "20s"
session_recv_timeout = "0s"

Meaning of each setting:

product_name = "codis-demo" # product name; must match product_name in dashboard.toml

product_auth = "123456" # password used by the dashboard (note: must match requirepass in the Redis config)

session_auth = "56789" # password Redis clients use to log in to the proxy (note: separate from requirepass in the Redis config)

# Set bind address for admin(rpc), tcp only.

admin_addr = "0.0.0.0:11080"

# Set bind address for proxy, proto_type can be "tcp", "tcp4", "tcp6", "unix" or "unixpacket".

proto_type = "tcp4"

proxy_addr = "0.0.0.0:19000" # bind address; Redis clients connect to this port

# External storage

jodis_name = "zookeeper" # external storage type

jodis_addr = "192.168.199.223:2181,192.168.199.224:2181,192.168.199.254:2181" # external storage address list

jodis_timeout = "20s"

# Session settings

session_recv_timeout = "0s" # a non-zero value may cause "write: broken pipe" errors in applications

Start the proxy

./admin/codis-proxy-admin.sh start

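#node1 runs both a proxy and the dashboard, so once started, the node1 proxy connects to the dashboard at 127.0.0.1 automatically. The proxy on node3 has to be added to the dashboard in FE, using its admin_addr (192.168.199.254:11080). Run the New Proxy operation promptly after starting a proxy: it times out after 30 seconds by default, and the proxy process exits on timeout.

If something goes wrong, check the logs:

cd $GOPATH/src/github.com/CodisLabs/codis/log
[root@jason log]# ls
codis-proxy.log.2018-12-28  codis-proxy.log.2018-12-29  codis-proxy.log.2019-01-02  codis-proxy.out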
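Judging by the file names in the listing, the proxy log is split by date, so the newest file is usually the one to read. The helper below, latestLog, is hypothetical. Zero-padded YYYY-MM-DD suffixes sort lexically in chronological order, so a plain string sort picks the newest file.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// latestLog returns the newest "<prefix>YYYY-MM-DD" name, or "" if none
// match. Zero-padded ISO dates sort lexically in date order.
func latestLog(names []string, prefix string) string {
	var dated []string
	for _, n := range names {
		if strings.HasPrefix(n, prefix) {
			dated = append(dated, n)
		}
	}
	if len(dated) == 0 {
		return ""
	}
	sort.Strings(dated)
	return dated[len(dated)-1]
}

func main() {
	// The names from the ls output above.
	names := []string{
		"codis-proxy.log.2018-12-28",
		"codis-proxy.log.2018-12-29",
		"codis-proxy.log.2019-01-02",
		"codis-proxy.out",
	}
	fmt.Println(latestLog(names, "codis-proxy.log."))
}
```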

Redis (same on all three hosts)

[root@test codis]# cd $GOPATH/src/github.com/CodisLabs/codis/config
cp redis.conf redis-6379.conf
cp redis.conf redis-6380.conf

6379 is the master config and 6380 the slave config. The two files differ only in the port and the paths derived from it.

cat redis-6379.conf
daemonize yes
pidfile /usr/local/codis/proc/redis-6379.pid
port 6379
timeout 86400
tcp-keepalive 60
loglevel notice
logfile /usr/local/codis/log/redis-6379.log
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error no
rdbcompression yes
dbfilename dump-6379.rdb
dir /usr/local/codis/data/redis_data_6379
masterauth "123456"
slave-serve-stale-data yes
repl-disable-tcp-nodelay no
slave-priority 100
requirepass "123456"
maxmemory 10gb
maxmemory-policy allkeys-lru
appendonly no
appendfsync everysec
no-appendfsync-on-rewrite yes
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-entries 512
list-max-ziplist-value 64
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 0 0 0
client-output-buffer-limit pubsub 0 0 0
hz 10
aof-rewrite-incremental-fsync yes
repl-backlog-size 33554432

cat redis-6380.conf
daemonize yes
pidfile "/usr/local/codis/proc/redis-6380.pid"
port 6380
timeout 86400
tcp-keepalive 60
loglevel notice
logfile "/usr/local/codis/log/redis-6380.log"
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error no
rdbcompression yes
dbfilename "dump-6380.rdb"
dir "/usr/local/codis/data/redis_data_6380"
masterauth "123456"
slave-serve-stale-data yes
repl-disable-tcp-nodelay no
slave-priority 100
requirepass "123456"
maxmemory 10gb
maxmemory-policy allkeys-lru
appendonly no
appendfsync everysec
no-appendfsync-on-rewrite yes
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-entries 512
list-max-ziplist-value 64
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 0 0 0
client-output-buffer-limit pubsub 0 0 0
hz 10
aof-rewrite-incremental-fsync yes
repl-backlog-size 32mb

Create the directories (proc holds the pid files named in the configs)

mkdir -p /usr/local/codis/{data,log,proc}

mkdir -p /usr/local/codis/data/{redis_data_6379,redis_data_6380}

Start Redis

cd $GOPATH/src/github.com/CodisLabs/codis
./bin/codis-server ./config/redis-6379.conf
./bin/codis-server ./config/redis-6380.conf

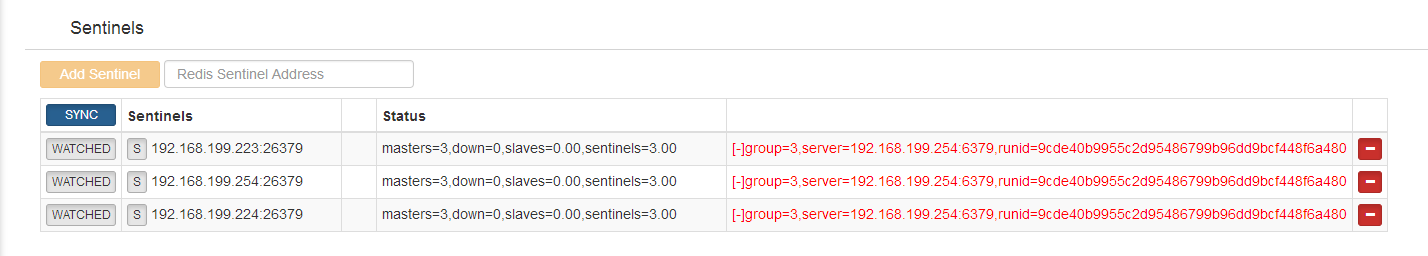

Redis-sentinel

Same on all three hosts

cd $GOPATH/src/github.com/CodisLabs/codis/config
[root@jason config]# cat sentinel.conf
bind 0.0.0.0
protected-mode no
port 26379
dir "/usr/local/codis/data"

Start sentinel

[root@test codis]# ./bin/redis-sentinel ./config/sentinel.conf

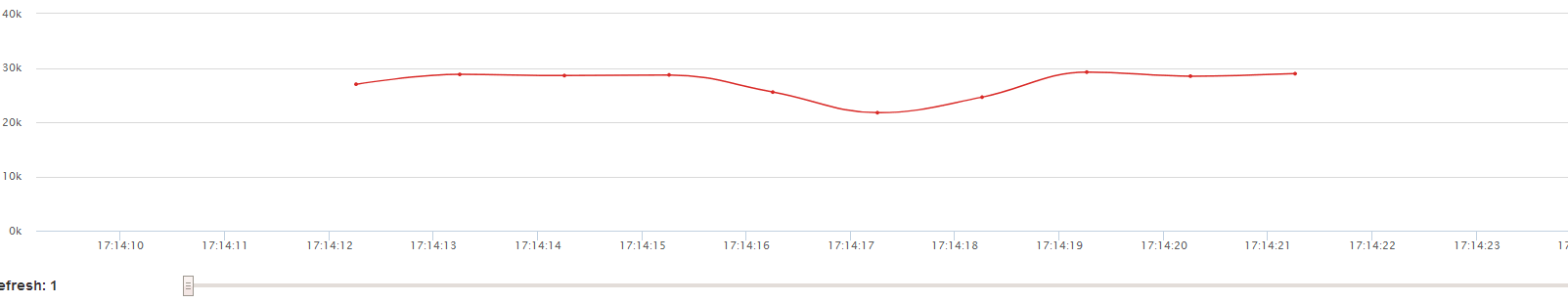

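Load testing through codis-proxy

Two codis-proxy instances are configured above; use one of them to load-test the deployed cluster.

Run redis-benchmark on one of the codis-server hosts:

cd $GOPATH/src/github.com/CodisLabs/codis

./bin/redis-benchmark -h 192.168.199.223 -p 19000 -q -n 1000000 -c 20 -d 100k

Note that the proxy sets session_auth, so the password must be passed with -a 56789. Also, redis-benchmark reads -d with atoi, so 100k is taken as a 100-byte value; use -d 102400 for 100 KB values.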

Output:

[root@localhost codis]# ./bin/redis-benchmark -h 10.0.10.44 -p 19000 -q -n 1000000 -c 20 -d 100k
PING_INLINE: 21769.42 requests per second
PING_BULK: 18310.32 requests per second
SET: 17011.14 requests per second
GET: 18086.45 requests per second
INCR: 17886.21 requests per second
LPUSH: 17146.48 requests per second
RPUSH: 17003.91 requests per second
LPOP: 17160.31 requests per second
RPOP: 17233.65 requests per second
SADD: 19542.32 requests per second
