1. Maven dependencies: pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.xiaostudy.flink</groupId>
        <artifactId>flink-kafka-to-hive</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <flink.version>1.9.0</flink.version>
            <flink-table.version>1.9.0-csa1.0.0.0</flink-table.version>
            <kafka.version>2.3.0</kafka.version>
            <hadoop.version>3.0.0</hadoop.version>
            <fastjson.version>1.2.70</fastjson.version>
            <hive.version>2.1.1</hive.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-connector-kafka_2.12</artifactId>
                <version>${flink.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-java_2.12</artifactId>
                <version>${flink.version}</version>
                <exclusions>
                    <exclusion>
                        <groupId>javax.el</groupId>
                        <artifactId>javax.el-api</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-clients_2.12</artifactId>
                <version>${flink.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-sql-parser</artifactId>
                <version>${flink-table.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka-clients</artifactId>
                <version>${kafka.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-table-api-java</artifactId>
                <version>1.10.0</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-table-planner-blink_2.11</artifactId>
                <version>${flink-table.version}</version>
            </dependency>

            <!-- hive -->
            <dependency>
                <groupId>org.apache.hive</groupId>
                <artifactId>hive-jdbc</artifactId>
                <version>${hive.version}</version>
            </dependency>

            <!-- hdfs -->
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>${hadoop.version}</version>
                <exclusions>
                    <exclusion>
                        <groupId>com.google.guava</groupId>
                        <artifactId>guava</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>

            <!-- mr2 -->
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-mapreduce-client-common</artifactId>
                <version>${hadoop.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
                <version>${hadoop.version}</version>
            </dependency>

            <dependency>
                <groupId>javax.el</groupId>
                <artifactId>javax.el-api</artifactId>
                <version>3.0.1-b06</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>${fastjson.version}</version>
            </dependency>
            <dependency>
                <groupId>org.oracle</groupId>
                <artifactId>ojdbc14</artifactId>
                <version>10.2.0.5</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <configuration>
                        <source>1.8</source>
                        <target>1.8</target>
                        <encoding>${project.build.sourceEncoding}</encoding>
                    </configuration>
                    <version>3.1</version>
                </plugin>

                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-shade-plugin</artifactId>
                    <version>2.2</version>
                    <configuration>
                        <filters>
                            <filter>
                                <artifact>*:*</artifact>
                                <excludes>
                                    <exclude>META-INF/*.SF</exclude>
                                    <exclude>META-INF/*.DSA</exclude>
                                    <exclude>META-INF/*.RSA</exclude>
                                </excludes>
                            </filter>
                        </filters>
                    </configuration>
                    <!--<executions>-->
                    <!--<execution>-->
                    <!--<phase>-->
                    <!--package-->
                    <!--</phase>-->
                    <!--<goals>-->
                    <!--<goal>-->
                    <!--shade-->
                    <!--</goal>-->
                    <!--</goals>-->
                    <!--<configuration>-->
                    <!--<transformers>-->
                    <!--<transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">-->
                    <!--<resource>-->
                    <!--reference.conf-->
                    <!--</resource>-->
                    <!--</transformer>-->
                    <!--</transformers>-->
                    <!--</configuration>-->
                    <!--</execution>-->
                    <!--</executions>-->
                </plugin>

                <!-- Package with the maven-assembly-plugin -->
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <version>3.2.0</version>
                    <configuration>
                        <archive>
                            <manifest>
                                <addClasspath>true</addClasspath>
                                <classpathPrefix>lib/</classpathPrefix>
                                <mainClass>com.xiaostudy.flink.job.StartMain</mainClass>
                            </manifest>
                        </archive>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                    </configuration>
                    <executions>
                        <execution>
                            <id>make-assembly</id>
                            <phase>package</phase>
                            <goals>
                                <goal>single</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>

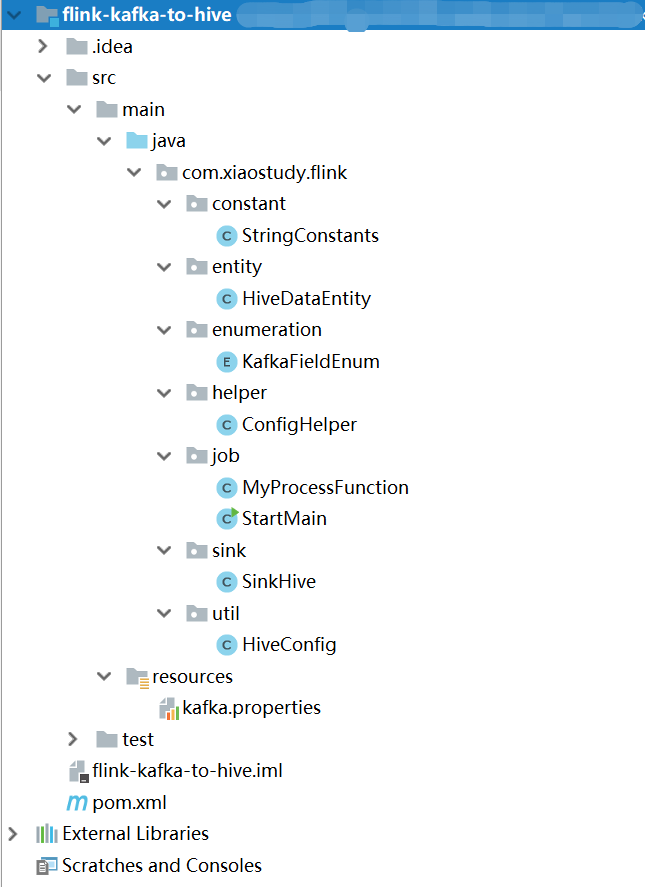

2. Code structure

3. Job entry point: StartMain.java

    package com.xiaostudy.flink.job;

    import com.xiaostudy.flink.entity.HiveDataEntity;
    import com.xiaostudy.flink.helper.ConfigHelper;
    import com.xiaostudy.flink.sink.SinkHive;
    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.CheckpointingMode;
    import org.apache.flink.streaming.api.TimeCharacteristic;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

    import java.util.Properties;

    public class StartMain {

        /**
         * Job entry point.
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) throws Exception {
            // Get the Flink runtime environment
            // 1. Build the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // // A restart strategy only applies once checkpointing is enabled
            // env.enableCheckpointing(30 * 1000);
            // // Restart strategy: on failure, restart up to 3 times, 10 seconds apart
            // env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 10000));
            // // Keep checkpoint data when the job fails or is cancelled manually
            // env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
            // // Set the checkpointing mode (when integrating with Kafka, the mode must be EXACTLY_ONCE)
            // env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

            env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
            // Trigger a checkpoint every 20 minutes
            env.enableCheckpointing(20 * 60 * 1000, CheckpointingMode.AT_LEAST_ONCE);

            // Kafka connection settings
            Properties properties = ConfigHelper.getKafkaProperties();
            FlinkKafkaConsumer<String> myConsumer = new FlinkKafkaConsumer<>("test", new SimpleStringSchema(), properties);
            myConsumer.setStartFromGroupOffsets();

            // Source input
            DataStreamSource<String> stringDataStreamSource = env.addSource(myConsumer);

            // Convert Kafka messages to entities
            SingleOutputStreamOperator<HiveDataEntity> outputStreamOperator = stringDataStreamSource.process(new MyProcessFunction());
            outputStreamOperator.addSink(new SinkHive()).name("kafka-to-hive");

            env.execute("kafka-to-hive-job");
        }
    }

4. ProcessFunction class

    package com.xiaostudy.flink.job;

    import com.xiaostudy.flink.constant.StringConstants;
    import com.xiaostudy.flink.entity.HiveDataEntity;
    import com.xiaostudy.flink.enumeration.KafkaFieldEnum;
    import org.apache.flink.streaming.api.functions.ProcessFunction;
    import org.apache.flink.util.Collector;

    import java.util.List;

    public class MyProcessFunction extends ProcessFunction<String, HiveDataEntity> {

        private static final long serialVersionUID = -2852475205749156550L;

        @Override
        public void processElement(String value, Context context, Collector<HiveDataEntity> out) {
            System.out.println(String.format("Message: %s", value));
            out.collect(convert(value));
        }

        // Converts one Kafka message into an entity; blank or malformed
        // messages produce an entity with no fields set.
        private static HiveDataEntity convert(String kafkaValue) {
            try {
                HiveDataEntity glycEntity = new HiveDataEntity();
                if (null == kafkaValue || kafkaValue.trim().length() <= 0) {
                    return glycEntity;
                }
                String[] values = kafkaValue.split(StringConstants.KAFKA_SPLIT_CHAR);
                List<String> fieldNames = KafkaFieldEnum.getNameList();
                if (fieldNames.size() != values.length) {
                    return glycEntity;
                }
                String sjsj = values[0];
                String ds = values[1];
                glycEntity.setDs(ds);
                glycEntity.setSjsj(sjsj);
                return glycEntity;
            } catch (Exception e) {
                e.printStackTrace();
                return new HiveDataEntity();
            }
        }
    }
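Note that `convert` returns an entity with no fields set for blank or malformed messages, and `processElement` still emits it, so the sink receives it like any other record. One possible way to let the caller skip such messages is sketched below. `KafkaLineParser`, `EXPECTED_FIELDS`, and the use of `Optional` are hypothetical and not part of the original project; the sketch covers only the parsing step and uses nothing beyond the JDK.

```java
import java.util.Optional;
import java.util.regex.Pattern;

/** Hypothetical helper (not in the original project): parse one "sjsj||ds" line. */
final class KafkaLineParser {

    // Literal "||" delimiter; Pattern.quote avoids hand-written regex escapes.
    private static final Pattern DELIMITER = Pattern.compile(Pattern.quote("||"));

    // Assumption: two fields per message, matching KafkaFieldEnum (sjsj, ds).
    private static final int EXPECTED_FIELDS = 2;

    private KafkaLineParser() {
    }

    /** Returns the fields, or an empty Optional for blank or malformed lines. */
    static Optional<String[]> parse(String line) {
        if (line == null || line.trim().isEmpty()) {
            return Optional.empty();
        }
        // A limit of -1 keeps trailing empty fields, so "a||" yields ["a", ""].
        String[] fields = DELIMITER.split(line, -1);
        return fields.length == EXPECTED_FIELDS ? Optional.of(fields) : Optional.empty();
    }
}
```

With a helper like this, `processElement` could call `out.collect(...)` only when `parse` returns a value, instead of forwarding an empty entity.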

5. SinkFunction class

    package com.xiaostudy.flink.sink;

    import com.xiaostudy.flink.constant.StringConstants;
    import com.xiaostudy.flink.entity.HiveDataEntity;
    import com.xiaostudy.flink.util.HiveConfig;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
    import org.apache.flink.streaming.api.functions.sink.SinkFunction;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;

    public class SinkHive extends RichSinkFunction<HiveDataEntity> implements SinkFunction<HiveDataEntity> {

        private Connection connection;
        private PreparedStatement statement;

        // 1. Initialization; normally runs only once
        @Override
        public void open(Configuration parameters) throws Exception {
            super.open(parameters);
            Class.forName(StringConstants.HIVE_DRIVER_NAME);
            connection = DriverManager.getConnection(HiveConfig.HIVE_ADDR, HiveConfig.HIVE_USER, HiveConfig.HIVE_PASSWORD);
            System.out.println("SinkHive-open");
        }

        private String ycHiveTableName = "hive_table";
        private String sql;

        // 2. Runs once per record
        @Override
        public void invoke(HiveDataEntity glycEntity, Context context) throws Exception {
            if (null == glycEntity) {
                return;
            }
            sql = String.format("insert into test.%s partition(dt=20210421) values ('%s','%s')",
                    ycHiveTableName, glycEntity.getSjsj(), glycEntity.getDs());
            // Close the statement left over from the previous record so statements do not leak
            if (statement != null) {
                statement.close();
            }
            statement = connection.prepareStatement(sql);
            statement.execute();
            System.out.println("SinkHive-invoke");
        }

        // 3. Cleanup; normally runs only once
        @Override
        public void close() throws Exception {
            super.close();
            if (statement != null) {
                statement.close();
            }
            if (connection != null) {
                connection.close();
            }
            System.out.println("SinkHive-close");
        }
    }
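The sink hard-codes the partition as `dt=20210421` and pastes field values straight into the SQL text. The sketch below shows one way the partition could instead be derived from the record's own timestamp and the values escaped before formatting. `HiveInsertSql` and its methods are hypothetical. It assumes that `sjsj` follows the `yyyy-MM-dd HH-mm-ss` format mentioned in the entity class comment, and that a backslash escapes a single quote inside a Hive string literal; check both against your data and Hive version.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper (not in the original project): build the INSERT statement text. */
final class HiveInsertSql {

    // Assumption: sjsj uses the format named in the HiveDataEntity comment.
    private static final DateTimeFormatter SJSJ_FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH-mm-ss");

    private HiveInsertSql() {
    }

    /** Derives the yyyyMMdd partition value from the record timestamp. */
    static String partitionOf(String sjsj) {
        return LocalDateTime.parse(sjsj, SJSJ_FORMAT)
                .toLocalDate()
                .format(DateTimeFormatter.BASIC_ISO_DATE);
    }

    /** Escapes backslashes and single quotes (assumed Hive literal escaping). */
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("'", "\\'");
    }

    /** Builds the same INSERT shape as SinkHive, with a computed partition. */
    static String build(String table, String sjsj, String ds) {
        return String.format("insert into test.%s partition(dt=%s) values ('%s','%s')",
                table, partitionOf(sjsj), escape(sjsj), escape(ds));
    }
}
```

For example, `HiveInsertSql.build("hive_table", "2021-04-21 10-30-00", "abc")` produces a statement targeting `dt=20210421`. A timestamp that does not match the assumed format makes `partitionOf` throw a `DateTimeParseException`, so the caller would need to decide how to handle such records.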

6. HiveConfig class

    package com.xiaostudy.flink.util;

    public class HiveConfig {

        // Hive connection settings
        public static String HIVE_ADDR = "jdbc:hive2://localhost:10001/test";
        public static String HIVE_USER = "liwei";
        public static String HIVE_PASSWORD = "liwei";
    }

7. Kafka configuration class

    package com.xiaostudy.flink.helper;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.Properties;

    public class ConfigHelper {

        /**
         * Cached Kafka configuration.
         */
        private final static Properties KAFKA_PROPERTIES = new Properties();

        /**
         * Returns a copy of the Kafka configuration, loading kafka.properties on first use.
         *
         * @return the Kafka configuration
         */
        public static Properties getKafkaProperties() {
            if (KAFKA_PROPERTIES.isEmpty()) {
                synchronized (KAFKA_PROPERTIES) {
                    loadProperties(KAFKA_PROPERTIES, "kafka.properties");
                }
            }
            Properties properties = new Properties();
            for (String key : KAFKA_PROPERTIES.stringPropertyNames()) {
                properties.setProperty(key, KAFKA_PROPERTIES.getProperty(key));
            }
            return properties;
        }

        /**
         * Loads a classpath resource into the given properties object if it is still empty.
         *
         * @param properties target properties object
         * @param file       name of the classpath resource
         */
        private static synchronized void loadProperties(Properties properties, String file) {
            if (properties.isEmpty()) {
                try (InputStream in = Thread.currentThread().getContextClassLoader().getResourceAsStream(file)) {
                    // getResourceAsStream returns null when the resource is missing
                    if (in == null) {
                        throw new RuntimeException("Configuration file not found: " + file);
                    }
                    properties.load(in);
                    if (properties.isEmpty()) {
                        throw new RuntimeException("Configuration file is empty");
                    }
                } catch (IOException e) {
                    throw new RuntimeException("Failed to read the Kafka configuration file", e);
                }
            }
        }
    }

8. Kafka field enum

    package com.xiaostudy.flink.enumeration;

    import java.util.ArrayList;
    import java.util.List;

    /**
     * Kafka data fields; the order must not be changed.
     *
     * @author liwei
     * @date 2021/3/5 21:32
     */
    public enum KafkaFieldEnum {
        SJSJ("sjsj"),
        DS("ds");

        private String name;

        private KafkaFieldEnum(String name) {
            this.name = name;
        }

        public static List<String> getNameList() {
            List<String> list = new ArrayList<>();
            KafkaFieldEnum[] values = KafkaFieldEnum.values();
            for (KafkaFieldEnum provinceCodeEnum : values) {
                list.add(provinceCodeEnum.name);
            }
            return list;
        }
    }
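The order of the enum constants matters because the process function reads message fields by position (`values[0]` is `sjsj`, `values[1]` is `ds`). The sketch below makes that positional contract explicit by pairing each split value with the field declared at the same ordinal. `FieldMapping` and its nested `Field` enum are hypothetical stand-ins for `KafkaFieldEnum`, kept local so the example compiles on its own.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical illustration (not in the original project) of positional field mapping. */
final class FieldMapping {

    // Stand-in for KafkaFieldEnum; declaration order defines the message layout.
    enum Field {
        SJSJ("sjsj"),
        DS("ds");

        final String fieldName;

        Field(String fieldName) {
            this.fieldName = fieldName;
        }
    }

    private FieldMapping() {
    }

    /** Maps each value to the field declared at the same position. */
    static Map<String, String> toMap(String[] values) {
        Field[] fields = Field.values();
        if (values.length != fields.length) {
            throw new IllegalArgumentException(
                    "Expected " + fields.length + " fields but got " + values.length);
        }
        Map<String, String> result = new LinkedHashMap<>();
        for (Field field : fields) {
            result.put(field.fieldName, values[field.ordinal()]);
        }
        return result;
    }
}
```

Reordering the constants in such an enum would silently swap which value lands in which field, which is why the original comment asks that the order stay fixed.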

9. Hive entity class

    package com.xiaostudy.flink.entity;

    import java.io.Serializable;

    /**
     * @author liwei
     * @since 2021-03-10
     */
    public class HiveDataEntity implements Serializable {

        private static final long serialVersionUID = 1L;

        // Data timestamp, yyyy-MM-dd HH-mm-ss
        private String sjsj;

        private String ds;

        public String getSjsj() {
            return sjsj;
        }

        public void setSjsj(String sjsj) {
            this.sjsj = sjsj;
        }

        public String getDs() {
            return ds;
        }

        public void setDs(String ds) {
            this.ds = ds;
        }
    }

10. Constants class

    package com.xiaostudy.flink.constant;

    public class StringConstants {

        // Regex for the literal "||" delimiter; each pipe must be escaped
        public static final String KAFKA_SPLIT_CHAR = "\\|\\|";

        public static String HIVE_DRIVER_NAME = "org.apache.hive.jdbc.HiveDriver";
    }
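`String.split` treats its argument as a regular expression, and `|` is the alternation operator, so the delimiter constant has to escape both pipes. The small sketch below contrasts an unescaped pattern with two working alternatives. `SplitDemo` is a hypothetical class used only for illustration.

```java
import java.util.regex.Pattern;

/** Hypothetical demo (not in the original project) of splitting on a literal "||". */
final class SplitDemo {

    private SplitDemo() {
    }

    // Unescaped: the regex "||" matches the empty string, so the input
    // is split between every character. "a||b" becomes [a, |, |, b].
    static String[] splitUnescaped(String s) {
        return s.split("||");
    }

    // Escaped by hand: matches the two-character delimiter. "a||b" becomes [a, b].
    static String[] splitEscaped(String s) {
        return s.split("\\|\\|");
    }

    // Pattern.quote builds the same literal match without manual escapes.
    static String[] splitQuoted(String s) {
        return s.split(Pattern.quote("||"));
    }
}
```

Either of the last two forms splits a message such as `2021-04-21 10-30-00||abc` into the two fields the process function expects.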

11. kafka.properties

    bootstrap.servers=localhost:9092
    group.id=test1
    enable.auto.commit=true
    auto.commit.interval.ms=1000
    session.timeout.ms=300000
    key.serializer=org.apache.kafka.common.serialization.StringSerializer
    value.serializer=org.apache.kafka.common.serialization.StringSerializer
    message.timeout.ms=300000
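This file is passed to a Kafka consumer, yet `key.serializer` and `value.serializer` are producer settings in the Kafka Java client, and `message.timeout.ms` does not appear to be a Java consumer setting either. If you prefer to hand the consumer only keys it is expected to use, a filter along the following lines is one option. `ConsumerProps` and its key list are hypothetical, and the choice of which keys to drop is an assumption to verify against your Kafka client version.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Properties;
import java.util.Set;

/** Hypothetical helper (not in the original project): drop keys a consumer does not need. */
final class ConsumerProps {

    // Assumption: these keys from kafka.properties are not consumer settings.
    private static final Set<String> NON_CONSUMER_KEYS = new HashSet<>(Arrays.asList(
            "key.serializer",
            "value.serializer",
            "message.timeout.ms"));

    private ConsumerProps() {
    }

    /** Returns a copy of the source properties without the keys listed above. */
    static Properties consumerOnly(Properties source) {
        Properties result = new Properties();
        for (String key : source.stringPropertyNames()) {
            if (!NON_CONSUMER_KEYS.contains(key)) {
                result.setProperty(key, source.getProperty(key));
            }
        }
        return result;
    }
}
```

The result of `ConfigHelper.getKafkaProperties()` could be passed through `ConsumerProps.consumerOnly(...)` before constructing the `FlinkKafkaConsumer`.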

12. Run command, for example:

    flink run -m yarn-cluster -yjm 1G -ytm 1G -yn 1 -ys 1 -c com.xiaostudy.flink.job.StartMain /.../flink-kafka-to-hive.jar

Or:

    /.../flink/bin/yarn-session.sh -n 7 -s 15 -jm 8g -tm 32g -nm flink_kafka_to_hive -d
    /.../bin/flink run -yid application_***_0001 /.../flink-kafka-to-hive.jar

Note: application_***_0001 is the ID of the YARN session started by the yarn-session.sh command above.