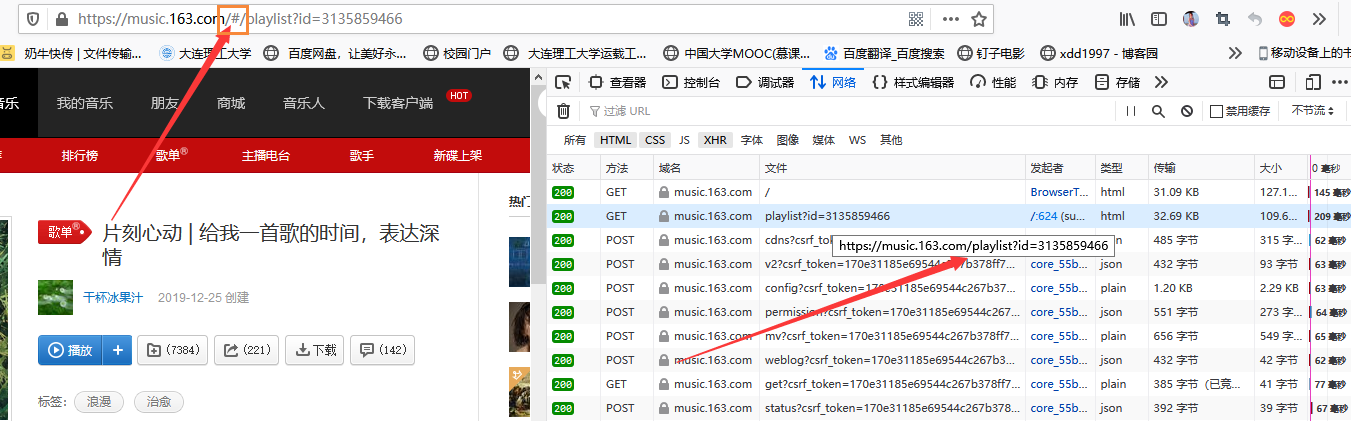

以网易云歌单为例:https://music.163.com/#/playlist?id=3212113629

坑在于要提交的网站,从下图可以看到要提交的网站是https://music.163.com/playlist?id=3212113629,而非直接复制的https://music.163.com/#/playlist?id=3212113629

开始工作

首先获取页面html

import requests from bs4 import BeautifulSoup url = "https://music.163.com/playlist?id=3212113629" # 注意直接复制的地址有个#,这里要去掉 demo = requests.get(url).text soup = BeautifulSoup(demo, "html.parser")

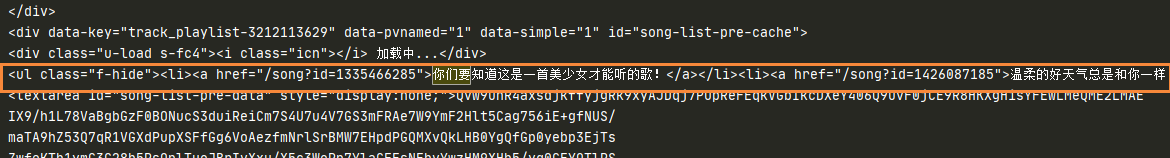

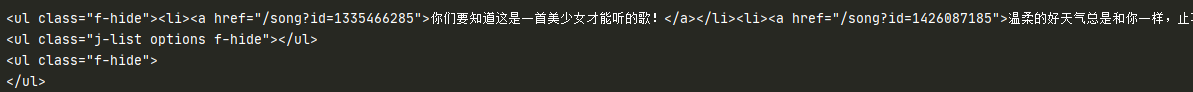

通过打印soup可以看到要下载的内容在ul标签

for ss in soup.find_all('ul'): print(ss)

运行会发现,有许多ul标签

可以进行二次查找

for ss0 in soup.find_all('ul',{"class":"f-hide"}): print(ss0) 或者 for ss0 in soup.find_all('ul',class:="f-hide"):

完整代码为:

# write by xdd1997 xdd2026@qq.com # 2020-08-07 import requests from bs4 import BeautifulSoup url = "https://music.163.com/playlist?id=3212113629" demo = requests.get(url).text soup = BeautifulSoup(demo, "html.parser") for ss0 in soup.find_all('ul',{"class":"f-hide"}): for ii in ss0.find_all('a'): print(ii.string)

更新,添加头部信息 2020-08-20

第二天运行了几次后,发现不行了,应该是网易云有来源审查反爬机制,故添加了头部信息

#encoding = utf8 # write by xdd1997 xdd2026@qq.com # 2020-08-20 import requests from bs4 import BeautifulSoup

url = "https://music.163.com/playlist?id=5138652624" # 注意直接复制的网址要去掉#号 try: kv = {'user-agent':'Mozilla/5.0'} #应对爬虫审查 r = requests.get(url,headers=kv) r.raise_for_status() #若返回值不是202,则抛出一个异常 r.encoding = r.apparent_encoding except: print("进入网站失败") demo = r.text soup = BeautifulSoup(demo, "html.parser") #print(soup) index = 0 for ss in soup.find_all('ul',{"class":"f-hide"}): # 查找<ul class="f-hide"> ...</ul> for ii in ss.find_all('a'): # print(ii.string) index = index + 1 print( str(index) + ' '+ '点歌 ' + ii.string) ''' for i in soup.ul.descendants: print(i.string) print('------------------------------------------') for i in soup.ul.children: print(i.string) '''