    2020-06-01 03:51:36 [scrapy.middleware] INFO: Enabled extensions:
    ['scrapy.extensions.corestats.CoreStats',
     'scrapy.extensions.telnet.TelnetConsole',
     'scrapy.extensions.memusage.MemoryUsage',
     'scrapy.extensions.logstats.LogStats']
    2020-06-01 03:51:36 [twisted] CRITICAL: Unhandled error in Deferred:
    2020-06-01 03:51:36 [twisted] CRITICAL:
    Traceback (most recent call last):
      File "/home/jji/.local/lib/python3.7/site-packages/twisted/internet/defer.py", line 1418, in _inlineCallbacks
        result = g.send(result)
      File "/home/jji/.local/lib/python3.7/site-packages/scrapy/crawler.py", line 79, in crawl
        self.spider = self._create_spider(*args, **kwargs)
      File "/home/jji/.local/lib/python3.7/site-packages/scrapy/crawler.py", line 102, in _create_spider
        return self.spidercls.from_crawler(self, *args, **kwargs)
      File "/home/jji/.local/lib/python3.7/site-packages/scrapy/spiders/__init__.py", line 51, in from_crawler
        spider = cls(*args, **kwargs)
    TypeError: __init__() got an unexpected keyword argument '_job'

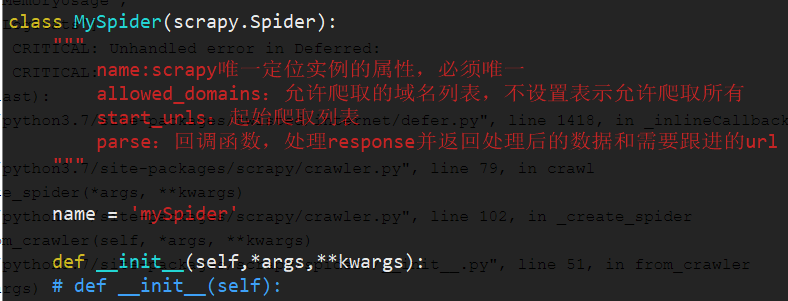

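The solution to this problem:

Change the spider's `__init__` function so that it accepts arbitrary keyword arguments (`**kwargs`) and passes them on to `super().__init__()`. After that, the error goes away.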