None of the Hadoop daemons started, including the namenode and the datanode. jps shows no process other than itself.

The .out file contains the following:

    2020-10-19 20:10:50,206 ERROR [main] namenode.NameNode (NameNode.java:1587) - Failed to start namenode.
    java.net.SocketException: Permission denied
        at sun.nio.ch.Net.bind0(Native Method) ~[?:1.8.0_191]
        at sun.nio.ch.Net.bind(Net.java:433) ~[?:1.8.0_191]
        at sun.nio.ch.Net.bind(Net.java:425) ~[?:1.8.0_191]
        at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223) ~[?:1.8.0_191]
        at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74) ~[?:1.8.0_191]
        at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216) ~[jetty-6.1.26.jar:6.1.26]
        at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:934) ~[hadoop-common-2.7.7.jar:?]
        at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:876) ~[hadoop-common-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:142) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:761) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:640) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:820) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:804) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1516) ~[hadoop-hdfs-2.7.7.jar:?]
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1582) [hadoop-hdfs-2.7.7.jar:?]

The error portion of the .log file:

    2020-10-19 20:10:50,024 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs
    2020-10-19 20:10:50,168 INFO org.apache.hadoop.http.HttpServer2: Added filter 'org.apache.hadoop.hdfs.web.AuthFilter' (class=org.apache.hadoop.hdfs.web.AuthFilter)
    2020-10-19 20:10:50,169 INFO org.apache.hadoop.http.HttpServer2: addJerseyResourcePackage: packageName=org.apache.hadoop.hdfs.server.namenode.web.resources;org.apache.hadoop.hdfs.web.resources, pathSpec=/webhdfs/v1/*
    2020-10-19 20:10:50,200 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException
    java.net.SocketException: Permission denied
        at sun.nio.ch.Net.bind0(Native Method)
        at sun.nio.ch.Net.bind(Net.java:433)
        at sun.nio.ch.Net.bind(Net.java:425)
        at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)
        at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)
        at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)
        at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:934)
        at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:876)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:142)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:761)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:640)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:820)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:804)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1516)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1582)
    2020-10-19 20:10:50,202 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping NameNode metrics system...
    2020-10-19 20:10:50,203 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system stopped.
    2020-10-19 20:10:50,203 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system shutdown complete.
    2020-10-19 20:10:50,217 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1

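I still had no idea where the problem lay, and searching online turned up nothing. The only hit was an official FAQ that mentions this error; its explanation is that the program is trying to use a port below 1024. But I went through every configuration file, and none of them uses a port below 1024.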
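To test a single port outside Hadoop, the bind that fails inside HttpServer2.openListeners can be reproduced with a plain socket. This is a minimal sketch, not part of Hadoop: `probe_bind` is a hypothetical helper, and 50070 is used only because it is the Hadoop 2.x default for `dfs.namenode.http-address` (an assumption; check your own hdfs-site.xml). On Windows, a port inside an excluded range typically fails with `PermissionError` (WinError 10013), which is the condition Java reports as `SocketException: Permission denied`.

```python
import socket


def probe_bind(port: int, host: str = "0.0.0.0") -> str:
    """Try to bind a TCP socket to (host, port) and report the outcome.

    Returns "ok" if the bind succeeds, "permission denied" if the OS refuses
    it (e.g. a reserved/excluded port on Windows, or a port below 1024 for a
    non-root user on Linux), or "error: ..." for any other failure, such as
    the port already being in use.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
        return "ok"
    except PermissionError:
        return "permission denied"
    except OSError as exc:
        return f"error: {exc}"
    finally:
        # Release the port immediately; we only wanted to test the bind.
        sock.close()


if __name__ == "__main__":
    # 50070: assumed Hadoop 2.x default NameNode HTTP port.
    print(50070, probe_bind(50070))
```

Run it as the same user that starts the namenode. A "permission denied" result on a port above 1024 points away from the Hadoop configuration and toward the operating system reserving that port.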

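The problem started after I installed Docker Desktop on this machine, so I suspected it had interfered with something. Still, I had no real lead, so I decided to uninstall Docker for Windows and see what happened.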

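Uninstalling Docker did not help. After several days of fiddling, I installed docker-hive inside Docker, and it also failed to start with an error. Searching for that error, I found a Stack Overflow post saying that some ports are reserved and cannot be bound, along with this command: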

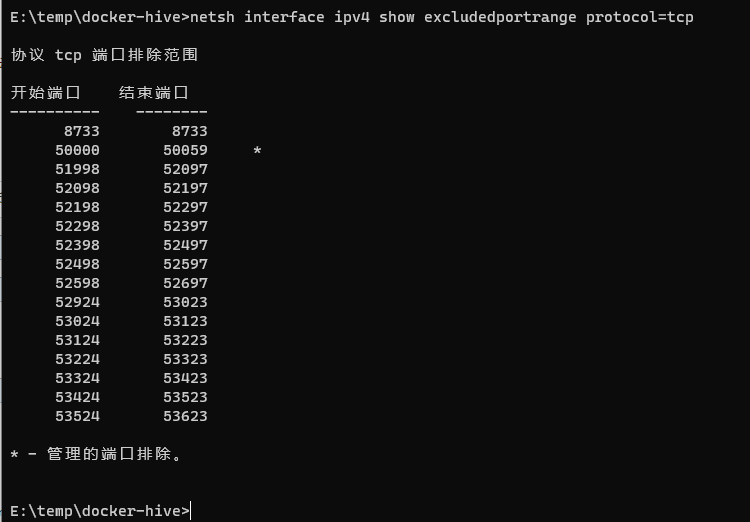

    netsh interface ipv4 show excludedportrange protocol=tcp

Running the command produced the following result:

(The result in the image above is from after my change.)
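With several ports to check, reading the table by eye is error-prone. A minimal sketch, assuming the output of the netsh command above has been saved as text (for example by redirecting it to a file): it extracts each start/end pair and reports which ports fall inside an excluded range. The sample output below is hypothetical, not the author's actual result, and the port list is assumed Hadoop 2.x defaults that should be replaced with the ports in your own configuration.

```python
from __future__ import annotations

import re

# Hypothetical output of `netsh interface ipv4 show excludedportrange protocol=tcp`.
SAMPLE_OUTPUT = """
Protocol tcp Port Exclusion Ranges

Start Port    End Port
----------    --------
      5357        5357
     50000       50000     *
     50051       50150

* - Administered port exclusions.
"""

# A data row is two integers, optionally followed by "*" (administered exclusion).
ROW = re.compile(r"^\s*(\d+)\s+(\d+)\s*\*?\s*$")


def parse_excluded_ranges(text: str) -> list[tuple[int, int]]:
    """Return inclusive (start, end) pairs from netsh excludedportrange output."""
    ranges = []
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            ranges.append((int(match.group(1)), int(match.group(2))))
    return ranges


def blocked_ports(ports: list[int], ranges: list[tuple[int, int]]) -> dict[int, tuple[int, int]]:
    """Map each port that lies inside an excluded range to that range."""
    hits = {}
    for port in ports:
        for start, end in ranges:
            if start <= port <= end:
                hits[port] = (start, end)
                break
    return hits


if __name__ == "__main__":
    # Assumed Hadoop 2.x default HDFS ports; check your *-site.xml files.
    hadoop_ports = [50010, 50020, 50070, 50075, 50090]
    ranges = parse_excluded_ranges(SAMPLE_OUTPUT)
    print(blocked_ports(hadoop_ports, ranges))
```

Only numeric rows are matched, so localized headers (for example on a Chinese-language Windows) do not affect the parsing.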

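So I searched Baidu for "管理的端口排除" ("administered port exclusions") and found this article. At that point I finally understood what was going on!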

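The cause: Hyper-V had been enabled before installing Docker for Windows, and the system had reserved a range of ports for Hyper-V. After following that article (changing the dynamic port range), Hadoop started normally.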
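Changing the dynamic port range comes down to simple range arithmetic: netsh describes the range as a start port plus a count, so it covers start through start + num - 1, and the commonly reported explanation is that Hyper-V takes its reserved blocks from inside that range. The sketch below uses hypothetical helpers (not from the referenced article) to check which ports lie inside a candidate range and to build the corresponding `netsh int ipv4 set dynamicport tcp` command, which needs an elevated prompt. The candidate range start=51000 is an illustrative assumption, not the value the author used; the port list is again assumed Hadoop 2.x defaults.

```python
from __future__ import annotations


def dynamic_range(start: int, num: int) -> tuple[int, int]:
    """Inclusive (first, last) port of a dynamic range given netsh's start/num."""
    return start, start + num - 1


def conflicts(ports: list[int], start: int, num: int) -> list[int]:
    """Ports that fall inside the dynamic range, where Hyper-V may reserve blocks."""
    first, last = dynamic_range(start, num)
    return [p for p in ports if first <= p <= last]


def set_command(start: int, num: int) -> str:
    """Build the netsh command that sets the IPv4 TCP dynamic port range."""
    first, last = dynamic_range(start, num)
    # The range must stay within the valid TCP port space.
    if first < 1 or last > 65535:
        raise ValueError(f"range {first}-{last} is outside 1-65535")
    return f"netsh int ipv4 set dynamicport tcp start={start} num={num}"


if __name__ == "__main__":
    hadoop_ports = [50010, 50020, 50070, 50075, 50090]  # assumed Hadoop 2.x defaults
    # Windows' default dynamic range: start=49152 num=16384, i.e. 49152-65535.
    print(conflicts(hadoop_ports, 49152, 16384))  # all five ports are inside it
    # Hypothetical candidate range (51000-65535) that leaves the 500xx ports alone.
    print(conflicts(hadoop_ports, 51000, 14536))
    print(set_command(51000, 14536))
```

Note that the Hadoop 2.x default ports already sit inside Windows' default dynamic range, which is one plausible reason the range had to be moved rather than simply restored; running the show command again after the change (and, as is often advised, a reboot) confirms whether the ports are free.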