花了好多天去推导RBM公式,只能说数学是硬伤,推导过程在后面给出大概,看了下yusugomori的java版源码,又花了一天时间来写C++版本,其主要思路参照yusugomori。发现java和C++好多地方差不多,呵呵。本人乃初学小娃,错误难免,多多指教。

出处:http://www.cnblogs.com/wn19910213/p/3441707.html

RBM.h

1 #include <iostream> 2 3 using namespace std; 4 5 class RBM 6 { 7 public: 8 size_t N; 9 size_t n_visible; 10 size_t n_hidden; 11 double **W; 12 double *hbias; 13 double *vbias; 14 15 RBM(size_t,size_t,size_t,double**,double*,double*); 16 ~RBM(); 17 18 void contrastive_divergence(int *,double,int); 19 void sample_h_given_v(int*,double*,int*); 20 double sigmoid(double); 21 double Vtoh_sigm(int* ,double* ,int ); 22 void gibbs_hvh(int* ,double* ,int* ,double* ,int* ); 23 double HtoV_sigm(int* ,int ,int ); 24 void sample_v_given_h(int* ,double* ,int* ); 25 void reconstruct(int* ,double*); 26 27 private: 28 };

RBM.cpp

#include <iostream> #include <stdlib.h> #include <stdio.h> #include <cmath> #include <cstring> #include "RBM.h" using namespace std; void test_rbm(); double uniform(double ,double); double binomial(double ); int main() { test_rbm(); return 0; } //start void test_rbm() { srand(0); size_t train_N = 6; size_t test_N = 2; size_t n_visible = 6; size_t n_hidden = 3; double learning_rate = 0.1; int training_num = 1000; int k = 1; int train_data[6][6] = { {1, 1, 1, 0, 0, 0}, {1, 0, 1, 0, 0, 0}, {1, 1, 1, 0, 0, 0}, {0, 0, 1, 1, 1, 0}, {0, 0, 1, 0, 1, 0}, {0, 0, 1, 1, 1, 0} }; RBM rbm(train_N,n_visible,n_hidden,NULL,NULL,NULL); //第一步、构造 for(size_t j=0;j<training_num;j++) { for(size_t i=0;i<train_N;i++) { rbm.contrastive_divergence(train_data[i],learning_rate,k); //第二步、训练数据集 } } //测试数据 int test_data[2][6] = { {1,1,0,0,0,0}, {0,0,0,1,1,0} }; double reconstructed_data[2][6]; for(size_t i=0;i<test_N;i++) { rbm.reconstruct(test_data[i],reconstructed_data[i]); //第三步、重构数据,其主要过程就是说把训练出来的权重,偏移量拿出来对测试数据先转换到隐含层,在转换回来。 for(size_t j=0;j<n_visible;j++) { cout << reconstructed_data[i][j] << " "; } cout << endl; } } void RBM::reconstruct(int* test_data,double* reconstructed_data) { double* h = new double[n_hidden]; double temp; for(size_t i=0;i<n_hidden;i++) { h[i] = Vtoh_sigm(test_data,W[i],hbias[i]); } for(size_t i=0;i<n_visible;i++) { temp = 0.0; for(size_t j=0;j<n_hidden;j++) { temp += W[j][i] * h[j]; } temp += vbias[i]; reconstructed_data[i] = sigmoid(temp); } delete[] h; } //第二步1、CD-K void RBM::contrastive_divergence(int *train_data,double learning_rate,int k) { double* ph_sigm_out = new double[n_hidden]; int* ph_sample = new int[n_hidden]; double* nv_sigm_outs = new double[n_visible]; int* nv_samples = new int[n_visible]; double* nh_sigm_outs = new double[n_hidden]; int* nh_samples = new int[n_hidden]; sample_h_given_v(train_data,ph_sigm_out,ph_sample); //获得h0 for(size_t i=0;i<k;i++) //根据hinton教授指出只需要抽样到V1即可有足够好的近似,所以k=1 { if(i == 0) { gibbs_hvh(ph_sample,nv_sigm_outs,nv_samples,nh_sigm_outs,nh_samples); //获得V1,h1 } else { gibbs_hvh(nh_samples,nv_sigm_outs,nv_samples,nh_sigm_outs,nh_samples); } } //更新权值,双向偏移量。由于hinton提出的CD-K,可以知道其v0代表的是原始数据x //h0即ph_sigm_out,h0近似等于对v0下h的概率 //v1即代表的是经过一次转换后的x,近似等于对h0下v的概率。 //h1同理。CD-K主要就是求出这个三个数据,便能够很好的近似计算梯度。至于为什么我也不知道。 for(size_t i=0;i<n_hidden;i++) { for(size_t j=0;j<n_visible;j++) { //可以根据权重公式发现,其实P(hi=1|v)代表的就是h0,p(hi=1|Vyk)和Vyk代表的就是h1和V1. W[i][j] += learning_rate * (ph_sigm_out[i] * train_data[j] - nh_sigm_outs[i] * nv_samples[j]) / N; } hbias[i] += learning_rate * (ph_sample[i] - nh_sigm_outs[i]) / N; } for(size_t i=0;i<n_visible;i++) { vbias[i] += learning_rate * (train_data[i] - nv_samples[i]) / N; } delete[] ph_sigm_out; delete[] ph_sample; delete[] nv_sigm_outs; delete[] nv_samples; delete[] nh_sigm_outs; delete[] nh_samples; } void RBM::gibbs_hvh(int* ph_sample,double* nv_sigm_outs,int* nv_samples,double* nh_sigm_outs,int* nh_samples) { sample_v_given_h(ph_sample,nv_sigm_outs,nv_samples); sample_h_given_v(nv_samples,nh_sigm_outs,nh_samples); } void RBM::sample_v_given_h(int* h0_sample,double* nv_sigm_outs,int* nv_samples) { for(size_t i=0;i<n_visible;i++) { nv_sigm_outs[i] = HtoV_sigm(h0_sample,i,vbias[i]); nv_samples[i] = binomial(nv_sigm_outs[i]); } } double RBM::HtoV_sigm(int* h0_sample,int i,int vbias) { double temp = 0.0; for(size_t j=0;j<n_hidden;j++) { temp += W[j][i] * h0_sample[j]; } temp += vbias; return sigmoid(temp); } void RBM::sample_h_given_v(int* train_data,double* ph_sigm_out,int* ph_sample) { for(size_t i=0;i<n_hidden;i++) { ph_sigm_out[i] = Vtoh_sigm(train_data,W[i],hbias[i]); ph_sample[i] = binomial(ph_sigm_out[i]); } } double binomial(double p) { if(p<0 || p>1){ return 0; } double r = rand()/(RAND_MAX + 1.0); if(r < p) { return 1; } else { return 0; } } double RBM::Vtoh_sigm(int* train_data,double* W,int hbias) { double temp = 0.0; for(size_t i=0;i<n_visible;i++) { temp += W[i] * train_data[i]; } temp += hbias; return sigmoid(temp); } double RBM::sigmoid(double x) { return 1.0/(1.0 + exp(-x)); } RBM::RBM(size_t train_N,size_t n_v,size_t n_h,double **w,double *hb,double *vb) { N = train_N; n_visible = n_v; n_hidden = n_h; if(w == NULL) { W = new double*[n_hidden]; double a = 1.0/n_visible; for(size_t i=0;i<n_hidden;i++) { W[i] = new double[n_visible]; } for(size_t i=0;i<n_hidden;i++) { for(size_t j=0;j<n_visible;j++) { W[i][j] = uniform(-a,a); } } } else { W = w; } if(hb == NULL) { hbias = new double[n_hidden]; for(size_t i=0;i<n_hidden;i++) { hbias[i] = 0.0; } } else { hbias = hb; } if(vb == NULL) { vbias = new double[n_visible]; for(size_t i=0;i<n_visible;i++) { vbias[i] = 0.0; } } else { vbias = vb; } } RBM::~RBM() { for(size_t i=0;i<n_hidden;i++) { delete[] W[i]; } delete[] W; delete[] hbias; delete[] vbias; } double uniform(double min,double max) { return rand() / (RAND_MAX + 1.0) * (max - min) + min; }

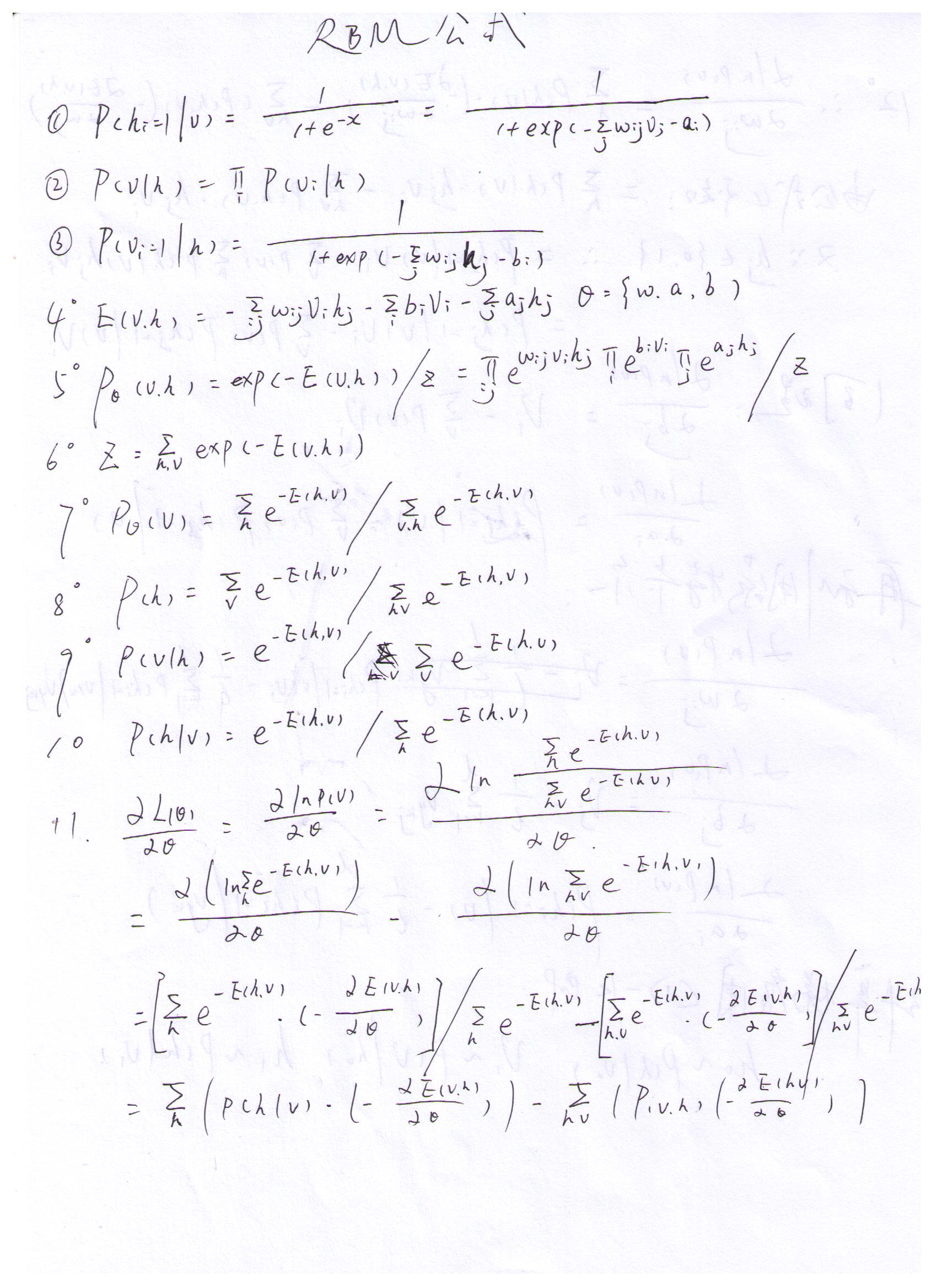

推导过程: