Scraping historical weather data with Python

Official site: http://lishi.tianqi.com/luozhuangqu/201802.html

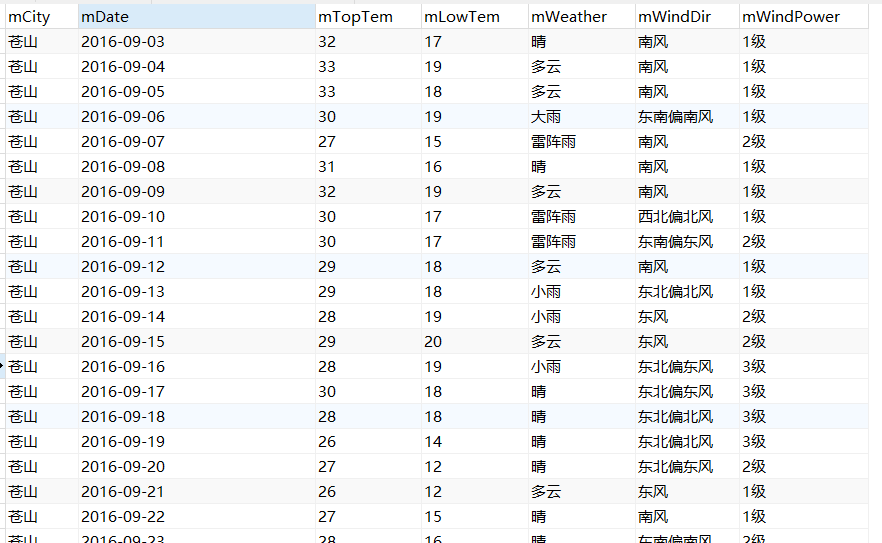

    # encoding: utf-8
    import requests
    from bs4 import BeautifulSoup
    import pymysql
    import pandas as pd

    # Months to fetch as YYYYMM strings: 201609 through 201808.
    # (The original month-end range with end='2018-09' also stopped at August 2018.)
    date_list = [x.strftime('%Y%m') for x in pd.date_range(start='2016-09', end='2018-08', freq='MS')]

    url_str = "http://lishi.tianqi.com/"
    # citys = ["苍山", "费县", "河东区", "莒南", "临沭", "兰山市", "罗庄区", "蒙阴", "平邑", "郯城", "沂南", "沂水"]
    # city_code = ["cangshan", "feixian", "hedong", "junan", "linshu", "lanshan", "luozhuangqu", "mengyin", "pingyi",
    #              "tancheng", "yinan", "yishui"]
    cities = {"yishui": "沂水"}  # URL slug -> city name stored in the database

    # Build one (city name, URL) pair per city and month
    urls = []
    for code, name in cities.items():
        for date_item in date_list:
            urls.append((name, url_str + code + "/" + date_item + ".html"))

    # Open the database connection once and reuse it for every insert
    conn = pymysql.connect(host='localhost', user='root', password='123456',
                           database='weather', charset='utf8')
    cursor = conn.cursor()
    # Parameterized INSERT: the driver escapes the values instead of string formatting
    sql = ("insert into mWeather (mCity,mDate,mTopTem,mLowTem,mWeather,mWindDir,mWindPower) "
           "values (%s,%s,%s,%s,%s,%s,%s)")

    # Scrape the data
    try:
        for tCity, url in urls:
            response = requests.get(url, timeout=10)
            soup = BeautifulSoup(response.text, 'html.parser')
            for weather in soup.select('div[class="tqtongji2"]'):
                # Skip the first <ul> (presumably the header row); each later <ul> is one day
                for ul in weather.select('ul')[1:]:
                    li_list = ul.select('li')
                    if len(li_list) < 6:
                        continue  # incomplete row, nothing to store
                    # get_text() also works when the <li> wraps its text in another tag
                    tDate, tTopTem, tLowTem, tWeather, tWindDir, tWindPower = [
                        li.get_text(strip=True) for li in li_list[:6]]
                    cursor.execute(sql, (tCity, tDate, tTopTem, tLowTem, tWeather, tWindDir, tWindPower))
                    print(tCity + " " + tDate + " data ----- scraped successfully!")
            conn.commit()  # commit once per page
    finally:
        cursor.close()
        conn.close()
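
To try the storage step without a running MySQL server, it can be sketched against an in-memory SQLite database. This is only a stand-in, not the setup used above. The column names are taken from the INSERT statement in the script, while the `TEXT` column types, the `save_rows` helper, and the sample rows are assumptions made up for illustration. Note that `sqlite3` uses `?` placeholders where `pymysql` uses `%s`.

```python
import sqlite3

# Column names follow the script's INSERT statement; the TEXT types are an assumption.
SCHEMA = """
CREATE TABLE IF NOT EXISTS mWeather (
    mCity TEXT, mDate TEXT, mTopTem TEXT, mLowTem TEXT,
    mWeather TEXT, mWindDir TEXT, mWindPower TEXT
)
"""

INSERT_SQL = (
    "INSERT INTO mWeather "
    "(mCity, mDate, mTopTem, mLowTem, mWeather, mWindDir, mWindPower) "
    "VALUES (?, ?, ?, ?, ?, ?, ?)"  # sqlite3 placeholder style; pymysql would use %s
)


def save_rows(conn, rows):
    """Hypothetical helper: insert all rows in one transaction, return the table's row count."""
    with conn:  # commits on success, rolls back if an insert raises
        conn.executemany(INSERT_SQL, rows)
    return conn.execute("SELECT COUNT(*) FROM mWeather").fetchone()[0]


# Made-up sample rows for illustration only, not real measurements.
sample_rows = [
    ("沂水", "2018-02-01", "3", "-6", "晴", "北风", "1级"),
    ("沂水", "2018-02-02", "5", "-4", "多云", "南风", "2级"),
]

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
total = save_rows(conn, sample_rows)
print(total)
```

Batching a page's rows into one `executemany` call and one commit is usually cheaper than committing after every row, and the parameterized statement keeps scraped text from being interpreted as SQL.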