说明以下说描述的的安装rac是安装一个rac备端,只需要安装grid软件和oracle软件即可。

一、硬件配置:

cup:Linux db51 3.10.0-1062.el7.x86_64 #1 SMP Wed Aug 7 18:08:02 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

内存:250G

OCR:3G*3

DATA:480G*6

二、软件环境:

操作系统:CentOS Linux release 7.7.1908 (Core)

数据库:oracle 11.2.0.4 R2 RAC

三、业务系统:

业务系统

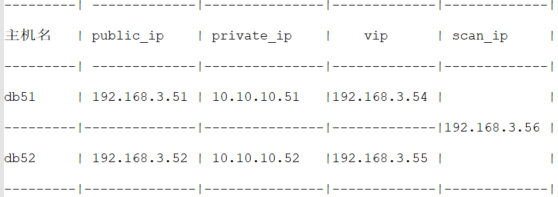

四、主机规划:

五、数据库安装前准备

1、配置DNS内容(可忽略):

vi /etc/resolv.conf

nameserver 192.168.3.1

nameserver 192.168.3.2

2、改hosts文件

节点一db51和节点二db52:

#pub

192.168.3.51 db51

192.168.3.52 db52

#vip

192.168.3.54 db51-vip

10.10.10.52 db52-vip

#priv

10.10.10.51 db51-priv

192.168.3.55 db52-priv

#scan

172.16.53.56 db-scan

3、关闭防火墙

systemctl status firewalld

systemctl stop firewalld

systemctl disable firewalld

4、关闭selinux

Setenforce 0

vi /etc/sysconfig/selinux

将SELINUX值改为disabled

6、关闭透明大页

==查看:

cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

默认为always。

==关闭大页第一种方法:

(1).编辑/etc/sysconfig/grub 文件,在 GRUB_CMDLINE_LINUX 那一行后面追加 transparent_hugepage=never

例如:

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=rootvg/root rd.lvm.lv=rootvg/swap rhgb quiet transparent_hugepage=never"

GRUB_DISABLE_RECOVERY="true"

(2).再使用 grub2-mkconfig 生成grub.cfg配置文件。

# grub2-mkconfig -o /boot/grub2/grub.cfg

(3).重启系统使配置生效。

grep Huge /proc/meminfo

=======第二种方法:

vi /etc/rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag; then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

保存后

chmod +x /etc/rc.d/rc.local

重启服务器

六、集群参数配置

1、设置内核参数

/etc/sysctl.conf 文件中增加以下参数:

fs.aio-max-nr = 3145728

kernel.shmmni = 4096

kernel.shmall = 65011712 ## 250G/4kb 字节(24G除以页4KB的大小)

kernel.shmmax = 214748364800 #250G为使用 250*1024*1024*1024*0.8

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

2、设置limits参数

/etc/security/limits.conf 文件中添加如下参数

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

====================================================

/etc/pam.d/login 文件中添加如下参数:

session required pam_limits.so

====================================================

/etc/profile 文件中添加如下参数:

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

====================================================

3、创建用户、组

groupadd -g 200 oinstall

groupadd -g 201 dba

groupadd -g 202 oper

groupadd -g 203 asmadmin

groupadd -g 204 asmoper

groupadd -g 205 asmdba

useradd -u 200 -g oinstall -G dba,asmdba,oper,asmadmin oracle

useradd -u 201 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid

4、上传安装软件到/opt/soft并用相应帐户解压

将软件上传到一节点/soft目录,并用unzip命令解压。

p13390677_112040_Linux-x86-64_1of7.zip

p13390677_112040_Linux-x86-64_2of7.zip

p13390677_112040_Linux-x86-64_3of7.zip

p19404309_112040_Linux-x86-64.zip

p27475913_112040_Linux-x86-64GIPSU.zip

p6880880_112000_Linux-x86-64.zip

=============================================================================

可省略:

安装前打19404309补丁

安装软件前,对安装介质更新Patch 19404309

unzip p19404309_112040_Linux-x86-64.zip -d /stage

cp /stage/b19404309/grid/cvu_prereq.xml /stage/grid/stage/cvu

cp /stage/b19404309/database/cvu_prereq.xml /stage/database/stage/cvu

=============================================================================

安装cvuqdisk

两节点root用户下安装

cvuqdisk存于oracle GI安装介质的cv/rpm目录下

export CVUQDISK_GRP=asmadmin

rpm -ivh cvuqdisk-1.0.10-1.rpm

5、配置互信(grid / oracle用户,如下两种方法,我在这里用的方法二)

方法一>(比较老的办法)#####grid 和 oracle用户操作的用户都是这样(oracle和grid用户都要执行)

##节点1,节点2都执行如下:

cd

mkdir ~/.ssh

chmod 700 ~/.ssh

ssh-keygen -t rsa

ssh-keygen -t dsa

##配置互相ssh

#节点1执行如下:

cd

cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys

ssh db52 cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

ssh db52 cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys db52:~/.ssh/authorized_keys

###节点1和节点2分别执行执行如下:

ssh db51 date

ssh db52 date

ssh db51-priv date

ssh db52-priv date

方法二> 利用解压的软件包(sshUserSetup.sh)

sshUserSetup.sh在GI安装介质解压缩后的oui/prov/resources/scripts目录下。

ROOT:

设置GRID SSH:

./sshUserSetup.sh -user grid -hosts "db51 db52" -advanced -noPromptPassphrase

设置ORACLE SSH:

./sshUserSetup.sh -user oracle -hosts "db51 db52" -advanced -noPromptPassphrase

###然后节点1和节点2分别执行执行如下:

ssh db51 date

ssh db52 date

ssh db51-priv date

ssh db52-priv date

6、创建oracle所需目录

mkdir -p /oracle/app/grid/

mkdir -p /oracle/app/11.2.0/grid

mkdir -p /oracle/app/oracle/product/11.2.0/db_1

chown -R grid:oinstall /oracle

chown -R oracle:oinstall /oracle/app/oracle/

chmod -R 775 /oracle

7、设置grid和orale账户环境

=======================================================================

Grid用户:

export ORACLE_BASE=/oracle/app/grid

export ORACLE_HOME=/oracle/app/11.2.0/grid

export ORACLE_SID=+ASM1

export PATH=$PATH:$ORACLE_HOME/bin:$HOME/bin

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/Apache/Apache/bin:$PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

CLASSPATH=$CLASSPATH:$ORACLE_HOME/network/jlib

export CLASSPATH

ORA_NLS33=$ORACLE_HOME/nls/admin/data

export ORA_NLS33

=======================================================================

oracle用户:

export ORACLE_BASE=/oracle/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORACLE_SID=orcldb1

export PATH=$PATH:$ORACLE_HOME/bin:$HOME/bin

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/Apache/Apache/bin:$PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

CLASSPATH=$CLASSPATH:$ORACLE_HOME/network/jlib

export CLASSPATH

ORA_NLS33=$ORACLE_HOME/nls/admin/data

export ORA_NLS33

#export NLS_LANG=american_america.AL32UTF8

export NLS_LANG="SIMPLIFIED CHINESE_CHINA".ZHS16GBK

=======================================================================

8、检查软件包

rpm -q make

gcc

libaio

glibc

compat-libstdc++-33

elfutils-libelf

elfutils-libelf-devel

glibc-common

glibc-devel

glibc-headers

gcc-c++

libaio-devel

libgcc

libstdc++

libstdc++-devel

sysstat

ksh

expat

sysstat

unixODBC

compat-libcap1

unixODBC-devel

缺少的依赖包利用yum安装。

七、小编这里用的多路径配置识别配置

1.多路径依赖包和服务

yum install -y device-mapper*

mpathconf --enable

systemctl start multipathd.service

systemctl enable multipathd.service

===========================================================================================

多路径在fidisk -l查看的时候会出现相同两个盘wwid,个是用了双链路,只要对应一个wwid即可。

我这里查出来的需要做共享磁盘是如下:

/dev/sdb

/dev/sdc

/dev/sdd

/dev/sde

/dev/sdf

/dev/sdg

/dev/sdh

/dev/sdi

/dev/sdj

/dev/sdk

/dev/sdl

/dev/sdm

/dev/sdn

/dev/sdo

/dev/sdp

/dev/sdq

/dev/sdr

/dev/sds

获取磁盘的UUID:

/usr/lib/udev/scsi_id -g -u -d /dev/sdb

....

....

....

查询结果如下:

/dev/sdb 36000d3100419d400000000000000005a

/dev/sdc 36000d3100419d400000000000000005c

/dev/sdd 36000d3100419d400000000000000005e

/dev/sde 36000d3100419d4000000000000000060

/dev/sdf 36000d3100419d4000000000000000062

/dev/sdg 36000d3100419d400000000000000005b

/dev/sdh 36000d3100419d400000000000000005d

/dev/sdi 36000d3100419d400000000000000005f

/dev/sdj 36000d3100419d4000000000000000061

/dev/sdk 36000d3100419d400000000000000005a

/dev/sdl 36000d3100419d400000000000000005c

/dev/sdm 36000d3100419d400000000000000005e

/dev/sdn 36000d3100419d4000000000000000060

/dev/sdo 36000d3100419d4000000000000000062

/dev/sdp 36000d3100419d400000000000000005b

/dev/sdq 36000d3100419d400000000000000005d

/dev/sdr 36000d3100419d400000000000000005f

/dev/sds 36000d3100419d4000000000000000061

会发现有两两相同的wwid,这个就是我开始提到的。

==========================================================================================

2./etc/multipath.conf 配置文件:

查询/usr/lib/udev/scsi_id -g -u -d /dev/sdc提供wwid

例子:

# This is a basic configuration file with some examples, for device mapper

# multipath.

#

# For a complete list of the default configuration values, run either

# multipath -t

# or

# multipathd show config

#

# For a list of configuration options with descriptions, see the multipath.conf

# man page

## By default, devices with vendor = "IBM" and product = "S/390.*" are

## blacklisted. To enable mulitpathing on these devies, uncomment the

## following lines.

#blacklist_exceptions {

# device {

# vendor "IBM"

# product "S/390.*"

# }

#}

## Use user names, instead of using WWIDs as names.

defaults {

user_friendly_names yes

find_multipaths yes

}

##

## Here is an example of how to configure some standard options.

##

#

#defaults {

# polling_interval 10

# path_selector "round-robin 0"

# path_grouping_policy multibus

# uid_attribute ID_SERIAL

# prio alua

# path_checker readsector0

# rr_min_io 100

# max_fds 8192

# rr_weight priorities

# failback immediate

# no_path_retry fail

# user_friendly_names yes

#}

##

## The wwid line in the following blacklist section is shown as an example

## of how to blacklist devices by wwid. The 2 devnode lines are the

## compiled in default blacklist. If you want to blacklist entire types

## of devices, such as all scsi devices, you should use a devnode line.

## However, if you want to blacklist specific devices, you should use

## a wwid line. Since there is no guarantee that a specific device will

## not change names on reboot (from /dev/sda to /dev/sdb for example)

## devnode lines are not recommended for blacklisting specific devices.

##

#blacklist {

# wwid 26353900f02796769

# devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

# devnode "^hd[a-z]"

#}

multipaths {

multipath {

wwid 36000d3100419d400000000000000005d

alias rac3_data01

}

multipath {

wwid 36000d3100419d400000000000000005f

alias rac3_data02

}

multipath {

wwid 36000d3100419d4000000000000000061

alias rac3_data03

}

multipath {

wwid 36000d3100419d400000000000000005e

alias rac3_data04

}

multipath {

wwid 36000d3100419d4000000000000000060

alias rac3_data05

}

multipath {

wwid 36000d3100419d4000000000000000062

alias rac3_data06

}

# path_grouping_policy multibus

# path_selector "round-robin 0"

# failback manual

# rr_weight priorities

# no_path_retry 5

multipath {

wwid 36000d3100419d400000000000000005b

alias ocr_vote1

}

multipath {

wwid 36000d3100419d400000000000000005a

alias ocr_vote2

}

multipath {

wwid 36000d3100419d400000000000000005c

alias ocr_vote3

}

}

#devices {

# device {

# vendor "COMPAQ "

# product "HSV110 (C)COMPAQ"

# path_grouping_policy multibus

# path_checker readsector0

# path_selector "round-robin 0"

# hardware_handler "0"

# failback 15

# rr_weight priorities

# no_path_retry queue

# }

# device {

# vendor "COMPAQ "

# product "MSA1000 "

# path_grouping_policy multibus

# }

#}

======

3.配置裸设备映射

cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005b", SYMLINK+="asm-ocr1", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005a", SYMLINK+="asm-ocr2", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005c", SYMLINK+="asm-ocr3", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005d", SYMLINK+="asm-data01", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005f", SYMLINK+="asm-data02", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d4000000000000000061", SYMLINK+="asm-data03", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d400000000000000005e", SYMLINK+="asm-data04", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d4000000000000000060", SYMLINK+="asm-data05", GROUP="asmadmin", OWNER="grid", MODE="0660"

KERNEL=="dm-*", ENV{DM_UUID}=="mpath-36000d3100419d4000000000000000062", SYMLINK+="asm-data06", GROUP="asmadmin", OWNER="grid", MODE="0660"

重新注册加载到服务:

/sbin/udevadm control --reload

/sbin/udevadm trigger --type=devices --action=change

systemctl restart systemd-udev-trigger.service

八、安装Grid Infrastucture

1、预检查(查看生成的日志有没有失败的地方进行处理)

cd /opt/soft/grid

# ./runcluvfy.sh stage -pre crsinst -n db51,db52 -verbose >/home/grid/grid_check.log

2、准备Gi安装的 响应文件

cp /opt/soft/grid/response/grid_install.rsp grid_install.rsp.bak

vi grid_install.rsp

oracle.install.responseFileVersion=/oracle/install/rspfmt_crsinstall_response_schema_v11_2_0

ORACLE_HOSTNAME=db51

INVENTORY_LOCATION=/oracle/app/grid/oraInventory

SELECTED_LANGUAGES=en

oracle.install.option=CRS_CONFIG

ORACLE_BASE=/oracle/app/grid

ORACLE_HOME=/oracle/app/11.2.0/grid

oracle.install.asm.OSDBA=asmdba

oracle.install.asm.OSOPER=asmoper

oracle.install.asm.OSASM=asmadmin

oracle.install.crs.config.gpnp.scanName=db-scan

oracle.install.crs.config.gpnp.scanPort=1521

oracle.install.crs.config.clusterName=db-cluster

oracle.install.crs.config.gpnp.configureGNS=false

oracle.install.crs.config.gpnp.gnsSubDomain=

oracle.install.crs.config.gpnp.gnsVIPAddress=

oracle.install.crs.config.autoConfigureClusterNodeVIP=

oracle.install.crs.config.clusterNodes=db51:db51-vip,db52:db52-vip

oracle.install.crs.config.networkInterfaceList=bond0:172.16.53.0:1,bond1:192.168.55.0:2

oracle.install.crs.config.storageOption=ASM_STORAGE

oracle.install.crs.config.sharedFileSystemStorage.diskDriveMapping=

oracle.install.crs.config.sharedFileSystemStorage.votingDiskLocations=

oracle.install.crs.config.sharedFileSystemStorage.votingDiskRedundancy=NORMAL

oracle.install.crs.config.sharedFileSystemStorage.ocrLocations=

oracle.install.crs.config.sharedFileSystemStorage.ocrRedundancy=NORMAL

oracle.install.crs.config.useIPMI=false

oracle.install.crs.config.ipmi.bmcUsername=

oracle.install.crs.config.ipmi.bmcPassword=

oracle.install.asm.SYSASMPassword=oracle123

oracle.install.asm.diskGroup.name=ASMOCR

oracle.install.asm.diskGroup.redundancy=NORMAL

oracle.install.asm.diskGroup.AUSize=1

oracle.install.asm.diskGroup.disks=/dev/mapper/ocr_vote1,/dev/mapper/ocr_vote2,/dev/mapper/ocr_vote3

oracle.install.asm.diskGroup.diskDiscoveryString=/dev/mapper/ocr*

oracle.install.asm.monitorPassword=oracle123

oracle.install.crs.upgrade.clusterNodes=

oracle.install.asm.upgradeASM=false

oracle.installer.autoupdates.option=SKIP_UPDATES

oracle.installer.autoupdates.downloadUpdatesLoc=

AUTOUPDATES_MYORACLESUPPORT_USERNAME=

AUTOUPDATES_MYORACLESUPPORT_PASSWORD=

PROXY_HOST=

PROXY_PORT=

PROXY_USER=

PROXY_PWD=

PROXY_REALM=

3、通过响应文件安装GI

chmod 775 /opt/soft/grid/response/grid_install.rsp

./runInstaller -ignorePrereq -silent -force -responseFile /soft/grid/response/grid_install.rsp -showProgress

=============================================================================================================================

执行完成前输出两个脚本(分别在节点一和节点二执行):

/oracle/app/grid/oraInventory/orainstRoot.sh

/oracle/app/11.2.0/grid/root.sh

**********************************************************************************************************************************************************

** 执行遇到问题: *

** 1 /u01/app/11.2.0/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory *

** 解决办法: *

** 1).安装yum yum install -y compat-libcap1 *

** 2).删除root.sh运行的内容(howe2) *

** perl /u01/app/11.2.0/grid/crs/install/rootcrs.pl -verbose-deconfig –force *

**********************************************************************************************************************************************************

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

注意坑(centos7以上安装11.2.0.4的坑,在执行安装root.sh脚本会出项的坑):

https://blog.csdn.net/ctypyb2002/article/details/101763120

在 centos 7 下安装 11.2.0.4 GI,运行 root.sh 时报错 Failed to start the Clusterware. Last 20 lines of the alert log follow:

解决方法:

root用户创建服务文件:

touch /usr/lib/systemd/system/ohas.service

chmod 777 /usr/lib/systemd/system/ohas.service

# vi /usr/lib/systemd/system/ohas.service

[Unit]

Description=Oracle High Availability Services

After=syslog.target

[Service]

ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Restart=always

[Install]

WantedBy=multi-user.target

启动该服务:

systemctl daemon-reload

systemctl enable ohas.service

systemctl start ohas.service

systemctl status ohas.service

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

========================================

4.上述完成以后在其中一个节点执行

[grid@rac1 grid]$

/oracle/app/11.2.0/grid/cfgtoollogs/configToolAllCommands RESPONSE_FILE=/u01/soft/grid/grid_install.rsp

Setting the invPtrLoc to /u01/app/11.2.0/grid/oraInst.loc

perform - mode is starting for action: configure

perform - mode finished for action: configure

You can see the log file: /u01/app/11.2.0/grid/cfgtoollogs/oui/configActions2018-02-27_08-27-54-AM.log

========================================

5.验证,查看CRS信息:

crsctl status res -t

crs_stat -t

6.检查每个节点ASM实例:

ps -ef|grep smon

出现:osysmond.bin

五、安装数据库软件

1.准备GI安装的响应文件

cp /opt/soft/database/response/db_install.rsp db_install.rsp.bak

vi db_install.rsp

需要按照实际情况修改。空的不用填。

oracle.install.responseFileVersion=/oracle/install/rspfmt_dbinstall_response_schema_v11_2_0

oracle.install.option=INSTALL_DB_SWONLY

ORACLE_HOSTNAME=db51

UNIX_GROUP_NAME=oinstall

INVENTORY_LOCATION=/oracle/app/oracle/oraInventory

SELECTED_LANGUAGES=en,zh_CN

ORACLE_HOME=/oracle/app/oracle/product/11.2.0/db_1

ORACLE_BASE=/oracle/app/oracle

oracle.install.db.InstallEdition=EE

oracle.install.db.EEOptionsSelection=false

oracle.install.db.optionalComponents=

oracle.install.db.DBA_GROUP=dba

oracle.install.db.OPER_GROUP=oper

oracle.install.db.CLUSTER_NODES=db51,db52

oracle.install.db.isRACOneInstall=false

oracle.install.db.racOneServiceName=

oracle.install.db.config.starterdb.type=GENERAL_PURPOSE

oracle.install.db.config.starterdb.globalDBName=orcldb

oracle.install.db.config.starterdb.SID=orcldb

oracle.install.db.config.starterdb.characterSet=AL32UTF8

oracle.install.db.config.starterdb.memoryOption=false

oracle.install.db.config.starterdb.memoryLimit=

oracle.install.db.config.starterdb.installExampleSchemas=false

oracle.install.db.config.starterdb.enableSecuritySettings=false

oracle.install.db.config.starterdb.password.ALL=oracle

oracle.install.db.config.starterdb.password.SYS=

oracle.install.db.config.starterdb.password.SYSTEM=

oracle.install.db.config.starterdb.password.SYSMAN=

oracle.install.db.config.starterdb.password.DBSNMP=

oracle.install.db.config.starterdb.control=DB_CONTROL

oracle.install.db.config.starterdb.gridcontrol.gridControlServiceURL=

oracle.install.db.config.starterdb.automatedBackup.enable=false

oracle.install.db.config.starterdb.automatedBackup.osuid=

oracle.install.db.config.starterdb.automatedBackup.ospwd=

oracle.install.db.config.starterdb.storageType=

oracle.install.db.config.starterdb.fileSystemStorage.dataLocation=

oracle.install.db.config.starterdb.fileSystemStorage.recoveryLocation=

oracle.install.db.config.asm.diskGroup=

oracle.install.db.config.asm.ASMSNMPPassword=oracle

MYORACLESUPPORT_USERNAME=

MYORACLESUPPORT_PASSWORD=

SECURITY_UPDATES_VIA_MYORACLESUPPORT=false

DECLINE_SECURITY_UPDATES=true

PROXY_PORT=

PROXY_USER=

PROXY_PWD=

PROXY_REALM=

COLLECTOR_SUPPORTHUB_URL=

oracle.installer.autoupdates.option=SKIP_UPDATES

AUTOUPDATES_MYORACLESUPPORT_USERNAME=

AUTOUPDATES_MYORACLESUPPORT_PASSWORD=

2. 通过响应文件安装oracle软件

chmod 775 /home/oracle/db.rsp

./runInstaller -ignorePrereq -silent -force -responseFile /opt/soft/database/response/db_install.rsp -showProgress

==============================================================================================================================================

安装oracle软件时候报错遇到坑:INS-35354

错误解释:安装oracle提示信息如下:

The system on which you are attempting to install Oracle RAC is not part of a valid cluster.

解决方案:编辑/u01/app/oraInventory/ContentsXML/inventory.xml,找到属性

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="1">添加CRS="true"属性,两个节点都要修改,修改完毕重新执行安装

如下:

[oracle@rac2 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.4.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="rac1"/>

<NODE NAME="rac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraDb11g_home1" LOC="/u01/app/oracle/product/11.2.0/db_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="rac1"/>

<NODE NAME="rac2"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

=========================================================================================================================================================

执行最后需要在root执行脚本(节点一和节点二):

/oracle/app/oracle/product/11.2.0/db_1/root.sh

六、手工创建磁盘组

GI,ORACLE软件安装后,需要创建磁盘组安装DB

grid用户sqlplus / as sysasm

set line 200

col NAME for a20

col FAILGROUP for a20

col PATH for a30

select group_number,name,state,type,total_mb,free_mb,usable_file_mb,allocation_unit_size/1024/1024 unit_mb from v$asm_diskgroup order by 1;

select group_number,name,path,mount_status,state,redundancy,total_mb,free_mb,failgroup,create_date from v$asm_disk order by 1;

SQL> show parameter asm_diskstring

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

asm_diskstring string /dev/mapper/ocr*

SQL> alter system set asm_diskstring='/dev/mapper/*' scope=both sid='*';

System altered.

SQL>

SQL> show parameter asm_diskstring

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

asm_diskstring string /dev/mapper/*

CREATE DISKGROUP ASMDATA external REDUNDANCY disk '/dev/mapper/rac3_data01','/dev/mapper/rac3_data02','/dev/mapper/rac3_data03','/dev/mapper/rac3_data04','/dev/mapper/rac3_data05','/dev/mapper/rac3_data06' ATTRIBUTE 'au_size'='1M', 'compatible.asm' = '11.2';

节点二离线,需要手动在二节点mount磁盘组

alter diskgroup ASMDATA mount;

仅供参考

=======================================================================

安装有出错的地方可以重新删除对应目录文件重新来过,都是这么踩着坑过来的:

重置格式化磁盘

dd if=/dev/zero of=/dev/mapper/ocr_vote1 bs=1024k count=50

dd if=/dev/zero of=/dev/mapper/ocr_vote2 bs=1024k count=50

dd if=/dev/zero of=/dev/mapper/ocr_vote3 bs=1024k count=50

删除对应oracle目录

rm -f /etc/init.d/init.ohasd

rm -f /etc/init.d/ohasd

rm -f /etc/oracle

rm -f /etc/init.d/init.cssd

rm -f /etc/init.d/init.crs

rm -f /etc/init.d/init.crsd

rm -f /etc/init.d/init.evmd

rm -rf /etc/oracle/scls_scr

rm -f /etc/inittab.crs

rm -f /etc/ohasd

rm -f /etc/oraInst.loc

rm -f /etc/oratab

rm -f /var/tmp/.oracle

rm -f /etc/oraInst.loc

rm -f /etc/inittab.*

cat /dev/null > /etc/inittab

rm -rf /tmp/*

rm -rf /tmp/.*

rm -f /usr/local/bin/dbhome

rm -f /usr/local/bin/oraenv

rm -f /usr/local/bin/coraenv

rm -rf /opt/ORCLfmap

rm -rf /oracle/app/*

=========================================================================

#################################################################################################################################################################

#################################################################################################################################################################

#################################################################################################################################################################

#################################################################################################################################################################

rac adg的安装部署如下

rac adg部署

==前提:备库安装grid和oracle软件即可。

1.DG基础环境

主库rac环境节点一: 192.168.3.15 crm-scan | db_name=orcldb db_unique_name=orcldb service_names=orcldb |主机名:db1

备库rac环境节点一:192.168.3.56 bossdb-scan | db_name=orcldb db_unique_name=orcldbst service_names=orcldb |主机名:db51

2,主库开启强制归档以及开启归档模,以及修改主库初始化参数

①开启强制归档

alter database force logging;

select force_logging from v$database;

②初始化参数

alter system set LOG_ARCHIVE_CONFIG='DG_CONFIG=(ORCLDB,ORCLDBST)' scope=both sid='*';

alter system set LOG_ARCHIVE_DEST_1='LOCATION=+ASMDATA VALID_FOR=(ALL_LOGFILES,ALL_ROLES) DB_UNIQUE_NAME=ORCLDB' scope=spfile;

alter system set LOG_ARCHIVE_DEST_2='SERVICE=ORCLDBST LGWR ASYNC VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) DB_UNIQUE_NAME=ORCLDBST' scope=both sid='*';

alter system set FAL_CLIENT='ORCLDB' scope=both sid='*';

alter system set FAL_SERVER='ORCLDBST' scope=both sid='*';

alter system set STANDBY_FILE_MANAGEMENT=AUTO scope=both sid='*';

alter system set DB_FILE_NAME_CONVERT='+ASMDATA','+ASMDATA'scope=spfile sid='*';

alter system set LOG_FILE_NAME_CONVERT='+ASMDATA','+ASMDATA' scope=spfile sid='*';

3.主库添加standby redo logfile

添加日志规则:

如果节点RAC主库每个thread redo log有N组,standby redo log则需要standbby redo log组数为(n+1)*thread组。

主库查询redo 日志组数,有2个thread,每个thread有4组日志,standby则需要10组

===================================================================

主库日志查询如下:

SQL> select member from v$logfile;

MEMBER

-------------------------------------------------------------------

+ASMDATA/orcldb/onlinelog/group_2.284.1061552117

+ASMDATA/orcldb/onlinelog/group_1.283.1061552115

+ASMDATA/orcldb/onlinelog/group_3.287.1061552223

+ASMDATA/orcldb/onlinelog/group_4.288.1061552223

+ASMDATA/orcldb/onlinelog/group_5.272.1061566739

+ASMDATA/orcldb/onlinelog/group_6.271.1061566769

+ASMDATA/orcldb/onlinelog/group_7.269.1061566769

+ASMDATA/orcldb/onlinelog/group_8.270.1061566771

SQL> select thread#,group#,members,bytes,bytes/1024/1024 from v$log;

THREAD# GROUP# MEMBERS BYTES BYTES/1024/1024

---------- ---------- ---------- ---------- ---------------

1 1 1 1073741824 1024

1 2 1 1073741824 1024

2 3 1 1073741824 1024

2 4 1 1073741824 1024

1 5 1 1073741824 1024

1 6 1 1073741824 1024

2 7 1 1073741824 1024

2 8 1 1073741824 1024

======================================================================

添加standby redo logfile:

alter database add standby logfile thread 1 group 9 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 10 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 11 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 12 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 13 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 14 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 15 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 16 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 17 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 18 ('+ASMDATA') size 1024M;

查看:

SQL> select group#,status,type,member from v$logfile;

4.主库和备库监听配置以及TNS配置

主库:

orcldb =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.3.16)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcldb)

)

)

orcldbST =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.3.56)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcldbst)

)

)

5.在主库上生成用于备库的参数文件

SQL> create pfile='init20211013.ora' from spfile;

6.将主库的参数文件,密码文件拷贝到备库。

将密码文件复制到节点1和节点2的dbs下

cp /oracle/app/oracle/product/11.2.0/db_1/dbs/orapworcldb1 oracle@172.16.53.51:/oracle/app/oracle/product/11.2.0/db_1/dbs/

7.在备库上更改参数文件,修改参数以及创建目录结构。

cat init20211013.ora

orcldb2.__db_cache_size=25031606272

orcldb1.__db_cache_size=26910654464

orcldb2.__java_pool_size=469762048

orcldb1.__java_pool_size=469762048

orcldb2.__large_pool_size=469762048

orcldb1.__large_pool_size=469762048

orcldb1.__oracle_base='/oracle/app/oracle'#ORACLE_BASE set from environment

orcldb2.__oracle_base='/oracle/app/oracle'#ORACLE_BASE set from environment

orcldb1.__pga_aggregate_target=42949672960

orcldb2.__pga_aggregate_target=42949672960

orcldb1.__sga_target=171798691840

orcldb2.__sga_target=171798691840

orcldb1.__shared_io_pool_size=0

orcldb2.__shared_io_pool_size=0

orcldb2.__shared_pool_size=6039797760

orcldb1.__shared_pool_size=4093640704

orcldb2.__streams_pool_size=0

orcldb1.__streams_pool_size=67108864

*._gc_policy_time=0

*._gc_undo_affinity=FALSE

*._index_partition_large_extents='FALSE'

*._memory_imm_mode_without_autosga=FALSE

*._optimizer_adaptive_cursor_sharing=FALSE

*._optimizer_extended_cursor_sharing='NONE'

*._optimizer_extended_cursor_sharing_rel='NONE'

*._optimizer_null_aware_antijoin=FALSE

*._optimizer_use_feedback=FALSE

*._partition_large_extents='FALSE'

*._PX_use_large_pool=TRUE

*._undo_autotune=FALSE

*._use_adaptive_log_file_sync='FALSE'

*.audit_file_dest='/oracle/app/oracle/admin/orcldb/adump'

*.audit_trail='db'

*.cluster_database=true

*.compatible='11.2.0.4.0'

*.control_files='+ASMDATA/orcldbst/controlfile/current.256.1085995227','+ASMDATA/orcldbst/controlfile/current.257.1085995229'

*.db_block_size=8192

*.db_create_file_dest='+ASMDATA'

*.db_domain=''

*.db_file_name_convert='+ASMDATA','+ASMDATA'

*.db_name='orcldb'

*.db_unique_name='orcldbst'

*.deferred_segment_creation=FALSE

*.diagnostic_dest='/oracle/app/oracle'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=orcldbXDB)'

*.enable_ddl_logging=TRUE

*.event='28401 trace name context forever,level 1','10949 trace name context forever,level 1'

*.fal_client='ORCLDBST'

*.fal_server='ORCLDB'

orcldb1.instance_number=1

orcldb2.instance_number=2

*.log_archive_config='DG_CONFIG=(ORCLDBST,ORCLDB)'

*.log_archive_dest_1='LOCATION=+ASMDATA VALID_FOR=(ALL_LOGFILES,ALL_ROLES) DB_UNIQUE_NAME=ORCLDBST'

*.log_archive_dest_2='SERVICE=ORCLDB LGWR ASYNC VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) DB_UNIQUE_NAME=ORCLDB'

*.log_file_name_convert='+ASMDATA','+ASMDATA'

*.memory_target=0

*.open_cursors=1000

*.optimizer_index_cost_adj=30

*.parallel_force_local=TRUE

*.pga_aggregate_target=42949672960

*.processes=1500

*.remote_login_passwordfile='exclusive'

*.resource_limit=TRUE

*.resource_manager_plan='force:'

*.session_cached_cursors=500

*.sga_max_size=171798691840

*.sga_target=171798691840

*.standby_file_management='AUTO'

orcldb2.thread=2

orcldb1.thread=1

*.undo_retention=18000

orcldb1.undo_tablespace='UNDOTBS1'

orcldb2.undo_tablespace='UNDOTBS2'

8.在备库增加静态监听(若要使用在线恢复的方式,由于我这里网络和库比较大使用下面序号9下面rman备份的方式进行恢复)

①备库grid用户下配置监听和sqlnet.ora(两节点都操作)

[grid@orcldb1 admin]$ cat sqlnet.ora

# sqlnet.ora.crmdb1 Network Configuration File: /oracle/app/11.2.0/grid/network/admin/sqlnet.ora.crmdb1

# Generated by Oracle configuration tools.

NAMES.DIRECTORY_PATH= (TNSNAMES, EZCONNECT)

ADR_BASE = /oracle/app/grid

[grid@crmdb1 admin]$ cat listener.ora

LISTENER=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))) # line added by Agent

LISTENER_SCAN1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN1)))) # line added by Agent

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_SCAN1=ON # line added by Agent

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER=ON # line added by Agent

srvctl start scan_listener

srvctl start listener

若要使用duplicate在线恢复的方式备库需要配置静态注册(在oracle用户下配置静态监听):

nomount为block状态下,只有配置静态注册可以连接。

Listener.ora

SID_LIST_LISTENER =

(SID_LIST =

(SID_DESC =

(GLOBAL_DBNAME = orcldbst)

(ORACLE_HOME = /oracle/app/11.2.0/grid)

(SID_NAME = orcldb1)

)

)

LISTENER =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = bossdb-scan)(PORT = 1521))

)

9.启动备库数据库到nomount状态

SQL> startup pfile=init20211008.ora nomount;

10.备库restore控制文件

rman target /

restore standby controlfile from '/dbbackup/acct_recover_bak/controlfile_a90bj162_orcldb_20211014';

=====================================================================

遇到了oracle-19870,ora-19504,ora-17502,ora=15001错误,解决如下:

查询ls -l $ORACLE_HOME/bin/oracle

-rwsr-s--x oracle:oinstall $ORACLE_HOME/bin/oracle的权限

需要更改oracle:oinstall 为oracle:asmadmin,需要用grid用户更改如下:

/oracle/app/11.2.0/grid/bin/setasmgidwrap o=$ORACLE_HOME/bin/oracle

在更改chmod 6755 $ORACLE_HOME/bin/oracle 主组属组的权限为-rwsr-s--x

======================================================================

11.备库做控制文件恢复成功以后打开数据库到mount状态

alter database mount;

加载备份集

RMAN> catalog start with '/dbbackup/acct_recover_bak/'; ----此目录为你备端存放备份集的目录

12.备库开始恢复数据库

restore database;

13

create spfile='+asmdata/orcldbst/spfileorcldbst.ora' from pfile='/oracle/app/oracle/product/11.2.0/db_1/dbs/init20211013.ora';

分别在一节点和二节点配置:

节点一:

vi initorcldb1.ora

spfile='+asmdata/orcldbst/spfileorcldbst.ora'

借点二:

vi initorcldb2.ora

spfile='+asmdata/orcldbst/spfileorcldbst.ora'

14.添加资源

srvctl add database -d orcldb -o $ORACLE_HOME -p +asmdata/orcldbst/spfileorcldbst.ora

srvctl add instance -d orcldb -i orcldb1 -n bossdb51

srvctl add instance -d orcldb -i orcldb2 -n bossdb52

srvctl config database -d orcldb

srvctl start database -d orcldb

15:

检查

local_listener(vip)

remote_listener

SQL> show parameter local_listener

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

local_listener string (ADDRESS=(PROTOCOL=TCP)(HOST=

172.16.53.54)(PORT=1521))

SQL> show parameter remote_listener

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

remote_listener string bossdb-scan:1521

如果remote_listener为空则要alter system set remote_listener='bossdb-scan:1521' scope=spfile;

要不然会报错连接ora12514 ,ora10107(重新copy密码文件)

16.至此恢复完成,后续可以检查监听的连通性,通过使用sqlplus sys/oracle@ip:1521/db_name as sysdba的方式主库备库互连进行测试验证;

18.重做日志:

查询日志如下:

1 +ASMDATA/orcldbst/onlinelog/group_2.337.1086023865

2 +ASMDATA/orcldbst/onlinelog/group_1.336.1086023851

3 +ASMDATA/orcldbst/onlinelog/group_3.338.1086023879

4 +ASMDATA/orcldbst/onlinelog/group_4.339.1086023891

5 +ASMDATA/orcldbst/onlinelog/group_5.340.1086023907

6 +ASMDATA/orcldbst/onlinelog/group_6.341.1086023927

7 +ASMDATA/orcldbst/onlinelog/group_7.342.1086023941

8 +ASMDATA/orcldbst/onlinelog/group_8.343.1086023955

9 +ASMDATA/orcldb/onlinelog/group_9.2692.1085828027

10 +ASMDATA/orcldb/onlinelog/group_10.2732.1085828027

11 +ASMDATA/orcldb/onlinelog/group_11.2681.1085828029

12 +ASMDATA/orcldb/onlinelog/group_12.2464.1085828029

13 +ASMDATA/orcldb/onlinelog/group_13.2473.1085828031

14 +ASMDATA/orcldb/onlinelog/group_14.2704.1085828031

15 +ASMDATA/orcldb/onlinelog/group_15.2433.1085828033

16 +ASMDATA/orcldb/onlinelog/group_16.2649.1085828035

17 +ASMDATA/orcldb/onlinelog/group_17.2731.1085828035

18 +ASMDATA/orcldb/onlinelog/group_18.2541.1085828037

发现9~18日志路径不对,删除重做不然启动日志应用会有报错:

删除:

alter database drop standby logfile group 9;

alter database drop standby logfile group 10;

alter database drop standby logfile group 11;

alter database drop standby logfile group 12;

alter database drop standby logfile group 13;

alter database drop standby logfile group 14;

alter database drop standby logfile group 15;

alter database drop standby logfile group 16;

alter database drop standby logfile group 17;

alter database drop standby logfile group 18;

重做:

alter database add standby logfile thread 1 group 9 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 10 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 11 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 12 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 1 group 13 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 14 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 15 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 16 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 17 ('+ASMDATA') size 1024M;

alter database add standby logfile thread 2 group 18 ('+ASMDATA') size 1024M;

17:启动在线日志应用,在一个节点启动即可:

①.应用日志:

alter database recover managed standby database using current logfile disconnect from session;

②.取消应用日志:

alter database recover managed standby database cancel;

③.主库检查LNS进程:

select process,status from v$managed_standby;

如果没有LNS进程,则需要检查DG环境。

完成!!!!