Tensorflow2.0笔记

本博客为Tensorflow2.0学习笔记,感谢北京大学微电子学院曹建老师

4.2AlexNet

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class AlexNet8(Model):

def __init__(self):

super(AlexNet8, self).__init__()

self.c1 = Conv2D(filters=96, kernel_size=(3, 3))

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.p1 = MaxPool2D(pool_size=(3, 3), strides=2)

self.c2 = Conv2D(filters=256, kernel_size=(3, 3))

self.b2 = BatchNormalization()

self.a2 = Activation('relu')

self.p2 = MaxPool2D(pool_size=(3, 3), strides=2)

self.c3 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

self.c4 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same',

activation='relu')

self.p3 = MaxPool2D(pool_size=(3, 3), strides=2)

self.flatten = Flatten()

self.f1 = Dense(2048, activation='relu')

self.d1 = Dropout(0.5)

self.f2 = Dense(2048, activation='relu')

self.d2 = Dropout(0.5)

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.p1(x)

x = self.c2(x)

x = self.b2(x)

x = self.a2(x)

x = self.p2(x)

x = self.c3(x)

x = self.c4(x)

x = self.c5(x)

x = self.p3(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d1(x)

x = self.f2(x)

x = self.d2(x)

y = self.f3(x)

return y

model = AlexNet8()

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/AlexNet8.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '

')

file.write(str(v.shape) + '

')

file.write(str(v.numpy()) + '

')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

借鉴点:激活函数使用Relu,提升训练速度;Dropout防止过拟合。

AlexNet网络诞生于2012年,其ImageNet Top5错误率为16.4 %,可以说AlexNet的出现使得已经沉寂多年的深度学习领域开启了黄金时代。AlexNet的总体结构和LeNet5有相似之处,但是有一些很重要的改进:

A)由五层卷积、三层全连接组成,输入图像尺寸为224 * 224 * 3,网络规模远大于LeNet5;

B)使用了Relu激活函数;

C)进行了舍弃(Dropout)操作,以防止模型过拟合,提升鲁棒性;

D)增加了一些训练上的技巧,包括数据增强、学习率衰减、权重衰减(L2正则化)等。AlexNet的网络结构如图5-23所示。

可以看到,图 5-20 所示的网络结构将模型分成了两部分,这是由于当时用于训练AlexNet 的显卡为 GTX 580(显存为 3GB),单块显卡运算资源不足的原因。

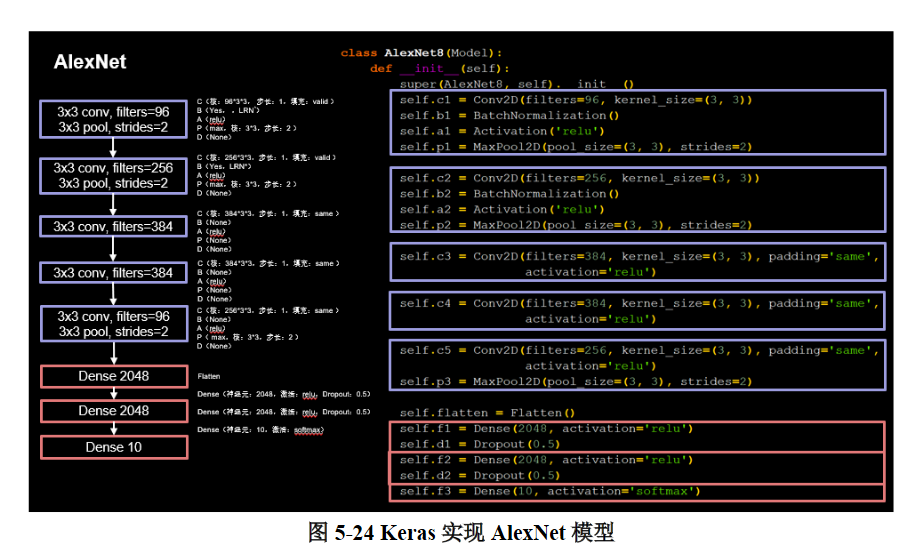

在 Tensorflow 框架下利用 Keras 来搭建 AlexNet 模型,这里做了一些调整,将输入图像尺寸改为 32 * 32 * 3 以适应 cifar10 数据集,并且将原始的 AlexNet 模型中的 11 * 11、7 * 7、5 * 5 等大尺寸卷积核均替换成了 3 * 3 的小卷积核,如图 5-24 所示。

图中紫色块代表卷积部分,可以看到卷积操作共进行了5次:

A)第1次卷积:共有96个3 * 3的卷积核,不进行全零填充,进行BN操作,激活函数为Relu,进行最大池化,池化核尺寸为3 * 3,步长为2;

self.c1 = Conv2D(filters=96, kernel_size=(3, 3))

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.p1 = MaxPool2D(pool_size=(3, 3), strides=2)

B)第2次卷积:与第1次卷积类似,除卷积核个数由96增加到256之外几乎相同;

self.c2 = Conv2D(filters=256, kernel_size=(3, 3))

self.b2 = BatchNormalization()

self.a2 = Activation('relu')

self.p2 = MaxPool2D(pool_size=(3, 3), strides=2)

C)第3次卷积:共有384个3 * 3的卷积核,进行全零填充,激活函数为Relu,不进行BN操作以及最大池化;

self.c3 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

D)第4次卷积:与第3次卷积几乎完全相同;

self.c4 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

E)第5次卷积:共有96个3 * 3的卷积核,进行全零填充,激活函数为Relu,不进行BN操作,进行最大池化,池化核尺寸为3 * 3,步长为2。

self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same',

activation='relu')

self.p3 = MaxPool2D(pool_size=(3, 3), strides=2)

图中红色块代表全连接部分,共有三层:

1)第一层共2048个神经元,激活函数为Relu,进行0.5的dropout;

self.flatten = Flatten()

self.f1 = Dense(2048, activation='relu')

self.d1 = Dropout(0.5)

2)第二层与第一层几乎完全相同;

self.f2 = Dense(2048, activation='relu')

self.d2 = Dropout(0.5)

3)第三层共10个神经元,进行10分类。

self.f3 = Dense(10, activation='softmax')

可以看到,与结构类似的LeNet5相比,AlexNet模型的参数量有了非常明显的提升,卷积运算的层数也更多了,这有利于更好地提取特征;Relu激活函数的使用加快了模型的训练速度;Dropout的使用提升了模型的鲁棒性,这些优势使得AlexNet的性能大大提升。