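This post records the various exceptions I ran into while learning Hadoop, both those filed under the recommender-system category and those under this category. It is updated continuously.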

1, While setting up HA, the active and standby nodes could not switch over freely

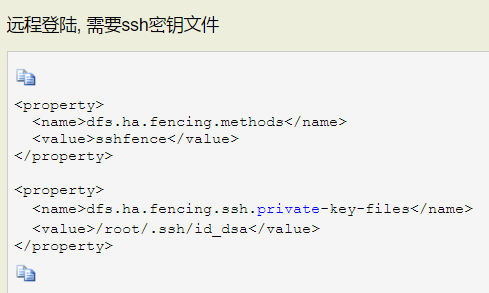

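After checking, the configuration file turned out to be wrong: in the private key setting, the / in front of root was missing, so the path was not absolute.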
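Since the mistake here was a path that should have started with /, a small check like the following could catch it before a failover is attempted. This is only a sketch: the helper name `is_absolute_path` is made up for illustration, and the property key shown in the comment (`dfs.ha.fencing.ssh.private-key-files`) is my assumption, because the post does not say which key was misconfigured.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given path is absolute (starts with /).
is_absolute_path() {
    case "$1" in
        /*) return 0 ;;
        *)  return 1 ;;
    esac
}

# Possible use on a cluster node (the property key is an assumption, not from the post):
#   key_path=$(hdfs getconf -confKey dfs.ha.fencing.ssh.private-key-files)
#   is_absolute_path "$key_path" || echo "private key path is not absolute: $key_path"
```

With this, `is_absolute_path "root/.ssh/id_rsa"` fails while `is_absolute_path "/root/.ssh/id_rsa"` succeeds; both paths are illustrative values only.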

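2, After installing the Eclipse plugin, files could not be uploaded

Plugin version: hadoop-eclipse-plugin-2.6.0

error.log showed: "Map/Reduce location status updater".java.lang.NullPointerException

In the end I found this blog post: http://blog.csdn.net/taoli1986/article/details/52892934 . The cause was that no root directory had been created in HDFS.

Fix: create the root directory on the NameNode: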

hdfs dfs -mkdir -p ~/first

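After that a permission problem appeared. I turned off permission checking in hdfs-site.xml (in Hadoop 2.x, dfs.permissions is the deprecated name of dfs.permissions.enabled):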

<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>

3, After packaging the program as a jar and uploading it to Hadoop to run, it failed with: Unsupported major.minor version 52.0

Exception in thread "main" java.lang.UnsupportedClassVersionError: com/wenbronk/mapreduce/RunMapReduce : Unsupported major.minor version 52.0
    at java.lang.ClassLoader.defineClass1(Native Method)
    at java.lang.ClassLoader.defineClass(ClassLoader.java:800)
    at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
    at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)
    at java.net.URLClassLoader.access$100(URLClassLoader.java:71)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:361)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:274)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:205)

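Cause: a Java version mismatch. The code was compiled with JDK 8 (class file version 52.0), while the Hadoop cluster runs JDK 7. Switching the client JDK fixed it.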
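To confirm which JDK a class was compiled for before uploading the jar, one can read the major version straight out of the .class file header. The sketch below assumes a POSIX `od`; the function names `class_major_version` and `major_to_java` are made up for illustration.

```shell
#!/bin/sh
# Print the class-file major version, stored big-endian in bytes 7-8 of a .class file
# (after the 4-byte magic number and the 2-byte minor version).
class_major_version() {
    # od prints the two bytes as unsigned decimals, e.g. "   0  52"
    set -- $(od -An -tu1 -j6 -N2 "$1")
    echo $(( $1 * 256 + $2 ))
}

# Map a major version to the Java release (holds for Java 5 and later): 51 -> 7, 52 -> 8.
major_to_java() {
    echo $(( $1 - 44 ))
}

# Possible use after extracting the jar:
#   major_to_java "$(class_major_version com/wenbronk/mapreduce/RunMapReduce.class)"
```

If the number printed is higher than the Java version on the cluster, the jar will fail with the UnsupportedClassVersionError shown above.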

4, At runtime: No route to host

17/04/03 05:26:06 INFO hdfs.DFSClient: Exception in createBlockOutputStream
java.net.NoRouteToHostException: No route to host
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)
    at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:529)
    at org.apache.hadoop.hdfs.DFSOutputStream.createSocketForPipeline(DFSOutputStream.java:1526)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1328)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1281)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:526)
17/04/03 05:26:06 INFO hdfs.DFSClient: Abandoning BP-1159285863-192.168.208.106-1491149429179:blk_1073741826_1002
17/04/03 05:26:06 INFO hdfs.DFSClient: Excluding datanode 192.168.208.107:50010

Cause: the CentOS 6.5 firewall was blocking the connection. Everything worked after the firewall was turned off.
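The last line of the log above already names the datanode the client gave up on, which tells you which host and port to check. As a rough sketch, the hypothetical helper `excluded_datanodes` below pulls those out of a client log. The firewall commands in the comments are the usual ones on CentOS 6 and are my assumption; the post only says the firewall was turned off.

```shell
#!/bin/sh
# Read DFSClient log text on stdin and print each excluded datanode as "host port".
excluded_datanodes() {
    sed -n 's/.*Excluding datanode \([^: ]*\):\([0-9]*\).*/\1 \2/p'
}

# Possible use (client.log is a placeholder file name):
#   excluded_datanodes < client.log
#
# On the datanode itself, CentOS 6 manages the firewall through the iptables service
# (assumed commands, not taken from the post):
#   service iptables stop      # stop it now
#   chkconfig iptables off     # keep it off after reboot
```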

5, The first time the code was executed with the program running in server mode, it threw:

Permission denied: user=wenbr, access=EXECUTE, inode="/tmp":root:supergroup:drwx-----

Fix: this is a permission problem. On the NameNode (nn), run:

hadoop fs -chown -R root:root /tmp

Then the exception changed to:

The ownership on the staging directory /tmp/hadoop-yarn/staging/wenbr/.staging is not as expected. It is owned by root. The directory must be owned by the submitter wenbr or by wenbr

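That did not solve it. Renaming my computer to root and rebooting did not help either. In the end I found this post: http://www.cnblogs.com/hxsyl/p/6098391.html

It solved the problem: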

$HADOOP_HOME/bin/hdfs dfs -chmod -R 755 /tmp
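The first error in this item denies EXECUTE on /tmp to user wenbr because the directory mode grants access only to its owner, root. That is why changing the owner to root:root did nothing, while chmod 755 (which adds r-x for other users) addresses it. A small sketch of that check follows; the helper name `others_can_traverse` is made up for illustration. It looks only at the last character of the mode string, so it also works on the shortened string quoted in the error message.

```shell
#!/bin/sh
# Succeed if a mode string such as "drwxr-xr-x" lets "other" users traverse
# the directory, i.e. its last character is x (or t: sticky bit plus execute).
others_can_traverse() {
    case "$1" in
        *x|*t) return 0 ;;
        *)     return 1 ;;
    esac
}

# Possible use: the first column of a directory listing is the mode string.
#   mode=$(hdfs dfs -ls -d /tmp | awk '{print $1}')
#   others_can_traverse "$mode" || echo "/tmp is not traversable by other users"
```

Here `others_can_traverse "drwx------"` fails, while `others_can_traverse "drwxr-xr-x"` succeeds, which matches the situation before and after the chmod.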