1. Download Hadoop
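The notes do not say where the archive comes from. A minimal sketch, assuming the 1.2.1 tarball is fetched from the Apache archive into /opt/hadoop (the directory step 4 works in). The URL layout and the helper name `download_hadoop` are assumptions, not from the original notes:

```shell
#!/bin/sh
# Assumption: the release is published under the Apache archive with this layout.
HADOOP_VERSION="1.2.1"
HADOOP_TARBALL="hadoop-${HADOOP_VERSION}.tar.gz"
HADOOP_URL="https://archive.apache.org/dist/hadoop/common/hadoop-${HADOOP_VERSION}/${HADOOP_TARBALL}"

# Download the tarball into the given directory (default: /opt/hadoop).
# download_hadoop is a hypothetical helper name used for illustration.
download_hadoop() {
    dest_dir="${1:-/opt/hadoop}"
    sudo mkdir -p "$dest_dir" &&
    sudo wget -P "$dest_dir" "$HADOOP_URL"
}

# Show the URL that would be fetched; nothing is downloaded until the function is called.
echo "$HADOOP_URL"
```

Calling `download_hadoop` with no argument would place the file at /opt/hadoop/hadoop-1.2.1.tar.gz, which is the name step 4 unpacks.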

2. Add an account

sudo adduser --system --no-create-home --disabled-login --disabled-password --group hadoop

Add the account to the root group:

sudo usermod -aG root hadoop

3. Install SSH

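sudo apt-get install openssh-server #install

sudo /etc/init.d/ssh start #start the service

ps -e | grep ssh #check that sshd is running

ssh-keygen -t rsa -P "" #generate a key pair with an empty passphrase

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys #import the public key

ssh localhost #test login

exit #log out

ssh localhost #log in again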
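If `ssh localhost` still asks for a password after the key has been imported, one common cause is file permissions: with its default StrictModes setting, sshd ignores keys kept in files or directories that other users can write to. A small sketch that tightens them; the helper name `fix_ssh_perms` is made up for illustration:

```shell
#!/bin/sh
# Tighten permissions on an SSH directory so that sshd accepts the keys in it.
# fix_ssh_perms is a hypothetical helper name, not part of OpenSSH.
fix_ssh_perms() {
    ssh_dir="${1:-$HOME/.ssh}"
    [ -d "$ssh_dir" ] || { echo "no such directory: $ssh_dir" >&2; return 1; }
    chmod 700 "$ssh_dir"
    # authorized_keys only exists after the public key has been imported.
    if [ -f "$ssh_dir/authorized_keys" ]; then
        chmod 600 "$ssh_dir/authorized_keys"
    fi
}
```

After running `fix_ssh_perms`, `ssh -o BatchMode=yes localhost true` should return 0 only when key-based login works without any prompt.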

4. Install Hadoop

cd /opt/hadoop/

tar -zxvf hadoop-1.2.1.tar.gz

Set ownership: sudo chown -R hadoop:hadoop /opt/hadoop/hadoop-1.2.1

Configure hadoop-env.sh (Java installation path):

Go to the Hadoop directory, open hadoop-env.sh in the conf directory, and add the following:

export JAVA_HOME=/opt/java/jdk1.6.0_29

export HADOOP_HOME=/opt/hadoop/hadoop-1.2.1

export PATH=$PATH:/opt/hadoop/hadoop-1.2.1/bin

export HADOOP_HOME_WARN_SUPPRESS="TRUE"

source /opt/hadoop/hadoop-1.2.1/conf/hadoop-env.sh #load the new settings into the current shell

hadoop version #test
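If `hadoop version` fails at this point, the JAVA_HOME path is a likely suspect, since the JDK path above is specific to one machine. A minimal sketch of a sanity check; `check_java_home` is a hypothetical helper name:

```shell
#!/bin/sh
# Report whether a directory looks like a usable JDK/JRE home.
# check_java_home is a hypothetical helper name used for illustration.
check_java_home() {
    java_home="$1"
    if [ -x "$java_home/bin/java" ]; then
        echo "ok: $java_home/bin/java is executable"
    else
        echo "error: $java_home/bin/java not found or not executable"
        return 1
    fi
}
```

Typical use after sourcing hadoop-env.sh would be `check_java_home "$JAVA_HOME"`.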

5.##################### Pseudo-distributed mode configuration #####################

cd /opt/hadoop/hadoop-1.2.1

mkdir tmp

mkdir -p hdfs/name

mkdir -p hdfs/data

sudo chmod g-w /opt/hadoop/hadoop-1.2.1/hdfs/data #or: sudo chmod 755 /opt/hadoop/hadoop-1.2.1/hdfs/data
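The reason for removing group write access, as far as I know, is that the Hadoop 1.x DataNode compares the data directory's mode with `dfs.datanode.data.dir.perm` (755 by default) and rejects the directory when they differ. A small sketch for checking the mode before starting the daemons; `check_dir_mode` is a hypothetical helper, and 755 as the expected value is an assumption based on that default:

```shell
#!/bin/sh
# Print the octal mode of a directory and say whether it matches the expected one.
# check_dir_mode is a hypothetical helper; 755 is assumed to be the expected mode.
check_dir_mode() {
    dir="$1"
    expected="${2:-755}"
    actual=$(stat -c %a "$dir") || return 1
    if [ "$actual" = "$expected" ]; then
        echo "ok: $dir is $actual"
    else
        echo "mismatch: $dir is $actual, expected $expected"
        return 1
    fi
}
```

For the layout used here the call would be `check_dir_mode /opt/hadoop/hadoop-1.2.1/hdfs/data`.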

gedit /opt/hadoop/hadoop-1.2.1/conf/core-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/hadoop-1.2.1/tmp</value>

</property>

</configuration>

gedit /opt/hadoop/hadoop-1.2.1/conf/hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/opt/hadoop/hadoop-1.2.1/hdfs/name</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/opt/hadoop/hadoop-1.2.1/hdfs/data</value>

</property>

</configuration>

gedit /opt/hadoop/hadoop-1.2.1/conf/mapred-site.xml:

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>
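After editing the three files it can help to list what was actually set, since a typo in a property name is silently ignored. A rough sketch, assuming each <name> and <value> element sits on its own line as in the files above; it is a line-based filter and not a real XML parser, and `list_properties` is a made-up name:

```shell
#!/bin/sh
# List name=value pairs from a Hadoop *-site.xml file.
# Assumes every <name> and <value> element is on a line of its own and that
# each name is followed by its value; this is not a general XML parser.
list_properties() {
    sed -n \
        -e 's:.*<name>\(.*\)</name>.*:\1:p' \
        -e 's:.*<value>\(.*\)</value>.*:\1:p' "$1" |
    paste -d= - -
}
```

For example, `list_properties /opt/hadoop/hadoop-1.2.1/conf/core-site.xml` should print one line each for fs.default.name and hadoop.tmp.dir.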

6. Format HDFS

source /opt/hadoop/hadoop-1.2.1/conf/hadoop-env.sh

hadoop namenode -format
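Formatting again later erases the existing HDFS metadata, so it is worth checking whether the name directory has already been formatted. A minimal sketch, assuming a formatted Hadoop 1.x name directory contains a current/VERSION file; `is_namenode_formatted` is a hypothetical helper name:

```shell
#!/bin/sh
# Return success if the given NameNode directory appears to be formatted.
# Assumption: a formatted Hadoop 1.x name directory contains current/VERSION.
is_namenode_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Path taken from dfs.name.dir in hdfs-site.xml above.
NAME_DIR="/opt/hadoop/hadoop-1.2.1/hdfs/name"
if is_namenode_formatted "$NAME_DIR"; then
    echo "formatted: $NAME_DIR"
else
    echo "not formatted: $NAME_DIR"
fi
```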

7. Start Hadoop

cd /opt/hadoop/hadoop-1.2.1/bin

start-all.sh #start all daemons (NameNode, DataNode, etc.); to stop them: stop-all.sh

8. Verify the installation

jps
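The notes do not list what `jps` should show. On a Hadoop 1.x pseudo-distributed node started with start-all.sh I would expect five daemons: NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker. A sketch that reads `jps` output and prints whichever of those is missing; `missing_daemons` is a made-up name:

```shell
#!/bin/sh
# Read `jps` output on stdin and print the names of expected daemons that are absent.
# The daemon list assumes a Hadoop 1.x pseudo-distributed node started with start-all.sh.
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # jps prints "<pid> <name>"; compare the second field exactly so that
        # SecondaryNameNode is not mistaken for NameNode.
        printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$daemon"
    done
}
```

Usage would be `jps | missing_daemons`; empty output means all five are running. A missing daemon usually has an explanation in the logs directory under the Hadoop install.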

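9. Check the running status

Use the web interfaces below, which Hadoop provides for monitoring cluster health, to check that the services are running normally:

http://localhost:50030/ #Hadoop administration interface (JobTracker)

http://localhost:50060/ #Hadoop TaskTracker status

http://localhost:50070/ #Hadoop DFS status (NameNode)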
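On a machine without a browser the same three pages can be probed from the command line. A minimal sketch that assumes curl is installed; `check_ui` is a hypothetical helper name:

```shell
#!/bin/sh
# Print "up" or "down" for a URL, depending on whether an HTTP request succeeds.
# Requires curl; check_ui is a hypothetical helper name.
check_ui() {
    if curl -fsS -o /dev/null --max-time 5 "$1" 2>/dev/null; then
        echo "up   $1"
    else
        echo "down $1"
    fi
}

# Ports from the list above: JobTracker, TaskTracker, NameNode.
for port in 50030 50060 50070; do
    check_ui "http://localhost:${port}/"
done
```

This only shows that something answers on each port; the pages themselves still have to be read to judge cluster health.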

10. Run the wordcount example bundled with Hadoop in pseudo-distributed mode:

/opt/hadoop/hadoop-1.2.1$ ./bin/start-all.sh

/home/works$ echo "welcome to hadoop world" >test1.txt

echo "big data is popular" >test2.txt

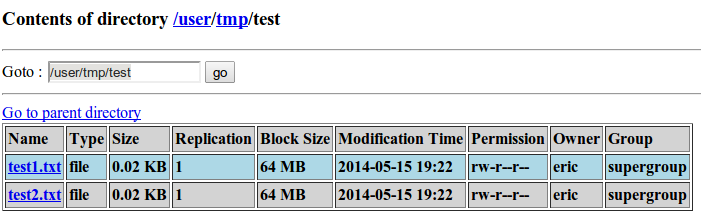

/opt/hadoop/hadoop-1.2.1$ ./bin/hadoop dfs -put /home/works/ ./tmp/test

./bin/hadoop dfs -ls ./tmp/test/*

[http://localhost.localdomain:50075/browseDirectory.jsp?dir=%2Fuser%2Ftmp%2Ftest&namenodeInfoPort=50070]

/opt/hadoop/hadoop-1.2.1$ ./bin/hadoop jar hadoop-examples-1.2.1.jar wordcount /user/tmp/test /user/tmp/out

./bin/hadoop dfs -ls
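To cross-check the job's result, the same counts can be computed locally from the two test files. A sketch that imitates the shape of the job's output (word, tab, count, sorted by word), assuming words are split on whitespace only; `local_wordcount` is a made-up name and has nothing to do with Hadoop itself:

```shell
#!/bin/sh
# Count whitespace-separated words in the given files and print "word<TAB>count",
# sorted by word in byte order.
local_wordcount() {
    cat "$@" |
    tr -s '[:space:]' '\n' |
    grep -v '^$' |
    LC_ALL=C sort |
    uniq -c |
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Running `local_wordcount /home/works/test1.txt /home/works/test2.txt` should list eight words, each with a count of 1. The job's own result can be printed with `./bin/hadoop dfs -cat` on the part files under /user/tmp/out and compared against it.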

References:

Upgrading to 2.2.0: http://bigdatahandler.com/hadoop-hdfs/installing-single-node-hadoop-2-2-0-on-ubuntu/

http://www.iteblog.com/archives/856

http://blog.csdn.net/hitwengqi/article/details/8008203

http://www.cnblogs.com/welbeckxu/category/346329.html