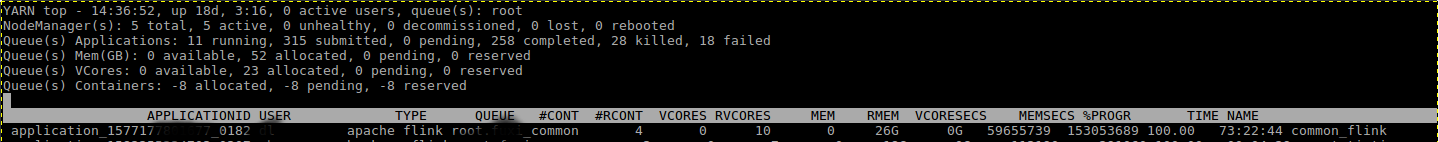

1. yarn top: view resource usage on the YARN cluster

2. Check a queue's usage status

yarn queue -status root.xxx_common

Queue Information :

Queue Name : root.xxx_common

State : RUNNING

Capacity : 100.0%

Current Capacity : 21.7%

Maximum Capacity : -100.0%

Default Node Label expression :

Accessible Node Labels :
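When the queue status is checked from a script, usually only the Current Capacity value matters. The following is a minimal sketch, assuming the report keeps the "Field : value" layout shown above; the function name queue_current_capacity is hypothetical and not part of the yarn CLI.

```shell
# Print the "Current Capacity" value (without the % sign) from
# `yarn queue -status` output read on stdin.
# Assumption: each field is printed as "Name : value" on its own line.
queue_current_capacity() {
  awk -F' : ' '/Current Capacity/ { gsub(/[ \t%]/, "", $2); print $2 }'
}

# Usage (requires a working yarn client, so it is left commented out):
# yarn queue -status root.xxx_common | queue_current_capacity
```

The function only reads stdin, so it can be tried on a saved copy of the report before it is wired to the live command.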

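3. List the applications running on YARN. If the cluster uses Kerberos authentication, run kinit first; once authenticated, you can see all running applications.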

yarn application -list

Result:

Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):12

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_15771778xxxxx_0664 xx-flink-test Apache Flink xxx-xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-76:35437

application_15771778xxxxx_0663 xx-flink-debug Apache Flink xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-79:42443

application_15771778xxxxx_0641 xxx-flink Apache Flink xxx-xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-76:38067

application_15771778xxxxx_0182 common_flink Apache Flink xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-79:38583

application_15822552xxxxx_0275 testjar XXX-FLINK xxx root.xxx_common RUNNING UNDEFINED 100% http://xxx-78:36751

application_15822552xxxxx_0259 flinksql XXX-FLINK hdfs root.xxx_common RUNNING UNDEFINED 100% http://xxx-77:37127

application_15822552xxxxx_0026 kudu-test Apache Flink hdfs root.xxx_common RUNNING UNDEFINED 100% http://xxx-78:43071

application_15822552xxxxx_0307 xxx_statistic XXX Flink xxx root.xxx_common RUNNING UNDEFINED 100% http://xxx:18000

application_15822552xxxxx_0308 xxx-statistic XXX Flink xxx root.xxx_common ACCEPTED UNDEFINED 0% N/A

application_15810489xxxxx_0003 xxx-flink Apache Flink xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-78:8081

application_15810489xxxxx_0184 common_flink Apache Flink xx root.xxx_common RUNNING UNDEFINED 100% http://xxx-76:35659

application_15810489xxxxx_0154 Flink session cluster Apache Flink hdfs root.xxx_common RUNNING UNDEFINED 100% http://xxx-80:38797

Filter by state:

yarn application -list -appStates RUNNING

Total number of applications (application-types: [] and states: [RUNNING]):12

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_157717780xxxx_0664 xx-flink-test Apache Flink xxx-xx root.xxx_common RUNNING UNDEFINED 100% http://xxxxx-xx:35437
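The listing is easier to reuse in scripts once it is reduced to application IDs. The sketch below assumes every application row starts with an ID of the form application_..., as in the output above; the helper name app_ids_matching is hypothetical.

```shell
# Print the IDs of applications whose listing row contains the given text.
# Reads `yarn application -list` output on stdin.
# Header and summary lines are skipped because they do not start with
# "application_" (the match is case-sensitive).
app_ids_matching() {
  awk -v pat="$1" '$1 ~ /^application_/ && index($0, pat) > 0 { print $1 }'
}

# Usage (requires a working yarn client, so it is left commented out):
# yarn application -list | app_ids_matching ACCEPTED
```

The match is a plain substring test against the whole row, so a text such as a queue name or a user name works as well as a state.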

4. View an application's status

yarn application -status application_1582255xxxx_0314

Application Report :

Application-Id : application_1582255xxxx_0314

Application-Name : select count(*) from tb1 (Stage-1)

Application-Type : MAPREDUCE

User : hive

Queue : root.xxxx_common

Start-Time : 1583822835423

Finish-Time : 1583822860082

Progress : 100%

State : FINISHED

Final-State : SUCCEEDED

Tracking-URL : http://xxx-xxxx-xx:19888/jobhistory/job/job_15822552xxxx_0314

RPC Port : 32829

AM Host : xxxx-xxxx-xx

Aggregate Resource Allocation : 162810 MB-seconds, 78 vcore-seconds

Log Aggregation Status : SUCCEEDED

Diagnostics :
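Start-Time and Finish-Time in the report are epoch timestamps in milliseconds, so the run time is their difference. A small sketch of that arithmetic, assuming the "Field : value" layout of the report above; the function name app_duration_seconds is hypothetical.

```shell
# Print how long an application ran, in seconds, from
# `yarn application -status` output read on stdin.
# Prints nothing when either timestamp is missing or 0
# (for example, an application that has not finished yet).
app_duration_seconds() {
  awk -F' : ' '
    /Start-Time/  { gsub(/[ \t]/, "", $2); start  = $2 + 0 }
    /Finish-Time/ { gsub(/[ \t]/, "", $2); finish = $2 + 0 }
    END { if (start > 0 && finish > 0) printf "%.3f\n", (finish - start) / 1000 }
  '
}

# Usage (requires a working yarn client, so it is left commented out):
# yarn application -status application_1582255xxxx_0314 | app_duration_seconds
```

For the report above this gives 1583822860082 - 1583822835423 = 24659 ms, i.e. about 24.7 seconds.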

5. View the logs of an application on YARN

xxx@xxx-xx:~$ yarn logs -applicationId application_1582255xxxxx_0259

/tmp/logs/xxx/logs/application_1582255xxxxx_0259 does not exist.

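You can only view the logs of applications owned by the user whose keytab you authenticated with. For example, if you authenticated with the keytab of xxx, you can only read logs under /tmp/logs/xxx.

Hive's MapReduce jobs all run as the hive user, so authenticate with hive.keytab first: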

kinit -kt /var/lib/hive/hive.keytab hive

Then view the logs:

yarn logs -applicationId application_1582255xxxxx_0313

The output looks roughly like this:

Container: container_e49_15822552xxxx_0313_01_000003 on xxxxxxx-xx_8041

=============================================================================

LogType:stderr

Log Upload Time:Tue Mar 10 14:04:57 +0800 2020

LogLength:0

Log Contents:

LogType:stdout

Log Upload Time:Tue Mar 10 14:04:57 +0800 2020

LogLength:0

Log Contents:

LogType:syslog

Log Upload Time:Tue Mar 10 14:04:57 +0800 2020

LogLength:71619

Log Contents:

2020-03-10 14:04:42,509 INFO [main] org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2020-03-10 14:04:42,569 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2020-03-10 14:04:42,569 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: ReduceTask metrics system started

2020-03-10 14:04:42,609 INFO [main] org.apache.hadoop.mapred.YarnChild: Executing with tokens:

2020-03-10 14:04:42,609 INFO [main] org.apache.hadoop.mapred.YarnChild: Kind: mapreduce.job, Service: job_1582255xxxxx_0313, Ident: (org.apache.hadoop.mapreduce.security.token.JobTokenIdentifier@505fc5a4)

2020-03-10 14:04:42,770 INFO [main] org.apache.hadoop.mapred.YarnChild: Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:xxx-nameservice, Ident: (token for hive: HDFS_DELEGATION_TOKEN owner=hive/xxxxx-xx@XXX-XXXXX-XX, renewer=yarn, realUser=, issueDate=1583820254237, maxDate=1584425054237, sequenceNumber=32956, masterKeyId=647)

2020-03-10 14:04:42,800 INFO [main] org.apache.hadoop.mapred.YarnChild: Sleeping for 0ms before retrying again. Got null now.

2020-03-10 14:04:42,955 INFO [main] org.apache.hadoop.mapred.YarnChild: mapreduce.cluster.local.dir for child: /data02/yarn/nm/usercache/hive/appcache/application_15822xxxx_0313,/data03/yarn/nm/usercache/hive/appcache/application_1582255xxxx_0313,/data04/yarn/nm/usercache/hive/appcache/application_1582xxxx_0313,/data05/yarn/nm/usercache/hive/appcache/application_15822552xxxx_0313,/data06/yarn/nm/usercache/hive/appcache/application_158225xxxxx_0313,/data07/yarn/nm/usercache/hive/appcache/application_158225xxxxxx_0313,/data08/yarn/nm/usercache/hive/appcache/application_158225xxxx_0313,/data09/yarn/nm/usercache/hive/appcache/application_1582255xxxx_0313,/data10/yarn/nm/usercache/hive/appcache/application_158225xxxx_0313,/data11/yarn/nm/usercache/hive/appcache/application_158225xxxx_0313,/data12/yarn/nm/usercache/hive/appcache/application_158225xxxx_0313,/data01/yarn/nm/usercache/hive/appcache/application_158225xxx_0313

2020-03-10 14:04:43,153 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

2020-03-10 14:04:43,633 INFO [main] org.apache.hadoop.mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-03-10 14:04:43,716 INFO [main] org.apache.hadoop.mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@62f4ff3b

2020-03-10 14:04:43,847 INFO [fetcher#10] org.apache.hadoop.io.compress.CodecPool: Got brand-new decompressor [.snappy]

2020-03-10 14:04:43,872 INFO [main] org.apache.hadoop.io.compress.CodecPool: Got brand-new compressor [.snappy]
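Aggregated logs are long (the syslog section above alone is 71619 bytes), so it often helps to keep only the lines at one log level. A minimal sketch, assuming the "date time LEVEL [thread] ..." line layout shown in the syslog section; the function name log_lines_at_level is hypothetical.

```shell
# Keep only the log lines whose third whitespace-separated field equals
# the requested level (INFO, WARN, ERROR, ...). Reads the log on stdin.
# Lines that do not follow the "date time LEVEL ..." layout are dropped.
log_lines_at_level() {
  awk -v lvl="$1" '$3 == lvl'
}

# Usage (requires a working yarn client, so it is left commented out):
# yarn logs -applicationId application_1582255xxxxx_0313 | log_lines_at_level ERROR
```

Multi-line entries such as Java stack traces do not start with a timestamp, so this filter drops their continuation lines; treat it as a quick first look rather than a complete view.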

6. Kill a YARN application

yarn application -kill application_1583941025138_0001

20/03/12 00:04:05 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.2.105:8032

Killing application application_1583941025138_0001

20/03/12 00:04:05 INFO impl.YarnClientImpl: Killed application application_1583941025138_0001
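When several applications have to be killed, it is safer to print the kill commands first and review them before running anything. The sketch below assumes one application ID per input line; the function name kill_commands is hypothetical.

```shell
# Turn a list of application IDs (one per line on stdin) into
# `yarn application -kill` commands. Nothing is executed here:
# the commands are only printed so they can be reviewed first.
# Lines that do not look like an application ID are ignored.
kill_commands() {
  while IFS= read -r app_id; do
    case "$app_id" in
      application_*) printf 'yarn application -kill %s\n' "$app_id" ;;
    esac
  done
}

# Usage (requires a working yarn client, so it is left commented out):
# yarn application -list -appStates ACCEPTED | awk '{ print $1 }' | kill_commands
# After reviewing the printed commands, append "| sh" to actually run them.
```

Keeping generation and execution separate means a wrong filter shows up as a wrong list on screen instead of a killed production job.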

Kill a MapReduce job (the job subcommand belongs to the mapred CLI, not to yarn):

mapred job -kill job_xxxxx

Reference: https://www.jianshu.com/p/f510a1f8e5f0